Failure in accessing HDFS and Hive via Knox

Created on 01-30-2017 08:38 AM - edited 08-18-2019 05:50 AM

I've been following the Hortonworks tutorial on all things related to Knox.

- tutorial-420: http://hortonworks.com/hadoop-tutorial/securing-hadoop-infrastructure-apache-knox/

- tutorial-560: http://hortonworks.com/hadoop-tutorial/secure-jdbc-odbc-clients-access-hiveserver2-using-apache-knox...

Our goal is to use Knox as a gateway, or single point of entry, for microservices that connect to the cluster using REST API calls.

However, my Hadoop environment is not the HDP 2.5 Sandbox but an HDP 2.5 stack built through Ambari on a single node Azure VM, so the configurations may differ and that should partially explain why the tutorials may not work. (This is a POC for a multinode cluster build)

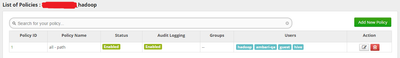

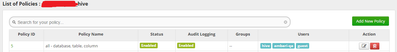

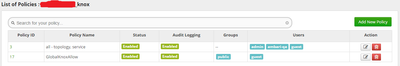

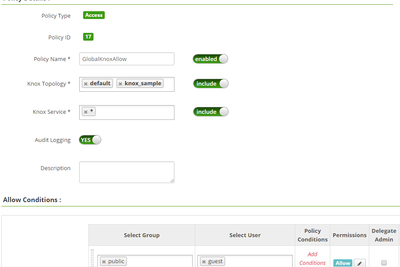

I'm testing the WebHDFS and Hive parts. For Ranger, I created a temporary guest account that has global access to HDFS and Hive, and copied the GlobalKnoxAllow configured in the Sandbox VM (I'll take care of the more fine-grained Ranger ACL later). We didn't set up this security in LDAP mode though, just the plain Unix ACL.

I also created a sample database named microservice, which I can connect to and query via beeline.

From tutorial-420,

Step 1: I started the Demo LDAP

Step 2:

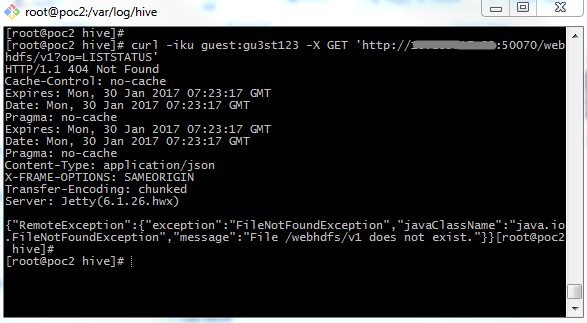

touch /usr/hdp/current/knox-server/conf/topologies/knox_sample.xml

curl -iku guest:<guestpw> -X GET 'http://<my-vm-ip>:50070/webhdfs/v1/?op=LISTSTATUS'

This is what I get:

HTTP/1.1 404 Not Found

Cache-Control: no-cache

Expires: Mon, 30 Jan 2017 07:23:17 GMT

Date: Mon, 30 Jan 2017 07:23:17 GMT

Pragma: no-cache

Expires: Mon, 30 Jan 2017 07:23:17 GMT

Date: Mon, 30 Jan 2017 07:23:17 GMT

Pragma: no-cache

Content-Type: application/json

X-FRAME-OPTIONS: SAMEORIGIN

Transfer-Encoding: chunked

Server: Jetty(6.1.26.hwx)

{"RemoteException":{"exception":"FileNotFoundException","javaClassName":"java.io.FileNotFoundException","message":"File /webhdfs/v1 does not exist."}}

Step 3:

curl -iku guest:<guestpw> -X GET 'https://<my-vm-ip>:8443/gateway/default/webhdfs/v1/?op=LISTSTATUS'

This is what I get:

HTTP/1.1 401 Unauthorized

Date: Mon, 30 Jan 2017 07:25:51 GMT

Set-Cookie: rememberMe=deleteMe; Path=/gateway/default; Max-Age=0; Expires=Sun, 29-Jan-2017 07:25:51 GMT

WWW-Authenticate: BASIC realm="application"

Content-Length: 0

Server: Jetty(9.2.15.v20160210)

I jumped to tutorial-560:

Step 1: Knox is started

Step 2: In Ambari, hive.server2.transport.mode is changed to http from binary and Hive Server 2 is restarted.

Step 3: SSH on my Azure VM

Step 4: Connect to Hive Server 2 using beeline via Knox

[root@poc2 hive]# beeline

beeline> !connect jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=true;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive

This is the result:

Connecting to jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=true;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive

Enter username for jdbc:hive2://... : guest

Enter password for jdbc:hive2://... : ********

17/01/30 15:28:21 [main]: WARN jdbc.Utils: ***** JDBC param deprecation *****

17/01/30 15:28:21 [main]: WARN jdbc.Utils: The use of hive.server2.transport.mode is deprecated.

17/01/30 15:28:21 [main]: WARN jdbc.Utils: Please use transportMode like so: jdbc:hive2://<host>:<port>/dbName;transportMode=<transport_mode_value>

17/01/30 15:28:21 [main]: WARN jdbc.Utils: ***** JDBC param deprecation *****

17/01/30 15:28:21 [main]: WARN jdbc.Utils: The use of hive.server2.thrift.http.path is deprecated.

17/01/30 15:28:21 [main]: WARN jdbc.Utils: Please use httpPath like so: jdbc:hive2://<host>:<port>/dbName;httpPath=<http_path_value>

Error: Could not create an https connection to jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=true;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive. Keystore was tampered with, or password was incorrect (state=08S01,code=0)

0: jdbc:hive2://<my-vm-ip>:8443/microservi (closed)>

I get the same result even if I set hive.server2.thrift.http.path=cliserver (based on hive-site.xml).

In hive-site.xml, ssl=false, so I tried substituting that in the JDBC connection URL:

[root@poc2 hive]# beeline

beeline> !connect jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=false;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive

This is the result:

Connecting to jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=false;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive

Enter username for jdbc:hive2://... : guest

Enter password for jdbc:hive2://... : ********

17/01/30 15:33:29 [main]: WARN jdbc.Utils: ***** JDBC param deprecation *****

17/01/30 15:33:29 [main]: WARN jdbc.Utils: The use of hive.server2.transport.mode is deprecated.

17/01/30 15:33:29 [main]: WARN jdbc.Utils: Please use transportMode like so: jdbc:hive2://<host>:<port>/dbName;transportMode=<transport_mode_value>

17/01/30 15:33:29 [main]: WARN jdbc.Utils: ***** JDBC param deprecation *****

17/01/30 15:33:29 [main]: WARN jdbc.Utils: The use of hive.server2.thrift.http.path is deprecated.

17/01/30 15:33:29 [main]: WARN jdbc.Utils: Please use httpPath like so: jdbc:hive2://<host>:<port>/dbName;httpPath=<http_path_value>

17/01/30 15:34:32 [main]: ERROR jdbc.HiveConnection: Error opening session

org.apache.thrift.transport.TTransportException: org.apache.http.conn.ConnectTimeoutException: Connect to <my-vm-ip>:8443 [/<my-vm-ip>] failed: Connection timed out

at org.apache.thrift.transport.THttpClient.flushUsingHttpClient(THttpClient.java:297)

at org.apache.thrift.transport.THttpClient.flush(THttpClient.java:313)

at org.apache.thrift.TServiceClient.sendBase(TServiceClient.java:73)

at org.apache.thrift.TServiceClient.sendBase(TServiceClient.java:62)

at org.apache.hive.service.cli.thrift.TCLIService$Client.send_OpenSession(TCLIService.java:154)

at org.apache.hive.service.cli.thrift.TCLIService$Client.OpenSession(TCLIService.java:146)

at org.apache.hive.jdbc.HiveConnection.openSession(HiveConnection.java:552)

at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:170)

at org.apache.hive.jdbc.HiveDriver.connect(HiveDriver.java:105)

at java.sql.DriverManager.getConnection(DriverManager.java:571)

at java.sql.DriverManager.getConnection(DriverManager.java:187)

at org.apache.hive.beeline.DatabaseConnection.connect(DatabaseConnection.java:146)

at org.apache.hive.beeline.DatabaseConnection.getConnection(DatabaseConnection.java:211)

at org.apache.hive.beeline.Commands.connect(Commands.java:1190)

at org.apache.hive.beeline.Commands.connect(Commands.java:1086)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hive.beeline.ReflectiveCommandHandler.execute(ReflectiveCommandHandler.java:52)

at org.apache.hive.beeline.BeeLine.dispatch(BeeLine.java:989)

at org.apache.hive.beeline.BeeLine.execute(BeeLine.java:832)

at org.apache.hive.beeline.BeeLine.begin(BeeLine.java:790)

at org.apache.hive.beeline.BeeLine.mainWithInputRedirection(BeeLine.java:490)

at org.apache.hive.beeline.BeeLine.main(BeeLine.java:473)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:233)

at org.apache.hadoop.util.RunJar.main(RunJar.java:148)

Caused by: org.apache.http.conn.ConnectTimeoutException: Connect to <my-vm-ip>:8443 [/<my-vm-ip>] failed: Connection timed out

at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:156)

at org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:353)

at org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:380)

at org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:236)

at org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:184)

at org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:88)

at org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110)

at org.apache.http.impl.execchain.ServiceUnavailableRetryExec.execute(ServiceUnavailableRetryExec.java:84)

at org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:184)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:117)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:55)

at org.apache.thrift.transport.THttpClient.flushUsingHttpClient(THttpClient.java:251)

... 30 more

Caused by: java.net.ConnectException: Connection timed out

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:339)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:200)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:182)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:579)

at org.apache.http.conn.socket.PlainConnectionSocketFactory.connectSocket(PlainConnectionSocketFactory.java:74)

at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:141)

... 41 more

Error: Could not establish connection to jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=false;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive: org.apache.http.conn.ConnectTimeoutException: Connect to <my-vm-ip>:8443 [/<my-vm-ip>] failed: Connection timed out (state=08S01,code=0)

0: jdbc:hive2://<my-vm-ip>:8443/microservi (closed)>

Again, I tried hive.server2.thrift.http.path=cliserver and got the same result.

Has anyone here been able to configure Knox correctly and get it working?

Created 01-30-2017 09:11 AM

Can you post the contents of the knox_sample and default topologies? A 404 error indicates a topology deployment issue, and a 401 means a problem authenticating. You can check whether Knox and the Demo LDAP are working properly by logging in to the Knox host and running the command below:

curl -iku admin:admin-password -X GET 'https://localhost:8443/gateway/admin/api/v1/version'

This should return the Knox server version, similar to the following:

HTTP/1.1 200 OK

Date: Mon, 30 Jan 2017 09:06:35 GMT

Set-Cookie: JSESSIONID=12phoc1xgseuiwm1m48kvs6cw;Path=/gateway/admin;Secure;HttpOnly

Expires: Thu, 01 Jan 1970 00:00:00 GMT

Set-Cookie: rememberMe=deleteMe; Path=/gateway/admin; Max-Age=0; Expires=Sun, 29-Jan-2017 09:06:35 GMT

Content-Type: application/xml

Content-Length: 170

Server: Jetty(9.2.15.v20160210)

<?xml version="1.0" encoding="UTF-8"?>

<ServerVersion>

<version>0.11.0.2.6.0.0-405</version>

<hash>b4ad4748eeff4c9b9493a0cc082a0825200c4668</hash>

</ServerVersion>
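The rule of thumb in this reply (404 points at topology deployment, 401 at authentication) can be turned into a small check. This is only a sketch: the function names knox_status_hint and knox_probe are hypothetical helpers, not part of Knox, and the hints simply restate what the reply says.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map the HTTP status of a Knox probe to the hint
# given in the reply above. The wording of the hints is an assumption.
knox_status_hint() {
  case "$1" in
    200) echo "ok: gateway reachable and credentials accepted" ;;
    401) echo "authentication problem: check Demo LDAP and the credentials" ;;
    404) echo "topology deployment issue: check the topology file" ;;
    *)   echo "unexpected status $1" ;;
  esac
}

# Hypothetical helper: request a gateway URL and print only the status code.
# Needs curl and a running gateway; -k skips certificate verification,
# as in the curl commands used in this thread.
knox_probe() {
  creds="$1"
  url="$2"
  curl -sk -o /dev/null -w '%{http_code}' -u "$creds" "$url"
}

# Example (run on the Knox host):
#   knox_status_hint "$(knox_probe admin:admin-password \
#     'https://localhost:8443/gateway/admin/api/v1/version')"
```

Nothing is sent over the network until knox_probe is called, so the hint function can be tried on its own with a literal status code.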

Created 01-30-2017 10:04 AM

Based on the tutorial, the knox_sample topology seems to be empty. The guide just instructs the user to run the touch command.

The result of the command you gave is:

HTTP/1.1 401 Unauthorized

Date: Mon, 30 Jan 2017 09:56:48 GMT

Set-Cookie: rememberMe=deleteMe; Path=/gateway/admin; Max-Age=0; Expires=Sun, 29-Jan-2017 09:56:48 GMT

WWW-Authenticate: BASIC realm="application"

Content-Length: 0

Server: Jetty(9.2.15.v20160210)

Created 01-30-2017 10:29 AM

This means the Demo LDAP is either not properly configured or not running. Can you post default.xml from the /etc/knox/conf/topologies directory? Also, knox_sample.xml shouldn't be empty; it should contain all the service information. Please also run the command below and provide the output:

ps aux | grep ldap

Created 01-30-2017 10:55 AM

Then there is something wrong with the Hortonworks Knox tutorial. The knox_sample.xml file does not exist even in the Sandbox VM, and using touch simply creates an empty file.

What would be the admin username and password here? Is it Ranger's or Knox's admin?

Here's the output of ps aux | grep ldap:

knox 562103 0.0 0.2 5400580 33376 ? Sl Jan25 2:59 /usr/lib/jvm/java-1.7.0-oracle/bin/java -jar /usr/hdp/current/knox-server/bin/ldap.jar /usr/hdp/current/knox-server/conf

root 675001 0.0 0.0 103320 868 pts/0 S+ 18:32 0:00 grep ldap

And here's the default.xml:

<topology>

<gateway>

<provider>

<role>authentication</role>

<name>ShiroProvider</name>

<enabled>true</enabled>

<param>

<name>sessionTimeout</name>

<value>30</value>

</param>

<param>

<name>main.ldapRealm</name>

<value>org.apache.hadoop.gateway.shirorealm.KnoxLdapRealm</value>

</param>

<param>

<name>main.ldapRealm.userDnTemplate</name>

<value>uid={0},ou=people,dc=hadoop,dc=apache,dc=org</value>

</param>

<param>

<name>main.ldapRealm.contextFactory.url</name>

<value>ldap://<my-vm-hostname>.com:33389</value>

</param>

<param>

<name>main.ldapRealm.contextFactory.authenticationMechanism</name>

<value>simple</value>

</param>

<param>

<name>urls./**</name>

<value>authcBasic</value>

</param>

</provider>

<provider>

<role>identity-assertion</role>

<name>Default</name>

<enabled>true</enabled>

</provider>

<provider>

<role>authorization</role>

<name>XASecurePDPKnox</name>

<enabled>true</enabled>

</provider>

</gateway>

<service>

<role>NAMENODE</role>

<url>hdfs://<my-vm-hostname>:8020</url>

</service>

<service>

<role>JOBTRACKER</role>

<url>rpc://<my-vm-hostname>:8050</url>

</service>

<service>

<role>WEBHDFS</role>

<url>http://<my-vm-hostname>:50070/webhdfs</url>

</service>

<service>

<role>WEBHCAT</role>

<url>http://<my-vm-hostname>:50111/templeton</url>

</service>

<service>

<role>OOZIE</role>

<url>http://<my-vm-hostname>:11000/oozie</url>

</service>

<service>

<role>WEBHBASE</role>

<url>http://<my-vm-hostname>:8080</url>

</service>

<service>

<role>HIVE</role>

<url>http://<my-vm-hostname>:10001/cliservice</url>

</service>

<service>

<role>RESOURCEMANAGER</role>

<url>http://<my-vm-hostname>:8088/ws</url>

</service>

</topology>

Created 01-30-2017 11:01 AM

I have already given you the default credentials for the Knox admin user in the previous curl command. Can you please provide "default.xml", i.e. the default topology, which contains the configuration details of the services and of Knox's Demo LDAP? Ideally it should be pointing to "ldap://<ldap_server_host>:33389".

Created 01-30-2017 11:07 AM

I already pasted the default.xml above. I tried using admin:admin-password (earlier I didn't, as I was confused):

curl -iku admin:admin-password -X GET 'https://localhost:8443/gateway/admin/api/v1/version'

and I now get

HTTP/1.1 200 OK

Date: Mon, 30 Jan 2017 11:04:24 GMT

Set-Cookie: JSESSIONID=1qfuugnqxqf1hf4exm5kpvkmd;Path=/gateway/admin;Secure;HttpOnly

Expires: Thu, 01 Jan 1970 00:00:00 GMT

Set-Cookie: rememberMe=deleteMe; Path=/gateway/admin; Max-Age=0; Expires=Sun, 29-Jan-2017 11:04:24 GMT

Content-Type: application/xml

Content-Length: 170

Server: Jetty(9.2.15.v20160210)

<?xml version="1.0" encoding="UTF-8"?>

<ServerVersion>

<version>0.9.0.2.5.0.0-1245</version>

<hash>09990487b383298f8e1c9e72dceb0a8e3ff33d17</hash>

</ServerVersion>

Created 01-30-2017 11:18 AM

@J. D. Bacolod Congratulations!! The output of the last command means your Knox instance is working fine and is able to authenticate against the Knox Demo LDAP. You should now be able to run the command below as well and get output.

curl -iku guest:guest-password -X GET 'https://localhost:8443/gateway/default/webhdfs/v1/?op=LISTSTATUS'

If you get a 200 OK status code for this one as well, make a copy of "default.xml", name it "knox_sample.xml", and carry on with what you were doing. Just make sure the topology XML file points to the correct service endpoints.
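The copy step suggested here could look roughly like the following. It is a sketch under assumptions: copy_topology is a hypothetical helper, and the topologies directory in the example is the one used with touch earlier in the thread. Knox takes the topology name (the path segment after /gateway/) from the file name, so the copy should be reachable under /gateway/knox_sample/.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy an existing topology file under a new name in the
# same directory. Refuses to copy a missing or empty source, since an empty
# topology (e.g. one created with touch) carries no service definitions.
copy_topology() {
  dir="$1"
  src="$2"
  dst="$3"
  if [ ! -s "$dir/$src.xml" ]; then
    echo "source topology $dir/$src.xml is missing or empty" >&2
    return 1
  fi
  # -p preserves mode and timestamps of the source file
  cp -p "$dir/$src.xml" "$dir/$dst.xml"
}

# Example (run on the Knox host; directory taken from earlier in the thread):
#   copy_topology /usr/hdp/current/knox-server/conf/topologies default knox_sample
#   curl -iku guest:guest-password -X GET \
#     'https://localhost:8443/gateway/knox_sample/webhdfs/v1/?op=LISTSTATUS'
```

The emptiness check is there because the tutorial's touch step is what produced the empty knox_sample.xml in the first place.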

Created 01-30-2017 11:36 AM

Alright, the HDFS part seems to be working, but I have to use guest:guest-password. I thought guest was something I could add to Unix and Ranger as a user (I named mine guest as well, with a different password, which is why it wouldn't work). If I understand correctly, this guest should be a Knox-specific username, right?

But how about Hive? It still won't work, even though I used guest:guest-password. I am getting the same result as the original.

Thanks for your help.

Created 01-30-2017 12:04 PM

@J. D. Bacolod You can use Unix users by configuring the topology to use PAM-based authentication. Refer to http://knox.apache.org/books/knox-0-11-0/user-guide.html#PAM+based+Authentication

As for Hive, the JDBC connection string is wrong. You don't have to specify the database name (i.e. microservice) in the Knox URL. Replace <PATH_TO_KNOX_KEYSTORE> with the location of gateway.jks on your Knox host and try something like the command below:

beeline --silent=true -u "jdbc:hive2://localhost:8443/;ssl=true;sslTrustStore=<PATH_TO_KNOX_KEYSTORE>/gateway.jks;trustStorePassword=knoxsecret;transportMode=http;httpPath=gateway/default/hive" -d org.apache.hive.jdbc.HiveDriver -n guest -p guest-password -e "show databases;"
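The connection string above can also be assembled from its parts, which makes it easier to swap the host, topology, or truststore. A minimal sketch, assuming a hypothetical helper named knox_hive_jdbc_url (not part of Knox or Hive). It uses the transportMode and httpPath parameters that the deprecation warnings earlier in the thread recommend, and it leaves the database name out of the URL as advised above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a HiveServer2-over-Knox JDBC URL.
# Arguments: host, port, topology name, truststore path, truststore password.
knox_hive_jdbc_url() {
  host="$1"
  port="$2"
  topology="$3"
  truststore="$4"
  password="$5"
  printf 'jdbc:hive2://%s:%s/;ssl=true;sslTrustStore=%s;trustStorePassword=%s;transportMode=http;httpPath=gateway/%s/hive' \
    "$host" "$port" "$truststore" "$password" "$topology"
}

# Example (values taken from the command above; the keystore path is a placeholder):
#   url="$(knox_hive_jdbc_url localhost 8443 default \
#     '<PATH_TO_KNOX_KEYSTORE>/gateway.jks' knoxsecret)"
#   beeline --silent=true -u "$url" -d org.apache.hive.jdbc.HiveDriver \
#     -n guest -p guest-password -e "show databases;"
```

Note that the truststore password here is knoxsecret, whereas the failing attempts in the question used knox and ended with "Keystore was tampered with, or password was incorrect".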