Support Questions (Cloudera Community)


Failure in accessing HDFS and Hive via Knox

Created on 01-30-2017 08:38 AM - edited 08-18-2019 05:50 AM


I've been following the Hortonworks tutorials on all things related to Knox:

- tutorial-420: http://hortonworks.com/hadoop-tutorial/securing-hadoop-infrastructure-apache-knox/

- tutorial-560: http://hortonworks.com/hadoop-tutorial/secure-jdbc-odbc-clients-access-hiveserver2-using-apache-knox...

Our goal is to use Knox as a gateway, or single point of entry, for microservices that connect to the cluster using REST API calls.

However, my Hadoop environment is not the HDP 2.5 Sandbox but an HDP 2.5 stack built through Ambari on a single-node Azure VM, so the configuration may differ, which could partly explain why the tutorials don't work for me. (This is a POC for a multi-node cluster build.)

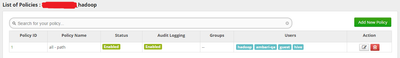

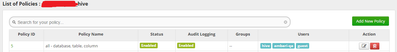

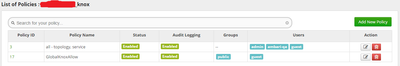

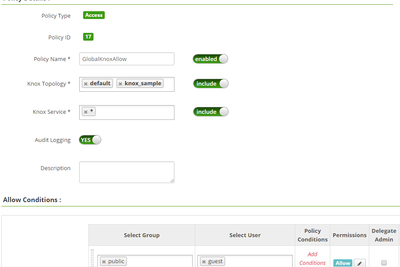

I’m testing the WebHDFS and Hive parts. In Ranger, I created a temporary guest account with global access to HDFS and Hive, and I copied the GlobalKnoxAllow policy configured in the Sandbox VM (I'll take care of finer-grained Ranger ACLs later). We didn't set this security up in LDAP mode, though; it uses plain Unix ACLs.

I also created a sample database named microservice, which I can connect to and query via beeline.

From tutorial-420:

Step 1: I ran Start Demo LDAP.

Step 2:

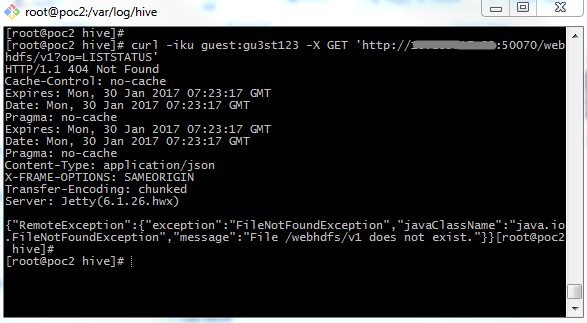

touch /usr/hdp/current/knox-server/conf/topologies/knox_sample.xml

curl -iku guest:<guestpw> -X GET 'http://<my-vm-ip>:50070/webhdfs/v1/?op=LISTSTATUS'

This is what I get:

HTTP/1.1 404 Not Found

Cache-Control: no-cache

Expires: Mon, 30 Jan 2017 07:23:17 GMT

Date: Mon, 30 Jan 2017 07:23:17 GMT

Pragma: no-cache

Expires: Mon, 30 Jan 2017 07:23:17 GMT

Date: Mon, 30 Jan 2017 07:23:17 GMT

Pragma: no-cache

Content-Type: application/json

X-FRAME-OPTIONS: SAMEORIGIN

Transfer-Encoding: chunked

Server: Jetty(6.1.26.hwx)

{"RemoteException":{"exception":"FileNotFoundException","javaClassName":"java.io.FileNotFoundException","message":"File /webhdfs/v1 does not exist."}}
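WebHDFS reports failures as a JSON `RemoteException` object, as in the 404 body above, so a REST client such as the microservices described earlier can branch on the exception class rather than on the HTTP status alone. A minimal standard-library Python sketch; `parse_webhdfs_error` is a hypothetical helper name, not part of any Hadoop client library:

```python
import json


def parse_webhdfs_error(body: str):
    """Return (exception, javaClassName, message) from a WebHDFS error body, or None."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        # Empty or non-JSON bodies (e.g. a bare 401 from a gateway) carry no RemoteException.
        return None
    remote = payload.get("RemoteException") if isinstance(payload, dict) else None
    if not isinstance(remote, dict):
        # Successful responses (e.g. FileStatuses) have no RemoteException key.
        return None
    return (remote.get("exception"), remote.get("javaClassName"), remote.get("message"))
```

Fed the body above, it returns the `FileNotFoundException` triple; for a successful `LISTSTATUS` response or an empty body it returns `None`.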

Step 3:

curl -iku guest:<guestpw> -X GET 'https://<my-vm-ip>:8443/gateway/default/webhdfs/v1/?op=LISTSTATUS'

This is what I get:

HTTP/1.1 401 Unauthorized

Date: Mon, 30 Jan 2017 07:25:51 GMT

Set-Cookie: rememberMe=deleteMe; Path=/gateway/default; Max-Age=0; Expires=Sun, 29-Jan-2017 07:25:51 GMT

WWW-Authenticate: BASIC realm="application"

Content-Length: 0

Server: Jetty(9.2.15.v20160210)
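The two requests differ only in how the URL is formed: the direct call goes to the NameNode's WebHDFS port, while the gateway call goes to `https://<host>:8443/gateway/<topology>/webhdfs/v1/...` and must carry HTTP Basic credentials. A 401 with `WWW-Authenticate: BASIC` means the gateway's authentication provider did not accept those credentials; in a default topology that provider typically checks an LDAP server (such as the demo LDAP from Step 1) rather than local Unix accounts, though that depends on your topology file. A sketch that only builds such a request; `knox_webhdfs_request` and its defaults (port 8443, topology `default`) are assumptions taken from the URL above:

```python
import base64
import urllib.parse
import urllib.request


def knox_webhdfs_request(host, user, password, path="/", op="LISTSTATUS",
                         port=8443, topology="default"):
    """Build (but do not send) a WebHDFS request routed through a Knox topology."""
    # Knox maps /gateway/<topology>/webhdfs/v1/<path> onto the NameNode's /webhdfs/v1/<path>.
    quoted = urllib.parse.quote(path.lstrip("/"))
    query = urllib.parse.urlencode({"op": op})
    url = f"https://{host}:{port}/gateway/{topology}/webhdfs/v1/{quoted}?{query}"
    # HTTP Basic auth, which is what curl -u sends and what the 401 challenge asks for.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, method="GET",
                                  headers={"Authorization": f"Basic {token}"})
```

Sending it would look like `urllib.request.urlopen(req, context=ssl.create_default_context(cafile="knox.pem"))`, assuming `knox.pem` is the gateway certificate exported with keytool and `host` is the name in that certificate.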

I then moved on to tutorial-560:

Step 1: Knox is started.

Step 2: In Ambari, I changed hive.server2.transport.mode from binary to http and restarted HiveServer2.

Step 3: SSH into my Azure VM.

Step 4: Connect to Hive Server 2 using beeline via Knox

[root@poc2 hive]# beeline !connect jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=true;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive

This is the result:

Connecting to jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=true;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive

Enter username for jdbc:hive2://... : guest

Enter password for jdbc:hive2://... : ********

17/01/30 15:28:21 [main]: WARN jdbc.Utils: ***** JDBC param deprecation *****

17/01/30 15:28:21 [main]: WARN jdbc.Utils: The use of hive.server2.transport.mode is deprecated.

17/01/30 15:28:21 [main]: WARN jdbc.Utils: Please use transportMode like so: jdbc:hive2://<host>:<port>/dbName;transportMode=<transport_mode_value>

17/01/30 15:28:21 [main]: WARN jdbc.Utils: ***** JDBC param deprecation *****

17/01/30 15:28:21 [main]: WARN jdbc.Utils: The use of hive.server2.thrift.http.path is deprecated.

17/01/30 15:28:21 [main]: WARN jdbc.Utils: Please use httpPath like so: jdbc:hive2://<host>:<port>/dbName;httpPath=<http_path_value>

Error: Could not create an https connection to jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=true;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive. Keystore was tampered with, or password was incorrect (state=08S01,code=0)

0: jdbc:hive2://<my-vm-ip>:8443/microservi (closed)>
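The deprecation warnings above ask for `transportMode` and `httpPath` as session parameters after `;` rather than Hive settings after `?`, and the URL that eventually works later in this thread has that shape. Separately, "Keystore was tampered with, or password was incorrect" is the message Java reports when a keystore cannot be opened with the given password, so `trustStorePassword` has to be the password that actually protects `gateway.jks` (the later working URL uses a value other than `knox`). A small sketch that assembles such a URL; `hive_knox_jdbc_url` and its defaults are assumptions for illustration, and values containing `;` are not escaped:

```python
def hive_knox_jdbc_url(host, truststore, truststore_password, database="",
                       port=8443, http_path="gateway/default/hive"):
    """Build a HiveServer2-over-Knox JDBC URL using the non-deprecated parameter names."""
    params = [
        "ssl=true",                                  # Knox terminates TLS on its gateway port
        f"sslTrustStore={truststore}",               # JKS file that trusts the gateway cert
        f"trustStorePassword={truststore_password}", # must open that JKS file
        "transportMode=http",                        # replaces hive.server2.transport.mode
        f"httpPath={http_path}",                     # replaces hive.server2.thrift.http.path
    ]
    return f"jdbc:hive2://{host}:{port}/{database};" + ";".join(params)
```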

I get the same result even if I replace it with hive.server2.thrift.http.path=cliserver (based on hive-site.xml).

hive-site.xml has ssl=false, so I tried using that in the JDBC connection URL instead:

[root@poc2 hive]# beeline !connect jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=false;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive

This is the result:

Connecting to jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=false;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive

Enter username for jdbc:hive2://... : guest

Enter password for jdbc:hive2://... : ********

17/01/30 15:33:29 [main]: WARN jdbc.Utils: ***** JDBC param deprecation *****

17/01/30 15:33:29 [main]: WARN jdbc.Utils: The use of hive.server2.transport.mode is deprecated.

17/01/30 15:33:29 [main]: WARN jdbc.Utils: Please use transportMode like so: jdbc:hive2://<host>:<port>/dbName;transportMode=<transport_mode_value>

17/01/30 15:33:29 [main]: WARN jdbc.Utils: ***** JDBC param deprecation *****

17/01/30 15:33:29 [main]: WARN jdbc.Utils: The use of hive.server2.thrift.http.path is deprecated.

17/01/30 15:33:29 [main]: WARN jdbc.Utils: Please use httpPath like so: jdbc:hive2://<host>:<port>/dbName;httpPath=<http_path_value>

17/01/30 15:34:32 [main]: ERROR jdbc.HiveConnection: Error opening session

org.apache.thrift.transport.TTransportException: org.apache.http.conn.ConnectTimeoutException: Connect to <my-vm-ip>:8443 [/<my-vm-ip>] failed: Connection timed out

at org.apache.thrift.transport.THttpClient.flushUsingHttpClient(THttpClient.java:297)

at org.apache.thrift.transport.THttpClient.flush(THttpClient.java:313)

at org.apache.thrift.TServiceClient.sendBase(TServiceClient.java:73)

at org.apache.thrift.TServiceClient.sendBase(TServiceClient.java:62)

at org.apache.hive.service.cli.thrift.TCLIService$Client.send_OpenSession(TCLIService.java:154)

at org.apache.hive.service.cli.thrift.TCLIService$Client.OpenSession(TCLIService.java:146)

at org.apache.hive.jdbc.HiveConnection.openSession(HiveConnection.java:552)

at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:170)

at org.apache.hive.jdbc.HiveDriver.connect(HiveDriver.java:105)

at java.sql.DriverManager.getConnection(DriverManager.java:571)

at java.sql.DriverManager.getConnection(DriverManager.java:187)

at org.apache.hive.beeline.DatabaseConnection.connect(DatabaseConnection.java:146)

at org.apache.hive.beeline.DatabaseConnection.getConnection(DatabaseConnection.java:211)

at org.apache.hive.beeline.Commands.connect(Commands.java:1190)

at org.apache.hive.beeline.Commands.connect(Commands.java:1086)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hive.beeline.ReflectiveCommandHandler.execute(ReflectiveCommandHandler.java:52)

at org.apache.hive.beeline.BeeLine.dispatch(BeeLine.java:989)

at org.apache.hive.beeline.BeeLine.execute(BeeLine.java:832)

at org.apache.hive.beeline.BeeLine.begin(BeeLine.java:790)

at org.apache.hive.beeline.BeeLine.mainWithInputRedirection(BeeLine.java:490)

at org.apache.hive.beeline.BeeLine.main(BeeLine.java:473)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:233)

at org.apache.hadoop.util.RunJar.main(RunJar.java:148)

Caused by: org.apache.http.conn.ConnectTimeoutException: Connect to <my-vm-ip>:8443 [/<my-vm-ip>] failed: Connection timed out

at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:156)

at org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:353)

at org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:380)

at org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:236)

at org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:184)

at org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:88)

at org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110)

at org.apache.http.impl.execchain.ServiceUnavailableRetryExec.execute(ServiceUnavailableRetryExec.java:84)

at org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:184)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:117)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:55)

at org.apache.thrift.transport.THttpClient.flushUsingHttpClient(THttpClient.java:251)

... 30 more

Caused by: java.net.ConnectException: Connection timed out

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:339)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:200)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:182)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:579)

at org.apache.http.conn.socket.PlainConnectionSocketFactory.connectSocket(PlainConnectionSocketFactory.java:74)

at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:141)

... 41 more

Error: Could not establish connection to jdbc:hive2://<my-vm-ip>:8443/microservice;ssl=false;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox?hive.server2.transport.mode=http;hive.server2.thrift.http.path=gateway/default/hive: org.apache.http.conn.ConnectTimeoutException: Connect to <my-vm-ip>:8443 [/<my-vm-ip>] failed: Connection timed out (state=08S01,code=0)

0: jdbc:hive2://<my-vm-ip>:8443/microservi (closed)>

Again, I tried hive.server2.thrift.http.path=cliserver instead and got the same result.
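In this trace the innermost frame is `java.net.PlainSocketImpl.socketConnect`, so the TCP connection to port 8443 never completed; TLS settings, `httpPath`, and credentials were never reached. A quick way to separate network reachability from Knox/Hive configuration is to test the raw TCP port from the client machine. A minimal sketch; `port_is_reachable` is a hypothetical helper:

```python
import socket


def port_is_reachable(host, port, timeout=5.0):
    """Return True if a TCP connection to (host, port) completes within `timeout` seconds."""
    try:
        # create_connection resolves the host and performs only the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, run `port_is_reachable("<my-vm-ip>", 8443)` from the machine where beeline runs; if it returns False, the problem lies in networking (firewall rules, the interface Knox listens on, routing) rather than in the JDBC URL.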

Has anyone here managed to configure Knox correctly and get it working?

Created 02-01-2017 07:50 AM


It works now when I use "jdbc:hive2://<my-vm-hostname>:8443/;ssl=true...".

However, when I use 'localhost', '127.0.0.1', or even <my-vm-ip>, I get this error:

17/02/01 15:38:51 [main]: ERROR jdbc.HiveConnection: Error opening session

org.apache.thrift.transport.TTransportException: javax.net.ssl.SSLPeerUnverifiedException: Host name '<my-vm-ip>' does not match the certificate subject provided by the peer (CN=<my-vm-hostname>, OU=Test, O=Hadoop, L=Test, ST=Test, C=US)

at org.apache.thrift.transport.THttpClient.flushUsingHttpClient(THttpClient.java:297)

...

Caused by: javax.net.ssl.SSLPeerUnverifiedException: Host name '<my-vm-ip>' does not match the certificate subject provided by the peer (CN=<my-vm-hostname>, OU=Test, O=Hadoop, L=Test, ST=Test, C=US)

at org.apache.http.conn.ssl.SSLConnectionSocketFactory.verifyHostname(SSLConnectionSocketFactory.java:465)

...

... 30 more

Error: Could not establish connection to jdbc:hive2://<my-vm-ip>:8443/;ssl=true;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox_123;transportMode=http;httpPath=gateway/default/hive: javax.net.ssl.SSLPeerUnverifiedException: Host name '<my-vm-ip>' does not match the certificate subject provided by the peer (CN=<my-vm-hostname>, OU=Test, O=Hadoop, L=Test, ST=Test, C=US) (state=08S01,code=0)

17/02/01 15:38:51 [main]: ERROR jdbc.HiveConnection: Error opening session

org.apache.thrift.transport.TTransportException: javax.net.ssl.SSLPeerUnverifiedException: Host name '<my-vm-ip>' does not match the certificate subject provided by the peer (CN=<my-vm-hostname>, OU=Test, O=Hadoop, L=Test, ST=Test, C=US)

at org.apache.thrift.transport.THttpClient.flushUsingHttpClient(THttpClient.java:297)

...

Caused by: javax.net.ssl.SSLPeerUnverifiedException: Host name '<my-vm-ip>' does not match the certificate subject provided by the peer (CN=<my-vm-hostname>, OU=Test, O=Hadoop, L=Test, ST=Test, C=US)

at org.apache.http.conn.ssl.SSLConnectionSocketFactory.verifyHostname(SSLConnectionSocketFactory.java:465)

...

Caused by: javax.net.ssl.SSLPeerUnverifiedException: Host name '<my-vm-ip>' does not match the certificate subject provided by the peer (CN=<my-vm-hostname>, OU=Test, O=Hadoop, L=Test, ST=Test, C=US)

at org.apache.http.conn.ssl.SSLConnectionSocketFactory.verifyHostname(SSLConnectionSocketFactory.java:465)

...

at org.apache.thrift.transport.THttpClient.flushUsingHttpClient(THttpClient.java:251)

... 24 more

Error: Could not establish connection to jdbc:hive2://<my-vm-ip>:8443/;ssl=true;sslTrustStore=/var/lib/knox/data-2.5.0.0-1245/security/keystores/gateway.jks;trustStorePassword=knox_123;transportMode=http;httpPath=gateway/default/hive: javax.net.ssl.SSLPeerUnverifiedException: Host name '<my-vm-ip>' does not match the certificate subject provided by the peer (CN=<my-vm-hostname>, OU=Test, O=Hadoop, L=Test, ST=Test, C=US) (state=08S01,code=0)
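The client checks that the host it was asked to connect to matches a name in the certificate Knox presents, and this certificate only carries `CN=<my-vm-hostname>`, so `localhost`, `127.0.0.1`, and the IP are all rejected. A deliberately simplified sketch of that comparison: it looks at the CN only, whereas real verifiers also consult subjectAltName entries and wildcards. The helper names are hypothetical:

```python
def subject_cn(subject_dn):
    """Return the CN value from a DN string like 'CN=host, OU=Test, C=US', or None."""
    # Simplification: splits on commas, so escaped commas inside values are not handled.
    for part in subject_dn.split(","):
        key, sep, value = part.strip().partition("=")
        if sep and key.strip().upper() == "CN":
            return value.strip()
    return None


def cn_matches(host, subject_dn):
    """Case-insensitive exact match of `host` against the certificate CN only."""
    # Real hostname verifiers also check subjectAltName entries and wildcards; this does not.
    cn = subject_cn(subject_dn)
    return cn is not None and cn.lower() == host.lower()
```

With the subject from the error above, `cn_matches` is False for the IP, `localhost`, and `127.0.0.1`, and True only for the hostname, which is consistent with what this post reports.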

Is there any way to connect using <my-vm-ip> so that a remote machine can access it? <my-vm-hostname> is not visible on the network; it is only known locally through the VM's /etc/hosts.

Thanks for all your help. Appreciate it.

Created 02-01-2017 11:11 AM


This is probably happening because the generated SSL certificate has your VM hostname as its CN. I suggest adding a hostname-to-IP mapping entry to your remote machine's /etc/hosts file and accessing the gateway using the hostname only. You can also export the Knox certificate using the command below:

$<JAVA_HOME>/bin/keytool -export -alias gateway-identity -rfc -file <cert.pem> -keystore /usr/hdp/current/knox-server/data/security/keystores/gateway.jks

and import it on your remote host using the command below:

$<JAVA_HOME>/bin/keytool -import -alias knoxsso -keystore <JAVA_HOME>/jre/lib/security/cacerts -storepass changeit -file <cert.pem>
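Besides the /etc/hosts mapping, a Python client (for instance one of the microservices mentioned in the question) can connect to the IP address while still verifying the certificate against the hostname, by passing `server_hostname` to `SSLContext.wrap_socket`. A sketch, assuming the exported `<cert.pem>` is available on the client; `connect_knox_by_ip` is a hypothetical helper. This covers Python clients only; beeline and other JDBC clients still need the hostname to resolve as suggested above.

```python
import socket
import ssl


def connect_knox_by_ip(ip, hostname, cafile, port=8443, timeout=10.0):
    """Open a TLS socket to `ip`, verifying the server certificate against `hostname`."""
    # Trust the exported Knox certificate (PEM) instead of only the system CA bundle.
    ctx = ssl.create_default_context(cafile=cafile)
    raw = socket.create_connection((ip, port), timeout=timeout)
    try:
        # SNI and hostname verification use `hostname`, while TCP goes to `ip`.
        return ctx.wrap_socket(raw, server_hostname=hostname)
    except Exception:
        raw.close()
        raise
```

On Python 3.13 and later the default context also enables `ssl.VERIFY_X509_STRICT`, which may reject some self-signed certificates; if so, `ctx.verify_flags &= ~ssl.VERIFY_X509_STRICT` relaxes that single check. The sketch stops at a verified TLS socket; sending HTTP over it is left to the caller.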

Created 07-25-2018 01:30 PM


Hi, I am facing the same issue. Can you please help me with this?

Error: Could not establish connection to jdbc:hive2://sandbox-hdp.hortonworks.com:8443/;ssl=true;sslTrustStore=/var/lib/knox/data-2.6.5.0-292/security/keystores/gateway.jks;trustStorePassword=knox;transportMode=http;httpPath=gateway/default/hive: HTTP Response code: 500 (state=08S01,code=0)

regards

Ashokkumar.R
