Support Questions

- Cloudera Community

Falcon Mirroring: HDFS To S3 AWS Credentials

- Labels: Apache Falcon

Created 10-08-2015 07:23 PM

Where do you define your AWS access key and secret key credentials for mirroring data from a local Falcon cluster to S3? I have the job set up, but it is failing because those values are not defined.

Created on 10-09-2015 12:53 PM - edited 08-19-2019 05:58 AM

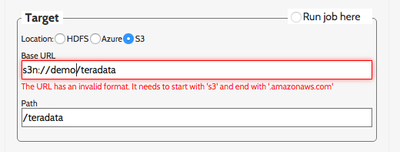

It seems the issue is specific to the Falcon UI. The UI validates the S3 URI and requires that it end with amazonaws.com; however, this is not the format expected by "Jets3tNativeFileSystemStore", which distcp ultimately invokes. The format needs to be s3n://BUCKET/PATH. Because the wrong endpoint was being hit, authentication failed even with the proper credentials in place.

A workaround is to download the XML from the Falcon UI, edit the s3n URI manually, and then re-upload the XML file through the Falcon UI.
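
To illustrate the manual edit, here is a minimal Python sketch of the URI rewrite. It assumes the UI-generated URI has a virtual-hosted-style host such as BUCKET.s3.amazonaws.com; the thread does not show the exact form, so treat that shape, the function name `to_s3n_bucket_uri`, and the sample bucket name as hypothetical.

```python
from urllib.parse import urlsplit

# Assumption: the host looks like BUCKET.s3.amazonaws.com (or a regional
# variant such as BUCKET.s3-us-west-2.amazonaws.com). Adjust if yours differs.
AWS_SUFFIX = ".amazonaws.com"


def to_s3n_bucket_uri(uri: str) -> str:
    """Rewrite s3n://BUCKET.s3[...].amazonaws.com/PATH as s3n://BUCKET/PATH."""
    parts = urlsplit(uri)
    if parts.scheme != "s3n":
        raise ValueError(f"expected an s3n:// URI, got {uri!r}")
    host = parts.netloc
    if not host.endswith(AWS_SUFFIX):
        # Already in s3n://BUCKET/PATH form; leave it unchanged.
        return uri
    # The bucket name is everything before the last ".s3" label in the host.
    bucket, sep, _ = host.rpartition(".s3")
    if not sep or not bucket:
        raise ValueError(f"cannot find a bucket name in host {host!r}")
    return f"s3n://{bucket}{parts.path}"


print(to_s3n_bucket_uri("s3n://my-bucket.s3.amazonaws.com/data/in"))
```

The rewritten value is what would go into the s3n URI of the downloaded XML before re-uploading it.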

Created 10-08-2015 08:25 PM

Did you add the following properties to your core-site.xml?

fs.s3n.awsAccessKeyId

fs.s3n.awsSecretAccessKey
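
As a quick way to check this, the following Python sketch parses a core-site.xml document and reports which of the two properties are missing or empty. The helper name `missing_s3n_properties` and the placeholder value in the sample are illustrative assumptions; only the two property names come from this thread.

```python
import xml.etree.ElementTree as ET

# The two properties named above.
REQUIRED = ("fs.s3n.awsAccessKeyId", "fs.s3n.awsSecretAccessKey")


def missing_s3n_properties(core_site_xml: str) -> list:
    """Return the required property names that are absent or have no value."""
    root = ET.fromstring(core_site_xml)
    defined = {
        prop.findtext("name", default="").strip()
        for prop in root.iter("property")
        if (prop.findtext("value") or "").strip()
    }
    return [name for name in REQUIRED if name not in defined]


# Sample core-site.xml content with only one of the two properties set.
# YOUR_ACCESS_KEY_ID is a placeholder, not a real credential.
SAMPLE = """<configuration>
  <property>
    <name>fs.s3n.awsAccessKeyId</name>
    <value>YOUR_ACCESS_KEY_ID</value>
  </property>
</configuration>"""

print(missing_s3n_properties(SAMPLE))  # ['fs.s3n.awsSecretAccessKey']
```

To check a real cluster, read the contents of your own core-site.xml into a string and pass it to the function.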

Created 10-24-2015 12:26 AM

The issue raised by @Jeremy Dyer will be fixed by the Falcon team in the Dal-M20 release.

Created 11-28-2017 09:10 AM

Hi Team, I tried the above, and the job status is KILLED after running the workflow. In Oozie, I can see the workflow status change from RUNNING to KILLED. Is there a way to troubleshoot this? I can run hadoop fs -ls commands against my S3 bucket, so I definitely have access. I suspect it's the S3 URL. I tried downloading the XML, changing the URL, and re-uploading it, with no luck. Any other suggestions? I appreciate your help and support in advance. Regards,

Anil