Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Flowfile absolute path Nifi

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Flowfile absolute path Nifi

- Labels:

-

Apache NiFi

Created on 03-20-2018 09:30 AM - edited 08-18-2019 12:42 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm trying to load the flow files into MySQL database using bulk load option. Below is the query I'm using as part of the UpdateAttribute processor and passing that query to PutSQL after updating the parameters to do bulk load.

LOAD DATA INFILE '${absolute.path}${filename}' INTO TABLE ${dest.database}.${db.table.name} FIELDS TERMINATED BY',' LINES TERMINATED BY'\n'When I ran the flow it's failing saying file not found exception.

There were a total of1 FlowFiles that failed,0 that succeeded,and0 that were notexecuteand will be routed to retry;: java.sql.BatchUpdateException: Unable toopenfile'data.csv'for'LOAD DATA INFILE command.Due to underlying IOException:`**BEGIN NESTED EXCEPTION ** java.io.FileNotFoundException MESSAGE: data.csv (No such fileor directory) java.io.FileNotFoundException: data.csv (No such fileor directory).

Here MySQL Server and Nifi are on different nodes so I can't use LOAD DATA LOCAL INFILE query.

I'm not sure why I'm getting file not found exception even though I mentioned the complete absolute path of the flow file in the SQL Query.

When I use query with hard coded file name and providing the absolute path of the file in nifi node, it's working as expected.

Working:

<code>LOAD DATA LOCAL INFILE '/path/in/nifi/node/to/file/data.csv' INTO TABLE ${dest.database}.${db.table.name} FIELDS TERMINATED BY',' LINES TERMINATED BY'\n'}Question is how to get the absolute path of the flow file and load the same flow file into mysql.

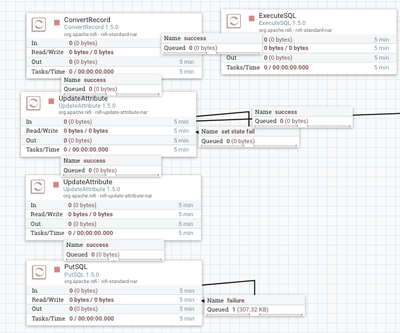

Flow:

Created 03-21-2018 01:57 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

"Absolute path" of the flow file is where it is read from and where it is written to! For example "ListFile" lists all the files in a given directory and creates an attribute "absolute.path" which DO NOT include the file name. Another example can be PutHDFS. It writes the file to HDFS and, no prize for guessing, create an attribute called "absolute.path" similar to the previous example. Question is do you have any such processor in your flow? At least the snapshot doesn't show any! Second, do you need to commit the data to disk before writing to MySQL, probably not!

So how to write the data to MySQL? I would recommend using PutDatabaseRecord processor! It fits the bill in this use case.

Hope that helps!

Created 03-21-2018 05:26 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Rahul Soni,

I tried the approach and it's working as expected, however there are billions of records I need to write to MySQL table and when I checked the performance for PutDatabaseRecord, it's taking quite long time. As other possible solution to improve the performance(Bulk load data into MySQL).

Created 04-01-2018 04:35 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You can use multiple options. But they have there if's and buts! 🙂

Follows the best option that I can think of!

Use MergeContent to merge multiple flow files to one bigger file, put the bigger flow file on Local disk and use "LOAD DATA" statement from MySQL. Will be very fast!

Let me know if you need additional help on the topic!

If the answer helped you resolve your query, actual or new :), please mark the answer as Accepted!