HDFS Datanode Directory

Labels: Apache Hadoop

Created 02-04-2019 06:49 PM

Hi All,

I have 6 datanodes in my cluster. Those 6 servers have different mount points.

on .50: /datadrv1, /datadrv2, /datadrv3

on 51: /datadrv1, /datadrv2

on 52: /data1

on 53: /datadrv1

on 54: /data

on 55: /data1

In the HDFS config, the datanode directories are specified as /datadrv1/hadoop/hdfs/data and /data1/hadoop/hdfs/data.

Because of these different mount points, data is going to the root filesystem, since Hadoop does not find the exact path on every server. It creates the directories that are not present on a server under root.

So my question is: does HDFS grouping solve this problem? If yes, please provide me the steps.

PS: Please don't just provide a document link. If you attach a document, please tell me the exact steps to follow.

Thanks and Regards.
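The situation described in the question can be sketched in a few lines of Python. This is only an illustration, not something taken from HDFS itself: the server names and mount points are copied from the post, while `is_under` and `dirs_without_mount` are hypothetical helper names. For each server it lists the configured directories that have no matching data mount, which are the ones that would end up on the root filesystem.

```python
# Mount points per server, copied from the question above.
SERVER_MOUNTS = {
    "50": ["/datadrv1", "/datadrv2", "/datadrv3"],
    "51": ["/datadrv1", "/datadrv2"],
    "52": ["/data1"],
    "53": ["/datadrv1"],
    "54": ["/data"],
    "55": ["/data1"],
}

# Directories currently listed in the HDFS config, per the question.
CONFIGURED_DIRS = ["/datadrv1/hadoop/hdfs/data", "/data1/hadoop/hdfs/data"]


def is_under(path, mount):
    """Return True if path is the mount point itself or lies below it."""
    mount = mount.rstrip("/")
    return path == mount or path.startswith(mount + "/")


def dirs_without_mount(server_mounts, configured_dirs):
    """Map each server to the configured dirs that have no data mount there."""
    return {
        server: [d for d in configured_dirs
                 if not any(is_under(d, m) for m in mounts)]
        for server, mounts in server_mounts.items()
    }


if __name__ == "__main__":
    for server, missing in dirs_without_mount(SERVER_MOUNTS, CONFIGURED_DIRS).items():
        print(server, missing if missing else "all configured dirs are on a data mount")
```

With the two configured directories from the question, every server has at least one configured path without a matching mount, and server 54 has none of them mounted.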

Created 02-04-2019 09:42 PM

From your configuration and the errors you have encountered, it seems the disks mounted at /datadrv1 and /data1 are completely full, yet /datadrv2, /datadrv3 and /data are not used. HDFS gets its storage locations from dfs.datanode.data.dir, which should have the comma-separated values below:

/datadrv1/hadoop/hdfs/data,/data1/hadoop/hdfs/data,/data/hadoop/hdfs/data,/datadrv2/hadoop/hdfs/data,/datadrv3/hadoop/hdfs/data

Instead of ONLY /datadrv1/hadoop/hdfs/data and /data1/hadoop/hdfs/data.

Solution

First, run the command below as the hdfs user. You should see all 6 data nodes reported here, each with its DFS Remaining, DFS Used and DFS Used% among other values.

$ hdfs dfsadmin -report
Configured Capacity: 8152940544 (7.59 GB)
Present Capacity: 6912588663 (6.44 GB)
DFS Remaining: 3826170743 (3.56 GB)
DFS Used: 3086417920 (2.87 GB)
DFS Used%: 44.65%
Under replicated blocks: 1389
Blocks with corrupt replicas: 0
Missing blocks: 0
Missing blocks (with replication factor 1): 0

----Output----

Live datanodes (1):
Name: 192.xxx.0.31:50010 (beta.frog.cr)
Hostname: beta.frog.cr
Decommission Status : Normal
Configured Capacity: 8152940544 (7.59 GB)
DFS Used: 3086417920 (2.87 GB)
Non DFS Used: 0 (0 B)
DFS Remaining: 3826170743 (3.56 GB)
DFS Used%: 37.86%
DFS Remaining%: 46.93%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 12
Last contact: Mon Feb 04 22:13:13 CET 2019
Last Block Report: Mon Feb 04 21:26:27 CET 2019
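If you want to check the report without reading it by eye, a small Python sketch like the one below could help. It assumes the report text has the `Key: value` layout shown in this post, with one entry per line and each data node section starting with a `Name:` line. `parse_report` is a hypothetical helper name, and the sample text is a shortened copy of the output above.

```python
def parse_report(text):
    """Split `hdfs dfsadmin -report` text into a cluster summary and node entries.

    Assumes one "Key: value" pair per line and that each data node
    section begins with a "Name:" line, as in the output quoted above.
    """
    summary, nodes, current = {}, [], None
    for raw in text.splitlines():
        key, sep, value = raw.strip().partition(":")
        key, value = key.strip(), value.strip()
        if not sep or not value:
            continue  # skip blank lines and headers such as "Live datanodes (1):"
        if key == "Name":
            current = {}
            nodes.append(current)
        target = summary if current is None else current
        target[key] = value
    return summary, nodes


SAMPLE = """\
Configured Capacity: 8152940544 (7.59 GB)
DFS Used%: 44.65%

Live datanodes (1):
Name: 192.xxx.0.31:50010 (beta.frog.cr)
Hostname: beta.frog.cr
DFS Remaining: 3826170743 (3.56 GB)
DFS Used%: 37.86%
"""

if __name__ == "__main__":
    summary, nodes = parse_report(SAMPLE)
    print("cluster DFS Used%:", summary["DFS Used%"])
    print("data nodes reporting:", len(nodes))
    for node in nodes:
        print(node["Hostname"], node["DFS Used%"], node["DFS Remaining"])
```

On the cluster in question, the number of node entries should be 6 once all data nodes are reporting.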

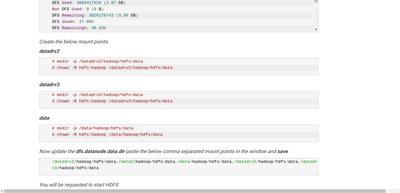

Create the directories below on these mount points

datadrv2

# mkdir -p /datadrv2/hadoop/hdfs/data
# chown -R hdfs:hadoop /datadrv2/hadoop/hdfs/data

datadrv3

# mkdir -p /datadrv3/hadoop/hdfs/data
# chown -R hdfs:hadoop /datadrv3/hadoop/hdfs/data

data

# mkdir -p /data/hadoop/hdfs/data
# chown -R hdfs:hadoop /data/hadoop/hdfs/data

Now update dfs.datanode.data.dir: paste the comma-separated directories below into the window and save

/datadrv1/hadoop/hdfs/data,/data1/hadoop/hdfs/data,/data/hadoop/hdfs/data,/datadrv2/hadoop/hdfs/data,/datadrv3/hadoop/hdfs/data
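To avoid typos when assembling that value by hand, it can be generated from the list of mount points. This is a minimal sketch: `data_dir_value` is a hypothetical helper, and the `hadoop/hdfs/data` suffix is simply the one used throughout this thread.

```python
def data_dir_value(mount_points, suffix="hadoop/hdfs/data"):
    """Build a comma-separated dfs.datanode.data.dir value from mount points."""
    return ",".join(f"{m.rstrip('/')}/{suffix}" for m in mount_points)


if __name__ == "__main__":
    # Mount points in the same order as the value suggested above.
    mounts = ["/datadrv1", "/data1", "/data", "/datadrv2", "/datadrv3"]
    print(data_dir_value(mounts))
```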

You will be prompted to restart HDFS. After the above change, restart the data nodes.

At this point, run the HDFS rebalancer; how long it takes depends on the amount of data to copy.

Rebalancing HDFS

HDFS provides a “balancer” utility to help balance the blocks across DataNodes in the cluster. To initiate a balancing process, follow these steps:

- In Ambari Web, browse to Services > HDFS > Summary.

- Click Service Actions, and then click Rebalance HDFS.

- Enter the Balance Threshold value as a percentage of disk capacity.

- Click Start

The balancer uses a default threshold of 10 per cent. This means that the balancer will balance data by moving blocks from over-utilized to under-utilized nodes until each DataNode’s disk usage differs by no more than plus or minus 10 per cent from the average disk usage in the cluster. Sometimes you may wish to set the threshold to a different level, for example when free space in the cluster is getting low and you want to keep the used storage levels on the individual DataNodes within a smaller range than the default of plus or minus 10 per cent, e.g. 5 per cent.
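The threshold rule can be illustrated with a little arithmetic. The sketch below is not the balancer's own code: `classify_datanodes` is a hypothetical helper, the byte counts are made-up numbers, and the cluster average is assumed to be total used space divided by total capacity.

```python
def classify_datanodes(nodes, threshold=10.0):
    """Classify nodes against the cluster average utilization.

    nodes maps a node name to (dfs_used_bytes, capacity_bytes).
    Returns (cluster_used_percent, {name: label}).
    """
    total_used = sum(used for used, _ in nodes.values())
    total_capacity = sum(cap for _, cap in nodes.values())
    cluster_pct = 100.0 * total_used / total_capacity

    labels = {}
    for name, (used, cap) in nodes.items():
        node_pct = 100.0 * used / cap
        if node_pct > cluster_pct + threshold:
            labels[name] = "over-utilized"
        elif node_pct < cluster_pct - threshold:
            labels[name] = "under-utilized"
        else:
            labels[name] = "within threshold"
    return cluster_pct, labels


if __name__ == "__main__":
    # Made-up usage figures, only to show the effect of the threshold.
    example = {"dn1": (58, 100), "dn2": (42, 100)}
    for threshold in (10.0, 5.0):
        print(threshold, classify_datanodes(example, threshold))
```

In this made-up example both nodes count as balanced at the default threshold of 10, while lowering it to 5 marks one as over-utilized and the other as under-utilized, so the balancer would have blocks to move.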

This should resolve your problem. Please let me know.

Created on 02-05-2019 09:14 AM - edited 08-17-2019 02:43 PM

@geoffrey Shelton okot

You have suggested creating mount points. But where should I create them, given that they are already present on other datanodes?

Are you asking me to create the mount points on every datanode in the cluster?

It is like this: on one server there are three mount points, on the second there are two, on the third there is only one, and so on. What is happening is that whenever data comes into HDFS, if it does not find a path that is in the config file, it creates that directory under root.

If I list all the mount points that I have on the different servers, won't that create duplicate data blocks? I mean, will one block be written twice because I have listed every mount point in dfs.datanode.data.dir?

Created 02-05-2019 09:37 AM

The following mount points already exist on the servers 50 and 52

/datadrv1/hadoop/hdfs/data and /data1/hadoop/hdfs/data.

Create the new ones on these servers

Datadrv2 on server 50 and 51

# mkdir -p /datadrv2/hadoop/hdfs/data
# chown -R hdfs:hadoop /datadrv2/hadoop/hdfs/data

Datadrv3 on server 50

# mkdir -p /datadrv3/hadoop/hdfs/data
# chown -R hdfs:hadoop /datadrv3/hadoop/hdfs/data

Data on server 54

# mkdir -p /data/hadoop/hdfs/data
# chown -R hdfs:hadoop /data/hadoop/hdfs/data

Can you include the full output of the command below? Please mask your sensitive data.

$ hdfs dfsadmin -report

Please get back to me.

Created 02-05-2019 10:31 AM

But Geoffrey, whatever you are suggesting already exists on the respective servers.

For example, you say to create datadrv2 on 50 and 51. But as I mentioned earlier, it is already present.

Please see this again:

on .50: /datadrv1, /datadrv2, /datadrv3

on 51: /datadrv1, /datadrv2

on 52: /data1

on 53: /datadrv1

on 54: /data

on 55: /data1

These directories are already there on the respective servers.

So when data comes into HDFS, it is automatically written to the paths mentioned in the HDFS config: {/datadrv1/hadoop/hdfs/data,/data1/hadoop/hdfs/data}

But if it does not find the path, for example /datadrv1 is not there on 52, it creates the directory /datadrv1 under root and puts the data there. That is why the root space is getting full: the data should be going to the mounted directories, but it is not.

The same happens on the other servers too.

Do you see my problem now?