HDFS- Non DFS space allocation/capacity

- Labels: Apache Hadoop

Created on 04-14-2016 03:32 PM - edited 08-18-2019 03:50 AM

Hi all,

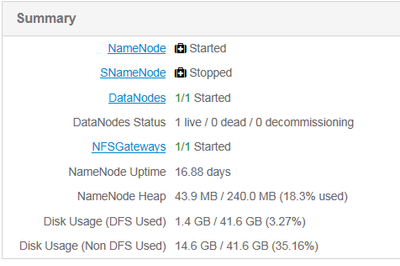

I have a query in relation to Space Allocation within HDFS. I am currently trying to run a large query in Hive (a wordsplit on a large file). However, I am unable to complete this due to running out of disk space.

I have deleted any unnecessary files from HDFS and have reduced my starting disk usage to 38%.

However, I am wondering what Non DFS is, as it appears to be taking up the majority of my disk space.

How can I go about reducing the disk space that Non DFS takes up?

Any help is greatly appreciated.

Thanks in advance.

Created 04-14-2016 06:28 PM

One thing to keep in mind is that your queries will fail if too many tasks fail. This can also happen if one or more of your local dirs is on a small partition. I'm not sure about your cluster setup, but Ambari sometimes configures the local dirs simply by taking all available non-root partitions, and this can lead to these problems. Given the small amount of data you have in the system, I find it hard to believe that your query fails because YARN runs out of disk space overall. I think it is more likely that one of the local dirs has been set on a small partition.

Check yarn.nodemanager.local-dirs and see whether one of the folders in it is on a small partition. You can simply change that and rerun your jobs.
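To act on that check, here is a minimal Python sketch that takes a yarn.nodemanager.local-dirs value (a comma-separated list of directories) and reports the free space on the filesystem holding each entry. It assumes the entries are plain local paths; the function name check_local_dirs, the 10 GiB threshold, and the example paths are hypothetical, so substitute the value from your own yarn-site.xml or the Ambari YARN configs.

```python
import os
import shutil

# Hypothetical threshold: flag any local dir whose filesystem has less free space than this.
MIN_FREE_BYTES = 10 * 1024**3  # 10 GiB, an assumed value; tune it for your jobs


def check_local_dirs(local_dirs_value, min_free_bytes=MIN_FREE_BYTES):
    """Report free space for each entry of a yarn.nodemanager.local-dirs value.

    Assumes the value is a comma-separated list of plain local paths.
    Returns a list of (path, free_bytes, is_too_small) tuples; directories that
    do not exist are reported with free_bytes=None and is_too_small=True.
    """
    results = []
    for raw in local_dirs_value.split(","):
        path = raw.strip()
        if not path:
            continue  # skip empty entries, e.g. from a trailing comma
        if not os.path.isdir(path):
            results.append((path, None, True))
            continue
        free = shutil.disk_usage(path).free
        results.append((path, free, free < min_free_bytes))
    return results


if __name__ == "__main__":
    # Example value only (hypothetical paths); copy the real one from your cluster config.
    value = "/hadoop/yarn/local,/tmp/yarn/local"
    for path, free, small in check_local_dirs(value):
        if free is None:
            print(f"{path}: missing")
        else:
            flag = "  <-- small partition?" if small else ""
            print(f"{path}: {free / 1024**3:.1f} GiB free{flag}")
```

Run it on each NodeManager host; a directory that reports far less free space than the others is a good candidate to drop from the list.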

Created 04-14-2016 03:41 PM

You can decrease the space reserved for non-HDFS use by setting dfs.datanode.du.reserved to a lower value. It is set per disk volume. You can also free up space by deleting unwanted files from the DataNode machine, such as Hadoop logs and any non-Hadoop files on the disk. This cannot be done with Hadoop commands.
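A rough Python sketch of the arithmetic behind these numbers, assuming the reporting used by older Hadoop 2.x releases: each volume's configured capacity is its disk size minus dfs.datanode.du.reserved, and Non DFS Used is derived as configured capacity minus DFS Used minus DFS Remaining (newer releases compute it differently). The function names and all figures are illustrative only.

```python
GiB = 1024**3


def configured_capacity(volume_sizes, reserved_per_volume):
    """Total capacity HDFS may use across volumes (assumed older Hadoop 2.x model).

    dfs.datanode.du.reserved applies to every volume, so N volumes hold back
    N times that value. Each volume's capacity is floored at zero.
    """
    return sum(max(size - reserved_per_volume, 0) for size in volume_sizes)


def non_dfs_used(capacity, dfs_used, dfs_remaining):
    """Non DFS Used derived from the other reported figures, floored at zero."""
    return max(capacity - dfs_used - dfs_remaining, 0)


if __name__ == "__main__":
    volumes = [100 * GiB] * 4  # hypothetical: four 100 GiB data disks
    cap = configured_capacity(volumes, reserved_per_volume=10 * GiB)
    print(cap // GiB)  # 360 (400 GiB minus 4 x 10 GiB reserved)
    print(non_dfs_used(cap, dfs_used=50 * GiB, dfs_remaining=200 * GiB) // GiB)  # 110
```

The per-volume point matters on machines with many data disks: a modest reserved value multiplied by the number of volumes can add up to a large share of the node.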

Created 04-14-2016 03:54 PM

Thanks for the quick response! Are these things I can do on the Hortonworks console or do I need to ssh into the instance?

I am new to Hadoop so apologies if the above question seems elementary!

Created 04-14-2016 04:12 PM

I believe that to delete the logs and other non-HDFS data you need to log in to the machine and run the rm command. To set the dfs.datanode.du.reserved property, you can log in to Ambari and search for it in the HDFS > Configs section (please see the attached screenshot). However, I think the default value of dfs.datanode.du.reserved is sufficient in most cases. Regarding your job, what is the size of the data you are trying to process?
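Before running rm by hand, it can help to see which files are actually large and old. This Python sketch walks a directory and lists files older than a given age and above a given size, largest first; it deletes nothing. The function name old_large_files, the default thresholds, and the /var/log/hadoop path are assumptions, since log locations vary by distribution.

```python
import os
import time


def old_large_files(root, min_age_days=7, min_size_bytes=1024**2):
    """List files under root older than min_age_days and at least min_size_bytes.

    Returns (size_bytes, path) tuples sorted largest first. Nothing is deleted;
    review the list before removing anything manually.
    """
    cutoff = time.time() - min_age_days * 86400
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.stat(path, follow_symlinks=False)
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if st.st_mtime < cutoff and st.st_size >= min_size_bytes:
                found.append((st.st_size, path))
    found.sort(reverse=True)
    return found


if __name__ == "__main__":
    # /var/log/hadoop is an assumed location; check where your install writes logs.
    for size, path in old_large_files("/var/log/hadoop")[:20]:
        print(f"{size / 1024**2:10.1f} MiB  {path}")
```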

Created 04-15-2016 08:50 AM

Hi, please let me know if you are still stuck on this issue. Thanks

Created 04-17-2016 06:58 PM

Thanks very much for your help. This issue has been resolved.

Created 04-17-2016 06:59 PM

@Benjamin Leonhardi - This was indeed part of the reason. Thank you very much for your help!