HDFS space allocation question

Labels: Apache Hadoop

Created 03-01-2016 03:09 PM

I added 200 GB to one of the data nodes. I can see the new space on the Ambari dashboard, but the HDFS size remains the same. How can I make that space available to HDFS?

Created on 03-01-2016 03:46 PM - edited 08-19-2019 05:05 AM

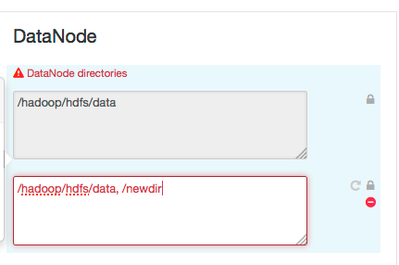

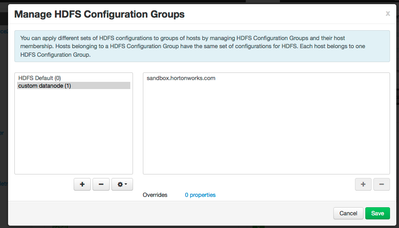

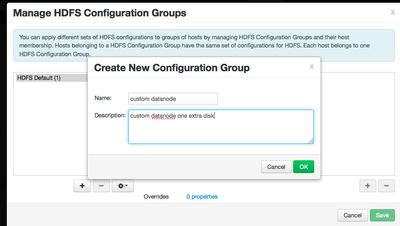

You need to add this node to a config group in Ambari, because its disk layout no longer matches the other nodes. After doing that, go to the HDFS service in Ambari and, under DataNode directories, add a directory pointing to the new disk.

Created 03-02-2016 01:01 AM

@Prakash Punj I have moved your fix under @Artem Ervits' advice.

Created 03-01-2016 05:45 PM

Looks like I am missing something. I tried the steps above, but the DataNode started failing on that node. Below is what I did:

a.) Created a new configuration group with this DataNode as a member of that group.

b.) HDFS --> Config --> DataNode directories: added /usr1 (the new directory), e.g. /hadoop/hdfs/data,/usr1

c.) Saved the configuration and restarted the DataNode. The DataNode fails to start.

I can see the new space in the disk availability:

| Filesystem | Size | Used | Avail | Use% | Mounted on |
|---|---|---|---|---|---|
| /dev/vda1 | 7.8G | 5.7G | 1.7G | 77% | / |
| tmpfs | 3.9G | 0 | 3.9G | 0% | /dev/shm |
| /dev/vdb | 197G | 8.8G | 178G | 5% | /usr1 |
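One way to sanity-check the directories from step b before restarting the DataNode is to look at who owns each one and how much free space it has. The sketch below is only an illustration and is not part of Ambari or Hadoop: `owner_name` and `check_data_dirs` are hypothetical helpers, the value is assumed to be a plain comma-separated list of local paths (no `[DISK]`-style prefixes or `file://` URIs), and the DataNode is assumed to run as the `hdfs` user.

```python
import os
import pwd
import shutil


def owner_name(uid):
    """Return the user name for a uid, or the uid as text if it has no passwd entry."""
    try:
        return pwd.getpwuid(uid).pw_name
    except KeyError:
        return str(uid)


def check_data_dirs(data_dir_value, expected_user):
    """Report existence, owner and free space for each configured directory."""
    report = []
    for path in (p.strip() for p in data_dir_value.split(",")):
        if not path:
            continue
        entry = {"path": path, "exists": os.path.isdir(path)}
        if entry["exists"]:
            owner = owner_name(os.stat(path).st_uid)
            entry["owner"] = owner
            # The DataNode can only chmod a directory its own user owns.
            entry["owner_ok"] = owner == expected_user
            entry["free_gb"] = round(shutil.disk_usage(path).free / 1024**3, 1)
        report.append(entry)
    return report


if __name__ == "__main__":
    # Value taken from step b; "hdfs" is an assumed service user.
    for entry in check_data_dirs("/hadoop/hdfs/data,/usr1", "hdfs"):
        print(entry)
```

A freshly mounted disk is often owned by root, so if the owner reported for /usr1 is not the DataNode user, that is worth looking at before the restart.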

Created 03-01-2016 06:02 PM

@Prakash Punj please post logs for datanode.

Created 03-01-2016 05:49 PM

Rebalance the HDFS and see if it helps.

Created 03-01-2016 07:17 PM

@Artem Ervits @Neeraj Sabharwal The DataNode logs are below. The new disk is mounted at /usr1.

************************************************************/

2016-03-01 18:09:44,670 INFO datanode.DataNode (LogAdapter.java:info(45)) - registered UNIX signal handlers for [TERM, HUP, INT]

2016-03-01 18:09:45,580 WARN datanode.DataNode (DataNode.java:checkStorageLocations(2427)) - Invalid dfs.datanode.data.dir /usr1 :

EPERM: Operation not permitted

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.chmodImpl(Native Method)

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.chmod(NativeIO.java:230)

at org.apache.hadoop.fs.RawLocalFileSystem.setPermission(RawLocalFileSystem.java:727)

at org.apache.hadoop.fs.FilterFileSystem.setPermission(FilterFileSystem.java:502)

at org.apache.hadoop.util.DiskChecker.mkdirsWithExistsAndPermissionCheck(DiskChecker.java:140)

at org.apache.hadoop.util.DiskChecker.checkDir(DiskChecker.java:156)

at org.apache.hadoop.hdfs.server.datanode.DataNode$DataNodeDiskChecker.checkDir(DataNode.java:2382)

at org.apache.hadoop.hdfs.server.datanode.DataNode.checkStorageLocations(DataNode.java:2424)

at org.apache.hadoop.hdfs.server.datanode.DataNode.makeInstance(DataNode.java:2406)

at org.apache.hadoop.hdfs.server.datanode.DataNode.instantiateDataNode(DataNode.java:2298)

at org.apache.hadoop.hdfs.server.datanode.DataNode.createDataNode(DataNode.java:2345)

at org.apache.hadoop.hdfs.server.datanode.DataNode.secureMain(DataNode.java:2526)

at org.apache.hadoop.hdfs.server.datanode.DataNode.main(DataNode.java:2550)

2016-03-01 18:09:45,688 INFO impl.MetricsConfig (MetricsConfig.java:loadFirst(112)) - loaded properties from hadoop-metrics2.properties

2016-03-01 18:09:45,897 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:init(61)) - Initializing Timeline metrics sink.

2016-03-01 18:09:45,898 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:init(79)) - Identified hostname = hdp-hue.asotc, serviceName = datanode

2016-03-01 18:09:45,900 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:init(91)) - Collector Uri: http://hdp-m.asotc:6188/ws/v1/timeline/metrics

2016-03-01 18:09:45,910 INFO impl.MetricsSinkAdapter (MetricsSinkAdapter.java:start(206)) - Sink timeline started

2016-03-01 18:09:45,998 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:startTimer(377)) - Scheduled snapshot period at 60 second(s).

2016-03-01 18:09:45,999 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:start(192)) - DataNode metrics system started

2016-03-01 18:09:46,006 INFO datanode.BlockScanner (BlockScanner.java:<init>(172)) - Initialized block scanner with targetBytesPerSec 1048576

2016-03-01 18:09:46,008 INFO datanode.DataNode (DataNode.java:<init>(418)) - File descriptor passing is enabled.

2016-03-01 18:09:46,008 INFO datanode.DataNode (DataNode.java:<init>(429)) - Configured hostname is hdp-hue.asotc

2016-03-01 18:09:46,018 INFO datanode.DataNode (DataNode.java:startDataNode(1127)) - Starting DataNode with maxLockedMemory = 0

2016-03-01 18:09:46,049 INFO datanode.DataNode (DataNode.java:initDataXceiver(921)) - Opened streaming server at /0.0.0.0:50010

2016-03-01 18:09:46,052 INFO datanode.DataNode (DataXceiverServer.java:<init>(76)) - Balancing bandwith is 6250000 bytes/s

2016-03-01 18:09:46,054 INFO datanode.DataNode (DataXceiverServer.java:<init>(77)) - Number threads for balancing is 5

2016-03-01 18:09:46,058 INFO datanode.DataNode (DataXceiverServer.java:<init>(76)) - Balancing bandwith is 6250000 bytes/s

2016-03-01 18:09:46,058 INFO datanode.DataNode (DataXceiverServer.java:<init>(77)) - Number threads for balancing is 5

2016-03-01 18:09:46,058 INFO datanode.DataNode (DataNode.java:initDataXceiver(936)) - Listening on UNIX domain socket: /var/lib/hadoop-hdfs/dn_socket

2016-03-01 18:09:46,170 INFO mortbay.log (Slf4jLog.java:info(67)) - Logging to org.slf4j.impl.Log4jLoggerAdapter(org.mortbay.log) via org.mortbay.log.Slf4jLog

2016-03-01 18:09:46,184 INFO server.AuthenticationFilter (AuthenticationFilter.java:constructSecretProvider(294)) - Unable to initialize FileSignerSecretProvider, falling back to use random secrets.

2016-03-01 18:09:46,193 INFO http.HttpRequestLog (HttpRequestLog.java:getRequestLog(80)) - Http request log for http.requests.datanode is not defined

2016-03-01 18:09:46,203 INFO http.HttpServer2 (HttpServer2.java:addGlobalFilter(710)) - Added global filter 'safety' (class=org.apache.hadoop.http.HttpServer2$QuotingInputFilter)

2016-03-01 18:09:46,207 INFO http.HttpServer2 (HttpServer2.java:addFilter(685)) - Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context datanode

2016-03-01 18:09:46,208 INFO http.HttpServer2 (HttpServer2.java:addFilter(693)) - Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context static

2016-03-01 18:09:46,208 INFO http.HttpServer2 (HttpServer2.java:addFilter(693)) - Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context logs

2016-03-01 18:09:46,228 INFO http.HttpServer2 (HttpServer2.java:openListeners(915)) - Jetty bound to port 48603

2016-03-01 18:09:46,229 INFO mortbay.log (Slf4jLog.java:info(67)) - jetty-6.1.26.hwx

2016-03-01 18:09:46,545 INFO mortbay.log (Slf4jLog.java:info(67)) - Started HttpServer2$SelectChannelConnectorWithSafeStartup@localhost:48603

2016-03-01 18:09:46,794 INFO web.DatanodeHttpServer (DatanodeHttpServer.java:start(201)) - Listening HTTP traffic on /0.0.0.0:50075

2016-03-01 18:09:46,984 INFO datanode.DataNode (DataNode.java:startDataNode(1144)) - dnUserName = hdfs

2016-03-01 18:09:46,984 INFO datanode.DataNode (DataNode.java:startDataNode(1145)) - supergroup = hdfs

2016-03-01 18:09:47,088 INFO ipc.CallQueueManager (CallQueueManager.java:<init>(56)) - Using callQueue class java.util.concurrent.LinkedBlockingQueue

2016-03-01 18:09:47,117 INFO ipc.Server (Server.java:run(676)) - Starting Socket Reader #1 for port 8010

2016-03-01 18:09:47,157 INFO datanode.DataNode (DataNode.java:initIpcServer(837)) - Opened IPC server at /0.0.0.0:8010

2016-03-01 18:09:47,179 INFO datanode.DataNode (BlockPoolManager.java:refreshNamenodes(152)) - Refresh request received for nameservices: null

2016-03-01 18:09:47,218 INFO datanode.DataNode (BlockPoolManager.java:doRefreshNamenodes(197)) - Starting BPOfferServices for nameservices: <default>

2016-03-01 18:09:47,234 INFO datanode.DataNode (BPServiceActor.java:run(814)) - Block pool <registering> (Datanode Uuid unassigned) service to hdp-m.asotc/10.0.2.23:8020 starting to offer service

2016-03-01 18:09:47,252 INFO ipc.Server (Server.java:run(906)) - IPC Server Responder: starting

2016-03-01 18:09:47,257 INFO ipc.Server (Server.java:run(746)) - IPC Server listener on 8010: starting

2016-03-01 18:09:47,555 INFO common.Storage (Storage.java:tryLock(715)) - Lock on /hadoop/hdfs/data/in_use.lock acquired by nodename 11122@hdp-hue.asotc

2016-03-01 18:09:47,627 INFO common.Storage (BlockPoolSliceStorage.java:recoverTransitionRead(241)) - Analyzing storage directories for bpid BP-472523401-10.0.2.23-1455577364586

2016-03-01 18:09:47,628 INFO common.Storage (Storage.java:lock(675)) - Locking is disabled for /hadoop/hdfs/data/current/BP-472523401-10.0.2.23-1455577364586

2016-03-01 18:09:47,632 INFO datanode.DataNode (DataNode.java:initStorage(1402)) - Setting up storage: nsid=1566085604;bpid=BP-472523401-10.0.2.23-1455577364586;lv=-56;nsInfo=lv=-63;cid=CID-d723cf5b-ba4a-43d3-afe1-781149930f3e;nsid=1566085604;c=0;bpid=BP-472523401-10.0.2.23-1455577364586;dnuuid=6fcfc3f7-8ddb-403a-83ba-4df61c0704fa

2016-03-01 18:09:47,656 FATAL datanode.DataNode (BPServiceActor.java:run(833)) - Initialization failed for Block pool <registering> (Datanode Uuid 6fcfc3f7-8ddb-403a-83ba-4df61c0704fa) service to hdp-m.asotc/10.0.2.23:8020. Exiting.

org.apache.hadoop.util.DiskChecker$DiskErrorException: Too many failed volumes - current valid volumes: 1, volumes configured: 2, volumes failed: 1, volume failures tolerated: 0

at org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl.<init>(FsDatasetImpl.java:289)

at org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetFactory.newInstance(FsDatasetFactory.java:34)

at org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetFactory.newInstance(FsDatasetFactory.java:30)
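The FATAL line in the log above follows from a simple rule: the DataNode refuses to start when the number of failed volumes exceeds `dfs.datanode.failed.volumes.tolerated` (0 here, according to the log). The snippet below is a simplified Python model of that rule, not Hadoop's actual code; `check_volumes` is a hypothetical name used only for illustration.

```python
def check_volumes(configured, failed, tolerated=0):
    """Return the number of valid volumes, or raise if too many have failed."""
    valid = configured - failed
    if failed > tolerated:
        raise RuntimeError(
            "Too many failed volumes - "
            f"current valid volumes: {valid}, "
            f"volumes configured: {configured}, "
            f"volumes failed: {failed}, "
            f"volume failures tolerated: {tolerated}"
        )
    return valid


if __name__ == "__main__":
    # Values from the log: /hadoop/hdfs/data is valid, /usr1 failed its check.
    try:
        check_volumes(configured=2, failed=1, tolerated=0)
    except RuntimeError as err:
        print(err)
```

With the values from the log (2 configured, 1 failed, 0 tolerated) the check fails. Raising the tolerance would let the DataNode start, but without /usr1. The `EPERM` from `chmod` on /usr1 earlier in the log suggests the directory is probably not owned by the user the DataNode runs as (`dnUserName = hdfs`), so fixing the ownership of that directory is likely the better route.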

Created 03-01-2016 07:53 PM

@Neeraj Sabharwal @Artem Ervits

Below are the logs:

************************************************************/

2016-03-01 18:09:44,634 INFO datanode.DataNode (LogAdapter.java:info(45)) - STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting DataNode

STARTUP_MSG: host = hdp-hue.asotc/10.0.2.28

STARTUP_MSG: args = []

STARTUP_MSG: version = 2.7.1.2.3.4.0-3485

STARTUP_MSG: classpath = /usr/hdp/current/hadoop-client/conf:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-math3-3.1.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/curator-framework-2.7.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/htrace-core-3.1.0-incubating.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/asm-3.2.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/xmlenc-0.52.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/api-asn1-api-1.0.0-M20.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/httpclient-4.2.5.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jetty-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/mockito-all-1.8.5.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-cli-1.2.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/activation-1.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/netty-3.6.2.Final.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/java-xmlbuilder-0.4.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jackson-annotations-2.2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jaxb-api-2.2.2.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/azure-storage-2.2.0.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/microsoft-windowsazure-storage-sdk-0.6.0.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jsch-0.1.42.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/slf4j-api-1.7.10.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-codec-1.4.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jets3t-0.9.0.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-compress-1.4.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/curator-recipes-2.7.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/ranger-hdfs-plugin-shim-0.5.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/avro-1.7.4.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/hamcrest-core-1.3.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jackson-core-asl-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/apacheds-i18n-2.0.0-M15.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/aws-java-sdk-1.7.4.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/guava-11.0.2.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jettison-1.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jersey-core-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-beanutils-core-1.8
.0.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/log4j-1.2.17.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/slf4j-log4j12-1.7.10.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/stax-api-1.0-2.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/ojdbc6.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jackson-databind-2.2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jsp-api-2.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/ranger-yarn-plugin-shim-0.5.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/curator-client-2.7.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/ranger-plugin-classloader-0.5.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jackson-xc-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/snappy-java-1.0.4.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/servlet-api-2.5.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-collections-3.2.2.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jackson-jaxrs-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/api-util-1.0.0-M20.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jackson-core-2.2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jaxb-impl-2.2.3-1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/paranamer-2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/xz-1.0.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jersey-json-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/httpcore-4.2.5.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-lang-2.6.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-httpclient-3.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/zookeeper-3.4.6.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-net-3.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-configuration-1.6.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-io-2.4.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jersey-server-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-digester-1.8.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/junit-4.11.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/gson-2.2.4.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/jsr305-3.0.0.jar:/usr/hdp/2.3.4.0-3485
/hadoop/lib/commons-logging-1.1.3.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-beanutils-1.7.0.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-aws.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-common-2.7.1.2.3.4.0-3485-tests.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-common-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-common-tests.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-nfs.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-common.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-annotations.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-azure-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-nfs-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-aws-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-annotations-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-auth.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-auth-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop/.//hadoop-azure.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/./:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/okio-1.4.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/htrace-core-3.1.0-incubating.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/asm-3.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/xmlenc-0.52.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/jetty-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/netty-all-4.0.23.Final.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/xercesImpl-2.9.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/commons-cli-1.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/netty-3.6.2.Final.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/commons-codec-1.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/commons-daemon-1.0.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/xml-apis-1.3.04.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/guava-11.0.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/jersey-core-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/log4j-1.2.17.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/okhttp-2.4.0.jar:/usr/hdp/2.3.4.0-3485/hadoo
p-hdfs/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/leveldbjni-all-1.8.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/servlet-api-2.5.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/commons-lang-2.6.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/commons-io-2.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/jersey-server-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/jsr305-3.0.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/lib/commons-logging-1.1.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/.//hadoop-hdfs-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/.//hadoop-hdfs-nfs.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/.//hadoop-hdfs-tests.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/.//hadoop-hdfs-nfs-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/.//hadoop-hdfs-2.7.1.2.3.4.0-3485-tests.jar:/usr/hdp/2.3.4.0-3485/hadoop-hdfs/.//hadoop-hdfs.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-math3-3.1.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/curator-framework-2.7.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/htrace-core-3.1.0-incubating.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/asm-3.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/guice-servlet-3.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/fst-2.24.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/xmlenc-0.52.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/api-asn1-api-1.0.0-M20.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/httpclient-4.2.5.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jetty-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/zookeeper-3.4.6.2.3.4.0-3485-tests.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-cli-1.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/activation-1.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/netty-3.6.2.Final.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/java-xmlbuilder-0.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jackson-annotations-2.2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jaxb-ap
i-2.2.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/microsoft-windowsazure-storage-sdk-0.6.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jsch-0.1.42.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-codec-1.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jets3t-0.9.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-compress-1.4.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/curator-recipes-2.7.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/javax.inject-1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/avro-1.7.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jackson-core-asl-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/apacheds-i18n-2.0.0-M15.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/guava-11.0.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jettison-1.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jersey-core-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-beanutils-core-1.8.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/log4j-1.2.17.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/stax-api-1.0-2.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/objenesis-2.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jersey-guice-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jackson-databind-2.2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jsp-api-2.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/aopalliance-1.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/leveldbjni-all-1.8.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/javassist-3.18.1-GA.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/curator-client-2.7.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jackson-xc-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/snappy-java-1.0.4.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/servlet-api-2.5.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-collections-3.2.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/api-util-1.0.0-M20.jar:/usr/hdp/2.3.4.0-3485/hado
op-yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jackson-core-2.2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/guice-3.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/paranamer-2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/xz-1.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jersey-json-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/httpcore-4.2.5.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-lang-2.6.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-httpclient-3.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/zookeeper-3.4.6.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-net-3.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-configuration-1.6.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-io-2.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jersey-server-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-digester-1.8.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/gson-2.2.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jsr305-3.0.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-logging-1.1.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/commons-beanutils-1.7.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/lib/jersey-client-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-client-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-sharedcachemanager-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-registry.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-tests.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-client.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-nodemanager.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-common-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-common.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-applications-distributedshell.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/./
/hadoop-yarn-server-sharedcachemanager.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-tests-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-timeline-plugins-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-registry-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-api.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-nodemanager-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-applications-distributedshell-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-api-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-applicationhistoryservice.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-common.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-web-proxy.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-timeline-plugins.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-applicationhistoryservice-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-applications-unmanaged-am-launcher.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-resourcemanager-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-applications-unmanaged-am-launcher-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-common-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-resourcemanager.jar:/usr/hdp/2.3.4.0-3485/hadoop-yarn/.//hadoop-yarn-server-web-proxy-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/asm-3.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/guice-servlet-3.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/netty-3.6.2.Final.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/commons-compress-1.4.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/javax.inject-1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/avro-1.7.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/hamcrest-cor
e-1.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/jersey-core-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/log4j-1.2.17.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/jersey-guice-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/aopalliance-1.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/leveldbjni-all-1.8.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/guice-3.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/paranamer-2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/xz-1.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/commons-io-2.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/jersey-server-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/lib/junit-4.11.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-math3-3.1.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//curator-framework-2.7.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-lang3-3.3.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//htrace-core-3.1.0-incubating.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//joda-time-2.9.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//asm-3.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//xmlenc-0.52.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//api-asn1-api-1.0.0-M20.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//httpclient-4.2.5.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jetty-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//metrics-core-3.0.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//mockito-all-1.8.5.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-shuffle.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-sls.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-sls-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-open
stack-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-cli-1.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//activation-1.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//netty-3.6.2.Final.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-hs-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-examples-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//java-xmlbuilder-0.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-ant.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jaxb-api-2.2.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-extras-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-openstack.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-common.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//microsoft-windowsazure-storage-sdk-0.6.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient-tests.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jsch-0.1.42.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-codec-1.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jets3t-0.9.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-app.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-compress-1.4.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-ant-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//curator-recipes-2.7.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//avro-1.7.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hamcrest-core-1.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-distcp.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jackson-core-asl-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//apacheds-i18n-2.0.0-M15.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-shuffle-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-rumen.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-archives-2.7.1.2.3.4.0-3485.jar
:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//guava-11.0.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jettison-1.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-common-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jersey-core-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-archives.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-streaming-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-beanutils-core-1.8.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//log4j-1.2.17.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient-2.7.1.2.3.4.0-3485-tests.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-streaming.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//stax-api-1.0-2.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-gridmix-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jsp-api-2.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//protobuf-java-2.5.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-hs.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-core.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-hs-plugins-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//curator-client-2.7.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jackson-xc-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-extras.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//snappy-java-1.0.4.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//servlet-api-2.5.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-collections-3.2.2.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jackson-jaxrs-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-rumen-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-examples.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-core-2.7.1
.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//api-util-1.0.0-M20.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jackson-core-2.2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-auth.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jaxb-impl-2.2.3-1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-app-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-hs-plugins.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//paranamer-2.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//xz-1.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jersey-json-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-auth-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//httpcore-4.2.5.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-datajoin.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-lang-2.6.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-httpclient-3.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//zookeeper-3.4.6.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-net-3.1.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-datajoin-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-configuration-1.6.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-distcp-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-io-2.4.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jersey-server-1.9.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-digester-1.8.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//junit-4.11.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jetty-util-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//hadoop-gridmix.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//gson-2.2.
4.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//jsr305-3.0.0.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-logging-1.1.3.jar:/usr/hdp/2.3.4.0-3485/hadoop-mapreduce/.//commons-beanutils-1.7.0.jar::mysql-connector-java-5.1.17.jar:mysql-connector-java.jar:/usr/hdp/2.3.4.0-3485/tez/tez-yarn-timeline-history-with-acls-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-yarn-timeline-cache-plugin-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-history-parser-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-yarn-timeline-history-with-fs-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-api-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-yarn-timeline-history-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-common-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-examples-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-runtime-library-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-tests-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-runtime-internals-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-mapreduce-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-dag-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-math3-3.1.1.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jsr305-2.0.3.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jetty-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-cli-1.2.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-codec-1.4.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-yarn-server-timeline-plugins-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/guava-11.0.2.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-mapreduce-client-common-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-azure-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.3.4.0-3485/tez/lib/slf4j-api-1.7.5.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-aws-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/servlet-api-2.5.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-collections-3.2.2.jar:/usr/hdp/2.3.4.0-3485/tez/lib/ha
doop-annotations-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-mapreduce-client-core-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-collections4-4.1.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jersey-json-1.9.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-lang-2.6.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jettison-1.3.4.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-io-2.4.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-yarn-server-web-proxy-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jersey-client-1.9.jar:/usr/hdp/2.3.4.0-3485/tez/conf:mysql-connector-java-5.1.17.jar:mysql-connector-java.jar:mysql-connector-java-5.1.17.jar:mysql-connector-java.jar:/usr/hdp/2.3.4.0-3485/tez/tez-yarn-timeline-history-with-acls-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-yarn-timeline-cache-plugin-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-history-parser-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-yarn-timeline-history-with-fs-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-api-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-yarn-timeline-history-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-common-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-examples-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-runtime-library-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-tests-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-runtime-internals-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-mapreduce-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/tez-dag-0.7.0.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-math3-3.1.1.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jsr305-2.0.3.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jetty-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-cli-1.2.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-codec-1.4.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-yarn-server-timeline-plugins-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/guava-11.0.2.jar:/usr/h
dp/2.3.4.0-3485/tez/lib/hadoop-mapreduce-client-common-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-azure-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.3.4.0-3485/tez/lib/slf4j-api-1.7.5.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-aws-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/servlet-api-2.5.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-collections-3.2.2.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-annotations-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-mapreduce-client-core-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-collections4-4.1.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jersey-json-1.9.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-lang-2.6.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jettison-1.3.4.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-io-2.4.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-yarn-server-web-proxy-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jersey-client-1.9.jar:/usr/hdp/2.3.4.0-3485/tez/conf

STARTUP_MSG: build = git@github.com:hortonworks/hadoop.git -r ef0582ca14b8177a3cbb6376807545272677d730; compiled by 'jenkins' on 2015-12-16T03:01Z

STARTUP_MSG: java = 1.8.0_60

************************************************************/

2016-03-01 18:09:44,670 INFO datanode.DataNode (LogAdapter.java:info(45)) - registered UNIX signal handlers for [TERM, HUP, INT]

2016-03-01 18:09:45,580 WARN datanode.DataNode (DataNode.java:checkStorageLocations(2427)) - Invalid dfs.datanode.data.dir /usr1 :

EPERM: Operation not permitted

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.chmodImpl(Native Method)

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.chmod(NativeIO.java:230)

at org.apache.hadoop.fs.RawLocalFileSystem.setPermission(RawLocalFileSystem.java:727)

at org.apache.hadoop.fs.FilterFileSystem.setPermission(FilterFileSystem.java:502)

at org.apache.hadoop.util.DiskChecker.mkdirsWithExistsAndPermissionCheck(DiskChecker.java:140)

at org.apache.hadoop.util.DiskChecker.checkDir(DiskChecker.java:156)

at org.apache.hadoop.hdfs.server.datanode.DataNode$DataNodeDiskChecker.checkDir(DataNode.java:2382)

at org.apache.hadoop.hdfs.server.datanode.DataNode.checkStorageLocations(DataNode.java:2424)

at org.apache.hadoop.hdfs.server.datanode.DataNode.makeInstance(DataNode.java:2406)

at org.apache.hadoop.hdfs.server.datanode.DataNode.instantiateDataNode(DataNode.java:2298)

at org.apache.hadoop.hdfs.server.datanode.DataNode.createDataNode(DataNode.java:2345)

at org.apache.hadoop.hdfs.server.datanode.DataNode.secureMain(DataNode.java:2526)

at org.apache.hadoop.hdfs.server.datanode.DataNode.main(DataNode.java:2550)

2016-03-01 18:09:45,688 INFO impl.MetricsConfig (MetricsConfig.java:loadFirst(112)) - loaded properties from hadoop-metrics2.properties

2016-03-01 18:09:45,897 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:init(61)) - Initializing Timeline metrics sink.

2016-03-01 18:09:45,898 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:init(79)) - Identified hostname = hdp-hue.asotc, serviceName = datanode

2016-03-01 18:09:45,900 INFO timeline.HadoopTimelineMetricsSink (HadoopTimelineMetricsSink.java:init(91)) - Collector Uri: http://hdp-m.asotc:6188/ws/v1/timeline/metrics

2016-03-01 18:09:45,910 INFO impl.MetricsSinkAdapter (MetricsSinkAdapter.java:start(206)) - Sink timeline started

2016-03-01 18:09:45,998 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:startTimer(377)) - Scheduled snapshot period at 60 second(s).

2016-03-01 18:09:45,999 INFO impl.MetricsSystemImpl (MetricsSystemImpl.java:start(192)) - DataNode metrics system started

2016-03-01 18:09:46,006 INFO datanode.BlockScanner (BlockScanner.java:<init>(172)) - Initialized block scanner with targetBytesPerSec 1048576

2016-03-01 18:09:46,008 INFO datanode.DataNode (DataNode.java:<init>(418)) - File descriptor passing is enabled.

2016-03-01 18:09:46,008 INFO datanode.DataNode (DataNode.java:<init>(429)) - Configured hostname is hdp-hue.asotc

2016-03-01 18:09:46,018 INFO datanode.DataNode (DataNode.java:startDataNode(1127)) - Starting DataNode with maxLockedMemory = 0

2016-03-01 18:09:46,049 INFO datanode.DataNode (DataNode.java:initDataXceiver(921)) - Opened streaming server at /0.0.0.0:50010

2016-03-01 18:09:46,052 INFO datanode.DataNode (DataXceiverServer.java:<init>(76)) - Balancing bandwith is 6250000 bytes/s

2016-03-01 18:09:46,054 INFO datanode.DataNode (DataXceiverServer.java:<init>(77)) - Number threads for balancing is 5

2016-03-01 18:09:46,058 INFO datanode.DataNode (DataXceiverServer.java:<init>(76)) - Balancing bandwith is 6250000 bytes/s

2016-03-01 18:09:46,058 INFO datanode.DataNode (DataXceiverServer.java:<init>(77)) - Number threads for balancing is 5

2016-03-01 18:09:46,058 INFO datanode.DataNode (DataNode.java:initDataXceiver(936)) - Listening on UNIX domain socket: /var/lib/hadoop-hdfs/dn_socket

2016-03-01 18:09:46,170 INFO mortbay.log (Slf4jLog.java:info(67)) - Logging to org.slf4j.impl.Log4jLoggerAdapter(org.mortbay.log) via org.mortbay.log.Slf4jLog

2016-03-01 18:09:46,184 INFO server.AuthenticationFilter (AuthenticationFilter.java:constructSecretProvider(294)) - Unable to initialize FileSignerSecretProvider, falling back to use random secrets.

2016-03-01 18:09:46,193 INFO http.HttpRequestLog (HttpRequestLog.java:getRequestLog(80)) - Http request log for http.requests.datanode is not defined

2016-03-01 18:09:46,203 INFO http.HttpServer2 (HttpServer2.java:addGlobalFilter(710)) - Added global filter 'safety' (class=org.apache.hadoop.http.HttpServer2$QuotingInputFilter)

2016-03-01 18:09:46,207 INFO http.HttpServer2 (HttpServer2.java:addFilter(685)) - Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context datanode

2016-03-01 18:09:46,208 INFO http.HttpServer2 (HttpServer2.java:addFilter(693)) - Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context static

2016-03-01 18:09:46,208 INFO http.HttpServer2 (HttpServer2.java:addFilter(693)) - Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context logs

2016-03-01 18:09:46,228 INFO http.HttpServer2 (HttpServer2.java:openListeners(915)) - Jetty bound to port 48603

2016-03-01 18:09:46,229 INFO mortbay.log (Slf4jLog.java:info(67)) - jetty-6.1.26.hwx

2016-03-01 18:09:46,545 INFO mortbay.log (Slf4jLog.java:info(67)) - Started HttpServer2$SelectChannelConnectorWithSafeStartup@localhost:48603

2016-03-01 18:09:46,794 INFO web.DatanodeHttpServer (DatanodeHttpServer.java:start(201)) - Listening HTTP traffic on /0.0.0.0:50075

2016-03-01 18:09:46,984 INFO datanode.DataNode (DataNode.java:startDataNode(1144)) - dnUserName = hdfs

2016-03-01 18:09:46,984 INFO datanode.DataNode (DataNode.java:startDataNode(1145)) - supergroup = hdfs

2016-03-01 18:09:47,088 INFO ipc.CallQueueManager (CallQueueManager.java:<init>(56)) - Using callQueue class java.util.concurrent.LinkedBlockingQueue

2016-03-01 18:09:47,117 INFO ipc.Server (Server.java:run(676)) - Starting Socket Reader #1 for port 8010

2016-03-01 18:09:47,157 INFO datanode.DataNode (DataNode.java:initIpcServer(837)) - Opened IPC server at /0.0.0.0:8010

2016-03-01 18:09:47,179 INFO datanode.DataNode (BlockPoolManager.java:refreshNamenodes(152)) - Refresh request received for nameservices: null

2016-03-01 18:09:47,218 INFO datanode.DataNode (BlockPoolManager.java:doRefreshNamenodes(197)) - Starting BPOfferServices for nameservices: <default>

2016-03-01 18:09:47,234 INFO datanode.DataNode (BPServiceActor.java:run(814)) - Block pool <registering> (Datanode Uuid unassigned) service to hdp-m.asotc/10.0.2.23:8020 starting to offer service

2016-03-01 18:09:47,252 INFO ipc.Server (Server.java:run(906)) - IPC Server Responder: starting

2016-03-01 18:09:47,257 INFO ipc.Server (Server.java:run(746)) - IPC Server listener on 8010: starting

2016-03-01 18:09:47,555 INFO common.Storage (Storage.java:tryLock(715)) - Lock on /hadoop/hdfs/data/in_use.lock acquired by nodename 11122@hdp-hue.asotc

2016-03-01 18:09:47,627 INFO common.Storage (BlockPoolSliceStorage.java:recoverTransitionRead(241)) - Analyzing storage directories for bpid BP-472523401-10.0.2.23-1455577364586

2016-03-01 18:09:47,628 INFO common.Storage (Storage.java:lock(675)) - Locking is disabled for /hadoop/hdfs/data/current/BP-472523401-10.0.2.23-1455577364586

2016-03-01 18:09:47,632 INFO datanode.DataNode (DataNode.java:initStorage(1402)) - Setting up storage: nsid=1566085604;bpid=BP-472523401-10.0.2.23-1455577364586;lv=-56;nsInfo=lv=-63;cid=CID-d723cf5b-ba4a-43d3-afe1-781149930f3e;nsid=1566085604;c=0;bpid=BP-472523401-10.0.2.23-1455577364586;dnuuid=6fcfc3f7-8ddb-403a-83ba-4df61c0704fa

2016-03-01 18:09:47,656 FATAL datanode.DataNode (BPServiceActor.java:run(833)) - Initialization failed for Block pool <registering> (Datanode Uuid 6fcfc3f7-8ddb-403a-83ba-4df61c0704fa) service to hdp-m.asotc/10.0.2.23:8020. Exiting.

org.apache.hadoop.util.DiskChecker$DiskErrorException: Too many failed volumes - current valid volumes: 1, volumes configured: 2, volumes failed: 1, volume failures tolerated: 0

at org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl.<init>(FsDatasetImpl.java:289)

at org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetFactory.newInstance(FsDatasetFactory.java:34)

at org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetFactory.newInstance(FsDatasetFactory.java:30)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initStorage(DataNode.java:1412)

at org.apache.hadoop.hdfs.server.datanode.DataNode.initBlockPool(DataNode.java:1364)

at org.apache.hadoop.hdfs.server.datanode.BPOfferService.verifyAndSetNamespaceInfo(BPOfferService.java:317)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.connectToNNAndHandshake(BPServiceActor.java:224)

at org.apache.hadoop.hdfs.server.datanode.BPServiceActor.run(BPServiceActor.java:821)

at java.lang.Thread.run(Thread.java:745)

2016-03-01 18:09:47,657 WARN datanode.DataNode (BPServiceActor.java:run(854)) - Ending block pool service for: Block pool <registering> (Datanode Uuid 6fcfc3f7-8ddb-403a-83ba-4df61c0704fa) service to hdp-m.asotc/10.0.2.23:8020

2016-03-01 18:09:47,663 INFO datanode.DataNode (BlockPoolManager.java:remove(103)) - Removed Block pool <registering> (Datanode Uuid 6fcfc3f7-8ddb-403a-83ba-4df61c0704fa)

2016-03-01 18:09:49,664 WARN datanode.DataNode (DataNode.java:secureMain(2540)) - Exiting Datanode

2016-03-01 18:09:49,666 INFO util.ExitUtil (ExitUtil.java:terminate(124)) - Exiting with status 0

2016-03-01 18:09:49,671 INFO datanode.DataNode (LogAdapter.java:info(45)) - SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down DataNode at hdp-hue.asotc/10.0.2.28
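
Reading the log above: the DataNode rejected /usr1 because its chmod on that directory failed with EPERM. With volume failures tolerated at 0, one failed volume out of the two configured is enough to make it exit. A chmod normally returns EPERM when the calling user is neither root nor the owner of the directory, so ownership of /usr1 is the first thing to check. Below is a minimal diagnostic sketch, assuming it is run as the same user the DataNode runs as (hdfs in this log). The function name `describe_data_dir` is made up for illustration and is not part of Hadoop.

```python
import os
import pwd
import stat


def describe_data_dir(path):
    """Report owner, permission bits, and whether the current user owns `path`.

    Hypothetical helper for diagnosis only; it does not reproduce Hadoop's
    DiskChecker, it just surfaces the facts that decide whether chmod can work.
    """
    st = os.stat(path)
    try:
        owner = pwd.getpwuid(st.st_uid).pw_name
    except KeyError:
        # The uid has no passwd entry; fall back to the numeric id.
        owner = str(st.st_uid)
    return {
        "owner": owner,
        "mode": oct(stat.S_IMODE(st.st_mode)),
        # A non-root process can only chmod directories it owns.
        "owned_by_current_user": st.st_uid == os.geteuid(),
    }
```

Running `print(describe_data_dir("/usr1"))` as the hdfs user shows the owner and mode. If `owned_by_current_user` comes back `False`, that would be consistent with the EPERM in the log, and changing the owner of the directory to the DataNode user is the likely fix.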

Created 03-02-2016 01:28 AM

@Neeraj Sabharwal @Artem Ervits

Thank you so much, guys.