Support Questions

Hadoop in real life and Practical use and Processing different types of Data

Labels: Apache Hadoop, Apache Pig

Created 05-22-2016 04:14 PM

Hi,

I am new to Hadoop and the Big Data concept. I understand the basics of the technology and the idea behind it. I have been trying to understand it better using the Pig tool, and I am looking for practical, real-life examples of where it can be used. How can we use it with different file types such as .csv, .doc, audio and video files (stream data), and relational databases? All I can find on the web are examples that process .csv files, which give no idea of Hadoop's practical use or of how to process unstructured data.

Can anybody explain this better or point me to some good resources?

Created 05-22-2016 05:56 PM

Hadoop is a distributed filesystem (HDFS) plus distributed compute, so you can store and process any kind of data. A lot of examples focus on CSV files and DB imports because those are the most common use cases.

Below are ways the data types you listed can be stored and processed in Hadoop. You can find worked examples in blogs and public repos.

1. CSV: As you said, you will find a lot of examples, including in our sandbox tutorials.

2. doc: You can put the raw .doc documents into HDFS and use Apache Tika or Tesseract to extract text (including OCR) from them.

3. Audio and video: Again, you can put the raw files into HDFS. How you process them depends on what you want to do with the data; for example, you can extract metadata from them with jobs running on YARN.

4. Relational DB: Take a look at the Sqoop examples showing how to ingest relational database tables into HDFS, then use Hive/HCatalog to access that data.
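To make "distributed storage plus distributed compute" a bit more concrete, here is a minimal sketch of the MapReduce pattern in the style of Hadoop Streaming, where any program that reads lines on stdin and writes tab-separated key/value lines on stdout can act as a mapper or reducer. The CSV layout (`region,amount`) and all function names are hypothetical; `run_local` only simulates the shuffle/sort step in a single process so the logic can be checked without a cluster.

```python
import csv
import io
from itertools import groupby


def mapper(lines):
    """Emit 'key\tvalue' lines, as a Streaming mapper would print to stdout.

    Assumes a hypothetical CSV layout: region,amount (with a header row).
    """
    for row in csv.reader(lines):
        if len(row) < 2 or row[0] == "region":  # skip header and malformed rows
            continue
        yield f"{row[0]}\t{row[1]}"


def reducer(sorted_lines):
    """Sum the values for each key; Streaming feeds reducers key-sorted input."""
    pairs = (line.split("\t", 1) for line in sorted_lines)
    for key, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{key}\t{sum(float(value) for _, value in group)}"


def run_local(text):
    """Simulate map -> shuffle/sort -> reduce in one process, for testing only."""
    mapped = list(mapper(io.StringIO(text)))
    return list(reducer(sorted(mapped)))


print(run_local("region,amount\neast,10\nwest,5\neast,2.5\n"))
```

On a real cluster, the mapper and reducer logic would be wrapped in scripts that read `sys.stdin` and passed to Hadoop Streaming through its `-mapper` and `-reducer` options; the framework, not `run_local`, would then handle splitting the input across nodes and sorting by key.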
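For binary data such as audio, a common pattern is to keep the raw files in HDFS and run a job that reads each file whole and emits one metadata record per file. The sketch below assumes uncompressed WAV input so it can rely only on Python's standard `wave` module; other formats would need a dedicated parser (for example Apache Tika, mentioned above for documents). The function names and the output fields (name, channels, sample rate, duration) are illustrative assumptions.

```python
import io
import wave


def wav_metadata(name, data):
    """Return a tab-separated metadata record for one WAV file's raw bytes."""
    with wave.open(io.BytesIO(data), "rb") as wav:
        channels = wav.getnchannels()
        rate = wav.getframerate()
        frames = wav.getnframes()
    duration = frames / rate if rate else 0.0  # seconds
    return f"{name}\t{channels}\t{rate}\t{duration:.2f}"


def make_test_wav(seconds=1, rate=8000):
    """Build a silent mono 16-bit WAV in memory (test fixture only)."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * rate * seconds)
    return buf.getvalue()


print(wav_metadata("clip.wav", make_test_wav()))
```

Emitting one small text record per file keeps the expensive step (decoding large binaries) inside the distributed job, while the output can be queried afterwards like any other tabular data, for example from Hive or Pig.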

Created on 05-22-2016 05:50 PM - edited 08-18-2019 04:55 AM

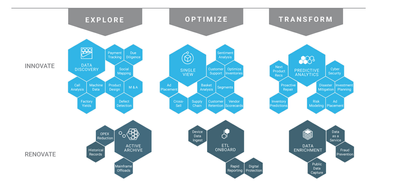

You may be looking for this: http://hortonworks.com/solutions/

You will use Big Data toolsets to innovate and renovate.

To innovate is to "make changes in something established."

Renovation is the "process of improving an outdated structure."