Hadoop security Failed

Labels: Apache Hadoop

Created 12-29-2016 01:22 PM

Hi,

I'm using HDP 2.5 and trying to secure Hadoop and HBase with Kerberos and SSL. For Kerberos I followed the Hortonworks documentation, and for SSL I followed the links below:

https://community.hortonworks.com/articles/52875/enable-https-for-hdfs.html

https://community.hortonworks.com/articles/52876/enable-https-for-yarn-and-mapreduce2.html

I am able to browse https://localhost:8443. When I restart the HDFS service in Ambari, the DataNode starts successfully, but the NameNode fails to start. It generated this log: namenode-log1.txt. The WebHDFS file browser also cannot show files on the FileView tab. WebHDFS reports the error "0.0.0.0:50470 ssl handshake failed".

Created 12-29-2016 05:43 PM

It looks like the NameNode is still in safe mode. The following command returns exit status 1, which means grep did not find 'Safe mode is OFF' in the output:

/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs dfsadmin -fs hdfs://sandbox.hortonworks.com:8020 -safemode get | grep 'Safe mode is OFF'

Please check the NameNode logs to see what the issue could be. You can also open the NameNode UI and check the status of the NameNode at:

http://sandbox.hortonworks.com:50070/dfshealth.html#tab-overview

If the cluster otherwise looks healthy, you can manually take the NameNode out of safe mode as follows and then try again:

/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs dfsadmin -fs hdfs://sandbox.hortonworks.com:8020 -safemode leave
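
The check and the manual exit above can be wrapped in a small script. This is only a sketch: the binary path and NameNode URI are the ones used in this thread, while the function names `safemode_state` and `check_safemode` are hypothetical helpers introduced for illustration. It matches on the "Safe mode is ON" / "Safe mode is OFF" text that `hdfs dfsadmin -safemode get` prints.

```shell
#!/usr/bin/env bash
# Sketch: interpret the output of `hdfs dfsadmin -safemode get`.
# HDFS_BIN and NN_URI are taken from the commands in this thread;
# adjust them for your cluster.
HDFS_BIN="${HDFS_BIN:-/usr/hdp/current/hadoop-hdfs-namenode/bin/hdfs}"
NN_URI="${NN_URI:-hdfs://sandbox.hortonworks.com:8020}"

# safemode_state (hypothetical helper): reads dfsadmin output on stdin
# and prints ON, OFF, or UNKNOWN.
safemode_state() {
  local out
  out="$(cat)"
  case "$out" in
    *"Safe mode is OFF"*) echo "OFF" ;;
    *"Safe mode is ON"*)  echo "ON" ;;
    *)                    echo "UNKNOWN" ;;
  esac
}

# check_safemode (hypothetical helper): queries the NameNode and
# classifies its answer. Nothing runs until this function is called.
check_safemode() {
  "$HDFS_BIN" dfsadmin -fs "$NN_URI" -safemode get 2>&1 | safemode_state
}
```

Calling `check_safemode` prints one of the three states. UNKNOWN usually means the command itself failed (for example, the NameNode is not reachable), in which case the NameNode log is the place to look before forcing `-safemode leave`.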

Created on 12-30-2016 05:44 AM - edited 08-19-2019 05:04 AM

Thanks, it works. Now I get a new error in the log: WebHDFS authorization required. Please find my attachments. I followed the linked URL to enable SSL for HDFS.

But I still cannot browse files in Ambari. Please tell me how to resolve this issue.

namenode-log2.txt, webhdfs-error.txt

Created 12-30-2016 07:46 AM

I would suggest that you vet your settings and configs against what is documented here.

I have not followed the link you mentioned, but from a cursory look it seems fine to me.

Also, please accept the answer if it has helped to resolve the issue.

Created 12-30-2016 07:57 AM

Thanks for the response. I have already applied that configuration in HDFS, but the issue is still not resolved. When I query WebHDFS with the curl command below, it shows an error message:

[root@sandbox java]# curl "https://sandbox.hortonworks.com:50470/v1/webhdfs/v1/?op=LISTSTATUS"

curl: (60) Peer certificate cannot be authenticated with known CA certificates

More details here: https://sandbox.hortonworks.com:50470/ca/cacert.pem

curl performs SSL certificate verification by default, using a "bundle" of Certificate Authority (CA) public keys (CA certs). If the default bundle file isn't adequate, you can specify an alternate file using the --cacert option.

If this HTTPS server uses a certificate signed by a CA represented in the bundle, the certificate verification probably failed due to a problem with the certificate (it might be expired, or the name might not match the domain name in the URL).

If you'd like to turn off curl's verification of the certificate, use the -k (or --insecure) option.

Please tell me how to resolve this issue.
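
The curl error (60) above says the server certificate is not signed by a CA in curl's default bundle, which is expected for a self-signed sandbox certificate. One way to test without `-k` is to save the certificate the NameNode presents and pass it with `--cacert`. The sketch below assumes the host and port from this thread; `CA_FILE` and the function names are hypothetical. It also uses the `/webhdfs/v1/` path that appears in the Ambari curl call later in this thread, rather than `/v1/webhdfs/v1/`.

```shell
#!/usr/bin/env bash
# Sketch: trust the NameNode's certificate explicitly instead of using -k.
# NN_HOST and NN_PORT come from this thread; CA_FILE is a hypothetical path.
NN_HOST="${NN_HOST:-sandbox.hortonworks.com}"
NN_PORT="${NN_PORT:-50470}"
CA_FILE="${CA_FILE:-/tmp/namenode-cert.pem}"

# webhdfs_url PATH OP (hypothetical helper): print the WebHDFS REST URL.
webhdfs_url() {
  local path="${1#/}" op="$2"
  printf 'https://%s:%s/webhdfs/v1/%s?op=%s\n' "$NN_HOST" "$NN_PORT" "$path" "$op"
}

# fetch_server_cert (hypothetical helper): save the certificate that the
# server presents, in PEM form. This is trust-on-first-use, so it is only
# suitable for a sandbox or for debugging.
fetch_server_cert() {
  openssl s_client -connect "${NN_HOST}:${NN_PORT}" -servername "$NN_HOST" \
    </dev/null 2>/dev/null | openssl x509 -outform PEM > "$CA_FILE"
}

# list_root (hypothetical helper): list "/" over HTTPS, verifying the
# server against CA_FILE. `--negotiate -u :` enables SPNEGO and needs a
# valid Kerberos ticket (run kinit first).
list_root() {
  curl -sS --cacert "$CA_FILE" --negotiate -u : "$(webhdfs_url / LISTSTATUS)"
}
```

Run `fetch_server_cert` once, then `list_root`. If curl still fails with a name mismatch, the certificate's CN or subject alternative name probably does not match the hostname in the URL. If the certificate was issued by an internal CA, pass that CA's certificate to `--cacert` instead.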

Created 01-02-2017 04:43 AM

Can anyone please suggest how to resolve this issue?

Created 08-16-2017 09:09 PM

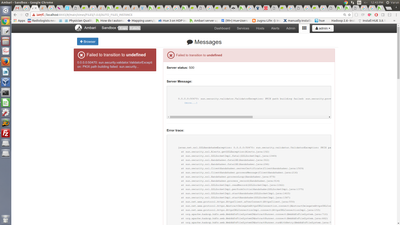

We are facing an issue with Kerberos and HTTPS enabled. We followed the link provided. With HTTPS_ONLY, the NameNode does not start:

File "/usr/lib/python2.6/site-packages/resource_management/libraries/functions/get_user_call_output.py", line 61, in get_user_call_output

raise ExecutionFailed(err_msg, code, files_output[0], files_output[1])

resource_management.core.exceptions.ExecutionFailed: Execution of 'curl -sS -L -w '%{http_code}' -X GET --negotiate -u : -k 'https://xx.xx.xxx.xx:50470/webhdfs/v1/tmp?op=GETFILESTATUS&user.name=hdfs' 1>/tmp/tmpUvZhjh 2>/tmp/tmpF5e7yk' returned 35. curl: (35) Encountered end of file

/var/log/hadoop/hdfs/hadoop-hdfs-namenode-xxxxxxxx.log

WARN mortbay.log (Slf4jLog.java:warn(76)) - javax.net.ssl.SSLHandshakeException: no cipher suites in common.

Also, we noticed that the NameNode is not able to communicate with the DataNode, although the ports are open.

Datanode processes -

Connection failed: [Errno 111] Connection refused to xx:1019

Please advise.

Thanks

AJ

Created 11-08-2017 09:04 PM

Did you ever find a solution for this? I am experiencing almost the exact set of errors as you and have yet to figure out the problem. I've gone through and recreated certs, keystore, and truststore to no avail.
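
For anyone hitting the "no cipher suites in common" handshake error reported earlier in this thread: one commonly reported cause with Java servers is a keystore that contains only trusted certificates and no private key entry (or a key password that does not match the configured one), so it may be worth checking before recreating everything again. The sketch below is an assumption-laden example, not a confirmed fix for this thread: `KEYSTORE` is a hypothetical path (use the value of `ssl.server.keystore.location` from your `ssl-server.xml`), and the function names are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: check whether a Java keystore holds a private key entry.
# KEYSTORE is a hypothetical path; use the keystore your ssl-server.xml
# points to.
KEYSTORE="${KEYSTORE:-/etc/security/serverKeys/keystore.jks}"

# count_private_keys (hypothetical helper): reads `keytool -list` output
# on stdin and prints how many lines mention a PrivateKeyEntry.
# `|| true` keeps the exit status at 0 when the count is 0.
count_private_keys() {
  grep -c 'PrivateKeyEntry' || true
}

# list_keystore (hypothetical helper): list the keystore entries.
# keytool prompts for the keystore password.
list_keystore() {
  keytool -list -keystore "$KEYSTORE"
}
```

Usage: `list_keystore | count_private_keys`. A result of 0 means the server has no key pair to offer during the TLS handshake, and the keystore needs the NameNode's key pair rather than only imported certificates.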