All,

We are facing an issue when inserting data from a managed table into an external table on S3. Whenever new data arrives in the managed table, we need to append it to the external S3 table. Instead of appending, the insert replaces the old data with the newly received data (the old data is overwritten). I came across a similar JIRA issue, but its patch is for Apache Hive (link at the bottom). Since we are on HDP, can anyone help me with this?

Versions:

- HDP 2.5.3
- Hive 1.2.1000.2.5.3.0-37

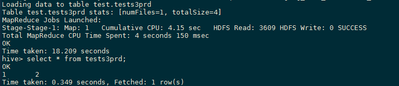

```sql
create external table tests3prd (c1 string, c2 string) location 's3a://testdata/test/tests3prd/';
```

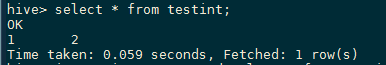

```sql
create table testint (c1 string, c2 string);

insert into testint values (1,2);

-- run this statement twice
insert into tests3prd select * from testint;
```

When I insert the same values (1,2) again, the existing row is overwritten and replaced by the new record instead of a second row being added.

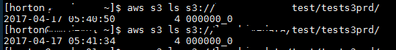

Here are the files in the S3 external location: each time, the `*0000_0` file is overwritten instead of a new copy or a serially numbered file being added.
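For anyone trying to reproduce this, the behavior can be checked from the Hive CLI roughly as follows. This is only a sketch: it assumes the CLI already has working S3A access, and it relies on the CLI's generic `dfs` passthrough rather than anything specific to HDP. The `_copy_1` file name mentioned in the comment is what Hive typically produces when appending, not something observed here.

```sql
-- After running the INSERT INTO twice, two rows of (1,2) would be expected;
-- with the overwrite behavior described above, only one row comes back.
select * from tests3prd;

-- List the files under the external table location. With append semantics,
-- a second file (typically with a "_copy_1" suffix) would be expected next to the first.
dfs -ls s3a://testdata/test/tests3prd/;
```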

PS: JIRA issue: https://issues.apache.org/jira/browse/HIVE-15199