Hive MetaStore exception Exercise 1

- Labels: Apache Hive, Quickstart VM

Created on 03-09-2017 03:40 AM - edited 09-16-2022 04:13 AM

Hi,

I am using the CDH 5.8 QuickStart VM in VirtualBox and I am getting an exception in my first exercise. It is quite frustrating, as I am new to Hadoop and want to learn. I thought the VM would be the safest way to start.

The VM is configured with 1 CPU and 4 GB RAM.

Here is the command:

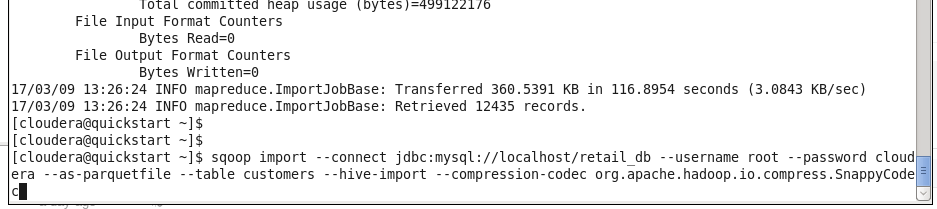

[cloudera@quickstart ~]$ sqoop import-all-tables \

> -m 1 \

> --connect jdbc:mysql://quickstart:3306/retail_db \

> --username=retail_dba \

> --password=cloudera \

> --compression-codec=snappy \

> --as-parquetfile \

> --warehouse-dir=/user/hive/warehouse \

> --hive-import

And the exception:

17/03/09 03:27:13 INFO hive.HiveManagedMetadataProvider: Creating a managed Hive table named: categories

17/03/09 03:27:13 INFO hive.metastore: Closed a connection to metastore, current connections: 0

17/03/09 03:27:13 INFO hive.metastore: Trying to connect to metastore with URI thrift://127.0.0.1:9083

17/03/09 03:27:13 INFO hive.metastore: Opened a connection to metastore, current connections: 1

17/03/09 03:27:13 INFO hive.metastore: Connected to metastore.

17/03/09 03:27:13 INFO hive.metastore: Closed a connection to metastore, current connections: 0

17/03/09 03:27:13 INFO hive.metastore: Trying to connect to metastore with URI thrift://127.0.0.1:9083

17/03/09 03:27:13 INFO hive.metastore: Opened a connection to metastore, current connections: 1

17/03/09 03:27:13 INFO hive.metastore: Connected to metastore.

17/03/09 03:27:13 ERROR sqoop.Sqoop: Got exception running Sqoop: org.kitesdk.data.DatasetOperationException: Hive MetaStore exception

org.kitesdk.data.DatasetOperationException: Hive MetaStore exception

at org.kitesdk.data.spi.hive.MetaStoreUtil.createTable(MetaStoreUtil.java:252)

at org.kitesdk.data.spi.hive.HiveManagedMetadataProvider.create(HiveManagedMetadataProvider.java:87)

at org.kitesdk.data.spi.hive.HiveManagedDatasetRepository.create(HiveManagedDatasetRepository.java:81)

at org.kitesdk.data.Datasets.create(Datasets.java:239)

at org.kitesdk.data.Datasets.create(Datasets.java:307)

at org.apache.sqoop.mapreduce.ParquetJob.createDataset(ParquetJob.java:141)

at org.apache.sqoop.mapreduce.ParquetJob.configureImportJob(ParquetJob.java:119)

at org.apache.sqoop.mapreduce.DataDrivenImportJob.configureMapper(DataDrivenImportJob.java:130)

at org.apache.sqoop.mapreduce.ImportJobBase.runImport(ImportJobBase.java:267)

at org.apache.sqoop.manager.SqlManager.importTable(SqlManager.java:692)

at org.apache.sqoop.manager.MySQLManager.importTable(MySQLManager.java:127)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:507)

at org.apache.sqoop.tool.ImportAllTablesTool.run(ImportAllTablesTool.java:111)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

Caused by: MetaException(message:Got exception: org.apache.hadoop.ipc.RemoteException Cannot create directory /user/hive/warehouse/categories. Name node is in safe mode.

The reported blocks 908 needs additional 4 blocks to reach the threshold 0.9990 of total blocks 912.

The number of live datanodes 1 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1446)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirsInt(FSNamesystem.java:4318)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:4293)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:869)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.mkdirs(AuthorizationProviderProxyClientProtocol.java:323)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:608)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1693)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$create_table_with_environment_context_result$create_table_with_environment_context_resultStandardScheme.read(ThriftHiveMetastore.java:30072)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$create_table_with_environment_context_result$create_table_with_environment_context_resultStandardScheme.read(ThriftHiveMetastore.java:30040)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$create_table_with_environment_context_result.read(ThriftHiveMetastore.java:29966)

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:86)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_create_table_with_environment_context(ThriftHiveMetastore.java:1079)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.create_table_with_environment_context(ThriftHiveMetastore.java:1065)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.create_table_with_environment_context(HiveMetaStoreClient.java:2077)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:674)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createTable(HiveMetaStoreClient.java:662)

at org.kitesdk.data.spi.hive.MetaStoreUtil$4.call(MetaStoreUtil.java:230)

at org.kitesdk.data.spi.hive.MetaStoreUtil$4.call(MetaStoreUtil.java:227)

at org.kitesdk.data.spi.hive.MetaStoreUtil.doWithRetry(MetaStoreUtil.java:70)

at org.kitesdk.data.spi.hive.MetaStoreUtil.createTable(MetaStoreUtil.java:242)

... 18 more

Thanks.

Created 03-09-2017 04:13 AM

Try with the MySQL username root.

Created 03-09-2017 04:22 AM

It did not help. I still get the same error.

Created on 03-09-2017 05:15 AM - edited 03-09-2017 05:58 AM

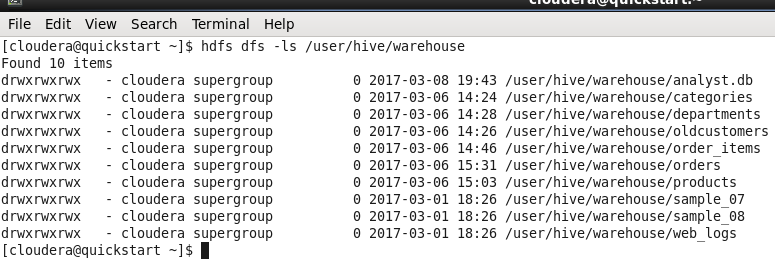

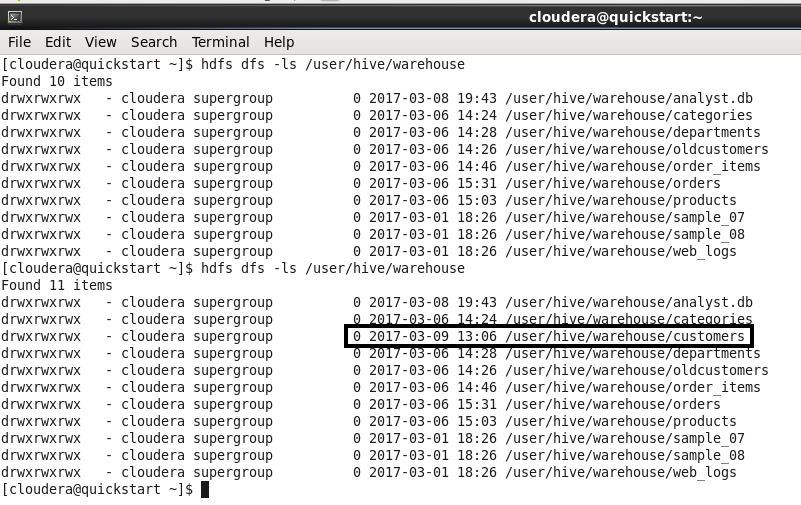

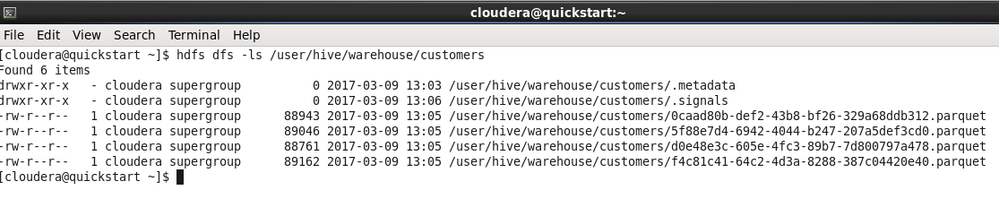

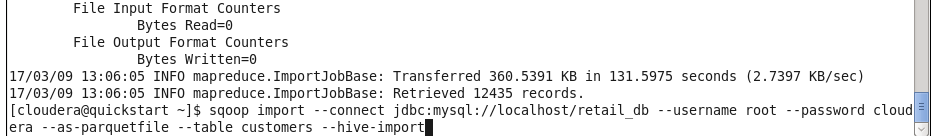

Hi, the import seems to work fine on my side. See the attached screenshots for the output.

To enable Snappy compression, pass the full codec class name:

--compression-codec org.apache.hadoop.io.compress.SnappyCodec

Also, looking at your stack trace, the actual cause is that the NameNode is in safe mode, so Hive cannot create the table directory:

Caused by: MetaException(message:Got exception: org.apache.hadoop.ipc.RemoteException Cannot create directory /user/hive/warehouse/categories. Name node is in safe mode.

Restart the NameNode with:

sudo service hadoop-hdfs-namenode restart
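
If a restart is not convenient, it may be enough to check the safe mode state directly. The log above reports 908 of 912 blocks (about 99.56%), which is below the 0.9990 threshold, so the NameNode stays read-only. The following is only a sketch: it assumes the QuickStart VM has an `hdfs` superuser account and that you have `sudo` rights, and the helper name `safemode_is_on` is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: inspect HDFS safe mode before re-running the Sqoop import.
# Assumption: an `hdfs` superuser exists and the current user may use sudo.

# Hypothetical helper: succeeds when the status text printed by
# `hdfs dfsadmin -safemode get` reports that safe mode is on.
safemode_is_on() {
  case "$1" in
    *"Safe mode is ON"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Only talk to the cluster when the hdfs client is actually installed.
if command -v hdfs >/dev/null 2>&1; then
  status="$(sudo -u hdfs hdfs dfsadmin -safemode get)"
  echo "$status"

  if safemode_is_on "$status"; then
    # Show the end of the fsck report to see whether blocks are missing or corrupt.
    sudo -u hdfs hdfs fsck / | tail -n 20

    # Force the NameNode out of safe mode. This is acceptable on a sandbox VM;
    # on a real cluster, investigate the missing blocks first.
    sudo -u hdfs hdfs dfsadmin -safemode leave
  fi
fi
```

Once `hdfs dfsadmin -safemode get` reports that safe mode is off, re-run the same `sqoop import-all-tables` command.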