Cloudera Community: Support Questions

Hive alerts on ambari UI

Labels:

- Apache Ambari
- Apache Hive

Created on 06-13-2018 09:30 AM - edited 08-17-2019 07:13 PM


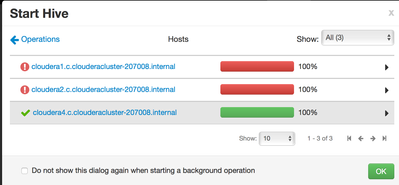

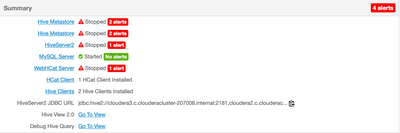

I can't start Hive from the Ambari UI. I created a cluster with 4 nodes: 1 master and 3 workers.

The master node is named cloudera1.

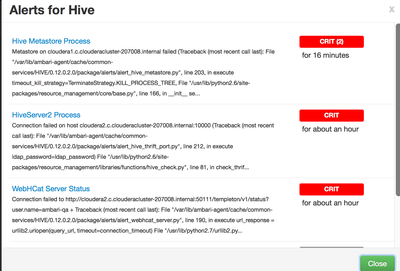

It shows the following alert.

@sheltong, the Hive Metastore shows the following error:

```
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive_metastore.py", line 203, in <module>
    HiveMetastore().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 375, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive_metastore.py", line 54, in start
    self.configure(env)
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 120, in locking_configure
    original_configure(obj, *args, **kw)
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive_metastore.py", line 72, in configure
    hive(name = 'metastore')
  File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk
    return fn(*args, **kwargs)
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive.py", line 310, in hive
    jdbc_connector(params.hive_jdbc_target, params.hive_previous_jdbc_jar)
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive.py", line 527, in jdbc_connector
    content = DownloadSource(params.driver_curl_source))
  File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 166, in __init__
    self.env.run()
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run
    self.run_action(resource, action)
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action
    provider_action()
  File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 123, in action_create
    content = self._get_content()
  File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 160, in _get_content
    return content()
  File "/usr/lib/python2.6/site-packages/resource_management/core/source.py", line 52, in __call__
    return self.get_content()
  File "/usr/lib/python2.6/site-packages/resource_management/core/source.py", line 197, in get_content
    raise Fail("Failed to download file from {0} due to HTTP error: {1}".format(self.url, str(ex)))
resource_management.core.exceptions.Fail: Failed to download file from http://cloudera1.c.clouderacluster-207008.internal:8080/resources//mysql-connector-java.jar due to HTTP error: HTTP Error 404: Not Found
```

stdout: /var/lib/ambari-agent/data/output-1926.txt

```
2018-06-13 09:05:49,631 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=2.6.5.0-292 -> 2.6.5.0-292
2018-06-13 09:05:49,644 - Using hadoop conf dir: /usr/hdp/2.6.5.0-292/hadoop/conf
2018-06-13 09:05:49,778 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=2.6.5.0-292 -> 2.6.5.0-292
2018-06-13 09:05:49,783 - Using hadoop conf dir: /usr/hdp/2.6.5.0-292/hadoop/conf
2018-06-13 09:05:49,784 - Group['hdfs'] {}
2018-06-13 09:05:49,785 - Group['zeppelin'] {}
2018-06-13 09:05:49,785 - Group['hadoop'] {}
2018-06-13 09:05:49,785 - Group['users'] {}
2018-06-13 09:05:49,785 - Group['knox'] {}
2018-06-13 09:05:49,786 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,787 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,787 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,788 - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,788 - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users'], 'uid': None}
2018-06-13 09:05:49,789 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,790 - User['falcon'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users'], 'uid': None}
2018-06-13 09:05:49,790 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users'], 'uid': None}
2018-06-13 09:05:49,791 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'zeppelin', u'hadoop'], 'uid': None}
2018-06-13 09:05:49,792 - User['mahout'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,792 - User['druid'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,793 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users'], 'uid': None}
2018-06-13 09:05:49,793 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,794 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,795 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs'], 'uid': None}
2018-06-13 09:05:49,795 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,796 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,796 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,797 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,798 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}
2018-06-13 09:05:49,798 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2018-06-13 09:05:49,799 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}
2018-06-13 09:05:49,804 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if
2018-06-13 09:05:49,805 - Group['hdfs'] {}
2018-06-13 09:05:49,805 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', u'hdfs']}
2018-06-13 09:05:49,806 - FS Type:
2018-06-13 09:05:49,806 - Directory['/etc/hadoop'] {'mode': 0755}
2018-06-13 09:05:49,817 - File['/usr/hdp/2.6.5.0-292/hadoop/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}
2018-06-13 09:05:49,818 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}
2018-06-13 09:05:49,837 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}
2018-06-13 09:05:49,842 - Skipping Execute[('setenforce', '0')] due to not_if
2018-06-13 09:05:49,842 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}
2018-06-13 09:05:49,844 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}
2018-06-13 09:05:49,844 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'create_parents': True, 'cd_access': 'a'}
2018-06-13 09:05:49,847 - File['/usr/hdp/2.6.5.0-292/hadoop/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}
2018-06-13 09:05:49,849 - File['/usr/hdp/2.6.5.0-292/hadoop/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}
2018-06-13 09:05:49,854 - File['/usr/hdp/2.6.5.0-292/hadoop/conf/log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}
2018-06-13 09:05:49,861 - File['/usr/hdp/2.6.5.0-292/hadoop/conf/hadoop-metrics2.properties'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}
2018-06-13 09:05:49,862 - File['/usr/hdp/2.6.5.0-292/hadoop/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}
2018-06-13 09:05:49,862 - File['/usr/hdp/2.6.5.0-292/hadoop/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}
2018-06-13 09:05:49,866 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop', 'mode': 0644}
2018-06-13 09:05:49,870 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}
2018-06-13 09:05:50,176 - MariaDB RedHat Support: false
2018-06-13 09:05:50,179 - Using hadoop conf dir: /usr/hdp/2.6.5.0-292/hadoop/conf
2018-06-13 09:05:50,190 - call['ambari-python-wrap /usr/bin/hdp-select status hive-server2'] {'timeout': 20}
2018-06-13 09:05:50,228 - call returned (0, 'hive-server2 - 2.6.5.0-292')
2018-06-13 09:05:50,229 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=2.6.5.0-292 -> 2.6.5.0-292
2018-06-13 09:05:50,272 - File['/var/lib/ambari-agent/cred/lib/CredentialUtil.jar'] {'content': DownloadSource('http://cloudera1.c.clouderacluster-207008.internal:8080/resources/CredentialUtil.jar'), 'mode': 0755}
2018-06-13 09:05:50,273 - Not downloading the file from http://cloudera1.c.clouderacluster-207008.internal:8080/resources/CredentialUtil.jar, because /var/lib/ambari-agent/tmp/CredentialUtil.jar already exists
2018-06-13 09:05:50,274 - checked_call[('/usr/jdk64/jdk1.8.0_112/bin/java', '-cp', u'/var/lib/ambari-agent/cred/lib/*', 'org.apache.ambari.server.credentialapi.CredentialUtil', 'get', 'javax.jdo.option.ConnectionPassword', '-provider', u'jceks://file/var/lib/ambari-agent/cred/conf/hive_metastore/hive-site.jceks')] {}
2018-06-13 09:05:51,847 - checked_call returned (0, 'SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".\nSLF4J: Defaulting to no-operation (NOP) logger implementation\nSLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.\nJun 13, 2018 9:05:51 AM org.apache.hadoop.util.NativeCodeLoader <clinit>\nWARNING: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable\nadmin')
2018-06-13 09:05:51,863 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=2.6.5.0-292 -> 2.6.5.0-292
2018-06-13 09:05:51,867 - Directory['/etc/hive'] {'mode': 0755}
2018-06-13 09:05:51,867 - Directories to fill with configs: [u'/usr/hdp/current/hive-metastore/conf', u'/usr/hdp/current/hive-metastore/conf/conf.server']
2018-06-13 09:05:51,868 - Directory['/etc/hive/2.6.5.0-292/0'] {'owner': 'hive', 'group': 'hadoop', 'create_parents': True, 'mode': 0755}
2018-06-13 09:05:51,869 - XmlConfig['mapred-site.xml'] {'group': 'hadoop', 'conf_dir': '/etc/hive/2.6.5.0-292/0', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}
2018-06-13 09:05:51,882 - Generating config: /etc/hive/2.6.5.0-292/0/mapred-site.xml
2018-06-13 09:05:51,882 - File['/etc/hive/2.6.5.0-292/0/mapred-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}
2018-06-13 09:05:51,932 - File['/etc/hive/2.6.5.0-292/0/hive-default.xml.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0644}
2018-06-13 09:05:51,933 - File['/etc/hive/2.6.5.0-292/0/hive-env.sh.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0644}
2018-06-13 09:05:51,935 - File['/etc/hive/2.6.5.0-292/0/hive-exec-log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}
2018-06-13 09:05:51,939 - File['/etc/hive/2.6.5.0-292/0/hive-log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}
2018-06-13 09:05:51,940 - File['/etc/hive/2.6.5.0-292/0/parquet-logging.properties'] {'content': ..., 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}
2018-06-13 09:05:51,940 - Directory['/etc/hive/2.6.5.0-292/0/conf.server'] {'owner': 'hive', 'group': 'hadoop', 'create_parents': True, 'mode': 0700}
2018-06-13 09:05:51,941 - XmlConfig['mapred-site.xml'] {'group': 'hadoop', 'conf_dir': '/etc/hive/2.6.5.0-292/0/conf.server', 'mode': 0600, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}
2018-06-13 09:05:51,951 - Generating config: /etc/hive/2.6.5.0-292/0/conf.server/mapred-site.xml
2018-06-13 09:05:51,951 - File['/etc/hive/2.6.5.0-292/0/conf.server/mapred-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0600, 'encoding': 'UTF-8'}
2018-06-13 09:05:51,999 - File['/etc/hive/2.6.5.0-292/0/conf.server/hive-default.xml.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0600}
2018-06-13 09:05:52,000 - File['/etc/hive/2.6.5.0-292/0/conf.server/hive-env.sh.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0600}
2018-06-13 09:05:52,002 - File['/etc/hive/2.6.5.0-292/0/conf.server/hive-exec-log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0600}
2018-06-13 09:05:52,006 - File['/etc/hive/2.6.5.0-292/0/conf.server/hive-log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0600}
2018-06-13 09:05:52,007 - File['/etc/hive/2.6.5.0-292/0/conf.server/parquet-logging.properties'] {'content': ..., 'owner': 'hive', 'group': 'hadoop', 'mode': 0600}
2018-06-13 09:05:52,007 - File['/usr/hdp/current/hive-metastore/conf/conf.server/hive-site.jceks'] {'content': StaticFile('/var/lib/ambari-agent/cred/conf/hive_metastore/hive-site.jceks'), 'owner': 'hive', 'group': 'hadoop', 'mode': 0640}
2018-06-13 09:05:52,007 - Writing File['/usr/hdp/current/hive-metastore/conf/conf.server/hive-site.jceks'] because contents don't match
2018-06-13 09:05:52,008 - XmlConfig['hive-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hive-metastore/conf/conf.server', 'mode': 0600, 'configuration_attributes': {u'hidden': {u'javax.jdo.option.ConnectionPassword': u'HIVE_CLIENT,WEBHCAT_SERVER,HCAT,CONFIG_DOWNLOAD'}}, 'owner': 'hive', 'configurations': ...}
2018-06-13 09:05:52,017 - Generating config: /usr/hdp/current/hive-metastore/conf/conf.server/hive-site.xml
2018-06-13 09:05:52,019 - File['/usr/hdp/current/hive-metastore/conf/conf.server/hive-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0600, 'encoding': 'UTF-8'}
2018-06-13 09:05:52,172 - XmlConfig['hivemetastore-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hive-metastore/conf/conf.server', 'mode': 0600, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': {u'hive.service.metrics.hadoop2.component': u'hivemetastore', u'hive.metastore.metrics.enabled': u'true', u'hive.service.metrics.reporter': u'HADOOP2'}}
2018-06-13 09:05:52,182 - Generating config: /usr/hdp/current/hive-metastore/conf/conf.server/hivemetastore-site.xml
2018-06-13 09:05:52,182 - File['/usr/hdp/current/hive-metastore/conf/conf.server/hivemetastore-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0600, 'encoding': 'UTF-8'}
2018-06-13 09:05:52,190 - File['/usr/hdp/current/hive-metastore/conf/conf.server/hive-env.sh'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0600}
2018-06-13 09:05:52,191 - Directory['/etc/security/limits.d'] {'owner': 'root', 'create_parents': True, 'group': 'root'}
2018-06-13 09:05:52,195 - File['/etc/security/limits.d/hive.conf'] {'content': Template('hive.conf.j2'), 'owner': 'root', 'group': 'root', 'mode': 0644}
2018-06-13 09:05:52,196 - Execute[('rm', '-f', u'/usr/hdp/current/hive-metastore/lib/ojdbc6.jar')] {'path': ['/bin', '/usr/bin/'], 'sudo': True}
2018-06-13 09:05:52,214 - File['/var/lib/ambari-agent/tmp/mysql-connector-java.jar'] {'content': DownloadSource('http://cloudera1.c.clouderacluster-207008.internal:8080/resources//mysql-connector-java.jar')}
2018-06-13 09:05:52,215 - Downloading the file from http://cloudera1.c.clouderacluster-207008.internal:8080/resources//mysql-connector-java.jar

Command failed after 1 tries
```

Created 06-13-2018 09:33 AM


From the error, it looks like the MySQL JDBC driver for Hive has not been set up properly on the Ambari Server:

```
raise Fail("Failed to download file from {0} due to HTTP error: {1}".format(self.url, str(ex)))
resource_management.core.exceptions.Fail: Failed to download file from http://xxxxxx:8080/resources//mysql-connector-java.jar due to HTTP error: HTTP Error 404
```
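To confirm this diagnosis, you can request the same URL the agent tried to download (for example from one of the worker nodes) and look at the HTTP status. Below is a minimal Python 3 sketch; the function name `check_resource` is hypothetical, and since the agent scripts in the traceback run under Python 2.6, it assumes a separate Python 3 interpreter is available. A 404 most likely means the JAR is not yet in the Ambari Server's resources directory; a 200 means the agents should be able to fetch it.

```python
import urllib.error
import urllib.request


def check_resource(url, timeout=10.0):
    """Return the HTTP status code of a GET request to url (e.g. 200 or 404)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses; .code holds the status.
        # Connection failures (URLError) are not caught and reach the caller.
        return err.code
```

For example, `check_resource("http://cloudera1.c.clouderacluster-207008.internal:8080/resources//mysql-connector-java.jar")` should return 404 until the driver is registered with Ambari as described below.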

Please make sure that you have already done the following on the Ambari Server host:

```
# yum install mysql-connector-java -y
```

(OR)

If you are instead downloading the mysql-connector-java JAR from a tar.gz archive, check the following locations and create a symlink that points to your JAR.

.

You should then see a symlink like the following, for example:

```
# ls -l /usr/share/java/mysql-connector-java.jar
lrwxrwxrwx 1 root root 31 Apr 19 2017 /usr/share/java/mysql-connector-java.jar -> mysql-connector-java-5.1.17.jar
```
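If you want to script the symlink step, here is a minimal Python sketch. It assumes the extracted JAR has already been copied into /usr/share/java; the helper name `link_connector` is hypothetical, and the versioned file name comes from the example listing. It is roughly what `ln -sf mysql-connector-java-5.1.17.jar mysql-connector-java.jar` does inside /usr/share/java, except that it refuses to overwrite a regular file.

```python
import os


def link_connector(jar_path, link_dir="/usr/share/java",
                   link_name="mysql-connector-java.jar"):
    """Point link_dir/link_name at jar_path, replacing an existing symlink."""
    link_path = os.path.join(link_dir, link_name)
    # Relative target, so a JAR in the same directory yields a link like
    # mysql-connector-java.jar -> mysql-connector-java-5.1.17.jar
    target = os.path.relpath(jar_path, link_dir)
    if os.path.islink(link_path):
        os.remove(link_path)  # replace a stale link from an older version
    elif os.path.exists(link_path):
        # Do not silently clobber a real file that happens to use this name
        raise FileExistsError(link_path + " exists and is not a symlink")
    os.symlink(target, link_path)
    return link_path
```

Usage, as root: `link_connector("/usr/share/java/mysql-connector-java-5.1.17.jar")`.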

https://docs.hortonworks.com/HDPDocuments/Ambari-2.6.1.0/bk_ambari-administration/content/using_hive...

Then register the JAR with Ambari so that it knows where to find it. After running the setup command, the JAR should be present in the Ambari Server resources directory, which is where the agents download it from:

```
# ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java.jar
# ls -l /var/lib/ambari-server/resources/mysql-connector-java.jar
-rw-r--r-- 1 root root 819803 Sep 28 19:52 /var/lib/ambari-server/resources/mysql-connector-java.jar
```
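As a quick sanity check after the setup command, you can confirm that the file Ambari serves is a readable archive that actually contains a MySQL driver class. This is a minimal Python sketch; the function name `check_server_jar` is hypothetical, and the two class paths are an assumption based on Connector/J packaging (5.1.x ships `com.mysql.jdbc.Driver`, 8.x ships `com.mysql.cj.jdbc.Driver`).

```python
import os
import zipfile

# Assumed driver class entries; which one exists depends on the Connector/J version
DRIVER_ENTRIES = ("com/mysql/jdbc/Driver.class", "com/mysql/cj/jdbc/Driver.class")


def check_server_jar(resources_dir="/var/lib/ambari-server/resources",
                     jar_name="mysql-connector-java.jar"):
    """Return a list of problems with the JAR Ambari serves (empty list if OK)."""
    path = os.path.join(resources_dir, jar_name)
    if not os.path.isfile(path):  # follows symlinks
        return [path + " is missing; agents will likely get HTTP 404"]
    if not zipfile.is_zipfile(path):
        return [path + " is not a valid JAR/ZIP archive"]
    with zipfile.ZipFile(path) as jar:
        names = set(jar.namelist())
    if not any(entry in names for entry in DRIVER_ENTRIES):
        return [path + " does not contain a known MySQL JDBC driver class"]
    return []
```

An empty list means the JAR looks usable; then restart the Hive Metastore from the Ambari UI.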

Reference HCC Thread:

.
