Hive services check fails

Labels: Apache Ambari, Apache Hive

Created 04-13-2018 09:57 AM

When I start HiveServer2 in Ambari, I get the following error:

Connection failed on host eureambarislave1.local.eurecat.org:10000 (Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/alerts/alert_hive_thrift_port.py", line 212, in execute
    ldap_password=ldap_password)
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/functions/hive_check.py", line 81, in check_thrift_port_sa..

The Hive service check produces the following output:

- User Home Check failed with the message: File does not exist: /user/admin

- Hive check failed with the message:

org.apache.ambari.view.hive20.internal.ConnectionException: Cannot open a hive connection with connect string jdbc:hive2://eureambarimaster1.local.eurecat.org:2181,eureambarislave1.local.eurecat.org:2181,eureambarislave2.local.eurecat.org:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2;hive.server2.proxy.user=admin
org.apache.ambari.view.hive20.internal.ConnectionException: Cannot open a hive connection with connect string jdbc:hive2://eureambarimaster1.local.eurecat.org:2181,eureambarislave1.local.eurecat.org:2181,eureambarislave2.local.eurecat.org:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2;hive.server2.proxy.user=admin
  at org.apache.ambari.view.hive20.internal.HiveConnectionWrapper.connect(HiveConnectionWrapper.java:89)
  at org.apache.ambari.view.hive20.resources.browser.ConnectionService.attemptHiveConnection(ConnectionService.java:109)

Created 04-13-2018 10:00 AM

You need to create an HDFS home directory for the user who is logged in to the Ambari UI and running the Hive View queries.

If you are logged in to the Ambari UI as user "admin", run the following to create the "/user/admin" home directory on HDFS:

# su - hdfs -c "hdfs dfs -mkdir /user/admin"
# su - hdfs -c "hdfs dfs -chown admin:hadoop /user/admin"
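If the directory may already exist, or several Ambari users need home directories, the two commands above can be wrapped in a small helper. This is only a sketch, not an Ambari-provided tool: the function names `run_as_hdfs` and `create_hdfs_home` and the variables `DRY_RUN`, `HDFS_SUPERUSER` and `OWNER_GROUP` are made up for illustration. It uses `-mkdir -p` so that rerunning it does not fail on an existing directory.

```shell
#!/bin/sh
# Sketch: create /user/<name> on HDFS and hand ownership to that user.
# HDFS_SUPERUSER, OWNER_GROUP and DRY_RUN are illustrative variables,
# not Ambari settings.
HDFS_SUPERUSER="${HDFS_SUPERUSER:-hdfs}"
OWNER_GROUP="${OWNER_GROUP:-hadoop}"

# Run one command as the HDFS superuser, or only print it when DRY_RUN=1.
run_as_hdfs() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf 'su - %s -c "%s"\n' "$HDFS_SUPERUSER" "$1"
    else
        su - "$HDFS_SUPERUSER" -c "$1"
    fi
}

# -mkdir -p succeeds even if the directory already exists.
create_hdfs_home() {
    run_as_hdfs "hdfs dfs -mkdir -p /user/$1" &&
    run_as_hdfs "hdfs dfs -chown $1:$OWNER_GROUP /user/$1"
}
```

After sourcing the file as root, `create_hdfs_home admin` runs both steps. Setting `DRY_RUN=1` first prints the commands instead of running them, which is a cheap way to review them beforehand.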

Created 04-13-2018 10:03 AM

For the "Hive check failed with the message:" issue, please make sure that the Hive services are up and running. If needed, restart the Hive service.

Then, before accessing the Hive View again, confirm that the Hive services are healthy by running a Hive service check from the Ambari UI:

Ambari UI --> Hive --> "Service Actions" (drop-down) --> Run Hive Service Check

Sometimes the Ambari Hive connection pool holds stale connections, so if restarting the Hive service does not help, try restarting the Ambari server once.
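To check outside Ambari whether the connect string used by the Hive View works at all, the same ZooKeeper discovery URL can be passed to Beeline. A sketch under a few assumptions: the helper name `zk_jdbc_url` is made up here, the ZooKeeper port 2181 and the `hiveserver2` namespace are taken from the error message in the question, and `beeline` is assumed to be on the PATH of a cluster node.

```shell
#!/bin/sh
# Sketch: build a HiveServer2 JDBC URL that uses ZooKeeper service discovery.
# zk_jdbc_url is an illustrative helper; port 2181 is taken from the error
# message in this thread and may differ on other clusters.
zk_jdbc_url() {
    namespace="$1"
    shift
    quorum=""
    for host in "$@"; do
        # Join host:port pairs with commas, without a leading comma.
        quorum="${quorum:+$quorum,}$host:2181"
    done
    echo "jdbc:hive2://$quorum/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=$namespace"
}

# Usage on a cluster node (the host names are the ones from the question):
#   beeline -u "$(zk_jdbc_url hiveserver2 \
#       eureambarimaster1.local.eurecat.org \
#       eureambarislave1.local.eurecat.org \
#       eureambarislave2.local.eurecat.org)" -n admin -e "show databases;"
```

If Beeline cannot connect either, the problem lies with HiveServer2 or its ZooKeeper registration, not with the Ambari view.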

Created 04-13-2018 10:23 AM

It resolved the issue with /user/admin, but I still get the error with HiveServer2:

Connection failed on host eureambarislave1.local.eurecat.org:10000 (Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/alerts/alert_hive_thrift_port.py", line 212, in execute
    ldap_password=ldap_password)
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/functions/hive_check.py", line 81, in check_thrift_port_sa..

Created 04-13-2018 10:42 AM

Can you please share the complete stack trace of the "error with HiveServer2"?

Also, can you please check on the HiveServer2 host whether the Hive Thrift port 10000 is actually open?

# netstat -tnlpa | grep 10000
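Note that `grep 10000` also matches lines where 10000 appears as a remote port, as a PID, or inside a longer number. A stricter check, sketched here with a made-up helper name `port_is_listening`, keeps only sockets in the LISTEN state whose local port is exactly the one asked for. It assumes the Linux net-tools `netstat -tnl` column layout (local address in column 4, state in column 6).

```shell
#!/bin/sh
# Reads `netstat -tnl` style output on stdin and succeeds only if some socket
# in LISTEN state is bound to the given local port.
# port_is_listening is an illustrative helper, not part of Ambari or Hive.
port_is_listening() {
    awk -v port="$1" '
        $6 == "LISTEN" {
            # The port is the last colon-separated piece of the local address,
            # which covers both 0.0.0.0:10000 and :::10000.
            n = split($4, parts, ":")
            if (parts[n] == port) found = 1
        }
        END { exit(found ? 0 : 1) }
    '
}

# Usage on the HiveServer2 host:
#   netstat -tnl | port_is_listening 10000 && echo "port 10000 is listening"
```

If nothing is listening, HiveServer2 is not up on that host (or is bound to a different port), and its log is the next place to look.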

Can you also please share the HiveServer2 logs so that we can see whether the Hive services are actually running without errors?

Also, if possible, can you check whether you are able to start the Hive components manually? The commands are available in the documentation; for example:

# su $HIVE_USER
# nohup /usr/hdp/current/hive-server2/bin/hiveserver2 -hiveconf hive.metastore.uris=" " >>/tmp/hiveserver2HD.out 2>> /tmp/hiveserver2HD.log &

Created 04-13-2018 10:07 AM

For the failed HDFS user home check, please refer to the following link for more detailed instructions: https://docs.hortonworks.com/HDPDocuments/Ambari-2.6.0.0/bk_ambari-views/content/setup_HDFS_user_dir...

For the File/Hive View we also need to set up proxy users, if they are not set up already. In recent versions of Ambari this is usually done by default, but it is still good to check once:

Services > HDFS > Configs > Advanced > Custom core-site > Add Property

hadoop.proxyuser.root.groups=*
hadoop.proxyuser.root.hosts=*

Created on 05-14-2018 05:17 PM - edited 08-17-2019 08:38 PM

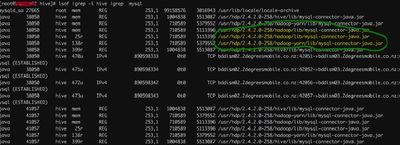

For me the old mysql-connector-java.jar was the problem, even though I had replaced it in /usr/share/java.

As a workaround I had to manually delete the jar as shown in the picture and restart the Hive Metastore and all Hive services.

Then the service check went through.
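Since an old copy of the connector can survive in more than one place, it can help to list every copy with a checksum before deleting anything. A minimal sketch with a made-up helper name `list_connector_jars`; which directories to search is an assumption, and the picture above, not this script, shows which jar was actually deleted.

```shell
#!/bin/sh
# Sketch: list every mysql-connector-java*.jar under the given directories
# together with a checksum, so copies that differ from each other stand out.
# list_connector_jars is an illustrative helper name.
list_connector_jars() {
    for dir in "$@"; do
        [ -d "$dir" ] || continue
        find "$dir" -name 'mysql-connector-java*.jar' 2>/dev/null
    done | while IFS= read -r jar; do
        # Skip broken symlinks and anything that is not a regular file.
        [ -f "$jar" ] || continue
        sum=$(cksum < "$jar" | awk '{ print $1 }')
        printf '%s  %s\n' "$sum" "$jar"
    done
}

# Usage (the directory list is a guess; adjust it to your installation):
#   list_connector_jars /usr/share/java /usr/hdp /var/lib/ambari-server/resources
```

Copies whose checksum differs from the jar you installed are candidates for the stale one.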