Cloudera Community: Support Questions

HiveMetastore & HiveServer2 failed to start on Hortonworks Hadoop cluster?

Labels: Apache Ambari, Apache Hive

Created 05-10-2019 01:09 PM

Hello HW community,

My Hive Metastore and HiveServer2 do not start at all because of the database connection through the JDBC driver.

I followed "https://docs.hortonworks.com/HDPDocuments/Ambari-2.6.1.5/bk_ambari-administration/content/using_hive... " properly: I have the 3 hive users in MySQL, I created the hive database, and I linked mysql-connector-java.jar to my ambari-server. I also tried replacing "localhost" in the Database URL with the host name and with the IP address, but I get the same error.

PS: all the other services are running.

My error is:

stderr:

Traceback (most recent call last): File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive_metastore.py", line 203, in <module> HiveMetastore().execute() File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 367, in execute method(env) File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive_metastore.py", line 56, in start create_metastore_schema() File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive.py", line 413, in create_metastore_schema user = params.hive_user File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 166, in __init__ self.env.run() File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run self.run_action(resource, action) File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action provider_action() File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 262, in action_run tries=self.resource.tries, try_sleep=self.resource.try_sleep) File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 72, in inner result = function(command, **kwargs) File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 102, in checked_call tries=tries, try_sleep=try_sleep, timeout_kill_strategy=timeout_kill_strategy) File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 150, in _call_wrapper result = _call(command, **kwargs_copy) File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 303, in _call raise ExecutionFailed(err_msg, code, out, err)

resource_management.core.exceptions.ExecutionFailed: Execution of 'export HIVE_CONF_DIR=/usr/hdp/current/hive-metastore/conf/conf.server ; /usr/hdp/current/hive-server2-hive2/bin/schematool -initSchema -dbType mysql -userName hive -passWord [PROTECTED] -verbose' returned 1.

SLF4J: Class path contains multiple SLF4J bindings. SLF4J: Found binding in [jar:file:/usr/hdp/2.6.3.0-235/hive2/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: Found binding in [jar:file:/usr/hdp/2.6.3.0-235/hadoop/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://127.0.0.1/hive?createDatabaseIfNotExist=true Metastore Connection Driver : com.mysql.jdbc.Driver Metastore connection User: hive

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

Wed Apr 10 12:02:45 CEST 2019 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version. Underlying cause: java.sql.SQLException : The server time zone value 'CEST' is unrecognized or represents more than one time zone. You must configure either the server or JDBC driver (via the serverTimezone configuration property) to use a more specifc time zone value if you want to utilize time zone support. SQL Error code: 0

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version. at org.apache.hive.beeline.HiveSchemaHelper.getConnectionToMetastore(HiveSchemaHelper.java:80) at org.apache.hive.beeline.HiveSchemaTool.getConnectionToMetastore(HiveSchemaTool.java:133) at org.apache.hive.beeline.HiveSchemaTool.testConnectionToMetastore(HiveSchemaTool.java:187) at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:291) at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:277) at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:526) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.hadoop.util.RunJar.run(RunJar.java:233) at org.apache.hadoop.util.RunJar.main(RunJar.java:148)

Caused by: java.sql.SQLException: The server time zone value 'CEST' is unrecognized or represents more than one time zone. You must configure either the server or JDBC driver (via the serverTimezone configuration property) to use a more specifc time zone value if you want to utilize time zone support. at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:129) at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:97) at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:89) at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:63) at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:73) at com.mysql.cj.jdbc.exceptions.SQLExceptionsMapping.translateException(SQLExceptionsMapping.java:76) at com.mysql.cj.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:832) at com.mysql.cj.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:456) at com.mysql.cj.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:240) at com.mysql.cj.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:207) at java.sql.DriverManager.getConnection(DriverManager.java:664) at java.sql.DriverManager.getConnection(DriverManager.java:247) at org.apache.hive.beeline.HiveSchemaHelper.getConnectionToMetastore(HiveSchemaHelper.java:76) ... 11 more

Caused by: com.mysql.cj.exceptions.InvalidConnectionAttributeException: The server time zone value 'CEST' is unrecognized or represents more than one time zone. You must configure either the server or JDBC driver (via the serverTimezone configuration property) to use a more specifc time zone value if you want to utilize time zone support. at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) at java.lang.reflect.Constructor.newInstance(Constructor.java:423) at com.mysql.cj.exceptions.ExceptionFactory.createException(ExceptionFactory.java:61) at com.mysql.cj.exceptions.ExceptionFactory.createException(ExceptionFactory.java:85) at com.mysql.cj.util.TimeUtil.getCanonicalTimezone(TimeUtil.java:128) at com.mysql.cj.protocol.a.NativeProtocol.configureTimezone(NativeProtocol.java:2236) at com.mysql.cj.protocol.a.NativeProtocol.initServerSession(NativeProtocol.java:2260) at com.mysql.cj.jdbc.ConnectionImpl.initializePropsFromServer(ConnectionImpl.java:1314) at com.mysql.cj.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:963) at com.mysql.cj.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:822) ... 17 more

*** schemaTool failed ***
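The decisive part of the stderr above is the last "Caused by": the driver in use is MySQL Connector/J 8 (the `com.mysql.cj` classes), and it cannot map the server's time zone abbreviation 'CEST' to a single zone. As the message itself says, either the MySQL server or the JDBC driver has to be given an unambiguous zone. On the driver side, that means adding `serverTimezone` to the metastore JDBC URL (the Database URL field, i.e. `javax.jdo.option.ConnectionURL`). The helper below is a hypothetical sketch that appends such a parameter to a URL; `Europe/Paris` is only an example value and should be replaced with the MySQL server's actual zone. If the URL is edited directly in hive-site.xml rather than in Ambari, the `&` separator must be written as `&amp;`.

```python
def with_jdbc_param(url, key, value):
    """Return `url` with key=value in its query string.

    Any existing value for `key` is dropped and the new pair is appended
    at the end; the other parameters keep their original order.
    """
    base, sep, query = url.partition("?")
    params = [p for p in query.split("&") if p] if sep else []
    params = [p for p in params if p.split("=", 1)[0] != key]
    params.append(f"{key}={value}")
    return base + "?" + "&".join(params)


url = "jdbc:mysql://127.0.0.1/hive?createDatabaseIfNotExist=true"
print(with_jdbc_param(url, "serverTimezone", "Europe/Paris"))
# jdbc:mysql://127.0.0.1/hive?createDatabaseIfNotExist=true&serverTimezone=Europe/Paris
```

The other route named in the error text, configuring the MySQL server itself with an unambiguous time zone, avoids touching the URL but affects every client of that server.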

stdout:

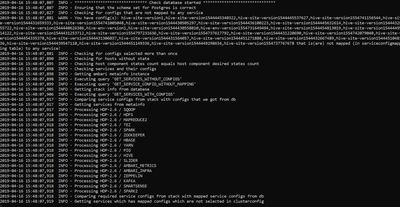

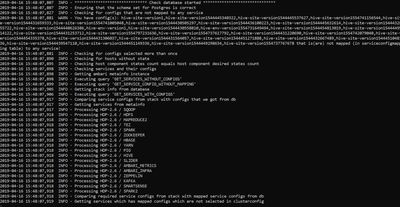

2019-04-10 12:02:27,431 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=2.6.3.0-235 -> 2.6.3.0-235

2019-04-10 12:02:27,467 - Using hadoop conf dir: /usr/hdp/2.6.3.0-235/hadoop/conf

2019-04-10 12:02:27,921 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=2.6.3.0-235 -> 2.6.3.0-235

2019-04-10 12:02:27,929 - Using hadoop conf dir: /usr/hdp/2.6.3.0-235/hadoop/conf

2019-04-10 12:02:27,931 - Group['livy'] {}

2019-04-10 12:02:27,933 - Group['spark'] {}

2019-04-10 12:02:27,934 - Group['hdfs'] {}

2019-04-10 12:02:27,934 - Group['zeppelin'] {}

2019-04-10 12:02:27,934 - Group['hadoop'] {}

2019-04-10 12:02:27,934 - Group['users'] {}

2019-04-10 12:02:27,935 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,937 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,938 - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,939 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,941 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users'], 'uid': None}

2019-04-10 12:02:27,942 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'zeppelin', u'hadoop'], 'uid': None}

2019-04-10 12:02:27,943 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,944 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,945 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users'], 'uid': None}

2019-04-10 12:02:27,946 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,947 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs'], 'uid': None}

2019-04-10 12:02:27,948 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,949 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,950 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,951 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,953 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2019-04-10 12:02:27,954 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2019-04-10 12:02:27,955 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2019-04-10 12:02:27,997 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

2019-04-10 12:02:27,999 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}

2019-04-10 12:02:28,002 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2019-04-10 12:02:28,004 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2019-04-10 12:02:28,005 - call['/var/lib/ambari-agent/tmp/changeUid.sh hbase'] {}

2019-04-10 12:02:28,057 - call returned (0, '1012')

2019-04-10 12:02:28,060 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1012'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2019-04-10 12:02:28,095 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1012'] due to not_if

2019-04-10 12:02:28,097 - Group['hdfs'] {}

2019-04-10 12:02:28,098 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', u'hdfs']}

2019-04-10 12:02:28,099 - FS Type:

2019-04-10 12:02:28,099 - Directory['/etc/hadoop'] {'mode': 0755}

2019-04-10 12:02:28,133 - File['/usr/hdp/2.6.3.0-235/hadoop/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2019-04-10 12:02:28,134 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2019-04-10 12:02:28,232 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2019-04-10 12:02:28,281 - Skipping Execute[('setenforce', '0')] due to not_if

2019-04-10 12:02:28,283 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}

2019-04-10 12:02:28,291 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}

2019-04-10 12:02:28,292 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'create_parents': True, 'cd_access': 'a'}

2019-04-10 12:02:28,303 - File['/usr/hdp/2.6.3.0-235/hadoop/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}

2019-04-10 12:02:28,307 - File['/usr/hdp/2.6.3.0-235/hadoop/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}

2019-04-10 12:02:28,320 - File['/usr/hdp/2.6.3.0-235/hadoop/conf/log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}

2019-04-10 12:02:28,337 - File['/usr/hdp/2.6.3.0-235/hadoop/conf/hadoop-metrics2.properties'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2019-04-10 12:02:28,338 - File['/usr/hdp/2.6.3.0-235/hadoop/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2019-04-10 12:02:28,339 - File['/usr/hdp/2.6.3.0-235/hadoop/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}

2019-04-10 12:02:28,347 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop', 'mode': 0644}

2019-04-10 12:02:28,373 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2019-04-10 12:02:29,329 - MariaDB RedHat Support: false

2019-04-10 12:02:29,335 - Using hadoop conf dir: /usr/hdp/2.6.3.0-235/hadoop/conf

2019-04-10 12:02:29,354 - call['ambari-python-wrap /usr/bin/hdp-select status hive-server2'] {'timeout': 20}

2019-04-10 12:02:29,432 - call returned (0, 'hive-server2 - 2.6.3.0-235')

2019-04-10 12:02:29,435 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=2.6.3.0-235 -> 2.6.3.0-235

2019-04-10 12:02:29,505 - File['/var/lib/ambari-agent/cred/lib/CredentialUtil.jar'] {'content': DownloadSource('http://master.rh.bigdata.cluster:8080/resources/CredentialUtil.jar'), 'mode': 0755}

2019-04-10 12:02:29,515 - Not downloading the file from http://master.rh.bigdata.cluster:8080/resources/CredentialUtil.jar, because /var/lib/ambari-agent/tmp/CredentialUtil.jar already exists

2019-04-10 12:02:29,515 - checked_call[('/usr/jdk64/jdk1.8.0_112/bin/java', '-cp', u'/var/lib/ambari-agent/cred/lib/*', 'org.apache.ambari.server.credentialapi.CredentialUtil', 'get', 'javax.jdo.option.ConnectionPassword', '-provider', u'jceks://file/var/lib/ambari-agent/cred/conf/hive_metastore/hive-site.jceks')] {}

2019-04-10 12:02:30,935 - checked_call returned (0, 'SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".\nSLF4J: Defaulting to no-operation (NOP) logger implementation\nSLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.\nApr 10, 2019 12:02:30 PM org.apache.hadoop.util.NativeCodeLoader <clinit>\nWARNING: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable\nhive')

2019-04-10 12:02:30,968 - Directory['/etc/hive'] {'mode': 0755}

2019-04-10 12:02:30,969 - Directories to fill with configs: [u'/usr/hdp/current/hive-metastore/conf', u'/usr/hdp/current/hive-metastore/conf/conf.server']

2019-04-10 12:02:30,970 - Directory['/etc/hive/2.6.3.0-235/0'] {'owner': 'hive', 'group': 'hadoop', 'create_parents': True, 'mode': 0755}

2019-04-10 12:02:30,972 - XmlConfig['mapred-site.xml'] {'group': 'hadoop', 'conf_dir': '/etc/hive/2.6.3.0-235/0', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}

2019-04-10 12:02:30,997 - Generating config: /etc/hive/2.6.3.0-235/0/mapred-site.xml

2019-04-10 12:02:30,997 - File['/etc/hive/2.6.3.0-235/0/mapred-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2019-04-10 12:02:31,047 - File['/etc/hive/2.6.3.0-235/0/hive-default.xml.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2019-04-10 12:02:31,048 - File['/etc/hive/2.6.3.0-235/0/hive-env.sh.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2019-04-10 12:02:31,051 - File['/etc/hive/2.6.3.0-235/0/hive-exec-log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2019-04-10 12:02:31,055 - File['/etc/hive/2.6.3.0-235/0/hive-log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2019-04-10 12:02:31,056 - File['/etc/hive/2.6.3.0-235/0/parquet-logging.properties'] {'content': ..., 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2019-04-10 12:02:31,058 - Directory['/etc/hive/2.6.3.0-235/0/conf.server'] {'owner': 'hive', 'group': 'hadoop', 'create_parents': True, 'mode': 0700}

2019-04-10 12:02:31,058 - XmlConfig['mapred-site.xml'] {'group': 'hadoop', 'conf_dir': '/etc/hive/2.6.3.0-235/0/conf.server', 'mode': 0600, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}

2019-04-10 12:02:31,072 - Generating config: /etc/hive/2.6.3.0-235/0/conf.server/mapred-site.xml

2019-04-10 12:02:31,073 - File['/etc/hive/2.6.3.0-235/0/conf.server/mapred-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0600, 'encoding': 'UTF-8'}

2019-04-10 12:02:31,135 - File['/etc/hive/2.6.3.0-235/0/conf.server/hive-default.xml.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0600}

2019-04-10 12:02:31,135 - File['/etc/hive/2.6.3.0-235/0/conf.server/hive-env.sh.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0600}

2019-04-10 12:02:31,138 - File['/etc/hive/2.6.3.0-235/0/conf.server/hive-exec-log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0600}

2019-04-10 12:02:31,143 - File['/etc/hive/2.6.3.0-235/0/conf.server/hive-log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0600}

2019-04-10 12:02:31,144 - File['/etc/hive/2.6.3.0-235/0/conf.server/parquet-logging.properties'] {'content': ..., 'owner': 'hive', 'group': 'hadoop', 'mode': 0600}

2019-04-10 12:02:31,144 - File['/usr/hdp/current/hive-metastore/conf/conf.server/hive-site.jceks'] {'content': StaticFile('/var/lib/ambari-agent/cred/conf/hive_metastore/hive-site.jceks'), 'owner': 'hive', 'group': 'hadoop', 'mode': 0640}

2019-04-10 12:02:31,145 - Writing File['/usr/hdp/current/hive-metastore/conf/conf.server/hive-site.jceks'] because contents don't match

2019-04-10 12:02:31,146 - XmlConfig['hive-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hive-metastore/conf/conf.server', 'mode': 0600, 'configuration_attributes': {u'hidden': {u'javax.jdo.option.ConnectionPassword': u'HIVE_CLIENT,WEBHCAT_SERVER,HCAT,CONFIG_DOWNLOAD'}}, 'owner': 'hive', 'configurations': ...}

2019-04-10 12:02:31,159 - Generating config: /usr/hdp/current/hive-metastore/conf/conf.server/hive-site.xml

2019-04-10 12:02:31,159 - File['/usr/hdp/current/hive-metastore/conf/conf.server/hive-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0600, 'encoding': 'UTF-8'}

2019-04-10 12:02:31,315 - Writing File['/usr/hdp/current/hive-metastore/conf/conf.server/hive-site.xml'] because contents don't match

2019-04-10 12:02:31,316 - XmlConfig['hivemetastore-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hive-metastore/conf/conf.server', 'mode': 0600, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': {u'hive.service.metrics.hadoop2.component': u'hivemetastore', u'hive.metastore.metrics.enabled': u'true', u'hive.service.metrics.reporter': u'HADOOP2'}}

2019-04-10 12:02:31,327 - Generating config: /usr/hdp/current/hive-metastore/conf/conf.server/hivemetastore-site.xml

2019-04-10 12:02:31,327 - File['/usr/hdp/current/hive-metastore/conf/conf.server/hivemetastore-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0600, 'encoding': 'UTF-8'}

2019-04-10 12:02:31,337 - File['/usr/hdp/current/hive-metastore/conf/conf.server/hive-env.sh'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0600}

2019-04-10 12:02:31,338 - Writing File['/usr/hdp/current/hive-metastore/conf/conf.server/hive-env.sh'] because contents don't match

2019-04-10 12:02:31,338 - Directory['/etc/security/limits.d'] {'owner': 'root', 'create_parents': True, 'group': 'root'}

2019-04-10 12:02:31,342 - File['/etc/security/limits.d/hive.conf'] {'content': Template('hive.conf.j2'), 'owner': 'root', 'group': 'root', 'mode': 0644}

2019-04-10 12:02:31,343 - File['/usr/lib/ambari-agent/DBConnectionVerification.jar'] {'content': DownloadSource('http://master.rh.bigdata.cluster:8080/resources/DBConnectionVerification.jar'), 'mode': 0644}

2019-04-10 12:02:31,343 - Not downloading the file from http://master.rh.bigdata.cluster:8080/resources/DBConnectionVerification.jar, because /var/lib/ambari-agent/tmp/DBConnectionVerification.jar already exists

2019-04-10 12:02:31,351 - File['/usr/hdp/current/hive-metastore/conf/conf.server/hadoop-metrics2-hivemetastore.properties'] {'content': Template('hadoop-metrics2-hivemetastore.properties.j2'), 'owner': 'hive', 'group': 'hadoop', 'mode': 0600}

2019-04-10 12:02:31,352 - File['/var/lib/ambari-agent/tmp/start_metastore_script'] {'content': StaticFile('startMetastore.sh'), 'mode': 0755}

2019-04-10 12:02:31,352 - Directory['/tmp/hive'] {'owner': 'hive', 'create_parents': True, 'mode': 0777}

2019-04-10 12:02:31,353 - HdfsResource[''] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/2.6.3.0-235/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': '', 'default_fs': 'hdfs://master.rh.bigdata.cluster:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hive', 'group': 'hadoop', 'hadoop_conf_dir': '/usr/hdp/2.6.3.0-235/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/apps/hive/warehouse', u'/mr-history/done', u'/app-logs', u'/tmp'], 'mode': 01777}

2019-04-10 12:02:31,359 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://master.rh.bigdata.cluster:50070/webhdfs/v1?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpBmqvPV 2>/tmp/tmp2O7YgS''] {'logoutput': None, 'quiet': False}

2019-04-10 12:02:31,479 - call returned (0, '')

2019-04-10 12:02:31,492 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://master.rh.bigdata.cluster:50070/webhdfs/v1?op=SETPERMISSION&user.name=hdfs&permission=1777'"'"' 1>/tmp/tmpIpw5Mo 2>/tmp/tmpeiNC2x''] {'logoutput': None, 'quiet': False}

2019-04-10 12:02:31,607 - call returned (0, '')

2019-04-10 12:02:31,617 - HdfsResource[''] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/2.6.3.0-235/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': '', 'default_fs': 'hdfs://master.rh.bigdata.cluster:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hive', 'group': 'hadoop', 'hadoop_conf_dir': '/usr/hdp/2.6.3.0-235/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/apps/hive/warehouse', u'/mr-history/done', u'/app-logs', u'/tmp'], 'mode': 0700}

2019-04-10 12:02:31,623 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://master.rh.bigdata.cluster:50070/webhdfs/v1?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpUJUmmq 2>/tmp/tmpziKJr3''] {'logoutput': None, 'quiet': False}

2019-04-10 12:02:31,748 - call returned (0, '')

2019-04-10 12:02:31,753 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT '"'"'http://master.rh.bigdata.cluster:50070/webhdfs/v1?op=SETPERMISSION&user.name=hdfs&permission=700'"'"' 1>/tmp/tmp60LKkM 2>/tmp/tmp3Urul7''] {'logoutput': None, 'quiet': False}

2019-04-10 12:02:31,845 - call returned (0, '')

2019-04-10 12:02:31,849 - HdfsResource[None] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/2.6.3.0-235/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': '', 'default_fs': 'hdfs://master.rh.bigdata.cluster:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'action': ['execute'], 'hadoop_conf_dir': '/usr/hdp/2.6.3.0-235/hadoop/conf', 'immutable_paths': [u'/apps/hive/warehouse', u'/mr-history/done', u'/app-logs', u'/tmp']}

2019-04-10 12:02:31,850 - Directory['/var/run/hive'] {'owner': 'hive', 'create_parents': True, 'group': 'hadoop', 'mode': 0755, 'cd_access': 'a'}

2019-04-10 12:02:31,852 - Directory['/var/log/hive'] {'owner': 'hive', 'create_parents': True, 'group': 'hadoop', 'mode': 0755, 'cd_access': 'a'}

2019-04-10 12:02:31,853 - Directory['/var/lib/hive'] {'owner': 'hive', 'create_parents': True, 'group': 'hadoop', 'mode': 0755, 'cd_access': 'a'}

2019-04-10 12:02:31,855 - Execute['export HIVE_CONF_DIR=/usr/hdp/current/hive-metastore/conf/conf.server ; /usr/hdp/current/hive-server2-hive2/bin/schematool -initSchema -dbType mysql -userName hive -passWord [PROTECTED] -verbose'] {'not_if': u"ambari-sudo.sh su hive -l -s /bin/bash -c 'export HIVE_CONF_DIR=/usr/hdp/current/hive-metastore/conf/conf.server ; /usr/hdp/current/hive-server2-hive2/bin/schematool -info -dbType mysql -userName hive -passWord [PROTECTED] -verbose'", 'user': 'hive'}

Command failed after 1 tries

Created 05-10-2019 10:01 PM

Hurrah, we are finally there! That's the error I was expecting, so this case is as good as closed.

First, validate the hostname by running:

# hostname -f

This should return the host's fully qualified domain name (FQDN).

The error is simple: it's a privilege issue. The hive user and database creation script you ran did not give the hive user the correct privileges, hence "Access denied for user 'hive'@'master.rh.bigdata.cluster' to database 'hive'".

To resolve it, run the following, assuming:

Root password = gr3atman

Hive password = hive

Hostname = master.rh.bigdata.cluster

mysql -uroot -pgr3atman

mysql> GRANT ALL PRIVILEGES ON hive.* to 'hive'@'localhost' identified by 'hive';

mysql> GRANT ALL PRIVILEGES ON hive.* to 'hive'@'master.rh.bigdata.cluster' identified by 'hive';

mysql> GRANT ALL PRIVILEGES ON hive.* TO 'hive'@'master.rh.bigdata.cluster';

mysql> flush privileges;

All of the above should succeed. Now your Hive should fire up. Bravo!!
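The `GRANT ... IDENTIFIED BY` form used above works on MySQL 5.x, but MySQL 8.0 rejects it: there the account has to exist (via `CREATE USER`) before privileges are granted. A hypothetical helper like the one below generates statements that should work on MySQL 5.7.6 and later, including 8.0, with one account per host the metastore connects from; the host list should include the FQDN printed by `hostname -f`. Note that `CREATE USER IF NOT EXISTS` leaves an existing account's password unchanged.

```python
def hive_grant_statements(db, user, password, hosts):
    """Build MySQL statements giving `user` full rights on `db` from each host.

    Values are interpolated naively into SQL, so only pass trusted,
    quote-free strings (this is a sketch, not a general-purpose tool).
    """
    stmts = []
    for host in hosts:
        account = f"'{user}'@'{host}'"
        # Create the account first; MySQL 8.0 no longer creates it via GRANT.
        stmts.append(f"CREATE USER IF NOT EXISTS {account} IDENTIFIED BY '{password}';")
        stmts.append(f"GRANT ALL PRIVILEGES ON {db}.* TO {account};")
    # Kept for parity with the steps in this answer.
    stmts.append("FLUSH PRIVILEGES;")
    return stmts


if __name__ == "__main__":
    for stmt in hive_grant_statements("hive", "hive", "hive",
                                      ["localhost", "master.rh.bigdata.cluster"]):
        print(stmt)
```

The printed statements can then be pasted at the `mysql>` prompt.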

Created on 05-10-2019 01:09 PM - edited 08-17-2019 04:00 PM

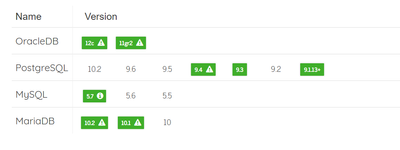

The error below tells me one of two things: either you are using a MySQL server version that is not supported, or your JDBC driver version is not correct. Can you validate your MySQL database against the HW support matrix?

2019-04-16 15:55:44,477 - Check db_connection_check was unsuccessful.

Exit code: 1. Message: Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'.

The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

ERROR: Unable to connect to the DB. Please check DB connection properties. com.mysql.cj.jdbc.exceptions.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

Command failed after 1 tries

I filtered the support matrix above for HDP 2.6.
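A "Communications link failure" in which the driver "has not received any packets from the server" usually means no TCP connection to MySQL was established at all, so it can be worth ruling out plain connectivity before swapping versions. One possibility (an assumption, not confirmed in this thread) is that MySQL only listens on 127.0.0.1: the schematool run in the question, which used `jdbc:mysql://127.0.0.1/hive`, did reach the server, while the failing Ambari check connects to `master.rh.bigdata.cluster`. The sketch below only tests whether a TCP connection can be opened; port 3306 is assumed because the JDBC URL in the check names no port and 3306 is MySQL's default.

```python
import socket


def can_reach(host, port=3306, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened.

    Port 3306 is an assumption: it is MySQL's default, and the JDBC URL in
    the failing check does not name a port.
    """
    try:
        # create_connection resolves the name and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, timeouts and
        # name-resolution failures (all OSError subclasses in Python 3).
        return False
```

Run it on the Ambari host, for example `can_reach("127.0.0.1")` and `can_reach("master.rh.bigdata.cluster")`; if only the loopback address is reachable, MySQL's `bind-address` setting or a firewall is a likely suspect.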

Created 05-10-2019 01:09 PM

First, stop Ambari and restart it with the commands below:

ambari-server stop

Then

ambari-server start --skip-database-check

Your Ambari database has many orphaned configs. See this Jira for how to clean your Ambari database. It would be faster to start with a clean installation, but it's good to get your hands dirty!!!

https://issues.apache.org/jira/browse/AMBARI-20875

CREATE TEMPORARY TABLE IF NOT EXISTS orphaned_configs AS (SELECT cc.config_id, cc.type_name, cc.version_tag FROM clusterconfig cc, clusterconfigmapping ccm WHERE cc.config_id NOT IN (SELECT scm.config_id FROM serviceconfigmapping scm) AND cc.type_name != 'cluster-env' AND cc.type_name = ccm.type_name AND cc.version_tag = ccm.version_tag);

Followed by

DELETE FROM clusterconfigmapping WHERE EXISTS (SELECT 1 FROM orphaned_configs WHERE clusterconfigmapping.type_name = orphaned_configs.type_name AND clusterconfigmapping.version_tag = orphaned_configs.version_tag);

Followed by

DELETE FROM clusterconfig WHERE clusterconfig.config_id IN (SELECT config_id FROM orphaned_configs);

Followed by

SELECT * FROM orphaned_configs;

Then

DROP TABLE orphaned_configs;

Now your Ambari DB should be clean, and you can proceed with the Hive setup.
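Because these statements delete rows from Ambari's own database, it is prudent to stop ambari-server and take a backup first, and it can help to see what they do on a toy copy. The sketch below replays the same cleanup in an in-memory SQLite database; the three tables are heavily reduced, hypothetical stand-ins for the real Ambari schema (which has more columns and differs between versions), and the SQL is adapted to SQLite syntax. It shows that a config row counts as orphaned only when no serviceconfigmapping row references it, it still has a matching clusterconfigmapping row, and its type is not cluster-env.

```python
import sqlite3

# Hypothetical, heavily reduced stand-ins for the Ambari tables.
SCHEMA = """
CREATE TABLE clusterconfig (config_id INTEGER PRIMARY KEY, type_name TEXT, version_tag TEXT);
CREATE TABLE clusterconfigmapping (type_name TEXT, version_tag TEXT);
CREATE TABLE serviceconfigmapping (service_config_id INTEGER, config_id INTEGER);
"""

# The cleanup statements from this answer, adapted to SQLite syntax.
CLEANUP = [
    """CREATE TEMP TABLE IF NOT EXISTS orphaned_configs AS
       SELECT cc.config_id, cc.type_name, cc.version_tag
       FROM clusterconfig cc, clusterconfigmapping ccm
       WHERE cc.config_id NOT IN (SELECT scm.config_id FROM serviceconfigmapping scm)
         AND cc.type_name != 'cluster-env'
         AND cc.type_name = ccm.type_name
         AND cc.version_tag = ccm.version_tag""",
    """DELETE FROM clusterconfigmapping WHERE EXISTS (
         SELECT 1 FROM orphaned_configs
         WHERE clusterconfigmapping.type_name = orphaned_configs.type_name
           AND clusterconfigmapping.version_tag = orphaned_configs.version_tag)""",
    "DELETE FROM clusterconfig WHERE clusterconfig.config_id IN (SELECT config_id FROM orphaned_configs)",
]


def remove_orphans(conn):
    """Run the cleanup on `conn` and return the config_ids that were removed."""
    with conn:  # commit if every statement succeeds, roll back otherwise
        for stmt in CLEANUP:
            conn.execute(stmt)
        rows = conn.execute("SELECT config_id FROM orphaned_configs").fetchall()
        conn.execute("DROP TABLE orphaned_configs")
    return sorted(row[0] for row in rows)


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO clusterconfig VALUES (?, ?, ?)",
                 [(1, "hive-site", "v1"), (2, "hive-site", "v2"), (3, "cluster-env", "v1")])
conn.executemany("INSERT INTO clusterconfigmapping VALUES (?, ?)",
                 [("hive-site", "v1"), ("hive-site", "v2"), ("cluster-env", "v1")])
conn.execute("INSERT INTO serviceconfigmapping VALUES (10, 2)")  # only config 2 is referenced
print(remove_orphans(conn))  # [1]: config 1 is unreferenced; cluster-env is always kept
```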

Created on 05-10-2019 01:09 PM - edited 08-17-2019 03:59 PM

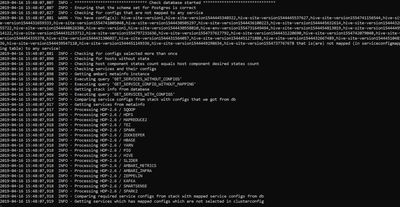

Hello,

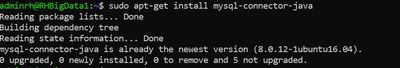

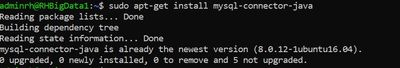

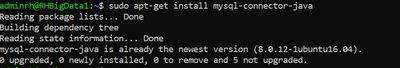

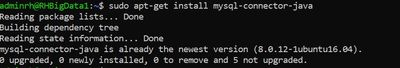

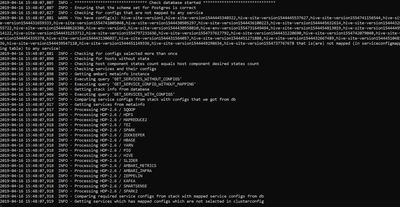

Mysql-connector-java is updated

ambari-server driver and db :

still have the warning on my ambari-server start

and finally the failed connection rom ambari :

and here's the stderr & stdout :

stderr:

```
2019-04-16 15:55:44,477 - Check db_connection_check was unsuccessful. Exit code: 1. Message: Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary. ERROR: Unable to connect to the DB. Please check DB connection properties. com.mysql.cj.jdbc.exceptions.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/custom_actions/scripts/check_host.py", line 530, in <module>
    CheckHost().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 367, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/custom_actions/scripts/check_host.py", line 207, in actionexecute
    raise Fail(error_message)
resource_management.core.exceptions.Fail: Check db_connection_check was unsuccessful. Exit code: 1. Message: Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary. ERROR: Unable to connect to the DB. Please check DB connection properties. com.mysql.cj.jdbc.exceptions.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.
```

stdout:

```
2019-04-16 15:55:43,864 - Host checks started.
2019-04-16 15:55:43,864 - Check execute list: db_connection_check
2019-04-16 15:55:43,864 - DB connection check started.
WARNING: File /var/lib/ambari-agent/cache/DBConnectionVerification.jar already exists, assuming it was downloaded before
WARNING: File /var/lib/ambari-agent/cache/mysql-connector-java.jar already exists, assuming it was downloaded before
2019-04-16 15:55:43,866 - call['/usr/jdk64/jdk1.8.0_112/bin/java -cp /var/lib/ambari-agent/cache/DBConnectionVerification.jar:/var/lib/ambari-agent/cache/mysql-connector-java.jar -Djava.library.path=/var/lib/ambari-agent/cache org.apache.ambari.server.DBConnectionVerification "jdbc:mysql://master.rh.bigdata.cluster/hive" "hive" [PROTECTED] com.mysql.jdbc.Driver'] {}
2019-04-16 15:55:44,473 - call returned (1, "Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.\nERROR: Unable to connect to the DB. Please check DB connection properties.\ncom.mysql.cj.jdbc.exceptions.CommunicationsException: Communications link failure\n\nThe last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.")
2019-04-16 15:55:44,474 - DB connection check completed.
2019-04-16 15:55:44,476 - Host checks completed.
2019-04-16 15:55:44,477 - Check db_connection_check was unsuccessful. Exit code: 1. Message: Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary. ERROR: Unable to connect to the DB. Please check DB connection properties. com.mysql.cj.jdbc.exceptions.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

Command failed after 1 tries
```
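The URL the agent tested is `jdbc:mysql://master.rh.bigdata.cluster/hive`. It has no port, so the driver falls back to MySQL's default 3306. When comparing that with the host and port MySQL actually listens on, it can help to pull the pieces out explicitly. A minimal Python 3 sketch follows; the name `parse_mysql_jdbc_url` is hypothetical, and it only handles the simple single-host `jdbc:mysql://host[:port]/database[?params]` form, not Connector/J's multi-host syntax.

```python
from urllib.parse import urlsplit

DEFAULT_MYSQL_PORT = 3306  # MySQL's default port, used when the URL omits one


def parse_mysql_jdbc_url(url):
    """Split a simple jdbc:mysql://host[:port]/database[?params] URL.

    Returns (host, port, database). Multi-host URLs are not handled.
    """
    prefix = "jdbc:"
    if not url.startswith(prefix):
        raise ValueError("not a JDBC URL: %r" % url)
    # After stripping "jdbc:", the rest is an ordinary mysql://... URL.
    parts = urlsplit(url[len(prefix):])
    if parts.scheme != "mysql":
        raise ValueError("not a MySQL JDBC URL: %r" % url)
    host = parts.hostname
    port = parts.port or DEFAULT_MYSQL_PORT
    database = parts.path.lstrip("/")
    return host, port, database
```

For the URL above this yields `("master.rh.bigdata.cluster", 3306, "hive")`, which is the exact host and port the Metastore host must be able to reach.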


Created 05-10-2019 01:09 PM


The attached /var/log/ambari-server/ambari-server-check-database.log shows that you are trying to connect to Postgres, which is the embedded database.
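One way to double-check which database the Ambari server itself uses is to read its properties file. The sketch below assumes the default location `/etc/ambari-server/conf/ambari.properties` and the keys `server.jdbc.database` and `server.jdbc.database_name`; treat both as assumptions and compare them with your own file. It does a simple `key=value` parse rather than implementing the full Java properties format.

```python
def read_properties(path):
    """Minimal key=value reader; skips blank lines and # or ! comments."""
    props = {}
    with open(path) as fh:
        for line in fh:
            line = line.strip()
            if not line or line.startswith(("#", "!")):
                continue
            key, sep, value = line.partition("=")
            if sep:
                props[key.strip()] = value.strip()
    return props


def ambari_db_backend(path="/etc/ambari-server/conf/ambari.properties"):
    """Return (backend, database_name) as recorded in ambari.properties (assumed keys)."""
    props = read_properties(path)
    return props.get("server.jdbc.database"), props.get("server.jdbc.database_name")
```

If this reports `postgres`, Ambari itself is still on the embedded database. That is fine on its own: the Hive Metastore can still use MySQL, as long as the MySQL driver is registered with Ambari.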

My guess is that your Ambari server is using the embedded Postgres database and NOT MySQL. After installing and configuring MySQL and Hive, here is what you need to do.

Install the MySQL driver (adapt the command to your OS):

```shell
yum install -y mysql-connector-java
```

Make the jar available to Hive and the other services through Ambari; this won't affect the Postgres installation:

```shell
ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java.jar
```

Now continue with the Ambari Hive setup; it should succeed now!
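The `Loading class com.mysql.jdbc.Driver'. This is deprecated` line in the logs is the warning printed by MySQL Connector/J 8.x, whose driver class is `com.mysql.cj.jdbc.Driver`; 5.1.x ships `com.mysql.jdbc.Driver`. Since a jar is just a zip archive, you can check which generation the jar passed to `--jdbc-driver` really is. The sketch below classifies a jar by the driver class files it contains; that heuristic is an assumption of this example. The `cj` check comes first because 8.x also keeps a deprecated `com.mysql.jdbc.Driver` shim.

```python
import zipfile

CJ_DRIVER = "com/mysql/cj/jdbc/Driver.class"    # Connector/J 8.x driver class
LEGACY_DRIVER = "com/mysql/jdbc/Driver.class"   # 5.1.x driver (8.x keeps a deprecated shim)


def connector_j_generation(jar_path):
    """Guess the Connector/J generation of a jar: '8.x', '5.1.x' or 'unknown'."""
    with zipfile.ZipFile(jar_path) as jar:
        names = set(jar.namelist())
    if CJ_DRIVER in names:
        return "8.x"
    if LEGACY_DRIVER in names:
        return "5.1.x"
    return "unknown"
```

Usage: `connector_j_generation("/usr/share/java/mysql-connector-java.jar")`. Compare the result with the copy under `/var/lib/ambari-agent/cache/`: the "already exists, assuming it was downloaded before" warnings in the log suggest the agent may still be using an older cached jar.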

Created on 05-10-2019 01:09 PM - edited 08-17-2019 03:58 PM


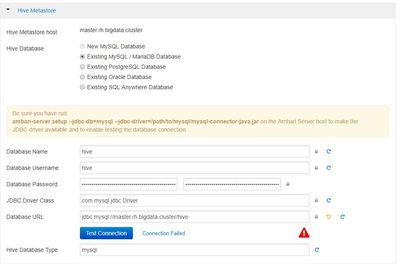

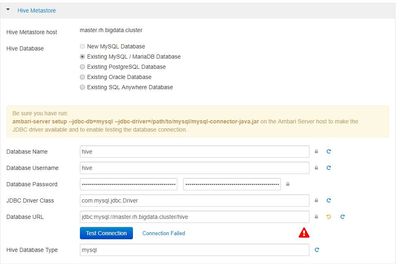

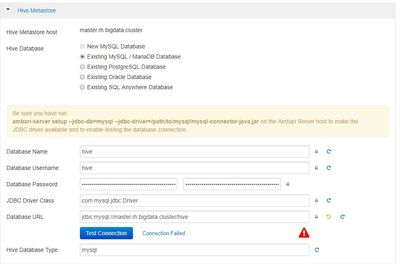

Hello Geoffrey Shelton Okot ,

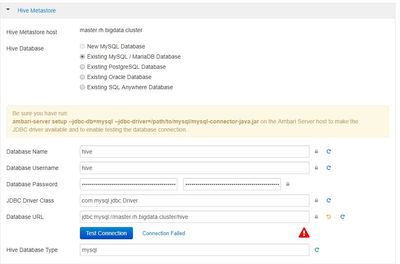

I tried with the "an existing Mysql database" but still have the same error, i also tried with the ip address and the localhost in the URL of the database but still nothing! here a screenshot of my conf

PS : i cant see your screenshot can you upload it again ?

Created on 05-10-2019 01:09 PM - edited 08-17-2019 03:58 PM


Hi Geoffrey Shelton Okot ,

first I want to thank you for your answer,

I deleted hive and restarted all instalations and configurations as you said and the tuto explain but still the same error at the end.

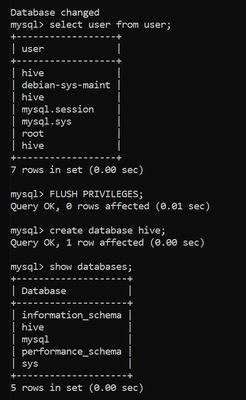

Here some screenshoots of the conf:

mysql and systeme time :

user and database on mysql :

hive conf on ambari :

I found this warning on /var/log/ambari-server/ambari-server-check-database.log :

Created on 05-10-2019 01:09 PM - edited 08-17-2019 03:58 PM


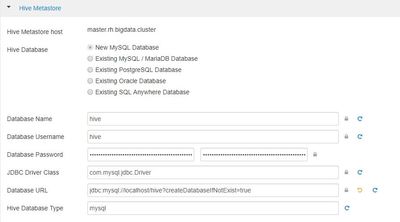

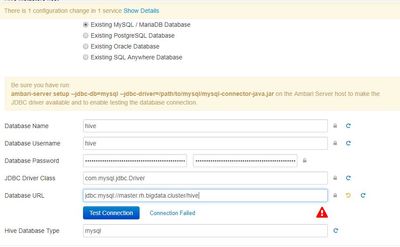

You have done everything correctly, but you are not selecting the existing database you created 🙂 See the attached screenshot; everything should work!

Please use the values of the Hive database you created earlier; Ambari should then pick up the correct URL and host.
