HiveServer2 Not Starting : ZooKeeper node does not exist: /hiveserver2

Created 09-14-2018 01:51 AM

I am getting the error below while starting HiveServer2 after installation.

Node does not exist: /hiveserver2

Traceback (most recent call last):

File "/usr/lib/ambari-agent/lib/resource_management/libraries/functions/decorator.py", line 54, in wrapper

return function(*args, **kwargs)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/HIVE/package/scripts/hive_service.py", line 189, in wait_for_znode

raise Fail(format("ZooKeeper node /{hive_server2_zookeeper_namespace} is not ready yet"))

Fail: ZooKeeper node /hiveserver2 is not ready yet

Here are some additional details around the error:

2018-09-13 16:25:26,594 - call['ambari-sudo.sh su hive -l -s /bin/bash -c 'cat /var/run/hive/hive-server.pid 1>/tmp/tmpIldfIy 2>/tmp/tmpDcXaXg''] {'quiet': False}

2018-09-13 16:25:26,638 - call returned (1, '')

2018-09-13 16:25:26,638 - Execution of 'cat /var/run/hive/hive-server.pid 1>/tmp/tmpIldfIy 2>/tmp/tmpDcXaXg' returned 1. cat: /var/run/hive/hive-server.pid: No such file or directory

2018-09-13 16:25:26,638 - get_user_call_output returned (1, u'', u'cat: /var/run/hive/hive-server.pid: No such file or directory')

2018-09-13 16:25:26,639 - call['ambari-sudo.sh su hive -l -s /bin/bash -c 'hive --config /usr/hdp/current/hive-server2/conf/ --service metatool -listFSRoot' 2>/dev/null | grep hdfs:// | cut -f1,2,3 -d '/' | grep -v 'hdfs://seidevdsmastervm01.tsudev.seic.com:8020' | head -1'] {}

2018-09-13 16:25:33,149 - call returned (0, '')

2018-09-13 16:25:33,149 - Execute['/var/lib/ambari-agent/tmp/start_hiveserver2_script /var/log/hive/hive-server2.out /var/log/hive/hive-server2.err /var/run/hive/hive-server.pid /usr/hdp/current/hive-server2/conf/ /etc/tez/conf'] {'environment': {'HIVE_BIN': 'hive', 'JAVA_HOME': u'/usr/jdk64/jdk1.8.0_112', 'HADOOP_HOME': u'/usr/hdp/current/hadoop-client'}, 'not_if': 'ls /var/run/hive/hive-server.pid >/dev/null 2>&1 && ps -p >/dev/null 2>&1', 'user': 'hive', 'path': [u'/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin:/var/lib/ambari-agent:/usr/hdp/current/hive-server2/bin:/usr/hdp/3.0.0.0-1634/hadoop/bin']}

2018-09-13 16:25:33,196 - Execute['/usr/jdk64/jdk1.8.0_112/bin/java -cp /usr/lib/ambari-agent/DBConnectionVerification.jar:/usr/hdp/current/hive-server2/lib/mysql-connector-java.jar org.apache.ambari.server.DBConnectionVerification 'jdbc:mysql://seidevdsmastervm01.tsudev.seic.com/hive?createDatabaseIfNotExist=true' hive [PROTECTED] com.mysql.jdbc.Driver'] {'path': ['/usr/sbin:/sbin:/usr/local/bin:/bin:/usr/bin'], 'tries': 5, 'try_sleep': 10}

2018-09-13 16:25:33,476 - call['/usr/hdp/current/zookeeper-client/bin/zkCli.sh -server worker1_node:2181,worker2_node.com:2181,master_node.com:2181 ls /hiveserver2 | grep 'serverUri=''] {}

2018-09-13 16:25:34,068 - call returned (1, 'Node does not exist: /hiveserver2')

Error Message : 2018-09-13 16:25:34,068 - call returned (1, 'Node does not exist: /hiveserver2')

I have checked with the ZooKeeper command line on the master node, where HS2 is configured, and could not find the HS2 znode there. Here is the output:

[zk: localhost:2181(CONNECTED) 1] ls /

[registry, ambari-metrics-cluster, zookeeper, zk_smoketest, rmstore]

[zk: localhost:2181(CONNECTED) 2]

I am using HDP 3.0. This is a 3-node cluster (1 master + 2 workers). The Hive metastore (MySQL 5.7) is installed on the master node, and HiveServer2 is also configured on the master node. All machines run Oracle Linux 7.
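The start log above lists /hiveserver2 with zkCli.sh and filters the output for serverUri=. A minimal sketch of how that output could be classified, assuming the child znodes carry a serverUri= prefix as the grep suggests (the function name and the three labels are hypothetical, not part of Ambari):

```python
def classify_znode_listing(zkcli_output: str) -> str:
    """Classify the text printed by `zkCli.sh ... ls /hiveserver2`.

    Returns "missing", "registered" or "empty".
    """
    if "Node does not exist" in zkcli_output:
        return "missing"      # the parent znode was never created
    if "serverUri=" in zkcli_output:
        return "registered"   # at least one HiveServer2 instance published itself
    return "empty"            # the znode exists, but nothing is registered under it


if __name__ == "__main__":
    # Message taken from the log in this post.
    print(classify_znode_listing("Node does not exist: /hiveserver2"))
```

"missing" matches the situation in this post: HiveServer2 never got far enough to create its namespace, so the HiveServer2 log under /var/log/hive is the next place to look.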

Created 09-14-2018 02:17 AM

Please log in to the Ambari UI and check in the Hive configuration whether the following checkbox is ticked (selected):

hive.server2.support.dynamic.service.discovery = true

It can be found at:

Ambari UI --> Hive --> Configs --> Advanced --> "Advanced hive-site"

Please make sure that the mentioned property is selected and then restart the Hive Services.

Created 09-14-2018 03:08 AM

I have validated hive.server2.support.dynamic.service.discovery is true (check-box is selected) and I am still facing the same issue.

Created 09-14-2018 03:22 AM

On the host where HS2 is failing to start, please check whether the XML file has the correct entry:

# grep -A2 -B2 'hive.server2.support.dynamic.service.discovery' '/etc/hive/conf/hive-site.xml'

<property>

<name>hive.server2.support.dynamic.service.discovery</name>

<value>true</value>

</property>
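The same check can be scripted instead of grepped. A minimal sketch, assuming the usual Hadoop-style layout of property elements with name and value children; the function name and the inline sample are illustrative only:

```python
import xml.etree.ElementTree as ET


def read_hadoop_property(xml_text: str, name: str):
    """Return the <value> of the named <property>, or None if it is absent."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            value = prop.findtext("value")
            return value.strip() if value is not None else None
    return None


# Inline sample mirroring the entry shown above; on a real host you would
# read the text of /etc/hive/conf/hive-site.xml instead.
SAMPLE = """<configuration>
  <property>
    <name>hive.server2.support.dynamic.service.discovery</name>
    <value>true</value>
  </property>
</configuration>"""

if __name__ == "__main__":
    key = "hive.server2.support.dynamic.service.discovery"
    print(key, "=", read_hadoop_property(SAMPLE, key))
```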

Also, please try starting the HS2 server manually to see if it works, as described in: https://docs.hortonworks.com/HDPDocuments/HDP3/HDP-3.0.0/administration/content/starting_hdp_service...

# su $HIVE_USER

# nohup /usr/hdp/current/hive-server2/bin/hiveserver2 -hiveconf hive.metastore.uris=/tmp/hiveserver2HD.out 2 /tmp/hiveserver2HD.log

If starting HiveServer2 manually works, we may then need to check whether clearing the Ambari Agent cached data ("/var/lib/ambari-agent/cache", "data", etc.) and restarting the agent fixes the issue.

Created on 09-14-2018 04:56 AM - edited 08-18-2019 12:33 AM

Thanks @Jay Kumar SenSharma for your prompt responses!

I have manually validated hive-site.xml; it has the correct entry.

Later I started the HiveServer2 service manually, as suggested.

Here is what I see in the nohup.out file.

+======================================================================+

| Error: JAVA_HOME is not set |

+----------------------------------------------------------------------+

| Please download the latest Sun JDK from the Sun Java web site |

| > http://www.oracle.com/technetwork/java/javase/downloads |

| |

| HBase requires Java 1.8 or later. |

+======================================================================+

2018-09-13 23:25:07: Starting HiveServer2

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hdp/3.0.0.0-1634/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.0.0.0-1634/hadoop/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = a7aa8a99-1a27-42b9-977a-d545742d1747

Hive Session ID = 41c8d3d5-e7ca-42df-acd5-2b8d1f23220c

Hive Session ID = 84951be6-015e-4634-a4de-6a6f18d51bb8

Hive Session ID = 1964a526-53da-4b50-90a9-9bc90142e2ff

Hive Session ID = f6188f9b-f3a9-43ec-a9ef-6a8a87a01498

I saw the "JAVA_HOME is not set" error in nohup.out, so I set JAVA_HOME and reran the command to start HiveServer2 manually. This time the JAVA_HOME error did not appear, but I am still facing the same issue.

Do you suggest any other solution for this?

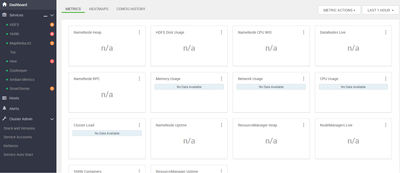

My Ambari dashboard looks like the attached image. This cluster has been running for 36 hours, but no job has run because Hive is not working. Is it fine that the dashboard shows "n/a" and "no data available"?

All the links, e.g. the ResourceManager and NameNode web interfaces and the DataNode information, are available and show correct information.

FYI: Initially I was installing the Hive Metastore (MySQL) and the HiveServer2 service on the worker2 node, but I had an issue while testing the connection to the metastore (a DBConnectionVerification.jar file issue), so I moved the metastore and the service to the master node, and that issue was resolved.

Created 09-14-2018 04:59 AM

Since you are getting the "JAVA_HOME is not set" error while attempting to start HS2 manually, please try this:

# su $HIVE_USER

# export JAVA_HOME=/usr/jdk64/jdk1.8.0_112

# nohup /usr/hdp/current/hive-server2/bin/hiveserver2 -hiveconf hive.metastore.uris=/tmp/hiveserver2HD.out 2 /tmp/hiveserver2HD.log

Let's start HS2 properly now (by using the manual startup command), and then we will troubleshoot the Ambari-side HS2 startup issue.
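Before rerunning the manual start, it may help to confirm that JAVA_HOME is visible to the hive user and points at a real JDK. A minimal sketch; the helper name and its messages are hypothetical and not part of any Hive or Ambari tooling:

```python
import os


def java_home_problem(env: dict):
    """Return a short description of what is wrong with JAVA_HOME, or None if it looks usable."""
    java_home = env.get("JAVA_HOME")
    if not java_home:
        return "JAVA_HOME is not set"
    java_bin = os.path.join(java_home, "bin", "java")
    if not os.path.isfile(java_bin):
        return "no java binary at " + java_bin
    if not os.access(java_bin, os.X_OK):
        return java_bin + " is not executable"
    return None


if __name__ == "__main__":
    # Checks the environment of the current shell user.
    print(java_home_problem(dict(os.environ)) or "JAVA_HOME looks usable")
```

Run it as the same user that starts HiveServer2, since an export made in another shell session does not carry over.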

Created 10-25-2018 03:40 PM

Hello @Jay Kumar SenSharma,

I'm facing the same issue on an urgent project. I applied all the recommended settings, but the problem is still there.

My HiveServer2 is not running. The log is given below:

2018-10-25 14:59:40,525 - call['ambari-sudo.sh su hive -l -s /bin/bash -c 'cat /var/run/hive/hive-server.pid 1>/tmp/tmpkgSe4y 2>/tmp/tmpSm8Hzr''] {'quiet': False}

2018-10-25 14:59:40,546 - call returned (1, '')

2018-10-25 14:59:40,546 - Execution of 'cat /var/run/hive/hive-server.pid 1>/tmp/tmpkgSe4y 2>/tmp/tmpSm8Hzr' returned 1. cat: /var/run/hive/hive-server.pid: No such file or directory

2018-10-25 14:59:40,546 - get_user_call_output returned (1, u'', u'cat: /var/run/hive/hive-server.pid: No such file or directory')

2018-10-25 14:59:40,547 - call['ambari-sudo.sh su hive -l -s /bin/bash -c 'hive --config /usr/hdp/current/hive-server2/conf/ --service metatool -listFSRoot' 2>/dev/null | grep hdfs:// | cut -f1,2,3 -d '/' | grep -v 'hdfs://ayoub-poc-assurance.us-central1-c.c.astute-elixir-219512.internal:8020' | head -1'] {}

2018-10-25 14:59:49,864 - call returned (0, '')

2018-10-25 14:59:49,865 - Execute['/var/lib/ambari-agent/tmp/start_hiveserver2_script /var/log/hive/hive-server2.out /var/log/hive/hive-server2.err /var/run/hive/hive-server.pid /usr/hdp/current/hive-server2/conf/ /etc/tez/conf'] {'environment': {'HIVE_BIN': 'hive', 'JAVA_HOME': u'/usr/jdk64/jdk1.8.0_112', 'HADOOP_HOME': u'/usr/hdp/current/hadoop-client'}, 'not_if': 'ls /var/run/hive/hive-server.pid >/dev/null 2>&1 && ps -p >/dev/null 2>&1', 'user': 'hive', 'path': [u'/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/var/lib/ambari-agent:/var/lib/ambari-agent:/usr/hdp/current/hive-server2/bin:/usr/hdp/3.0.1.0-187/hadoop/bin']}

2018-10-25 14:59:49,894 - Execute['/usr/jdk64/jdk1.8.0_112/bin/java -cp /usr/lib/ambari-agent/DBConnectionVerification.jar:/usr/hdp/current/hive-server2/lib/mysql-connector-java.jar org.apache.ambari.server.DBConnectionVerification 'jdbc:mysql://ayoub-poc-assurance.us-central1-c.c.astute-elixir-219512.internal/hive?createDatabaseIfNotExist=true' hive [PROTECTED] com.mysql.jdbc.Driver'] {'path': ['/usr/sbin:/sbin:/usr/local/bin:/bin:/usr/bin'], 'tries': 5, 'try_sleep': 10}

2018-10-25 14:59:50,344 - call['/usr/hdp/current/zookeeper-client/bin/zkCli.sh -server ayoub-poc-assurance.us-central1-c.c.astute-elixir-219512.internal:2181 ls /hiveserver2 | grep 'serverUri=''] {}

2018-10-25 14:59:51,009 - call returned (1, '')

....

2018-10-25 15:04:06,641 - Will retry 5 time(s), caught exception: ZooKeeper node /hiveserver2 is not ready yet. Sleeping for 10 sec(s)

2018-10-25 15:04:16,646 - Process with pid 17878 is not running. Stale pid file at /var/run/hive/hive-server.pid

Could you please help me?
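The last log line reports a stale pid file, which means HiveServer2 started and then exited. A minimal sketch of how such a check can be done on a POSIX host; the function names are hypothetical and this is not Ambari's own code:

```python
import os


def pid_is_running(pid: int) -> bool:
    """True if a process with this pid exists (POSIX only)."""
    try:
        os.kill(pid, 0)           # signal 0 only checks for existence
    except ProcessLookupError:
        return False              # no such process
    except PermissionError:
        return True               # process exists but belongs to another user
    return True


def pid_file_is_stale(path: str) -> bool:
    """True if the pid file exists but names a process that is not running."""
    try:
        with open(path) as handle:
            pid = int(handle.read().strip())
    except (FileNotFoundError, ValueError):
        return False              # no pid file, or unparsable content
    return not pid_is_running(pid)


if __name__ == "__main__":
    # Path taken from the log above.
    print(pid_file_is_stale("/var/run/hive/hive-server.pid"))
```

If the file is stale, the reason for the exit is usually in the HiveServer2 log under /var/log/hive rather than in the Ambari output.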

Created 10-26-2018 01:03 AM

It looks like the following call is returning an empty result. Please replace xxxxxxxxx with your ZooKeeper hostname. It is also better to run more than one ZooKeeper server so that a quorum is maintained and the others keep working if one fails.

# /usr/hdp/current/zookeeper-client/bin/zkCli.sh -server xxxxxxxxx.internal:2181 ls /hiveserver2 | grep 'serverUri='

Can you try running that command manually to validate if your Zookeeper is accessible and running fine and is returning the znode properly?

It might happen if your Zookeepers are not healthy (not running) or showing some errors in their logs.
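As a quick health probe alongside zkCli.sh, each server in the quorum string can be sent ZooKeeper's "ruok" four-letter command, which a healthy server answers with "imok". A minimal sketch with hypothetical helper names; note that newer ZooKeeper releases (3.5 and later) only answer four-letter commands listed in 4lw.commands.whitelist, so a False result there does not necessarily mean the server is down:

```python
import socket


def parse_quorum(quorum: str):
    """Split 'h1:2181,h2:2181' into [('h1', 2181), ('h2', 2181)]."""
    pairs = []
    for entry in quorum.split(","):
        host, _, port = entry.strip().partition(":")
        pairs.append((host, int(port) if port else 2181))
    return pairs


def zk_ruok(host: str, port: int = 2181, timeout: float = 5.0) -> bool:
    """Send `ruok` and return True only if the server replies `imok`."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(b"ruok")
            reply = b""
            while True:
                chunk = sock.recv(64)
                if not chunk:      # server closes the connection after replying
                    break
                reply += chunk
    except OSError:
        return False               # refused, timed out, or unresolvable host
    return reply.strip() == b"imok"


if __name__ == "__main__":
    # Placeholder host: replace with your own ZooKeeper quorum string.
    for zk_host, zk_port in parse_quorum("localhost:2181"):
        print(zk_host, zk_port, zk_ruok(zk_host, zk_port, timeout=2.0))
```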

Created 09-14-2018 02:30 PM

I tried starting HS2 after setting JAVA_HOME but it did not help.

2018-09-14 08:29:31: Starting HiveServer2

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hdp/3.0.0.0-1634/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hdp/3.0.0.0-1634/hadoop/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = d410dd44-b3ed-4c73-a391-f414da52f946

Hive Session ID = c7b15435-16b1-4f74-ac9a-a5f5fb09af35

Hive Session ID = 2bbef091-f52a-44a6-b1af-d1f78b30fb88

Hive Session ID = 4b405953-cf8d-4d72-a10c-49ccf873e03b

Hive Session ID = cc1d904c-b920-42da-8787-c9be4afabc2b

This is the error I see when I invoke Hive:

Error: org.apache.hive.jdbc.ZooKeeperHiveClientException: Unable to read HiveServer2 configs from ZooKeeper (state=,code=0)
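Clients that use ZooKeeper service discovery connect with a URL of the form jdbc:hive2://<zk-quorum>/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=<namespace>, so this error usually means the client found nothing usable under that namespace. A minimal sketch that splits such a URL so the quorum and namespace can be compared with what ZooKeeper actually holds; the function name and the example host are hypothetical, and trailing "?" or "#" sections of a full Hive URL are not handled:

```python
def parse_hive_zk_url(url: str) -> dict:
    """Split a ZooKeeper-discovery Hive JDBC URL into quorum, database and parameters."""
    prefix = "jdbc:hive2://"
    if not url.startswith(prefix):
        raise ValueError("not a jdbc:hive2 URL: " + url)
    authority, _, tail = url[len(prefix):].partition("/")
    parts = tail.split(";")        # "<db>;key=value;key=value"
    params = dict(p.split("=", 1) for p in parts[1:] if "=" in p)
    return {
        "quorum": [h for h in authority.split(",") if h],
        "database": parts[0],
        "params": params,
    }


if __name__ == "__main__":
    # Hypothetical host name, for illustration only.
    info = parse_hive_zk_url(
        "jdbc:hive2://zk1.example.com:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2"
    )
    print(info["quorum"], info["params"].get("zooKeeperNamespace"))
```

If the namespace in the URL is hiveserver2 but "ls /hiveserver2" shows no serverUri entry, the client error and the Ambari start failure are likely to share the same cause.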

Created 10-19-2018 05:58 PM

Hi all, I'm facing the same issue!

I have a fresh installation of HDP 3.0.1 on top of a new CentOS 7 system. The cluster consists of only 1 node.

The following message appears several times:

2018-10-19 15:19:30,659 - Will retry 6 time(s), caught exception: ZooKeeper node /hiveserver2 is not ready yet. Sleeping for 10 sec(s)

2018-10-19 15:19:40,669 - call['/usr/hdp/current/zookeeper-client/bin/zkCli.sh -server myhost.com:2181 ls /hiveserver2 | grep 'serverUri=''] {}

2018-10-19 15:19:41,439 - call returned (1, '')

I checked the ZooKeeper node. The node hiveserver2 exists, but the command ls /hiveserver2 returns an empty result.
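The "Will retry N time(s) ... Sleeping for 10 sec(s)" messages follow a plain retry-with-sleep pattern around the znode check. A minimal sketch of that pattern, written from the log output only; the decorator and its parameters are hypothetical and should not be read as Ambari's implementation:

```python
import functools
import time


def retry(times: int, sleep_time: float, err_class=Exception, sleep=time.sleep):
    """Call the wrapped function up to `times` times, sleeping between failed attempts."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            remaining = times
            while True:
                try:
                    return func(*args, **kwargs)
                except err_class as exc:
                    remaining -= 1
                    if remaining <= 0:
                        raise          # attempts exhausted: surface the last error
                    print("Will retry %d time(s), caught exception: %s. "
                          "Sleeping for %s sec(s)" % (remaining, exc, sleep_time))
                    sleep(sleep_time)
        return wrapper
    return decorator


if __name__ == "__main__":
    attempts = []

    @retry(times=3, sleep_time=0)
    def wait_for_znode_demo():
        # Stand-in check that succeeds on the third attempt.
        attempts.append(1)
        if len(attempts) < 3:
            raise RuntimeError("ZooKeeper node /hiveserver2 is not ready yet")
        return "ready"

    print(wait_for_znode_demo())
```

The point of the pattern is that retries only help if HiveServer2 is still starting. When the process has already exited, every attempt fails the same way until the counter runs out.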

Any Idea? More details below!

Thank you,

Björn

The HiveServer2 start procedure ends with the following messages:

stderr:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/HIVE/package/scripts/hive_server.py", line 143, in <module>

HiveServer().execute()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 351, in execute

method(env)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/HIVE/package/scripts/hive_server.py", line 53, in start

hive_service('hiveserver2', action = 'start', upgrade_type=upgrade_type)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/HIVE/package/scripts/hive_service.py", line 101, in hive_service

wait_for_znode()

File "/usr/lib/ambari-agent/lib/resource_management/libraries/functions/decorator.py", line 54, in wrapper

return function(*args, **kwargs)

File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/HIVE/package/scripts/hive_service.py", line 184, in wait_for_znode

raise Exception(format("HiveServer2 is no longer running, check the logs at {hive_log_dir}"))

Exception: HiveServer2 is no longer running, check the logs at /var/log/hive

stdout:

2018-10-19 15:14:07,612 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:07,647 - Using hadoop conf dir: /usr/hdp/3.0.1.0-187/hadoop/conf

2018-10-19 15:14:08,036 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:08,046 - Using hadoop conf dir: /usr/hdp/3.0.1.0-187/hadoop/conf

2018-10-19 15:14:08,048 - Group['kms'] {}

2018-10-19 15:14:08,050 - Group['livy'] {}

2018-10-19 15:14:08,051 - Group['spark'] {}

2018-10-19 15:14:08,051 - Group['ranger'] {}

2018-10-19 15:14:08,051 - Group['hdfs'] {}

2018-10-19 15:14:08,052 - Group['zeppelin'] {}

2018-10-19 15:14:08,052 - Group['hadoop'] {}

2018-10-19 15:14:08,052 - Group['users'] {}

2018-10-19 15:14:08,053 - Group['knox'] {}

2018-10-19 15:14:08,054 - User['yarn-ats'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,057 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,059 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,061 - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,063 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,065 - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2018-10-19 15:14:08,068 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,070 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,072 - User['ranger'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['ranger', 'hadoop'], 'uid': None}

2018-10-19 15:14:08,074 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2018-10-19 15:14:08,076 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['zeppelin', 'hadoop'], 'uid': None}

2018-10-19 15:14:08,078 - User['kms'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['kms', 'hadoop'], 'uid': None}

2018-10-19 15:14:08,080 - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,082 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['livy', 'hadoop'], 'uid': None}

2018-10-19 15:14:08,084 - User['druid'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,086 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['spark', 'hadoop'], 'uid': None}

2018-10-19 15:14:08,088 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}

2018-10-19 15:14:08,090 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,093 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop'], 'uid': None}

2018-10-19 15:14:08,095 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,097 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,099 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,101 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}

2018-10-19 15:14:08,103 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'knox'], 'uid': None}

2018-10-19 15:14:08,105 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-10-19 15:14:08,114 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2018-10-19 15:14:08,123 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

2018-10-19 15:14:08,124 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}

2018-10-19 15:14:08,125 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-10-19 15:14:08,127 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-10-19 15:14:08,129 - call['/var/lib/ambari-agent/tmp/changeUid.sh hbase'] {}

2018-10-19 15:14:08,142 - call returned (0, '1022')

2018-10-19 15:14:08,143 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1022'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2018-10-19 15:14:08,151 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1022'] due to not_if

2018-10-19 15:14:08,152 - Group['hdfs'] {}

2018-10-19 15:14:08,153 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop', u'hdfs']}

2018-10-19 15:14:08,154 - FS Type: HDFS

2018-10-19 15:14:08,154 - Directory['/etc/hadoop'] {'mode': 0755}

2018-10-19 15:14:08,181 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2018-10-19 15:14:08,182 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2018-10-19 15:14:08,208 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2018-10-19 15:14:08,237 - Skipping Execute[('setenforce', '0')] due to not_if

2018-10-19 15:14:08,237 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}

2018-10-19 15:14:08,241 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}

2018-10-19 15:14:08,242 - Directory['/var/run/hadoop/hdfs'] {'owner': 'hdfs', 'cd_access': 'a'}

2018-10-19 15:14:08,243 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'create_parents': True, 'cd_access': 'a'}

2018-10-19 15:14:08,249 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}

2018-10-19 15:14:08,253 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}

2018-10-19 15:14:08,264 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:08,285 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/hadoop-metrics2.properties'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2018-10-19 15:14:08,287 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2018-10-19 15:14:08,288 - File['/usr/hdp/3.0.1.0-187/hadoop/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}

2018-10-19 15:14:08,296 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:08,302 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2018-10-19 15:14:08,308 - Skipping unlimited key JCE policy check and setup since it is not required

2018-10-19 15:14:08,788 - Using hadoop conf dir: /usr/hdp/3.0.1.0-187/hadoop/conf

2018-10-19 15:14:08,813 - call['ambari-python-wrap /usr/bin/hdp-select status hive-server2'] {'timeout': 20}

2018-10-19 15:14:08,871 - call returned (0, 'hive-server2 - 3.0.1.0-187')

2018-10-19 15:14:08,872 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:08,942 - File['/var/lib/ambari-agent/cred/lib/CredentialUtil.jar'] {'content': DownloadSource('http://myhost.com:8080/resources/CredentialUtil.jar'), 'mode': 0755}

2018-10-19 15:14:08,945 - Not downloading the file from http://myhost.com:8080/resources/CredentialUtil.jar, because /var/lib/ambari-agent/tmp/CredentialUtil.jar already exists

2018-10-19 15:14:12,261 - Directories to fill with configs: [u'/usr/hdp/current/hive-server2/conf', u'/usr/hdp/current/hive-server2/conf/']

2018-10-19 15:14:12,262 - Directory['/etc/hive/3.0.1.0-187/0'] {'owner': 'hive', 'group': 'hadoop', 'create_parents': True, 'mode': 0755}

2018-10-19 15:14:12,279 - XmlConfig['mapred-site.xml'] {'group': 'hadoop', 'conf_dir': '/etc/hive/3.0.1.0-187/0', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}

2018-10-19 15:14:12,300 - Generating config: /etc/hive/3.0.1.0-187/0/mapred-site.xml

2018-10-19 15:14:12,300 - File['/etc/hive/3.0.1.0-187/0/mapred-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-19 15:14:12,368 - File['/etc/hive/3.0.1.0-187/0/hive-default.xml.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,369 - File['/etc/hive/3.0.1.0-187/0/hive-env.sh.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0755}

2018-10-19 15:14:12,374 - File['/etc/hive/3.0.1.0-187/0/llap-daemon-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,379 - File['/etc/hive/3.0.1.0-187/0/llap-cli-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,384 - File['/etc/hive/3.0.1.0-187/0/hive-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,389 - File['/etc/hive/3.0.1.0-187/0/hive-exec-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,392 - File['/etc/hive/3.0.1.0-187/0/beeline-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,399 - XmlConfig['beeline-site.xml'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0644, 'conf_dir': '/etc/hive/3.0.1.0-187/0', 'configurations': {'beeline.hs2.jdbc.url.container': u'jdbc:hive2://myhost.com:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2', 'beeline.hs2.jdbc.url.default': 'container'}}

2018-10-19 15:14:12,415 - Generating config: /etc/hive/3.0.1.0-187/0/beeline-site.xml

2018-10-19 15:14:12,415 - File['/etc/hive/3.0.1.0-187/0/beeline-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-19 15:14:12,423 - File['/etc/hive/3.0.1.0-187/0/parquet-logging.properties'] {'content': ..., 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,436 - Directory['/etc/hive/3.0.1.0-187/0'] {'owner': 'hive', 'group': 'hadoop', 'create_parents': True, 'mode': 0755}

2018-10-19 15:14:12,437 - XmlConfig['mapred-site.xml'] {'group': 'hadoop', 'conf_dir': '/etc/hive/3.0.1.0-187/0', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}

2018-10-19 15:14:12,451 - Generating config: /etc/hive/3.0.1.0-187/0/mapred-site.xml

2018-10-19 15:14:12,452 - File['/etc/hive/3.0.1.0-187/0/mapred-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-19 15:14:12,519 - File['/etc/hive/3.0.1.0-187/0/hive-default.xml.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,519 - File['/etc/hive/3.0.1.0-187/0/hive-env.sh.template'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0755}

2018-10-19 15:14:12,525 - File['/etc/hive/3.0.1.0-187/0/llap-daemon-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,530 - File['/etc/hive/3.0.1.0-187/0/llap-cli-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,534 - File['/etc/hive/3.0.1.0-187/0/hive-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,537 - File['/etc/hive/3.0.1.0-187/0/hive-exec-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,540 - File['/etc/hive/3.0.1.0-187/0/beeline-log4j2.properties'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,542 - XmlConfig['beeline-site.xml'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0644, 'conf_dir': '/etc/hive/3.0.1.0-187/0', 'configurations': {'beeline.hs2.jdbc.url.container': u'jdbc:hive2://myhost.com:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2', 'beeline.hs2.jdbc.url.default': 'container'}}

2018-10-19 15:14:12,556 - Generating config: /etc/hive/3.0.1.0-187/0/beeline-site.xml

2018-10-19 15:14:12,557 - File['/etc/hive/3.0.1.0-187/0/beeline-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-19 15:14:12,560 - File['/etc/hive/3.0.1.0-187/0/parquet-logging.properties'] {'content': ..., 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:12,561 - File['/usr/hdp/current/hive-server2/conf/hive-site.jceks'] {'content': StaticFile('/var/lib/ambari-agent/cred/conf/hive_server/hive-site.jceks'), 'owner': 'hive', 'group': 'hadoop', 'mode': 0640}

2018-10-19 15:14:12,570 - Writing File['/usr/hdp/current/hive-server2/conf/hive-site.jceks'] because contents don't match

2018-10-19 15:14:12,571 - XmlConfig['hive-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hive-server2/conf/', 'mode': 0644, 'configuration_attributes': {u'hidden': {u'javax.jdo.option.ConnectionPassword': u'HIVE_CLIENT,CONFIG_DOWNLOAD'}}, 'owner': 'hive', 'configurations': ...}

2018-10-19 15:14:12,584 - Generating config: /usr/hdp/current/hive-server2/conf/hive-site.xml

2018-10-19 15:14:12,585 - File['/usr/hdp/current/hive-server2/conf/hive-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-19 15:14:12,825 - Writing File['/usr/hdp/current/hive-server2/conf/hive-site.xml'] because contents don't match

2018-10-19 15:14:12,826 - Generating Atlas Hook config file /usr/hdp/current/hive-server2/conf/atlas-application.properties

2018-10-19 15:14:12,826 - PropertiesFile['/usr/hdp/current/hive-server2/conf/atlas-application.properties'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0644, 'properties': ...}

2018-10-19 15:14:12,833 - Generating properties file: /usr/hdp/current/hive-server2/conf/atlas-application.properties

2018-10-19 15:14:12,833 - File['/usr/hdp/current/hive-server2/conf/atlas-application.properties'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2018-10-19 15:14:12,856 - Writing File['/usr/hdp/current/hive-server2/conf/atlas-application.properties'] because contents don't match

2018-10-19 15:14:12,864 - File['/usr/hdp/current/hive-server2/conf//hive-env.sh'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0755}

2018-10-19 15:14:12,866 - Writing File['/usr/hdp/current/hive-server2/conf//hive-env.sh'] because contents don't match

2018-10-19 15:14:12,867 - Directory['/etc/security/limits.d'] {'owner': 'root', 'create_parents': True, 'group': 'root'}

2018-10-19 15:14:12,871 - File['/etc/security/limits.d/hive.conf'] {'content': Template('hive.conf.j2'), 'owner': 'root', 'group': 'root', 'mode': 0644}

2018-10-19 15:14:12,873 - File['/usr/lib/ambari-agent/DBConnectionVerification.jar'] {'content': DownloadSource('http://myhost.com:8080/resources/DBConnectionVerification.jar'), 'mode': 0644}

2018-10-19 15:14:12,873 - Not downloading the file from http://myhost.com:8080/resources/DBConnectionVerification.jar, because /var/lib/ambari-agent/tmp/DBConnectionVerification.jar already exists

2018-10-19 15:14:12,874 - Directory['/var/run/hive'] {'owner': 'hive', 'create_parents': True, 'group': 'hadoop', 'mode': 0755, 'cd_access': 'a'}

2018-10-19 15:14:12,875 - Directory['/var/log/hive'] {'owner': 'hive', 'create_parents': True, 'group': 'hadoop', 'mode': 0755, 'cd_access': 'a'}

2018-10-19 15:14:12,876 - Directory['/var/lib/hive'] {'owner': 'hive', 'create_parents': True, 'group': 'hadoop', 'mode': 0755, 'cd_access': 'a'}

2018-10-19 15:14:12,879 - File['/var/lib/ambari-agent/tmp/start_hiveserver2_script'] {'content': Template('startHiveserver2.sh.j2'), 'mode': 0755}

2018-10-19 15:14:12,899 - File['/usr/hdp/current/hive-server2/conf/hadoop-metrics2-hiveserver2.properties'] {'content': Template('hadoop-metrics2-hiveserver2.properties.j2'), 'owner': 'hive', 'group': 'hadoop', 'mode': 0600}

2018-10-19 15:14:12,912 - XmlConfig['hiveserver2-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hive-server2/conf/', 'mode': 0600, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}

2018-10-19 15:14:12,927 - Generating config: /usr/hdp/current/hive-server2/conf/hiveserver2-site.xml

2018-10-19 15:14:12,927 - File['/usr/hdp/current/hive-server2/conf/hiveserver2-site.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0600, 'encoding': 'UTF-8'}

2018-10-19 15:14:12,948 - Called copy_to_hdfs tarball: mapreduce

2018-10-19 15:14:12,948 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:12,949 - Tarball version was calcuated as 3.0.1.0-187. Use Command Version: True

2018-10-19 15:14:12,949 - Source file: /usr/hdp/3.0.1.0-187/hadoop/mapreduce.tar.gz , Dest file in HDFS: /hdp/apps/3.0.1.0-187/mapreduce/mapreduce.tar.gz

2018-10-19 15:14:12,949 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:12,949 - Tarball version was calcuated as 3.0.1.0-187. Use Command Version: True

2018-10-19 15:14:12,950 - HdfsResource['/hdp/apps/3.0.1.0-187/mapreduce'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2018-10-19 15:14:12,956 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/mapreduce?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpiDaewr 2>/tmp/tmp8p8bdb''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:13,466 - call returned (0, '')

2018-10-19 15:14:13,466 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":2,"fileId":16425,"group":"hdfs","length":0,"modificationTime":1539955370866,"owner":"hdfs","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:13,468 - HdfsResource['/hdp/apps/3.0.1.0-187/mapreduce/mapreduce.tar.gz'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'source': '/usr/hdp/3.0.1.0-187/hadoop/mapreduce.tar.gz', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'replace_existing_files': False, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'group': 'hadoop', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2018-10-19 15:14:13,471 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/mapreduce/mapreduce.tar.gz?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmplA61A_ 2>/tmp/tmpny25ET''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:13,577 - call returned (0, '')

2018-10-19 15:14:13,578 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1539954662386,"blockSize":134217728,"childrenNum":0,"fileId":16426,"group":"hadoop","length":307712858,"modificationTime":1539954664144,"owner":"hdfs","pathSuffix":"","permission":"444","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2018-10-19 15:14:13,579 - DFS file /hdp/apps/3.0.1.0-187/mapreduce/mapreduce.tar.gz is identical to /usr/hdp/3.0.1.0-187/hadoop/mapreduce.tar.gz, skipping the copying

2018-10-19 15:14:13,579 - Will attempt to copy mapreduce tarball from /usr/hdp/3.0.1.0-187/hadoop/mapreduce.tar.gz to DFS at /hdp/apps/3.0.1.0-187/mapreduce/mapreduce.tar.gz.

2018-10-19 15:14:13,579 - Called copy_to_hdfs tarball: tez

2018-10-19 15:14:13,580 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:13,580 - Tarball version was calcuated as 3.0.1.0-187. Use Command Version: True

2018-10-19 15:14:13,580 - Source file: /usr/hdp/3.0.1.0-187/tez/lib/tez.tar.gz , Dest file in HDFS: /hdp/apps/3.0.1.0-187/tez/tez.tar.gz

2018-10-19 15:14:13,580 - Preparing the Tez tarball...

2018-10-19 15:14:13,580 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:13,581 - Tarball version was calcuated as 3.0.1.0-187. Use Command Version: True

2018-10-19 15:14:13,581 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:13,581 - Tarball version was calcuated as 3.0.1.0-187. Use Command Version: True

2018-10-19 15:14:13,582 - Extracting /usr/hdp/3.0.1.0-187/hadoop/mapreduce.tar.gz to /var/lib/ambari-agent/tmp/mapreduce-tarball-OPaW17

2018-10-19 15:14:13,582 - Execute[('tar', '-xf', u'/usr/hdp/3.0.1.0-187/hadoop/mapreduce.tar.gz', '-C', '/var/lib/ambari-agent/tmp/mapreduce-tarball-OPaW17/')] {'tries': 3, 'sudo': True, 'try_sleep': 1}

2018-10-19 15:14:18,818 - Extracting /usr/hdp/3.0.1.0-187/tez/lib/tez.tar.gz to /var/lib/ambari-agent/tmp/tez-tarball-0X9RR6

2018-10-19 15:14:18,822 - Execute[('tar', '-xf', u'/usr/hdp/3.0.1.0-187/tez/lib/tez.tar.gz', '-C', '/var/lib/ambari-agent/tmp/tez-tarball-0X9RR6/')] {'tries': 3, 'sudo': True, 'try_sleep': 1}

2018-10-19 15:14:22,141 - Execute[('cp', '-a', '/var/lib/ambari-agent/tmp/mapreduce-tarball-OPaW17/hadoop/lib/native', '/var/lib/ambari-agent/tmp/tez-tarball-0X9RR6/lib')] {'sudo': True}

2018-10-19 15:14:22,633 - Directory['/var/lib/ambari-agent/tmp/tez-tarball-0X9RR6/lib'] {'recursive_ownership': True, 'mode': 0755, 'cd_access': 'a'}

2018-10-19 15:14:22,634 - Creating a new Tez tarball at /var/lib/ambari-agent/tmp/tez-native-tarball-staging/tez-native.tar.gz

2018-10-19 15:14:22,645 - Execute[('tar', '-zchf', '/tmp/tmpQ7qH47', '-C', '/var/lib/ambari-agent/tmp/tez-tarball-0X9RR6', '.')] {'tries': 3, 'sudo': True, 'try_sleep': 1}

2018-10-19 15:14:39,149 - Execute[('mv', '/tmp/tmpQ7qH47', '/var/lib/ambari-agent/tmp/tez-native-tarball-staging/tez-native.tar.gz')] {}

2018-10-19 15:14:39,249 - HdfsResource['/hdp/apps/3.0.1.0-187/tez'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2018-10-19 15:14:39,251 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/tez?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpmg_jWK 2>/tmp/tmp9t96Lp''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:39,427 - call returned (0, '')

2018-10-19 15:14:39,427 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":16427,"group":"hdfs","length":0,"modificationTime":1539954686649,"owner":"hdfs","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:39,429 - HdfsResource['/hdp/apps/3.0.1.0-187/tez/tez.tar.gz'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'source': '/var/lib/ambari-agent/tmp/tez-native-tarball-staging/tez-native.tar.gz', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'replace_existing_files': False, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'group': 'hadoop', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2018-10-19 15:14:39,430 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/tez/tez.tar.gz?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpwoZtdR 2>/tmp/tmpymW0Y9''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:39,530 - call returned (0, '')

2018-10-19 15:14:39,531 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1539954686649,"blockSize":134217728,"childrenNum":0,"fileId":16428,"group":"hadoop","length":254014296,"modificationTime":1539954687534,"owner":"hdfs","pathSuffix":"","permission":"444","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2018-10-19 15:14:39,532 - Not replacing existing DFS file /hdp/apps/3.0.1.0-187/tez/tez.tar.gz which is different from /var/lib/ambari-agent/tmp/tez-native-tarball-staging/tez-native.tar.gz, due to replace_existing_files=False

2018-10-19 15:14:39,532 - Will attempt to copy tez tarball from /var/lib/ambari-agent/tmp/tez-native-tarball-staging/tez-native.tar.gz to DFS at /hdp/apps/3.0.1.0-187/tez/tez.tar.gz.

2018-10-19 15:14:39,532 - Called copy_to_hdfs tarball: pig

2018-10-19 15:14:39,533 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:39,533 - Tarball version was calcuated as 3.0.1.0-187. Use Command Version: True

2018-10-19 15:14:39,533 - Source file: /usr/hdp/3.0.1.0-187/pig/pig.tar.gz , Dest file in HDFS: /hdp/apps/3.0.1.0-187/pig/pig.tar.gz

2018-10-19 15:14:39,534 - HdfsResource['/hdp/apps/3.0.1.0-187/pig'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2018-10-19 15:14:39,535 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/pig?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpvU3av5 2>/tmp/tmpSXDAAo''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:39,628 - call returned (0, '')

2018-10-19 15:14:39,628 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":17502,"group":"hdfs","length":0,"modificationTime":1539955361371,"owner":"hdfs","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:39,630 - HdfsResource['/hdp/apps/3.0.1.0-187/pig/pig.tar.gz'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'source': '/usr/hdp/3.0.1.0-187/pig/pig.tar.gz', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'replace_existing_files': False, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'group': 'hadoop', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2018-10-19 15:14:39,632 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/pig/pig.tar.gz?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpg7wi8Y 2>/tmp/tmpp9sfvt''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:39,731 - call returned (0, '')

2018-10-19 15:14:39,731 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1539955361371,"blockSize":134217728,"childrenNum":0,"fileId":17503,"group":"hadoop","length":158791145,"modificationTime":1539955362484,"owner":"hdfs","pathSuffix":"","permission":"444","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2018-10-19 15:14:39,733 - DFS file /hdp/apps/3.0.1.0-187/pig/pig.tar.gz is identical to /usr/hdp/3.0.1.0-187/pig/pig.tar.gz, skipping the copying

2018-10-19 15:14:39,733 - Will attempt to copy pig tarball from /usr/hdp/3.0.1.0-187/pig/pig.tar.gz to DFS at /hdp/apps/3.0.1.0-187/pig/pig.tar.gz.

2018-10-19 15:14:39,733 - Called copy_to_hdfs tarball: hive

2018-10-19 15:14:39,733 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:39,734 - Tarball version was calcuated as 3.0.1.0-187. Use Command Version: True

2018-10-19 15:14:39,734 - Source file: /usr/hdp/3.0.1.0-187/hive/hive.tar.gz , Dest file in HDFS: /hdp/apps/3.0.1.0-187/hive/hive.tar.gz

2018-10-19 15:14:39,734 - HdfsResource['/hdp/apps/3.0.1.0-187/hive'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2018-10-19 15:14:39,736 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/hive?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpZWcAw3 2>/tmp/tmp3fIVfV''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:39,829 - call returned (0, '')

2018-10-19 15:14:39,830 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":17504,"group":"hdfs","length":0,"modificationTime":1539955366537,"owner":"hdfs","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:39,831 - HdfsResource['/hdp/apps/3.0.1.0-187/hive/hive.tar.gz'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'source': '/usr/hdp/3.0.1.0-187/hive/hive.tar.gz', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'replace_existing_files': False, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'group': 'hadoop', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2018-10-19 15:14:39,833 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/hive/hive.tar.gz?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmplQQ8so 2>/tmp/tmpJrn4zn''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:39,930 - call returned (0, '')

2018-10-19 15:14:39,930 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1539955366537,"blockSize":134217728,"childrenNum":0,"fileId":17505,"group":"hadoop","length":381339333,"modificationTime":1539955368164,"owner":"hdfs","pathSuffix":"","permission":"444","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2018-10-19 15:14:39,932 - DFS file /hdp/apps/3.0.1.0-187/hive/hive.tar.gz is identical to /usr/hdp/3.0.1.0-187/hive/hive.tar.gz, skipping the copying

2018-10-19 15:14:39,932 - Will attempt to copy hive tarball from /usr/hdp/3.0.1.0-187/hive/hive.tar.gz to DFS at /hdp/apps/3.0.1.0-187/hive/hive.tar.gz.

2018-10-19 15:14:39,932 - Called copy_to_hdfs tarball: sqoop

2018-10-19 15:14:39,932 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:39,933 - Tarball version was calcuated as 3.0.1.0-187. Use Command Version: True

2018-10-19 15:14:39,933 - Source file: /usr/hdp/3.0.1.0-187/sqoop/sqoop.tar.gz , Dest file in HDFS: /hdp/apps/3.0.1.0-187/sqoop/sqoop.tar.gz

2018-10-19 15:14:39,934 - HdfsResource['/hdp/apps/3.0.1.0-187/sqoop'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2018-10-19 15:14:39,935 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/sqoop?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmp123DmF 2>/tmp/tmpjTwLlt''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:40,032 - call returned (0, '')

2018-10-19 15:14:40,033 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":17506,"group":"hdfs","length":0,"modificationTime":1539955369535,"owner":"hdfs","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:40,034 - HdfsResource['/hdp/apps/3.0.1.0-187/sqoop/sqoop.tar.gz'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'source': '/usr/hdp/3.0.1.0-187/sqoop/sqoop.tar.gz', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'replace_existing_files': False, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'group': 'hadoop', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2018-10-19 15:14:40,036 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/sqoop/sqoop.tar.gz?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpK8ZNxC 2>/tmp/tmpDikwff''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:40,163 - call returned (0, '')

2018-10-19 15:14:40,164 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1539955369535,"blockSize":134217728,"childrenNum":0,"fileId":17507,"group":"hadoop","length":83672044,"modificationTime":1539955370296,"owner":"hdfs","pathSuffix":"","permission":"444","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2018-10-19 15:14:40,165 - DFS file /hdp/apps/3.0.1.0-187/sqoop/sqoop.tar.gz is identical to /usr/hdp/3.0.1.0-187/sqoop/sqoop.tar.gz, skipping the copying

2018-10-19 15:14:40,165 - Will attempt to copy sqoop tarball from /usr/hdp/3.0.1.0-187/sqoop/sqoop.tar.gz to DFS at /hdp/apps/3.0.1.0-187/sqoop/sqoop.tar.gz.

2018-10-19 15:14:40,165 - Called copy_to_hdfs tarball: hadoop_streaming

2018-10-19 15:14:40,165 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.1.0-187 -> 3.0.1.0-187

2018-10-19 15:14:40,166 - Tarball version was calcuated as 3.0.1.0-187. Use Command Version: True

2018-10-19 15:14:40,166 - Source file: /usr/hdp/3.0.1.0-187/hadoop-mapreduce/hadoop-streaming.jar , Dest file in HDFS: /hdp/apps/3.0.1.0-187/mapreduce/hadoop-streaming.jar

2018-10-19 15:14:40,167 - HdfsResource['/hdp/apps/3.0.1.0-187/mapreduce'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0555}

2018-10-19 15:14:40,168 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/mapreduce?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpMQ5r80 2>/tmp/tmpLBd9ek''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:40,306 - call returned (0, '')

2018-10-19 15:14:40,307 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":2,"fileId":16425,"group":"hdfs","length":0,"modificationTime":1539955370866,"owner":"hdfs","pathSuffix":"","permission":"555","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:40,308 - HdfsResource['/hdp/apps/3.0.1.0-187/mapreduce/hadoop-streaming.jar'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'source': '/usr/hdp/3.0.1.0-187/hadoop-mapreduce/hadoop-streaming.jar', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'replace_existing_files': False, 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hdfs', 'group': 'hadoop', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0444}

2018-10-19 15:14:40,310 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/hdp/apps/3.0.1.0-187/mapreduce/hadoop-streaming.jar?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpv7KKpQ 2>/tmp/tmpysKpsJ''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:40,443 - call returned (0, '')

2018-10-19 15:14:40,444 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1539955370866,"blockSize":134217728,"childrenNum":0,"fileId":17508,"group":"hadoop","length":176345,"modificationTime":1539955371313,"owner":"hdfs","pathSuffix":"","permission":"444","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2018-10-19 15:14:40,445 - DFS file /hdp/apps/3.0.1.0-187/mapreduce/hadoop-streaming.jar is identical to /usr/hdp/3.0.1.0-187/hadoop-mapreduce/hadoop-streaming.jar, skipping the copying

2018-10-19 15:14:40,445 - Will attempt to copy hadoop_streaming tarball from /usr/hdp/3.0.1.0-187/hadoop-mapreduce/hadoop-streaming.jar to DFS at /hdp/apps/3.0.1.0-187/mapreduce/hadoop-streaming.jar.

2018-10-19 15:14:40,446 - HdfsResource['/warehouse/tablespace/external/hive/sys.db/'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hive', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 01755}

2018-10-19 15:14:40,448 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/warehouse/tablespace/external/hive/sys.db/?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpHhJKJ2 2>/tmp/tmpFy9XC_''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:40,608 - call returned (0, '')

2018-10-19 15:14:40,608 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"aclBit":true,"blockSize":0,"childrenNum":46,"fileId":17383,"group":"hadoop","length":0,"modificationTime":1539955372851,"owner":"hive","pathSuffix":"","permission":"1755","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:40,610 - HdfsResource['/warehouse/tablespace/external/hive/sys.db/query_data/'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hive', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 01777}

2018-10-19 15:14:40,611 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/warehouse/tablespace/external/hive/sys.db/query_data/?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpUC4YVf 2>/tmp/tmpP0lLJ4''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:40,712 - call returned (0, '')

2018-10-19 15:14:40,712 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"aclBit":true,"blockSize":0,"childrenNum":1,"fileId":17384,"group":"hadoop","length":0,"modificationTime":1539954990329,"owner":"hive","pathSuffix":"","permission":"1777","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:40,713 - HdfsResource['/warehouse/tablespace/external/hive/sys.db/dag_meta'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hive', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 01777}

2018-10-19 15:14:40,715 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/warehouse/tablespace/external/hive/sys.db/dag_meta?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpM1dPhQ 2>/tmp/tmpDU7VWv''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:40,810 - call returned (0, '')

2018-10-19 15:14:40,811 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"aclBit":true,"blockSize":0,"childrenNum":0,"fileId":17509,"group":"hadoop","length":0,"modificationTime":1539955372026,"owner":"hive","pathSuffix":"","permission":"1777","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:40,812 - HdfsResource['/warehouse/tablespace/external/hive/sys.db/dag_data'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hive', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 01777}

2018-10-19 15:14:40,814 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/warehouse/tablespace/external/hive/sys.db/dag_data?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpw0UFhI 2>/tmp/tmpi748TA''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:40,912 - call returned (0, '')

2018-10-19 15:14:40,912 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"aclBit":true,"blockSize":0,"childrenNum":0,"fileId":17510,"group":"hadoop","length":0,"modificationTime":1539955372412,"owner":"hive","pathSuffix":"","permission":"1777","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:40,914 - HdfsResource['/warehouse/tablespace/external/hive/sys.db/app_data'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'owner': 'hive', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 01777}

2018-10-19 15:14:40,916 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/warehouse/tablespace/external/hive/sys.db/app_data?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmp5sZAqZ 2>/tmp/tmpS60pRQ''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:41,009 - call returned (0, '')

2018-10-19 15:14:41,009 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"aclBit":true,"blockSize":0,"childrenNum":1,"fileId":17511,"group":"hadoop","length":0,"modificationTime":1539955458667,"owner":"hive","pathSuffix":"","permission":"1777","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:41,011 - HdfsResource[None] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'action': ['execute'], 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp']}

2018-10-19 15:14:41,018 - Directory['/usr/lib/ambari-logsearch-logfeeder/conf'] {'create_parents': True, 'mode': 0755, 'cd_access': 'a'}

2018-10-19 15:14:41,019 - Generate Log Feeder config file: /usr/lib/ambari-logsearch-logfeeder/conf/input.config-hive.json

2018-10-19 15:14:41,019 - File['/usr/lib/ambari-logsearch-logfeeder/conf/input.config-hive.json'] {'content': Template('input.config-hive.json.j2'), 'mode': 0644}

2018-10-19 15:14:41,020 - Hive: Setup ranger: command retry not enabled thus skipping if ranger admin is down !

2018-10-19 15:14:41,021 - HdfsResource['/ranger/audit'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'user': 'hdfs', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'recursive_chmod': True, 'owner': 'hdfs', 'group': 'hdfs', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0755}

2018-10-19 15:14:41,022 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/ranger/audit?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpqBaOGx 2>/tmp/tmppEAvF5''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:41,118 - call returned (0, '')

2018-10-19 15:14:41,119 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":6,"fileId":16390,"group":"hdfs","length":0,"modificationTime":1539955373909,"owner":"hdfs","pathSuffix":"","permission":"755","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:41,120 - HdfsResource['/ranger/audit/hiveServer2'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'user': 'hdfs', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'recursive_chmod': True, 'owner': 'hive', 'group': 'hive', 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp'], 'mode': 0700}

2018-10-19 15:14:41,122 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://myhost.com:50070/webhdfs/v1/ranger/audit/hiveServer2?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpam8kTV 2>/tmp/tmpKFOTMl''] {'logoutput': None, 'quiet': False}

2018-10-19 15:14:41,215 - call returned (0, '')

2018-10-19 15:14:41,215 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":0,"fileId":17517,"group":"hive","length":0,"modificationTime":1539955373909,"owner":"hive","pathSuffix":"","permission":"700","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2018-10-19 15:14:41,217 - HdfsResource[None] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/3.0.1.0-187/hadoop/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://myhost.com:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': 'missing_principal', 'user': 'hdfs', 'action': ['execute'], 'hadoop_conf_dir': '/usr/hdp/3.0.1.0-187/hadoop/conf', 'immutable_paths': [u'/mr-history/done', u'/warehouse/tablespace/managed/hive', u'/warehouse/tablespace/external/hive', u'/app-logs', u'/tmp']}

2018-10-19 15:14:41,218 - call['ambari-python-wrap /usr/bin/hdp-select status hive-server2'] {'timeout': 20}

2018-10-19 15:14:41,258 - call returned (0, 'hive-server2 - 3.0.1.0-187')

2018-10-19 15:14:41,273 - Skipping Ranger API calls, as policy cache file exists for hive

2018-10-19 15:14:41,274 - If service name for hive is not created on Ranger Admin, then to re-create it delete policy cache file: /etc/ranger/hadoop01_hive/policycache/hiveServer2_hadoop01_hive.json

2018-10-19 15:14:41,276 - File['/usr/hdp/current/hive-server2/conf//ranger-security.xml'] {'content': InlineTemplate(...), 'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:41,277 - Writing File['/usr/hdp/current/hive-server2/conf//ranger-security.xml'] because contents don't match

2018-10-19 15:14:41,278 - Directory['/etc/ranger/hadoop01_hive'] {'owner': 'hive', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}

2018-10-19 15:14:41,279 - Directory['/etc/ranger/hadoop01_hive/policycache'] {'owner': 'hive', 'group': 'hadoop', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}

2018-10-19 15:14:41,280 - File['/etc/ranger/hadoop01_hive/policycache/hiveServer2_hadoop01_hive.json'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0644}

2018-10-19 15:14:41,281 - XmlConfig['ranger-hive-audit.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hive-server2/conf/', 'mode': 0744, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}

2018-10-19 15:14:41,297 - Generating config: /usr/hdp/current/hive-server2/conf/ranger-hive-audit.xml

2018-10-19 15:14:41,298 - File['/usr/hdp/current/hive-server2/conf/ranger-hive-audit.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0744, 'encoding': 'UTF-8'}

2018-10-19 15:14:41,319 - XmlConfig['ranger-hive-security.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hive-server2/conf/', 'mode': 0744, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}

2018-10-19 15:14:41,333 - Generating config: /usr/hdp/current/hive-server2/conf/ranger-hive-security.xml

2018-10-19 15:14:41,333 - File['/usr/hdp/current/hive-server2/conf/ranger-hive-security.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0744, 'encoding': 'UTF-8'}

2018-10-19 15:14:41,345 - XmlConfig['ranger-policymgr-ssl.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hive-server2/conf/', 'mode': 0744, 'configuration_attributes': {}, 'owner': 'hive', 'configurations': ...}

2018-10-19 15:14:41,360 - Generating config: /usr/hdp/current/hive-server2/conf/ranger-policymgr-ssl.xml

2018-10-19 15:14:41,360 - File['/usr/hdp/current/hive-server2/conf/ranger-policymgr-ssl.xml'] {'owner': 'hive', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0744, 'encoding': 'UTF-8'}

2018-10-19 15:14:41,369 - Execute[(u'/usr/hdp/3.0.1.0-187/ranger-hive-plugin/ranger_credential_helper.py', '-l', u'/usr/hdp/3.0.1.0-187/ranger-hive-plugin/install/lib/*', '-f', '/etc/ranger/hadoop01_hive/cred.jceks', '-k', 'sslKeyStore', '-v', [PROTECTED], '-c', '1')] {'logoutput': True, 'environment': {'JAVA_HOME': u'/usr/jdk64/jdk1.8.0_112'}, 'sudo': True}

Using Java:/usr/jdk64/jdk1.8.0_112/bin/java

Alias sslKeyStore created successfully!

2018-10-19 15:14:43,177 - Execute[(u'/usr/hdp/3.0.1.0-187/ranger-hive-plugin/ranger_credential_helper.py', '-l', u'/usr/hdp/3.0.1.0-187/ranger-hive-plugin/install/lib/*', '-f', '/etc/ranger/hadoop01_hive/cred.jceks', '-k', 'sslTrustStore', '-v', [PROTECTED], '-c', '1')] {'logoutput': True, 'environment': {'JAVA_HOME': u'/usr/jdk64/jdk1.8.0_112'}, 'sudo': True}

Using Java:/usr/jdk64/jdk1.8.0_112/bin/java

Alias sslTrustStore created successfully!

2018-10-19 15:14:44,394 - File['/etc/ranger/hadoop01_hive/cred.jceks'] {'owner': 'hive', 'group': 'hadoop', 'mode': 0640}

2018-10-19 15:14:44,396 - File['/etc/ranger/hadoop01_hive/.cred.jceks.crc'] {'owner': 'hive', 'only_if': 'test -e /etc/ranger/hadoop01_hive/.cred.jceks.crc', 'group': 'hadoop', 'mode': 0640}

2018-10-19 15:14:44,404 - call['ambari-sudo.sh su hive -l -s /bin/bash -c 'cat /var/run/hive/hive-server.pid 1>/tmp/tmpV9BE8F 2>/tmp/tmpweqPCn''] {'quiet': False}

2018-10-19 15:14:44,528 - call returned (0, '')

2018-10-19 15:14:44,529 - get_user_call_output returned (0, u'49080', u'')

2018-10-19 15:14:44,530 - call['ambari-sudo.sh su hive -l -s /bin/bash -c 'hive --config /usr/hdp/current/hive-server2/conf/ --service metatool -listFSRoot' 2>/dev/null | grep hdfs:// | cut -f1,2,3 -d '/' | grep -v 'hdfs://myhost.com:8020' | head -1'] {}

2018-10-19 15:15:13,029 - call returned (0, '')

2018-10-19 15:15:13,030 - Execute['/var/lib/ambari-agent/tmp/start_hiveserver2_script /var/log/hive/hive-server2.out /var/log/hive/hive-server2.err /var/run/hive/hive-server.pid /usr/hdp/current/hive-server2/conf/ /etc/tez/conf'] {'environment': {'HIVE_BIN': 'hive', 'JAVA_HOME': u'/usr/jdk64/jdk1.8.0_112', 'HADOOP_HOME': u'/usr/hdp/current/hadoop-client'}, 'not_if': 'ls /var/run/hive/hive-server.pid >/dev/null 2>&1 && ps -p 49080 >/dev/null 2>&1', 'user': 'hive', 'path': [u'/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin:/var/lib/ambari-agent:/var/lib/ambari-agent:/usr/hdp/current/hive-server2/bin:/usr/hdp/3.0.1.0-187/hadoop/bin']}

2018-10-19 15:15:13,202 - Execute['/usr/jdk64/jdk1.8.0_112/bin/java -cp /usr/lib/ambari-agent/DBConnectionVerification.jar:/usr/hdp/current/hive-server2/lib/mysql-connector-java-8.0.12.jar org.apache.ambari.server.DBConnectionVerification 'jdbc:mysql://myhost.com/hive' hive [PROTECTED] com.mysql.jdbc.Driver'] {'path': ['/usr/sbin:/sbin:/usr/local/bin:/bin:/usr/bin'], 'tries': 5, 'try_sleep': 10}

2018-10-19 15:15:15,769 - call['/usr/hdp/current/zookeeper-client/bin/zkCli.sh -server myhost.com:2181 ls /hiveserver2 | grep 'serverUri=''] {}

2018-10-19 15:15:17,123 - call returned (1, '')

2018-10-19 15:15:17,124 - Will retry 29 time(s), caught exception: ZooKeeper node /hiveserver2 is not ready yet. Sleeping for 10 sec(s)

2018-10-19 15:15:27,136 - call['/usr/hdp/current/zookeeper-client/bin/zkCli.sh -server myhost.com:2181 ls /hiveserver2 | grep 'serverUri=''] {}

2018-10-19 15:15:28,075 - call returned (1, '')

2018-10-19 15:15:28,079 - Will retry 28 time(s), caught exception: ZooKeeper node /hiveserver2 is not ready yet. Sleeping for 10 sec(s)

2018-10-19 15:15:38,090 - call['/usr/hdp/current/zookeeper-client/bin/zkCli.sh -server myhost.com:2181 ls /hiveserver2 | grep 'serverUri=''] {}