How can I change spark package repository?

- Labels: Apache Spark

Created on 05-15-2017 06:41 AM - edited 08-17-2019 05:48 PM

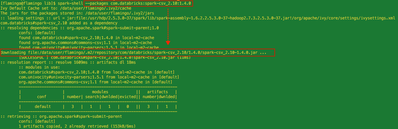

When I run spark-shell with the --packages option (for example, to pull in a Databricks package), Spark downloads the package libraries from the Maven repository on the internet.

In an offline environment, that does not work.

How can I change or add a Spark package repository?

Created 05-15-2017 07:01 AM

Hm, I just fixed this issue by passing multiple jars with the --jars option.

Created 05-17-2017 08:28 PM

Did you try the multiple jars option?

Created 05-17-2017 11:12 PM

Yes I did.

Created 08-11-2017 01:12 AM

In my case, I navigated to the folder /data/user/flamingo/.ivy2/jars, which is where Spark reports storing the package jars when it starts:

...

Ivy Default Cache set to: /data/user/flamingo/.ivy2/cache

The jars for the packages stored in: /data/user/flamingo/.ivy2/jars

...

Copy all the jars from that folder to the directory where you want to keep them, then run the Spark command like this:

SPARK_MAJOR_VERSION=2 bin/spark-shell --jars="/path/to/jars"

After that, it seemed to work!
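One caveat for anyone following along: as far as I know, --jars expects a comma-separated list of jar files, and the Spark documentation notes that directory expansion does not work with it. If passing the bare directory does not pick up the jars on your version, a small helper like the one below may help. This is only a sketch: `join_jars` and `JAR_DIR` are names I made up for illustration, and `/path/to/jars` is the same placeholder as in the command above.

```shell
# Build a comma-separated list of every .jar file in a directory.
# join_jars is a hypothetical helper, not part of Spark.
join_jars() {
  dir="$1"
  list=""
  for jar in "$dir"/*.jar; do
    [ -e "$jar" ] || continue        # skip the literal pattern when no jars exist
    list="${list:+$list,}$jar"       # prepend a comma only after the first entry
  done
  printf '%s\n' "$list"
}

# JAR_DIR is an assumed variable; point it at the directory you copied the jars into.
JAR_DIR="${JAR_DIR:-/path/to/jars}"
JARS="$(join_jars "$JAR_DIR")"

# Print the command instead of running it, so the jar list can be checked first.
echo "SPARK_MAJOR_VERSION=2 bin/spark-shell --jars $JARS"
```

Once the printed command looks right, it can be run as-is from the Spark home directory.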