How can I get cdsw usage stats

Created 10-04-2021 05:35 AM

I need to produce stats on CDSW resource usage by data scientists. See below:

| User | Number of sessions | Total CPU | Total data processed |

Created 10-05-2021 11:52 PM

Hi @GregoryG

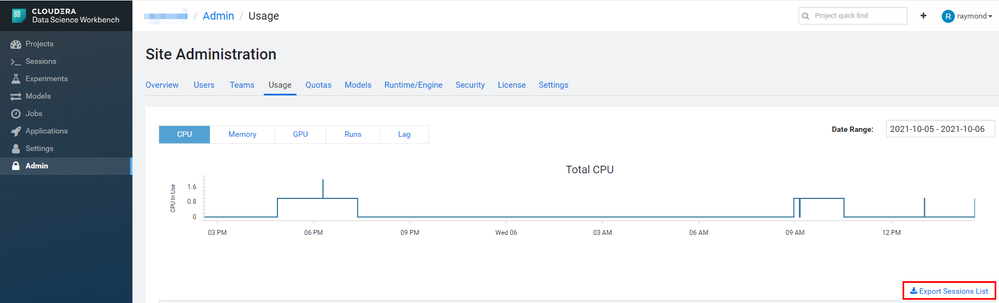

| User | Number of sessions | Total CPU |

The above columns can be confirmed by the site administration -> usage report.

Refer to the following screenshot:

But the last one is impossible to be gathered directly by CDSW.

And I think it's hard to do that. One possible way to achieve that is to deploy a service to capture the metrics from Spark History Server API and CDSW's API.

Specifically, users generate two kinds of workloads through CDSW: Spark workloads, and engines running in CDSW's local Kubernetes cluster.

Spark workloads run on the CDP cluster. The amount of data they process is recorded in the event log and can be viewed through the Spark History Server web UI.

The Spark History Server also exposes a REST API, so you can look for an existing tool that pulls data from it, or write a script to total the data yourself.
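As a rough illustration of the "write a script" option, here is a minimal sketch that totals the `inputBytes` stage metric per Spark user from the Spark History Server REST API (`/api/v1/applications` and `/api/v1/applications/[app-id]/stages`). The host name and port are placeholders, the helper names are made up for this example, and treating `inputBytes` as "data processed" is an assumption: it only counts bytes read from storage, not shuffle or output.

```python
import json
from collections import defaultdict
from urllib.request import urlopen

# Assumption: placeholder address; replace with your own Spark History Server.
HISTORY_SERVER = "http://spark-history.example.com:18088"


def fetch_json(url):
    """GET a URL and decode the JSON response body."""
    with urlopen(url, timeout=30) as resp:
        return json.load(resp)


def stage_urls(base, app):
    """Return (spark_user, stages_url) for every attempt of one application.

    Applications that report an attemptId (e.g. YARN cluster mode) need it
    in the path; the others are addressed by application id alone.
    """
    urls = []
    for attempt in app.get("attempts", []):
        attempt_id = attempt.get("attemptId")
        path = f"{app['id']}/{attempt_id}" if attempt_id else app["id"]
        user = attempt.get("sparkUser", "unknown")
        urls.append((user, f"{base}/api/v1/applications/{path}/stages"))
    return urls


def bytes_read_per_user(apps, fetch=fetch_json, base=HISTORY_SERVER):
    """Sum inputBytes over all listed stages, grouped by Spark user.

    Retried stage attempts appear as separate entries, so their reads
    are counted as well.
    """
    totals = defaultdict(int)
    for app in apps:
        for user, url in stage_urls(base, app):
            for stage in fetch(url):
                totals[user] += stage.get("inputBytes", 0)
    return dict(totals)


def main():
    # List completed applications, then print one total per user.
    apps = fetch_json(f"{HISTORY_SERVER}/api/v1/applications?status=completed")
    for user, total in sorted(bytes_read_per_user(apps).items()):
        print(f"{user}\t{total}")
```

Calling `main()` against a reachable History Server prints one line per user. The `fetch` parameter is there so the aggregation can be tried with canned data before pointing it at a real server. On a secured cluster the plain `urlopen` call will most likely need to be replaced with an authenticated client.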

CDSW's local engines are usually used to run machine learning and deep learning workloads. My guess is that the amount of data these workloads process is not recorded by CDSW but, if anywhere, by the specific ML or DL framework in use. Those frameworks may have a component that plays a role similar to the Spark History Server, so that is where I would investigate.

Created 10-10-2021 11:29 PM

@GregoryG, has the reply helped resolve your issue? If so, please mark the appropriate reply as the solution, as it will make it easier for others to find the answer in the future.

Regards,

Vidya Sargur, Community Manager
