Cloudera Community › Support Questions


How can I limit the amount of YARN memory allocated to the Spark interpreter in Zeppelin?

Labels: Apache Spark, Apache YARN, Apache Zeppelin

Created 07-27-2016 04:09 PM


Hi All,

I notice that each time I run a Spark script through Zeppelin, it uses the full amount of YARN memory available in my cluster. Is there a way to limit or manage memory consumption? Regardless of what the job actually needs, it always seems to use 100% of the cluster.

Thanks,
M

Created on 07-27-2016 06:44 PM - edited 08-18-2019 05:44 AM


In general, you can change the executor memory in Zeppelin by adding the following line to zeppelin-env.sh:

```shell
export ZEPPELIN_JAVA_OPTS="-Dspark.executor.memory=1g"
```

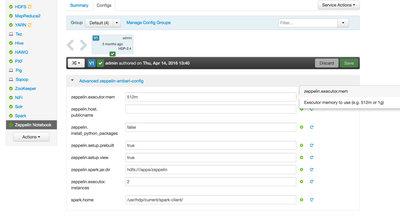

If you are installing Zeppelin via Ambari, you can set this with the zeppelin.executor.mem property (see screenshot).

You can also follow this tutorial, which walks through creating a YARN queue and configuring Zeppelin to use it:

https://github.com/hortonworks-gallery/ambari-zeppelin-service/blob/master/README.md
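
Capping executor memory and the executor count bounds what the Spark interpreter asks YARN for. The rough sizing sketch below shows the arithmetic. It assumes (not stated in this thread) that executor overhead follows Spark's default of max(384 MB, 10% of executor memory) and that YARN rounds each container up to a multiple of yarn.scheduler.minimum-allocation-mb (1024 MB by default); check both against your Spark and YARN versions. The ApplicationMaster container is not counted.

```shell
#!/usr/bin/env bash
# Rough estimate of the YARN memory taken by Spark executors.
# Assumption: overhead = max(384 MB, 10% of executor memory), and YARN rounds
# each container request up to a multiple of the minimum allocation.

# container_mb EXECUTOR_MB MIN_ALLOC_MB -> size of one executor container in MB
container_mb() {
  local mem=$1 min_alloc=$2
  local overhead=$(( mem / 10 ))
  if (( overhead < 384 )); then
    overhead=384
  fi
  local requested=$(( mem + overhead ))
  # Round up to the next multiple of the YARN minimum allocation.
  echo $(( (requested + min_alloc - 1) / min_alloc * min_alloc ))
}

# total_executor_mb EXECUTOR_MB INSTANCES MIN_ALLOC_MB -> memory for all executors
total_executor_mb() {
  local per_container
  per_container=$(container_mb "$1" "$3")
  echo $(( per_container * $2 ))
}

# Example: 2 executors at 512m with a 1024 MB minimum allocation.
echo "per executor: $(container_mb 512 1024) MB"
echo "all executors: $(total_executor_mb 512 2 1024) MB"
```

With these assumptions, a 512m executor requests 896 MB, which YARN rounds up to 1024 MB, so two executors hold about 2048 MB. A 1g executor already rounds up to 2048 MB per container.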


Created 07-27-2016 06:47 PM


Here's one way: edit ZEPPELIN_JAVA_OPTS in zeppelin-env.sh. You can also use dynamic allocation (read this) if you want executors to scale up and down with the workload.

```shell
[root@craig-a-1 conf]# grep "ZEPPELIN_JAVA_OPTS" /usr/hdp/current/zeppelin-server/lib/conf/zeppelin-env.sh
# Additional jvm options. for example, export ZEPPELIN_JAVA_OPTS="-Dspark.executor.memory=8g -Dspark.cores.max=16"
export ZEPPELIN_JAVA_OPTS="-Dhdp.version=2.4.2.0-258 -Dspark.executor.memory=512m -Dspark.executor.instances=2 -Dspark.yarn.queue=default"
# zeppelin interpreter process jvm options. Default = ZEPPELIN_JAVA_OPTS
```
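
If you go the dynamic allocation route, note that spark.dynamicAllocation.maxExecutors defaults to unbounded, so it is the setting that actually caps how much of the cluster the interpreter can take. The sketch below builds such a ZEPPELIN_JAVA_OPTS value. It assumes the YARN NodeManagers already run Spark's external shuffle service, which dynamic allocation on YARN requires. The values are illustrative, spark.executor.instances is deliberately left out, and on HDP you would keep the existing -Dhdp.version flag shown above.

```shell
#!/usr/bin/env bash
# Sketch: ZEPPELIN_JAVA_OPTS with dynamic allocation enabled but capped.
# Assumption: the external shuffle service is configured on every NodeManager.

# build_dynalloc_opts EXECUTOR_MEM MIN_EXECS MAX_EXECS QUEUE -> space-joined flags
build_dynalloc_opts() {
  local executor_mem=$1 min_execs=$2 max_execs=$3 queue=$4
  # maxExecutors is the real ceiling; without it Spark may keep requesting executors.
  local opts=(
    "-Dspark.executor.memory=${executor_mem}"
    "-Dspark.dynamicAllocation.enabled=true"
    "-Dspark.shuffle.service.enabled=true"
    "-Dspark.dynamicAllocation.minExecutors=${min_execs}"
    "-Dspark.dynamicAllocation.maxExecutors=${max_execs}"
    "-Dspark.yarn.queue=${queue}"
  )
  echo "${opts[*]}"
}

# Print the export line to place in zeppelin-env.sh (illustrative values).
echo "export ZEPPELIN_JAVA_OPTS=\"$(build_dynalloc_opts 512m 0 2 default)\""
```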

Created 02-12-2017 11:39 PM


I tried the following two suggestions independently (full restarts of Zeppelin each time) with no luck.

First, via Ambari, I changed zeppelin.executor.mem from 512m to 256m and zeppelin.executor.instances from 2 to 1.

Then, again via Ambari, I updated the following snippet of zeppelin_env_content from

```shell
export ZEPPELIN_JAVA_OPTS="-Dhdp.version={{full_stack_version}} -Dspark.executor.memory={{executor_mem}} -Dspark.executor.instances={{executor_instances}} -Dspark.yarn.queue={{spark_queue}}"
```

to

```shell
export ZEPPELIN_JAVA_OPTS="-Dhdp.version={{full_stack_version}} -Dspark.executor.memory=512m -Dspark.executor.instances=2 -Dspark.yarn.queue={{spark_queue}}"
```

Both times, my little 2.5 Sandbox was still running at full throttle as soon as I ran some code in Zeppelin. If anyone can see what I missed, please advise.
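
When an Ambari change seems to have no effect, one thing worth checking is whether the new values actually reached the rendered zeppelin-env.sh. The sketch below prints the -Dspark.* flags from uncommented ZEPPELIN_JAVA_OPTS export lines. The default path is an assumption taken from the HDP 2.4 layout earlier in this thread and may differ on a 2.5 Sandbox; pass the real path as the first argument.

```shell
#!/usr/bin/env bash
# Sketch: list the Spark flags set in a rendered zeppelin-env.sh.
# Assumption: the default path below (HDP 2.4 layout) may not match your install.

# show_spark_opts FILE -> one -Dspark.*=value flag per line
show_spark_opts() {
  local env_file=$1
  # Skip commented lines; if several export lines exist, the last one wins at runtime.
  grep -E '^[[:space:]]*export[[:space:]]+ZEPPELIN_JAVA_OPTS=' "$env_file" \
    | grep -oE -- '-Dspark\.[A-Za-z.]+=[^ "]*'
}

env_file="${1:-/usr/hdp/current/zeppelin-server/lib/conf/zeppelin-env.sh}"
if [[ -r "$env_file" ]]; then
  show_spark_opts "$env_file"
fi
```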

Created 02-13-2017 02:17 AM


You could also define a dedicated queue for Zeppelin/Spark in the Capacity Scheduler configuration and cap it, for example at 50% of total cluster resources.
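
A minimal sketch of the Capacity Scheduler entries for such a queue, assuming a hypothetical queue named zeppelin alongside the existing default queue. capacity is the queue's guaranteed share, while maximum-capacity is the hard ceiling, so both are set to the same percentage here. Capacities of sibling queues under root must add up to 100.

```shell
#!/usr/bin/env bash
# Sketch: capacity-scheduler properties for a capped "zeppelin" queue.
# Assumption: the queue name "zeppelin" is hypothetical; only root.default exists today.

# queue_config ZEPPELIN_PERCENT -> key=value lines for capacity-scheduler
queue_config() {
  local zep_pct=$1
  local default_pct=$(( 100 - zep_pct ))
  cat <<EOF
yarn.scheduler.capacity.root.queues=default,zeppelin
yarn.scheduler.capacity.root.default.capacity=${default_pct}
yarn.scheduler.capacity.root.zeppelin.capacity=${zep_pct}
yarn.scheduler.capacity.root.zeppelin.maximum-capacity=${zep_pct}
EOF
}

queue_config 50
```

After adding these lines (for example in Ambari's Capacity Scheduler settings), the queues can be reloaded with `yarn rmadmin -refreshQueues`, and Zeppelin can be pointed at the new queue with `-Dspark.yarn.queue=zeppelin` in ZEPPELIN_JAVA_OPTS.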

Created 02-13-2017 02:36 PM


Good point. For my Sandbox testing, I decided to just follow the steps in http://stackoverflow.com/questions/40550011/zeppelin-how-to-restart-sparkcontext-in-zeppelin to stop the SparkContext whenever I need to do something outside of Zeppelin. It's not ideal, but it works well enough for the multi-framework prototyping I'm doing.
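
As an alternative to stopping the SparkContext from a notebook paragraph, Zeppelin's REST API exposes an endpoint that restarts an interpreter setting, which should also release the interpreter's YARN application. The sketch below assumes a placeholder host, port 9995 (a common Zeppelin port on HDP) and a made-up setting ID; real IDs can be listed with GET /api/interpreter/setting, and a server with authentication enabled also needs a session cookie. Set DRY_RUN to print the command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch: restart a Zeppelin interpreter setting over the REST API.
# Assumptions: host, port and setting ID are placeholders; no authentication.

# restart_interpreter HOST PORT SETTING_ID
restart_interpreter() {
  local host=$1 port=$2 setting_id=$3
  local url="http://${host}:${port}/api/interpreter/setting/restart/${setting_id}"
  if [[ -n "${DRY_RUN:-}" ]]; then
    # Only show what would be sent.
    echo "curl -s -X PUT ${url}"
  else
    curl -s -X PUT "$url"
  fi
}

# Hypothetical values; the setting ID is made up.
DRY_RUN=1 restart_interpreter localhost 9995 2C4U48MY3
```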