How does virtualization affect "python yarn-utils.py" output and settings?

- Labels: Apache YARN

Created 02-22-2016 08:28 PM


We are running an 8-node virtualized cluster with 5 datanodes. Each datanode is allocated 8 vcores and 54 GB of RAM, and uses shared SAN storage. The output of yarn-utils (v=8, m=54, d=4) is:

yarn.scheduler.minimum-allocation-mb=6656

yarn.scheduler.maximum-allocation-mb=53248

yarn.nodemanager.resource.memory-mb=53248

mapreduce.map.memory.mb=6656

mapreduce.map.java.opts=-Xmx5324m

mapreduce.reduce.memory.mb=6656

mapreduce.reduce.java.opts=-Xmx5324m

yarn.app.mapreduce.am.resource.mb=6656

yarn.app.mapreduce.am.command-opts=-Xmx5324m

mapreduce.task.io.sort.mb=2662

I have a couple of questions: 1) What do you put for the disks value when the datanode disks are on shared SAN storage? 2) The maximum container size only shows 8 GB even though each node is assigned 54 GB. Does this have something to do with overcommitment in the virtual environment? yarn-utils wants it set to 53 GB.

Created on 02-22-2016 09:32 PM - edited 08-18-2019 05:43 AM


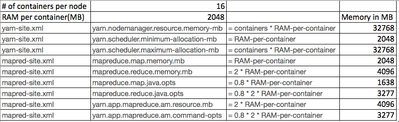

Scott, there are two layers of memory settings that you need to be aware of: NodeManager and containers. The NodeManager setting is the total memory a node can provide to containers. You generally want more containers, each with a reasonable amount of memory. A rule of thumb is 2048 MB of memory per container, so with 53 GB of available memory per node you get about 26 containers per node to do the job. 8 GB of memory per container is too big, IMO.

We don't know how many disks from the SAN storage are used by Hadoop. You can disregard the disks value in the equation, since the formula is typically meant for on-premise clusters. You can still calculate the memory settings manually, because you have the number of containers per node and the memory per container (26 and 2048 MB, respectively). Use the formula in the attached image and just replace the number of containers per node and the RAM per container with your values. Please note that 53 GB of available RAM per VM is too much given that the VM only has 54 GB of RAM. Typically you would set aside about 8 GB for other processes (OS, HBase, etc.), which leaves just 46 GB of available memory per node.
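To make the manual calculation concrete, here is a rough Python sketch. It is not the yarn-utils script and not the formula from the image. The function name `manual_yarn_settings` is made up for illustration, and the ratios (JVM heap at 0.8 of the container size, sort buffer at 0.4) are assumptions inferred from the yarn-utils output quoted in the question, where 6656 MB containers came with `-Xmx5324m` and `io.sort.mb=2662`. It also assumes map, reduce, and AM containers all use the same size, as in that output.

```python
def manual_yarn_settings(available_mb, container_mb):
    """Derive memory settings from a per-node budget and a container size (both in MB)."""
    containers = available_mb // container_mb   # whole containers that fit on one node
    node_mb = containers * container_mb         # memory the NodeManager offers to YARN
    heap_mb = container_mb * 8 // 10            # assumed ratio: heap is 0.8 of the container
    sort_mb = container_mb * 4 // 10            # assumed ratio: sort buffer is 0.4 of the container
    return {
        "yarn.scheduler.minimum-allocation-mb": container_mb,
        "yarn.scheduler.maximum-allocation-mb": node_mb,
        "yarn.nodemanager.resource.memory-mb": node_mb,
        "mapreduce.map.memory.mb": container_mb,
        "mapreduce.map.java.opts": f"-Xmx{heap_mb}m",
        "mapreduce.reduce.memory.mb": container_mb,
        "mapreduce.reduce.java.opts": f"-Xmx{heap_mb}m",
        "yarn.app.mapreduce.am.resource.mb": container_mb,
        "yarn.app.mapreduce.am.command-opts": f"-Xmx{heap_mb}m",
        "mapreduce.task.io.sort.mb": sort_mb,
    }


if __name__ == "__main__":
    # 54 GB VM minus about 8 GB reserved for OS/HBase leaves 46 GB; 2048 MB per container.
    for key, value in manual_yarn_settings(46 * 1024, 2048).items():
        print(f"{key}={value}")
```

With 46 GB available and 2048 MB containers this gives 23 containers per node. Treat the numbers as a starting baseline to tune, not as definitive values.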

Hope this helps.

Created 02-22-2016 08:54 PM


It's making more sense. My yarn.nodemanager.resource.memory-mb was only set to 16 GB, which restricted my min and max settings. It's still not clear what to set disks to in a virtual environment in order to get a good baseline setting.
