Cloudera Community: Support Questions

How to Transform an Avro to a Super-Schema Avro?

Labels: Apache NiFi

Created 06-07-2017 01:52 AM

Assume a data file written with the Avro schema "avro schema short".

Assume an "avro schema full" that includes every field of "avro schema short" plus some additional fields that have default values.

The short-schema Avro can have its fields in any order: field-wise it is a subset of the super-schema, and the fields are not necessarily in the same order in the two schemas.

How would one use NiFi's out-of-the-box processors to transform the first data file into "avro schema full", setting the additional fields to the default values specified in "avro schema full"? A creative solution involving one of the Execute... or Scripted... processors would also work.

Created on 06-07-2017 02:10 PM - edited 08-17-2019 10:48 PM

I agree with Matt's answer. I had been working on a template to achieve this before I saw it, so I figured I would post it anyway.

I made up the following two schemas:

{"name": "shortSchema",

"namespace": "nifi",

"type": "record",

"fields": [

{ "name": "a", "type": "string" },

{ "name": "b", "type": "string" }

]}{"name": "fullSchema",

"namespace": "nifi",

"type": "record",

"fields": [

{ "name": "c", "type": ["null", "string"], "default" : "default value for field c" },

{ "name": "d", "type": ["null", "string"], "default" : "default value for field d" },

{ "name": "a", "type": "string" },

{ "name": "b", "type": "string" }

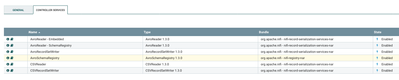

]}Then made the following flow:

This flow does the following:

- Generates a CSV with two rows and the columns a, b, c

- Reads the CSV using the shortSchema and writes as Avro with the shortSchema

- Updates the schema.name attribute to fullSchema

- Reads the Avro using the embedded schema (shortSchema) and writes it using the schema from schema.name (fullSchema)

- Reads the Avro using the embedded schema (fullSchema) and writes it back to CSV
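The schema.name step works because the second writer looks its schema up by name instead of reusing the schema embedded in the Avro. A minimal Python sketch of that lookup, assuming a plain dict stands in for the schema registry (in NiFi this role is played by a schema registry controller service, for example AvroSchemaRegistry); `SCHEMA_REGISTRY` and `resolve_writer_schema` are hypothetical names, not NiFi APIs:

```python
# Hypothetical stand-in for a schema registry: schema name -> Avro schema (as a dict).
SHORT_SCHEMA = {
    "name": "shortSchema", "namespace": "nifi", "type": "record",
    "fields": [
        {"name": "a", "type": "string"},
        {"name": "b", "type": "string"},
    ],
}
FULL_SCHEMA = {
    "name": "fullSchema", "namespace": "nifi", "type": "record",
    "fields": [
        {"name": "c", "type": ["null", "string"], "default": "default value for field c"},
        {"name": "d", "type": ["null", "string"], "default": "default value for field d"},
        {"name": "a", "type": "string"},
        {"name": "b", "type": "string"},
    ],
}
SCHEMA_REGISTRY = {s["name"]: s for s in (SHORT_SCHEMA, FULL_SCHEMA)}


def resolve_writer_schema(attributes, registry=SCHEMA_REGISTRY):
    """Look up the writer schema named by the flowfile's schema.name attribute."""
    name = attributes.get("schema.name")
    if name not in registry:
        raise KeyError(f"no schema registered under {name!r}")
    return registry[name]


# Start with schema.name pointing at the short schema, then mimic the
# attribute-update step so the next writer picks up fullSchema instead.
attributes = {"schema.name": "shortSchema"}
attributes["schema.name"] = "fullSchema"
writer_schema = resolve_writer_schema(attributes)
print([f["name"] for f in writer_schema["fields"]])  # ['c', 'd', 'a', 'b']
```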

At the end, the printed CSV has the new fields filled in with their default values.

The CSV steps are only there to make it easy to see what is going on; they are not required if you already have Avro data.
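What the short-to-full write does to each record can be sketched in plain Python. This is a minimal illustration of the idea, not NiFi's implementation: values are matched by field name (so input order does not matter), output follows the full schema's field order, and fields absent from the input take the schema's "default". `to_full_record` is a hypothetical helper.

```python
FULL_SCHEMA = {
    "name": "fullSchema", "namespace": "nifi", "type": "record",
    "fields": [
        {"name": "c", "type": ["null", "string"], "default": "default value for field c"},
        {"name": "d", "type": ["null", "string"], "default": "default value for field d"},
        {"name": "a", "type": "string"},
        {"name": "b", "type": "string"},
    ],
}


def to_full_record(record, full_schema):
    """Rebuild `record` in full-schema field order, filling absent fields from "default".

    Input fields that the full schema does not declare are dropped.
    """
    out = {}
    for field in full_schema["fields"]:
        name = field["name"]
        if name in record:
            out[name] = record[name]  # value carried over from the short-schema record
        elif "default" in field:
            out[name] = field["default"]  # field exists only in the full schema
        else:
            raise ValueError(f"field {name!r} is missing and has no default")
    return out


# The second record lists its fields in a different order; matching is by name.
short_records = [{"a": "a1", "b": "b1"}, {"b": "b2", "a": "a2"}]
full_records = [to_full_record(r, FULL_SCHEMA) for r in short_records]
for r in full_records:
    print(r)
```

One caveat on the example schemas: the Avro specification requires a union field's default to match the first type in the union, so a strict Avro library may reject a string default on `["null", "string"]`; declaring those fields as `["string", "null"]` keeps the same defaults and satisfies the spec.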

Here is a template of the flow: convert-avro-short-to-full.xml

Created 06-07-2017 01:33 PM

As of NiFi 1.2.0, you can use ConvertRecord, after configuring an AvroReader with the short schema and an AvroRecordSetWriter with the super schema.

Prior to NiFi 1.2.0, you may be able to use ConvertAvroSchema, using the super-schema as both the Input Schema and Output Schema property values (if you use the short schema as the Input Schema, the processor complains about the unmapped fields in the super-schema). I tried this by adding a single field to my "short schema" to make the "super schema":

    {"name": "extra_field", "type": "string", "default": "Hello"}

I'm not sure whether this will work with arbitrary supersets, but it is worth a try 🙂
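Whether a super-schema can be filled from short-schema data comes down to Avro's schema-resolution rule: a field in the reading schema that the writing schema lacks must have a default, otherwise resolution fails. A minimal Python sketch of building a super-schema and checking that rule, assuming schemas are plain dicts and reusing the two-field shortSchema from the template answer; `make_super_schema`, `fields_without_source` and the name "superSchema" are hypothetical, the check compares names only (not types), and it does not reproduce ConvertAvroSchema's own validation:

```python
import copy

SHORT_SCHEMA = {
    "name": "shortSchema", "namespace": "nifi", "type": "record",
    "fields": [
        {"name": "a", "type": "string"},
        {"name": "b", "type": "string"},
    ],
}


def make_super_schema(short_schema, extra_fields, name=None):
    """Copy the short schema and append extra fields (each should carry a default)."""
    schema = copy.deepcopy(short_schema)  # leave the original schema untouched
    schema["fields"].extend(copy.deepcopy(extra_fields))
    if name is not None:
        schema["name"] = name
    return schema


def fields_without_source(input_schema, output_schema):
    """Output fields that the input schema lacks and that have no default.

    An empty list means every output field can be filled either from the
    input record or from a default (field names only; types are not compared).
    """
    input_names = {f["name"] for f in input_schema["fields"]}
    return [f["name"] for f in output_schema["fields"]
            if f["name"] not in input_names and "default" not in f]


super_schema = make_super_schema(
    SHORT_SCHEMA,
    [{"name": "extra_field", "type": "string", "default": "Hello"}],
    name="superSchema",
)
bad_schema = make_super_schema(SHORT_SCHEMA, [{"name": "e", "type": "string"}])

print(fields_without_source(SHORT_SCHEMA, super_schema))  # []
print(fields_without_source(SHORT_SCHEMA, bad_schema))    # ['e']
```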

Created 06-07-2017 08:48 PM

Thank you so much.
