Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: How to avoid duplicate row insertion in Hive?

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

How to avoid duplicate row insertion in Hive?

- Labels:

-

Apache Hive

Created on

12-20-2019

04:27 AM

- last edited on

12-20-2019

06:22 AM

by

VidyaSargur

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

CREATE TABLE Test( recordId String,

Advertiser_id STRING ,

Advertiser_name STRING,

PRIMARY KEY (recordId) DISABLE NOVALIDATE);

insert into Test values('1','prakash','vtv');

insert into Test values('1','prakash','vtv');

Test.recordid | Test.advertiser_id | Test.advertiser_name |

+-----------------------------+----------------------------------+------------------------------------+

| 1 | prakash | vtv |

| 1 | prakash | vtv |

+-----------------------------+----------------------------------+------------------------------------+

As per RDBMS terms Primary key constraint shouldn't allow me to create duplicate record with the same value.

How to achieve this in Hive?

Created on 12-22-2019 10:13 AM - edited 12-22-2019 10:17 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That by design:

A NOVALIDATE constraint is basically a constraint that can be enabled but for which hive will not check the existing data to determine whether there might be data that currently violate the constraint.

This is useful if we know there’s data that violates the constraint but we want to quickly put on a constraint to prevent further violations, with the intention to clean up any possible violations at some future point in time.

It’s also potentially useful if we know the data is clean and so want to prevent the potentially significant overheads of hive having to check all the data to ensure there are indeed no violations.

Created 12-23-2019 02:06 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Shelton Thanks for your response and understood your point Hive will not check existing data in case if NOVALIDATE constraint is specified.

Is there any other way that i can insert only unique values for a particular column in Hive?

Created 12-26-2019 06:54 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There are few BI tools which can be used as a layer on top of Hive which can make use of pk constraints.

AFAIK Hive does not check/validate pk constraints

https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/using-hiveql/content/hive_constraints.html

It can be added in table definition so that BI tools can make efficient query decisions and perform checks on key constraints.

Created 12-26-2019 12:30 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

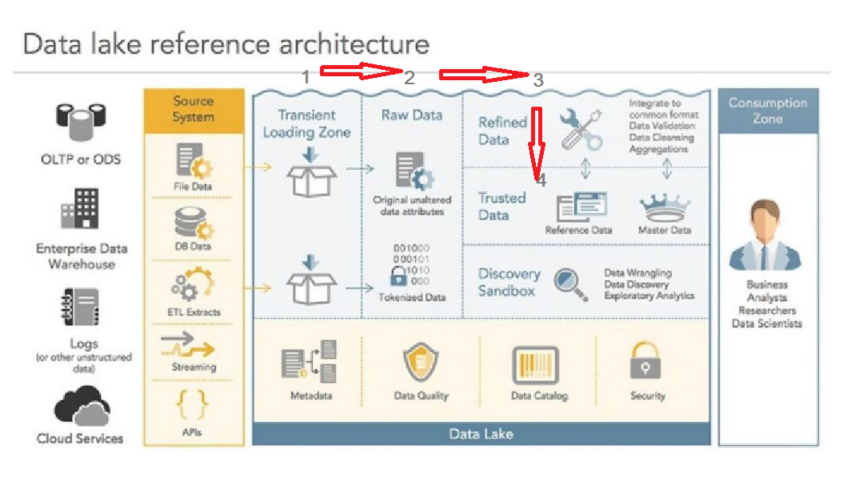

To ensure data from multiple data sources are ingested to discover at a later stage business insights, usually we dump everything. Comparison of source data with data ingested to simply validate that all the data has been pushed and verifying that correct data files are generated and loaded into HDFS correctly into the desired location.

A smart data lake ingestion tool or solution like kylo should enable self-service data ingestion, data wrangling, data profiling, data validation, data cleansing/standardization,see attached architecture

- /landing_Zone/Raw_data/ [ Corresponding to stage1]

- /landing_Zone/Raw_data/refined [ Corresponding to stage2]

- /landing_Zone/Raw_data/refined/Trusted Data [ Corresponding to stage3]

- /landing_Zone/Raw_data/refined/Trusted Data/sandbox [ Corresponding to stage4]

The data lake can be used also to feed upstream systems for a real-time monitoring system or long storage like HDFS or hive for analytics

Data quality is often seen as the unglamorous component of working with data. Ironically, it’s usually the component that makes up the majority of our time of data engineers. Data quality might very well be the single most important component of a data pipeline, since, without a level of confidence and reliability in your data, the dashboard and analysis generated from the data is useless.

The challenge with data quality is that there are no clear and simple formulas for determining if data is correct this is a continuous data engineering task as more data sources are incorporated to the data pipeline.

Typically hive plugged on stage 3 and tables are created after the data validation of stage 2 this ensures that data scientists have cleansed data to run their models and analysts using BI tools at least this has been the tasks I have done all through many projects

HTH