How to change the block size of existing files in HDFS?

Labels: Apache Hadoop

Created 04-26-2018 10:40 AM

Hi,

I searched the community but could not find a proper answer to this question.

How can I change the block size for the existing files in HDFS? I want to increase the block size.

The solution I have seen is distcp. As I understand it, we use distcp to copy the files, folders, and subfolders to a temporary location with the new block size, remove the originals, and then copy the files from the temporary location back to the original location.

This approach may have side effects, such as extra HDFS usage from the duplicate copies and changed permissions on the files copied back from the temporary location.

Is there a more efficient way to replace the existing files, keeping the same names and privileges, but with an increased block size?

Thanks to all for your time on this question.

Created 04-27-2018 12:48 PM

Once you have changed the block size at the cluster level, any files you put or copy to HDFS will have the new default block size of 256 MB.

Unfortunately, apart from distcp, the only options are the usual HDFS -put and -get commands.
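For reference, the distcp route could look roughly like the sketch below. It only prints the command so it can be reviewed before running. The paths are hypothetical, and the flag choices are assumptions to check against your Hadoop version: `-pugp` keeps owner, group, and permissions but leaves out `b` so the old block size is not preserved, and `-update -skipcrccheck` is used because checksums of files written with different block sizes generally do not match.

```shell
#!/bin/sh
# Sketch only: prints a distcp command, does not run it.
# /data/original and /data/original_256mb are hypothetical paths.

NEW_BLOCKSIZE=$((256 * 1024 * 1024))   # 256 MB expressed in bytes

# $1 = source directory, $2 = target directory
build_distcp_cmd() {
  # -Ddfs.blocksize       : block size used for the files written at the target
  # -pugp                 : preserve user, group, permission (not 'b' = block size)
  # -update -skipcrccheck : skip the source/target checksum comparison
  echo "hadoop distcp -Ddfs.blocksize=${NEW_BLOCKSIZE} -update -skipcrccheck -pugp $1 $2"
}

build_distcp_cmd /data/original /data/original_256mb
```

Skipping the CRC check means a corrupted copy would not be detected by distcp itself, so it is worth comparing file counts and sizes before removing the originals.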

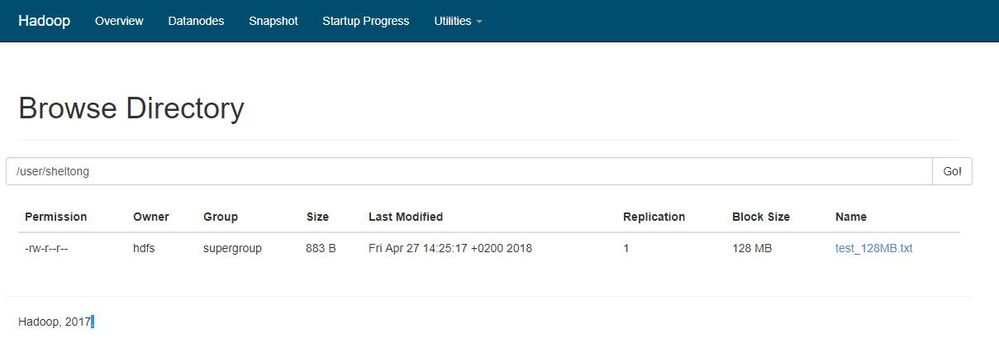

My default block size is 128 MB (see the attached screenshot 128MB.JPG).

I created a file, test_128MB.txt:

$ vi test_128MB.txt

I uploaded the 128 MB file to HDFS:

$ hdfs dfs -put test_128MB.txt /user/sheltong

See the attached screenshot 128MB.JPG and note the block size.

I then copied the same file back to the local filesystem:

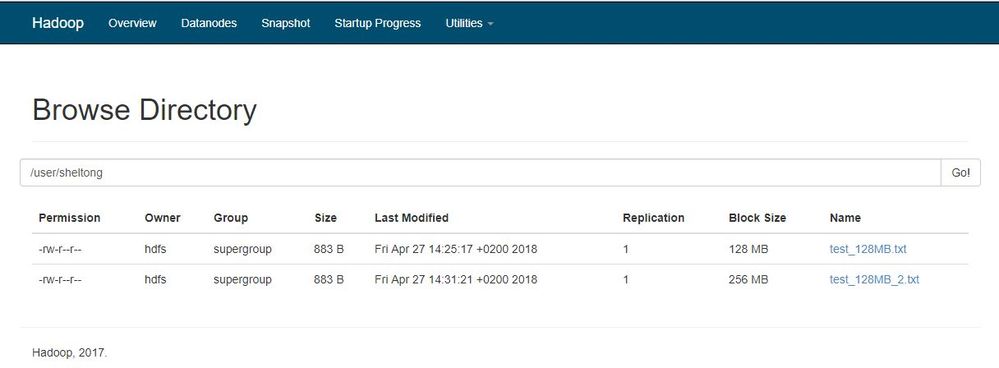

$ hdfs dfs -get /user/sheltong/test_128MB.txt /tmp/test_128MB_2.txt

Then, using the -D option, I uploaded it again with a new block size of 256 MB:

$ hdfs dfs -D dfs.blocksize=268435456 -put /tmp/test_128MB_2.txt /user/sheltong

See screenshot 256MB.JPG. Technically this is possible if you have only a few files. Remember, though, that test_128MB.txt and test_128MB_2.txt are the same 128 MB file: with a 256 MB block size it simply occupies a single, half-filled block. HDFS stores only the actual data, so the unused 128 MB does not consume disk space, but the file gains nothing from the larger block size either. The block size is fixed when a file is written, which is why a changed setting applies ONLY to new files.
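If you want to check the result from the command line rather than from screenshots, a sketch along these lines may help. The helper names are made up for illustration. `mb_to_bytes` just shows where the value 268435456 comes from, and `show_block_layout` wraps `hdfs dfs -stat` and `hdfs fsck`, so it needs a running cluster and is left commented out here.

```shell
#!/bin/sh
# Convert a size in MB to the byte value expected by dfs.blocksize.
mb_to_bytes() {
  echo $(( $1 * 1024 * 1024 ))
}

# Print the block size (%o), length in bytes (%b), and name (%n) of an HDFS
# file, then list its blocks. Requires a working hdfs client and cluster.
show_block_layout() {
  hdfs dfs -stat "block size: %o  length: %b  name: %n" "$1"
  hdfs fsck "$1" -files -blocks
}

mb_to_bytes 128   # 134217728
mb_to_bytes 256   # 268435456

# Example call (path taken from the commands above):
# show_block_layout /user/sheltong/test_128MB_2.txt
```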

Hope that gives you a better understanding

Created 02-26-2021 02:15 AM

Agreed, but is there a way to avoid this, apart from copying the data to the local file system (LFS) and then back to HDFS?

Example: We have a 500 MB file with a block size of 128 MB, i.e. 4 blocks on HDFS. Now that we have changed the block size to 256 MB, how would we make the file on HDFS have 2 blocks (at a 256 MB block size) instead of 4?

Please suggest.
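The arithmetic in the example can be checked with a small sketch, followed by one possible way to rewrite a file inside HDFS without going through the local file system. This is not a confirmed answer from the thread: the file path and the `.newbs`/`.oldbs` suffixes are hypothetical, it assumes `hdfs dfs -cp` honors `-D dfs.blocksize` the same way `-put` does, and `-cp -p` may need sufficient privileges to keep ownership. The commands are only printed, not executed.

```shell
#!/bin/sh
# Blocks needed for a file of $1 bytes at a block size of $2 bytes
# (integer ceiling division).
blocks_needed() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

MB=$((1024 * 1024))
echo "500 MB at 128 MB blocks: $(blocks_needed $((500 * MB)) $((128 * MB)))"   # 4
echo "500 MB at 256 MB blocks: $(blocks_needed $((500 * MB)) $((256 * MB)))"   # 2

# Print the commands that would rewrite one file in place with a new block size.
# $1 = HDFS file path (hypothetical), $2 = new block size in bytes
print_rewrite_cmds() {
  echo "hdfs dfs -D dfs.blocksize=$2 -cp -p $1 $1.newbs"
  echo "hdfs dfs -mv $1 $1.oldbs"
  echo "hdfs dfs -mv $1.newbs $1"
  echo "hdfs dfs -rm $1.oldbs"
}

print_rewrite_cmds /data/big_500MB.dat $((256 * MB))
```

The data is still copied once, so extra space is needed temporarily, and the final `-rm` should only be run after the new copy has been verified.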

Created 04-29-2018 05:41 AM

@Geoffery,

Thanks a lot for your time.

Yes, you are correct, and I am looking for a tool other than distcp.

Thanks a lot for your time on this again.

Created 04-29-2018 06:09 AM

Nice to know it has answered your question.

Could you accept the answer I gave by clicking the Accept button below? That would help other community users find the solution quickly for these kinds of errors.

Created 10-25-2018 07:22 AM

This is regarding changing the block size of an existing file from 64 MB to 128 MB.

We are facing some issues when we delete the files.

So, is there a way to change the block size of an existing file without removing the file?

Created 12-10-2018 10:12 AM

Thanks for the details.

I have a cluster with 220 million files, of which 110 million are less than 1 MB in size.

The default block size is set to 128 MB.

What should the block size be for files smaller than 1 MB? And how can we set it on a live cluster?

Total Files + Directories: 227008030

Disk Remaining: 700 TB / 3.5 PB (20%)
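As a rough illustration of the numbers in this post, the sketch below counts blocks for the small files under a few block sizes. It assumes each small file is treated as exactly 1 MB (an upper bound, not a figure from the post). A file smaller than the block size occupies a single block, so the count comes out the same for every block size tried here.

```shell
#!/bin/sh
# Blocks used by one file of $1 bytes at a block size of $2 bytes.
blocks_for_file() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

MB=$((1024 * 1024))
SMALL_FILES=110000000    # from the post: files under 1 MB
FILE_SIZE=$MB            # assumption: treat each small file as 1 MB

for bs_mb in 64 128 256; do
  per_file=$(blocks_for_file "$FILE_SIZE" $((bs_mb * MB)))
  echo "block size ${bs_mb} MB: $((SMALL_FILES * per_file)) blocks for the small files"
done
```

Under these assumptions the block size setting makes no difference to the number of blocks for these files; it is the file count that drives it.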
