Cloudera Community: Support Questions

How to change the block size of existing files in HDFS?

- Labels: Apache Hadoop

Created 04-26-2018 10:40 AM

Hi,

I tried searching the community but could not find a proper answer to this question.

How can I change the block size of existing files in HDFS? I want to increase the block size.

The solution I found is distcp. As I understand it, we have to use distcp to copy the files, folders and subfolders to a new temporary location with the new block size, then remove the original files and folders whose block size has to be increased, and finally copy the files from the temporary location back to the original location.

This approach might have side effects, such as the HDFS overhead of storing duplicate copies of the files and changed permissions when the files are copied back from the temporary location.

Is there any efficient way to replace the existing files with the same name and the same permissions but with an increased block size?

Thanks to all for your time on this question.

Created 04-27-2018 12:48 PM

Once you have changed the block size at the cluster level, whatever files you put or copy to HDFS will get the new default block size of 256 MB.

Unfortunately, apart from distcp, you only have the usual -put and -get HDFS commands.

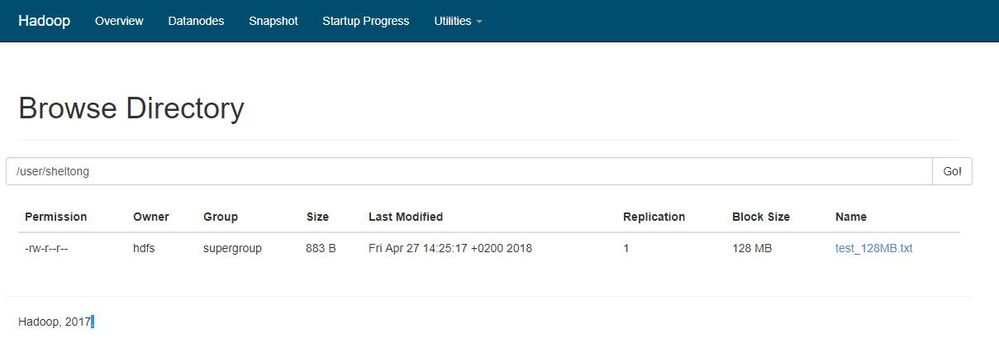

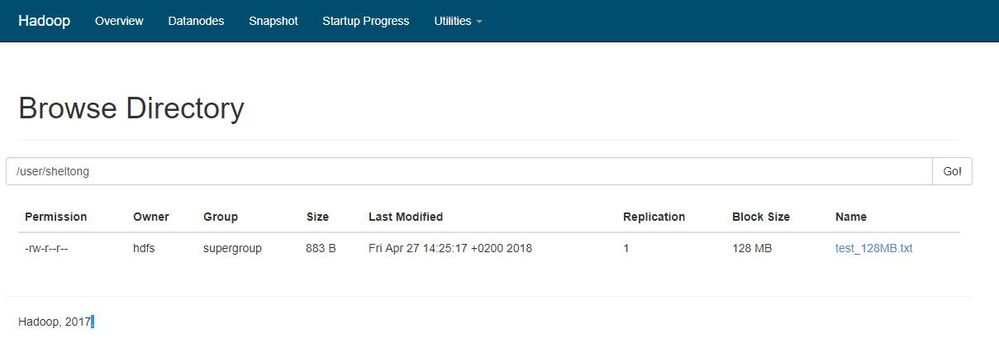

My default block size is 128 MB (see the attached screenshot 128MB.JPG).

Created a file test_128MB.txt:

$ vi test_128MB.txt

Uploaded the 128 MB file to HDFS:

$ hdfs dfs -put test_128MB.txt /user/sheltong

See the attached screenshot 128MB.JPG and notice the block size.

I then copied the same file back to the local filesystem:

$ hdfs dfs -get /user/sheltong/test_128MB.txt /tmp/test_128MB_2.txt

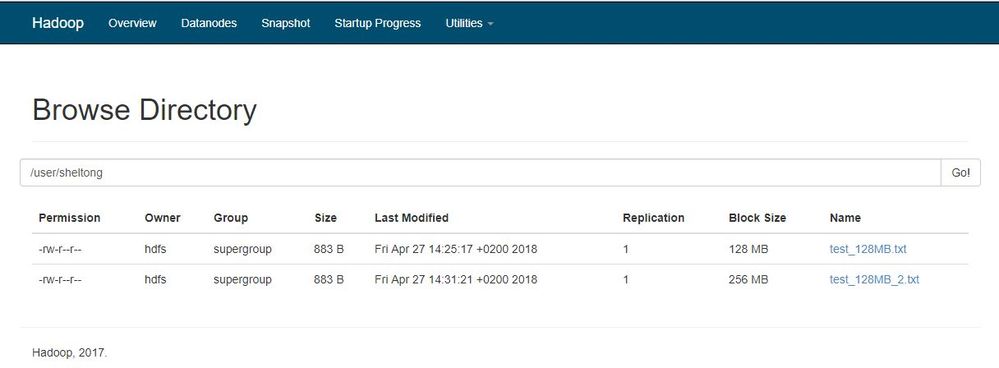

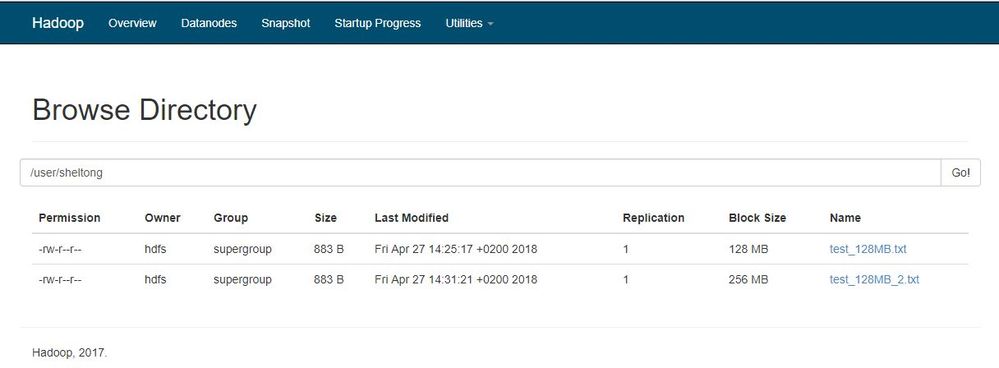

Then, using the -D option to define a new block size of 256 MB (268435456 bytes), I uploaded the local copy again:

$ hdfs dfs -D dfs.blocksize=268435456 -put /tmp/test_128MB_2.txt /user/sheltong
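The -get/-put round trip above can be scripted for one existing file. This is a minimal sketch, assuming the `hdfs` client is on the PATH and that local /tmp has room for the file; `reblock_via_local` and the `DRY_RUN` switch are hypothetical names for illustration. By default it only prints the commands it would run.

```shell
#!/bin/sh
set -eu

# DRY_RUN=1 (the default) only prints each command; set DRY_RUN=0 to execute.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# reblock_via_local HDFS_FILE BLOCK_SIZE_BYTES
# Hypothetical helper: downloads the file, then writes it back over the
# original path so HDFS stores it with the requested block size.
reblock_via_local() {
  src="$1"
  bs="$2"
  tmp="/tmp/$(basename "$src").reblock"
  run hdfs dfs -get "$src" "$tmp"
  # -D is a generic option, so it goes before -put; -f overwrites the target.
  run hdfs dfs -D "dfs.blocksize=$bs" -put -f "$tmp" "$src"
  run rm -f "$tmp"
}

# Dry run for the file used above, with 256 MB = 268435456 bytes.
reblock_via_local /user/sheltong/test_128MB.txt 268435456
```

Because -put -f writes a new file, it will most likely end up owned by the user running the script with default permissions, which is the side effect the question worries about; the round trip also needs local disk for every file, so it only suits a few files.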

See the screenshot 256MB.JPG: technically it is possible if you have only a few files. Remember, though, that test_128MB.txt and test_128MB_2.txt are the same 128 MB file. The block size is a per-file attribute that is fixed when the file is written, so a new default ONLY applies to new files; existing files keep their old block size until they are rewritten. (A 128 MB file written with a 256 MB block size still occupies a single block holding 128 MB; HDFS does not pad the last block.)
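Instead of screenshots, `hdfs dfs -stat` can print a file's length (%b) and block size (%o) in bytes. The sketch below wraps that and adds a ceiling-division helper showing how many blocks a file is split into; `show_block_sizes` and `blocks_needed` are hypothetical names, and the `hdfs` client is only needed when `show_block_sizes` is actually called.

```shell
#!/bin/sh
set -eu

# blocks_needed FILE_BYTES BLOCK_BYTES
# Ceiling division: number of HDFS blocks a file of FILE_BYTES is split into.
# Only the last block can be partial, and it holds just the remaining bytes.
blocks_needed() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

# show_block_sizes HDFS_PATH...
# Prints "<name> <length in bytes> <block size in bytes>" for each path.
show_block_sizes() {
  for f in "$@"; do
    hdfs dfs -stat '%n %b %o' "$f"
  done
}

# A 128 MB (134217728-byte) file fits in one block with either setting.
blocks_needed 134217728 134217728
blocks_needed 134217728 268435456
```

For example, `show_block_sizes /user/sheltong/test_128MB.txt /user/sheltong/test_128MB_2.txt` should report block sizes of 134217728 and 268435456 if the steps above behaved as described. A larger block size only lowers the block count for files bigger than one block: a 1 GiB file (1073741824 bytes) needs 8 blocks at 128 MB but 4 at 256 MB.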

Hope that gives you a better understanding

Created 04-26-2018 11:44 AM

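I'm not aware of any method other than the distcp command. I just wanted to add to this thread that distcp has a -p option that you can use to preserve file permissions (user, group, POSIX permissions) and timestamps as well. Note that a bare -p preserves every supported attribute, including the block size, so when the goal is a new block size, list the attributes explicitly (for example -pugpt: user, group, permission, timestamp).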
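Which attributes -p keeps is controlled by the letters appended to it (-p[rbugpcaxt] in the DistCp documentation). The helpers below are a hypothetical sketch that decodes such a letter string and reports whether the source block size would be kept; they encode the assumption that a bare -p preserves every attribute.

```shell
#!/bin/sh
set -eu

# describe_preserve LETTERS
# Prints the attribute behind each letter of a DistCp -p option, e.g. "ugpt".
describe_preserve() {
  letters="$1"
  while [ -n "$letters" ]; do
    rest="${letters#?}"        # everything after the first character
    c="${letters%"$rest"}"     # the first character
    case "$c" in
      r) echo "r: replication" ;;
      b) echo "b: block size" ;;
      u) echo "u: user" ;;
      g) echo "g: group" ;;
      p) echo "p: permission" ;;
      c) echo "c: checksum type" ;;
      a) echo "a: ACL" ;;
      x) echo "x: XAttr" ;;
      t) echo "t: timestamp" ;;
      *) echo "$c: not known to this sketch" ;;
    esac
    letters="$rest"
  done
}

# preserves_block_size LETTERS
# Succeeds if -p<LETTERS> would keep the source block size. An empty string
# stands for a bare -p, which preserves all attributes, block size included.
preserves_block_size() {
  case "$1" in
    "" | *b*) return 0 ;;
    *) return 1 ;;
  esac
}

describe_preserve ugpt
```

So -pugpt keeps ownership, permissions and timestamps while letting a new dfs.blocksize take effect, whereas -p or any letter string containing b carries the old block size along.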

HTH

Created 04-26-2018 01:18 PM

@Felix Albani,

Thanks a lot for your time on this.

Can you verify this distcp procedure?

a) Use distcp with the -p option to copy all the files and subfolders to a temporary location in HDFS on the same cluster, with the new block size.

b) Remove all the files in the original location.

c) Copy the files from the temporary location back to the original location.

Am I correct?
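Steps a) to c) can be sketched as a dry-run script, assuming `hadoop distcp` is on the PATH and the temporary directory does not exist yet (if it does, DistCp copies the source directory into it rather than as it). `reblock_copy_twice`, `/data/events` and `/tmp/events_reblock` are hypothetical names; -pugpt replaces the bare -p so the old block size is not carried over in step a).

```shell
#!/bin/sh
set -eu

# DRY_RUN=1 (the default) only prints each command; set DRY_RUN=0 to execute.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# reblock_copy_twice DIR BLOCK_SIZE_BYTES TMP_DIR
# Hypothetical helper mirroring steps a) to c). TMP_DIR must not exist yet.
reblock_copy_twice() {
  dir="$1"
  bs="$2"
  tmp="$3"
  # a) First full copy, written with the new block size. -pugpt keeps user,
  #    group, permission and timestamps but not the source block size.
  run hadoop distcp -D "dfs.blocksize=$bs" -pugpt "$dir" "$tmp"
  # b) Remove the originals; until this point the data is stored twice.
  run hdfs dfs -rm -r "$dir"
  # c) Second full copy back. Adding b keeps the (new) block size of TMP_DIR.
  run hadoop distcp -pbugpt "$tmp" "$dir"
}

# Dry run with 256 MB blocks.
reblock_copy_twice /data/events 268435456 /tmp/events_reblock
```

With set -e, a failed first copy stops the script before anything is removed. Every byte is still written twice, which is the cost the move-based variant in the next reply avoids.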

Created 04-27-2018 12:05 PM

@Sriram Yes, that would work. You could also avoid copying the data twice (points a and c) by first moving the original data to a temporary location. A move only changes the NameNode metadata and does not copy any blocks, which could take a lot of time:

a) Use the hdfs dfs -mv command to move all the original files and subfolders to /tmp.

b) Copy the files from /tmp back to the original location using distcp with the new block size, preserving attributes with -pugpt rather than a bare -p (which would also keep the old block size).

c) Remove the original files from /tmp.
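The move-based variant is sketched below in dry-run form. It assumes `hdfs` and `hadoop distcp` are on the PATH, that the staging directory is in the same HDFS namespace so -mv is a pure rename, and that your DistCp supports the t (timestamp) letter; `reblock_tree`, `/data/events` and `/tmp/reblock` are hypothetical. Depending on the Hadoop version, DistCp's post-copy checksum comparison may fail when source and target block sizes differ; -skipcrccheck (used together with -update) disables that check at the cost of not verifying the copy.

```shell
#!/bin/sh
set -eu

# DRY_RUN=1 (the default) only prints each command; set DRY_RUN=0 to execute.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# reblock_tree DIR BLOCK_SIZE_BYTES STAGING_PARENT
# Hypothetical helper following steps a) to c) above.
reblock_tree() {
  dir="$1"
  bs="$2"
  staged="$3/$(basename "$dir")"
  run hdfs dfs -mkdir -p "$3"
  # a) Rename into the staging area: NameNode metadata only, no block copies.
  run hdfs dfs -mv "$dir" "$staged"
  # b) The only real copy, back to the original path with the new block size.
  #    -pugpt leaves out "b" so the staged files' old block size is not kept.
  run hadoop distcp -D "dfs.blocksize=$bs" -pugpt "$staged" "$dir"
  # c) Remove the staged originals. With HDFS trash enabled they are moved to
  #    .Trash first, so the space is only freed once the trash is emptied.
  run hdfs dfs -rm -r "$staged"
}

# Dry run with 256 MB blocks.
reblock_tree /data/events 268435456 /tmp/reblock
```

With set -e, a failed distcp stops the script before step c), so the staged originals are only removed after a successful copy.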

Created 04-26-2018 06:22 PM

>How can I change the block size for the existing files in HDFS? I want to increase the block size.

May I ask what you are trying to achieve? We might be able to make better suggestions if we know what problem you are trying to solve.

Created 04-27-2018 01:31 AM

Thanks for your time on this.

I want to increase the block size of existing files and this is a requirement for us.

This is to decrease the latency while reading the file.

Created 04-27-2018 06:22 AM

The Hadoop Distributed File System was designed to hold and manage large amounts of data, so typical HDFS block sizes are significantly larger than the block sizes you would see in a traditional filesystem. The block size is specified in hdfs-site.xml.

The default block size in Hadoop 2 is 128 MB. To change it to 256 MB, edit the parameter dfs.blocksize (dfs.block.size is its older, deprecated name) and set it to the desired size, e.g. 268435456 for 256 MB. You will need to restart all the stale services for the change to take effect. It's recommended to always use the Ambari UI to make HDP/HDF changes.
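To confirm which default new files will get, `hdfs getconf -confKey dfs.blocksize` prints the value from the client configuration on the host where it runs. Because dfs.blocksize may be written either in bytes or with a size suffix such as 128m, the sketch adds a hypothetical `to_bytes` helper that handles the k, m, g and t suffixes (Hadoop accepts larger ones too, which this sketch ignores).

```shell
#!/bin/sh
set -eu

# to_bytes VALUE
# Converts a size such as 134217728, 128m or 1g into bytes (binary prefixes).
to_bytes() {
  v="$1"
  n="${v%?}"    # value without its last character (the suffix, if any)
  case "$v" in
    *[kK]) echo $(( n * 1024 )) ;;
    *[mM]) echo $(( n * 1024 * 1024 )) ;;
    *[gG]) echo $(( n * 1024 * 1024 * 1024 )) ;;
    *[tT]) echo $(( n * 1024 * 1024 * 1024 * 1024 )) ;;
    *) echo $(( v )) ;;
  esac
}

# current_default_block_size
# Prints the configured default block size in bytes (requires the hdfs client).
current_default_block_size() {
  to_bytes "$(hdfs getconf -confKey dfs.blocksize)"
}

to_bytes 256m
```

After the change and restart, `current_default_block_size` on a client host would be expected to print 268435456; existing files are unaffected either way.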

The block size of existing files can't be changed in place; to change it, the distcp utility can be used to rewrite the files.

or

Override the default block size with 256 MB for a single upload:

hadoop fs -D dfs.blocksize=268435456 -copyFromLocal /tmp/test/payroll-april10.csv blksize/payroll-april10.csv

Hope that helps

Created 07-09-2020 02:09 AM

Hi.

I have a file with a 128 MB block size.

I'd like to change an existing file's block size using:

hdfs dfs -mv /user/myfile.txt /tmp

hdfs dfs -D dfs.blocksize=268435456 -cp /tmp/myfile.txt /user

It works.

When I try to use distcp with -p to preserve the original file's attributes, the target file's block size doesn't change:

hadoop distcp -p -D dfs.block.size=268435456 /tmp/myfile.txt /user/myfile.txt

I can't understand where I am wrong.
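A likely cause (an assumption, not confirmed in the thread): a bare -p preserves every attribute DistCp supports, including the block size (b), so the copy keeps 128 MB regardless of -D. Generic options such as -D are also documented to come before the command's own options, and dfs.block.size is the deprecated alias of dfs.blocksize. A dry-run sketch of a corrected command, using the hypothetical helper `reblock_file`:

```shell
#!/bin/sh
set -eu

# DRY_RUN=1 (the default) only prints each command; set DRY_RUN=0 to execute.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# reblock_file SRC DST BLOCK_SIZE_BYTES
reblock_file() {
  # Generic -D first; -pugpt keeps user, group, permission and timestamps
  # but not the source block size, so dfs.blocksize applies to DST.
  run hadoop distcp -D "dfs.blocksize=$3" -pugpt "$1" "$2"
  # %o prints the block size of the new file in bytes.
  run hdfs dfs -stat %o "$2"
}

reblock_file /tmp/myfile.txt /user/myfile.txt 268435456
```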

Created 04-27-2018 10:17 AM

@Geoffrey Shelton Okot,

Thanks for your time.

I agree with your point about changing the block size at the cluster level and restarting the services, but the new block size applies only to new files, and the command you gave also applies only to new files.

I would like to know a method other than distcp to change the block size of existing files in a Hadoop cluster.
