How to configure storage policy in Ambari?

Labels: Apache Hadoop

Created 10-28-2015 10:59 AM


Setting the following value in the Ambari box corresponding to the property dfs.datanode.data.dir does not seem to work:

/hadoop/hdfs/data,[SSD]/mnt/ssdDisk/hdfs/data

I get a warning "Must be a slash or drive at the start" and I cannot save the new configuration.

Is there a way to define these storage types in Ambari? (In the past I set them directly in the hdfs-site.xml file and it worked fine.)

My Ambari version is 2.1.0 and I use HDP 2.3.0 (Sandbox).

Created 10-28-2015 11:08 AM


Please see this

<property>

<name>dfs.datanode.data.dir</name>

<value>[DISK]file:///hddata/dn/disk0, [SSD]file:///hddata/dn/ssd0,[ARCHIVE]file:///hddata/dn/archive0</value>

</property>

Response edited based on the comment:

Ambari 2.1.1+ supports this, as per AMBARI-12601.
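To make the format of the value above easier to read, here is a minimal sketch that splits a dfs.datanode.data.dir value into storage type and location. The function name `parse_data_dirs` is made up for illustration and is not part of Hadoop or Ambari. It assumes entries are comma-separated, that an optional `[TYPE]` tag precedes each location, and that an untagged entry counts as DISK (the HDFS default).

```sh
#!/bin/sh
# Hypothetical helper (not a Hadoop or Ambari tool): print "TYPE<TAB>location"
# for every entry of a dfs.datanode.data.dir value passed as $1.
parse_data_dirs() {
  # Turn commas into newlines; `read` then trims surrounding whitespace.
  printf '%s\n' "$1" | tr ',' '\n' | while read -r entry; do
    [ -n "$entry" ] || continue
    case $entry in
      \[*\]*)
        type=${entry%%\]*}      # e.g. "[SSD"
        type=${type#\[}         # e.g. "SSD"
        location=${entry#*\]}   # everything after the first "]"
        ;;
      *)
        # Assumption: untagged entries use the default storage type, DISK.
        type=DISK
        location=$entry
        ;;
    esac
    printf '%s\t%s\n' "$type" "$location"
  done
}
```

For example, `parse_data_dirs '/hadoop/hdfs/data,[SSD]/mnt/ssdDisk/hdfs/data'` lists the first directory as DISK and the second as SSD.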


Created 10-28-2015 11:48 AM


It does not work in Ambari (same error as what I got with the configuration I described before).

My problem is the integration with Ambari, not the configuration in hdfs-site.xml (as mentioned before, editing hdfs-site.xml directly works fine).

Created 10-28-2015 12:10 PM


Please see this

It's fixed in Ambari 2.1.1
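If you want to check from a script whether an Ambari version is new enough, a rough sketch could look like the following. The function name `version_at_least` is hypothetical, and it relies on `sort -V` (version sort) from GNU coreutils being available.

```sh
#!/bin/sh
# Hypothetical helper: succeed if version $1 is the same as or newer than $2.
# Assumes GNU coreutils `sort -V` is available.
version_at_least() {
  # After a version sort, the required version must come first (or be equal).
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}
```

With this, `version_at_least 2.1.0 2.1.1` fails (the version from the question), while `version_at_least 2.2.1 2.1.1` succeeds.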

Created on 06-16-2016 10:29 AM - edited 08-19-2019 05:54 AM


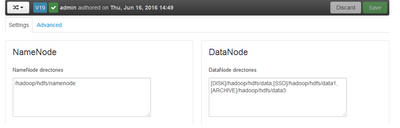

I got it working on Ambari 2.2.1.

1. Create mount points:

# mkdir /hadoop/hdfs/data1 /hadoop/hdfs/data2 /hadoop/hdfs/data3

# chown hdfs:hadoop /hadoop/hdfs/data1 /hadoop/hdfs/data2 /hadoop/hdfs/data3

(Note: this configuration is for test purposes only, so no disks are actually mounted.)

2. Log in to Ambari and go to HDFS > Settings.

3. Add the DataNode directories as shown below:

DataNode > DataNode directories:

[DISK]/hadoop/hdfs/data,[SSD]/hadoop/hdfs/data1,[RAMDISK]/hadoop/hdfs/data2,[ARCHIVE]/hadoop/hdfs/data3

4. Restart the HDFS service, then restart all other affected services.
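After the restart it can be worth confirming that the configured value really contains the storage type you plan to use (the COLD policy needs ARCHIVE storage). A small sketch, assuming the value is tagged as in the list above; `has_storage_type` is a made-up name, and it only looks at explicit tags, so an untagged entry (which HDFS treats as DISK) is not detected.

```sh
#!/bin/sh
# Hypothetical helper: succeed if the data-dir value in $1 contains an entry
# explicitly tagged with the storage type in $2, e.g. ARCHIVE.
has_storage_type() {
  # Drop whitespace so ", [SSD]..." and ",[SSD]..." are treated alike.
  stripped=$(printf '%s' "$1" | tr -d '[:space:]')
  # Prefix a comma so the first entry matches the same pattern as the rest.
  case ",$stripped" in
    *",[$2]"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

On a cluster node the value can be read with `hdfs getconf -confKey dfs.datanode.data.dir`, for example: `has_storage_type "$(hdfs getconf -confKey dfs.datanode.data.dir)" ARCHIVE && echo "ARCHIVE storage configured"`.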

5. Create a directory /cold:

# su hdfs

[hdfs@hdp-qa2-n1 ~]$ hadoop fs -mkdir /cold

6. Set the COLD storage policy on /cold:

[hdfs@hdp-qa2-n1 ~]$ hdfs storagepolicies -setStoragePolicy -path /cold -policy COLD

Set storage policy COLD on /cold

7. Get the storage policy to verify it:

[hdfs@hdp-qa2-n1 ~]$ hdfs storagepolicies -getStoragePolicy -path /cold

The storage policy of /cold:

BlockStoragePolicy{COLD:2, storageTypes=[ARCHIVE], creationFallbacks=[], replicationFallbacks=[]}
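The verification step can also be scripted. The sketch below pulls the policy name out of the -getStoragePolicy output shown above. The function names `policy_name` and `check_policy` are hypothetical, and the parsing assumes the output keeps the `BlockStoragePolicy{NAME:id, ...}` shape seen here, which may differ between Hadoop versions.

```sh
#!/bin/sh
# Hypothetical helper: read `hdfs storagepolicies -getStoragePolicy` output on
# stdin and print the policy name (e.g. COLD). Prints nothing if no
# BlockStoragePolicy{...} line is present.
policy_name() {
  sed -n 's/^BlockStoragePolicy{\([A-Z_]*\):[0-9]*,.*/\1/p'
}

# Hypothetical helper: succeed if HDFS path $1 has the policy named $2.
# Needs a working `hdfs` client on the PATH.
check_policy() {
  actual=$(hdfs storagepolicies -getStoragePolicy -path "$1" | policy_name)
  [ "$actual" = "$2" ]
}
```

Run as the hdfs user, `check_policy /cold COLD && echo "policy OK"` would confirm the setting made in the previous step.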