How to create & recover a snapshot

Created 03-08-2018 06:11 AM


Hi guys,

I have been using an AWS machine for practice. My question is:

How do I create and recover a snapshot?

Please explain.

Thanks,

Mudassar Hussain

Created 03-15-2018 10:05 AM


Steps 1, 2 and 3 are okay. You don't need to create directory2: when you enable a directory as snapshottable, in this case /user/mudassar/snapdemo, the snapshots are created under that directory in a hidden .snapshot subdirectory, which does not show up when you run the hdfs dfs -ls command.

Let me demo on HDP 2.6

I will create your user on my local environment.

Add the user mudassar as the root user

# adduser mudassar

Switch to superuser and owner of HDFS

# su - hdfs

Create the snapshot demo directory. Notice the -p option, needed because the parent directory /user/mudassar doesn't exist yet. Note: hadoop dfs is deprecated, so use the hdfs dfs command!

$ hdfs dfs -mkdir -p /user/mudassar/snapdemo

Validate directory

$ hdfs dfs -ls /user/mudassar

Found 1 items

drwxr-xr-x - hdfs hdfs 0 2018-03-15 09:39 /user/mudassar/snapdemo

Change ownership to mudassar

$ hdfs dfs -chown mudassar /user/mudassar/snapdemo

Validate change of ownership

$ hdfs dfs -ls /user/mudassar

Found 1 items

drwxr-xr-x - mudassar hdfs 0 2018-03-15 09:39 /user/mudassar/snapdemo

Make the directory snapshottable

$ hdfs dfsadmin -allowSnapshot /user/mudassar/snapdemo

Allowing snaphot on /user/mudassar/snapdemo succeeded

Show all the snapshottable directories in your cluster, using the lsSnapshottableDir subcommand of hdfs

$ hdfs lsSnapshottableDir

drwxr-xr-x 0 mudassar hdfs 0 2018-03-15 09:39 0 65536 /user/mudassar/snapdemo

Create 2 sample files in /tmp

$ echo "Test one for snaphot No worries No worries I was worried you got stuck and didn't revert the HCC is full of solutions so" > /tmp/text1.txt

$ echo "The default behavior is that only a superuser is allowed to access all the resources of the Kafka cluster, and no other user can access those resources" > /tmp/text2.txt

Validate the files were created

$ cd /tmp

$ ls -lrt

-rw-r--r-- 1 hdfs hadoop 121 Mar 15 10:04 text1.txt

-rw-r--r-- 1 hdfs hadoop 152 Mar 15 10:04 text2.txt

Copy the first file from the local filesystem to HDFS

$ hdfs dfs -put text1.txt /user/mudassar/snapdemo

Create a snapshot of the directory, which at this point contains only text1.txt

$ hdfs dfs -createSnapshot /user/mudassar/snapdemo

Created snapshot /user/mudassar/snapdemo/.snapshot/s20180315-101148.262

Note the .snapshot directory in the path above; it is a hidden system directory

Show the snapshot copy of text1.txt

$ hdfs dfs -ls /user/mudassar/snapdemo/.snapshot/s20180315-101148.262

Found 1 items

-rw-r--r-- 3 hdfs hdfs 121 2018-03-15 10:10 /user/mudassar/snapdemo/.snapshot/s20180315-101148.262/text1.txt

Copy the second file, text2.txt, from local /tmp to HDFS

$ hdfs dfs -put text2.txt /user/mudassar/snapdemo

Validate that both files are present

$ hdfs dfs -ls /user/mudassar/snapdemo

Found 2 items

-rw-r--r-- 3 hdfs hdfs 121 2018-03-15 10:10 /user/mudassar/snapdemo/text1.txt

-rw-r--r-- 3 hdfs hdfs 152 2018-03-15 10:19 /user/mudassar/snapdemo/text2.txt

Simulate the loss of file text1.txt

$ hdfs dfs -rm /user/mudassar/snapdemo/text1.txt

Indeed, text1.txt was deleted and ONLY text2.txt remains

$ hdfs dfs -ls /user/mudassar/snapdemo

Found 1 items

-rw-r--r-- 3 hdfs hdfs 152 2018-03-15 10:19 /user/mudassar/snapdemo/text2.txt

Restore the text1.txt

$ hdfs dfs -cp -ptopax /user/mudassar/snapdemo/.snapshot/s20180315-101148.262/text1.txt /user/mudassar/snapdemo

The -ptopax option preserves timestamps, ownership, permissions, ACLs and XAttrs. For the timestamp to be restored, dfs.namenode.accesstime.precision needs to be set to its default of 1 hour, which is 3600000 milliseconds.

Check the restored timestamp of text1.txt against the original one above!

$ hdfs dfs -ls /user/mudassar/snapdemo

Found 2 items

-rw-r--r-- 3 hdfs hdfs 121 2018-03-15 10:10 /user/mudassar/snapdemo/text1.txt

-rw-r--r-- 3 hdfs hdfs 152 2018-03-15 10:19 /user/mudassar/snapdemo/text2.txt
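As a quick sanity check on that value, the sketch below computes one hour in milliseconds and, only if an hdfs client happens to be on the PATH, prints what the local configuration reports for the property. The variable name is just illustrative; hdfs getconf -confKey is the standard way to read a configuration key.

```shell
#!/usr/bin/env bash
# One hour expressed in milliseconds, the unit used by
# dfs.namenode.accesstime.precision.
one_hour_ms=$((60 * 60 * 1000))
echo "expected default: ${one_hour_ms}"

# Only query the configuration when an hdfs client is installed.
if command -v hdfs >/dev/null 2>&1; then
  hdfs getconf -confKey dfs.namenode.accesstime.precision
fi
```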

In a nutshell, you don't need to create directory2, because when you run the hdfs dfs -createSnapshot command it automatically creates the snapshot under the original directory, in .snapshot. That also saves you the extra steps of creating some sort of backup directory.

I hope that explains it clearly this time.
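The whole sequence above could be wrapped in a small script. This is only a sketch under assumptions: the run helper, the DRY_RUN switch and the snapshot name before-delete are made up for illustration and are not part of the original demo. By default it only prints the hdfs commands, so it is safe to try without a cluster.

```shell
#!/usr/bin/env bash
# Sketch of the snapshot and restore workflow from this post.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to
# execute them on a real cluster as a user allowed to run them.
DRY_RUN="${DRY_RUN:-1}"
SNAP_DIR="/user/mudassar/snapdemo"   # snapshottable directory from the post
SNAP_NAME="before-delete"            # hypothetical snapshot name

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

snapshot_and_restore() {
  local file="$1"
  run hdfs dfsadmin -allowSnapshot "$SNAP_DIR"
  run hdfs dfs -createSnapshot "$SNAP_DIR" "$SNAP_NAME"
  run hdfs dfs -rm "$SNAP_DIR/$file"
  run hdfs dfs -cp -ptopax "$SNAP_DIR/.snapshot/$SNAP_NAME/$file" "$SNAP_DIR"
}

snapshot_and_restore text1.txt
```

Passing an explicit name to -createSnapshot avoids having to copy the generated s20180315-... name by hand when restoring.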

Created 03-08-2018 09:40 AM


In this example I am using a Cloudera VirtualBox VM, but the commands are the same on HDP. I didn't create a local user mudassar; I used the hdfs user and an HDFS directory instead.

#################################

# Whoami

#################################

# id

uid=0(root) gid=0(root) groups=0(root)

#################################

# Switched to hdfs user

#################################

# su - hdfs

#################################

# Changed directory to tmp to create test files

#################################

$ cd /tmp

#################################

# Created first file

#################################

$ echo "This is the first text file for mudassar to test snapshot" > text1.txt

#################################

# Created second file

#################################

$ echo "Apache Kafka comes with a pluggable authorizer known as Kafka Authorization Command Line (ACL) Interface, which is used for defining users and allowing or denying them to access its various APIs. The default behavior is that only a superuser is allowed to access all the resources of the Kafka cluster, and no other user can access those resources if no proper ACL is defined for those users. The general format in which Kafka ACL is defined is as follows" > text2.txt

#################

# Here is output

################

$ ls -lrt

-rw-rw-r-- 1 hdfs hdfs 58 Mar 8 00:34 text1.txt

-rw-rw-r-- 1 cloudera hdfs 456 Mar 8 00:37 text2.txt

#################################

# Above I changed the owner of text2.txt to cloudera as root user in the local filesystem

#################################

# chown cloudera:hdfs /tmp/text2.txt

################################

# First create a target directory in hdfs

################################

hdfs dfs -mkdir /user/mudassar

################################

# create a snapshottable directory

#################################

hdfs dfsadmin -allowSnapshot /user/mudassar

output:

Allowing snaphot on /user/mudassar succeeded

#################################

# Check the snapshottable dir in HDFS

#################################

hdfs lsSnapshottableDir

Output

drwxr-xr-x 0 hdfs supergroup 0 2018-03-08 00:54 0 65536 /user/mudassar

#################################

# copy a file from local to the HDFS snapshottable directory

#################################

hdfs dfs -put /tmp/text1.txt /user/mudassar

#################################

# Validate the file was copied

#################################

hdfs dfs -ls /user/mudassar

Found 1 items

-rw-r--r-- 1 hdfs supergroup 58 2018-03-08 00:55 /user/mudassar/text1.txt

#################################

# create snapshot

#################################

hdfs dfs -createSnapshot /user/mudassar

Output

Created snapshot /user/mudassar/.snapshot/s20180308-005619.181

#################################

# Check to see the snapshot

#################################

hdfs dfs -ls /user/mudassar/.snapshot

Output

Found 1 items

drwxr-xr-x - hdfs supergroup 0 2018-03-08 00:56 /user/mudassar/.snapshot/s20180308-005619.181

#################################

# copy another file to the directory

#################################

hdfs dfs -put /tmp/text2.txt /user/mudassar

#################################

# Check the files exist in /user/mudassar; notice the timestamps, permissions etc.

#################################

hdfs dfs -ls /user/mudassar

output

Found 2 items

-rw-r--r-- 1 hdfs supergroup 58 2018-03-08 00:55 /user/mudassar/text1.txt

-rw-r--r-- 1 hdfs supergroup 456 2018-03-08 00:58 /user/mudassar/text2.txt

#################################

# Changed ownership of one of the files

#################################

hdfs dfs -chown cloudera:supergroup /user/mudassar/text2.txt

#################################

#Create second snapshot

#################################

hdfs dfs -createSnapshot /user/mudassar

#################################

# Checked the directory: notice we now have 2 snapshots, one contains ONLY text1.txt and the other contains both files

#################################

$ hdfs dfs -ls /user/mudassar/.snapshot

Output

Found 2 items

drwxr-xr-x - hdfs supergroup 0 2018-03-08 00:56 /user/mudassar/.snapshot/s20180308-005619.181

drwxr-xr-x - hdfs supergroup 0 2018-03-08 01:01 /user/mudassar/.snapshot/s20180308-010152.924

#################################

# Simulate accidental deletion of the files

#################################

hdfs dfs -rm /user/mudassar/*

output

18/03/08 01:06:26 INFO fs.TrashPolicyDefault: Moved: 'hdfs://quickstart.cloudera:8020/user/mudassar/text1.txt' to trash at: hdfs://quickstart.cloudera:8020/user/hdfs/.Trash/Current/user/mudassar/text1.txt

18/03/08 01:06:26 INFO fs.TrashPolicyDefault: Moved: 'hdfs://quickstart.cloudera:8020/user/mudassar/text2.txt' to trash at: hdfs://quickstart.cloudera:8020/user/hdfs/.Trash/Current/user/mudassar/text2.txt

#################################

# Validate the files were deleted

#################################

hdfs dfs -ls /user/mudassar

This returns nothing, but the directory still exists; run hdfs dfs -ls /user/ to confirm.

#################################

# Check the contents of the latest snapshot (the one ending in .924, see above)

#################################

hdfs dfs -ls -R /user/mudassar/.snapshot/s20180308-010152.924

output

-rw-r--r-- 1 hdfs supergroup 58 2018-03-08 00:55 /user/mudassar/.snapshot/s20180308-010152.924/text1.txt

-rw-r--r-- 1 cloudera supergroup 456 2018-03-08 00:58 /user/mudassar/.snapshot/s20180308-010152.924/text2.txt

#################################

# Recover the 2 files from snapshot using -ptopax option

#################################

hdfs dfs -cp -ptopax /user/mudassar/.snapshot/s20180308-010152.924/* /user/mudassar/

#################################

# Validate the files were restored with original timestamps, ownership, permission, ACLs and XAttrs.

#################################

hdfs dfs -ls /user/mudassar

output

-rw-r--r-- 1 hdfs supergroup 58 2018-03-08 00:55 /user/mudassar/text1.txt

-rw-r--r-- 1 cloudera supergroup 456 2018-03-08 00:58 /user/mudassar/text2.txt

There you are: you have recovered the 2 files that were deleted accidentally. Please let me know if that worked out for you.
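When a directory has several snapshots, picking the newest one by eye gets tedious. The sketch below is one way to do it, assuming the snapshots use the default generated names (such as s20180308-010152.924), which embed the creation time and therefore sort chronologically. The latest_snapshot helper is a made-up name, and the sample input is just the two snapshot paths listed above.

```shell
#!/usr/bin/env bash
# Pick the most recent snapshot from a list of snapshot paths on stdin.
# Default names such as s20180308-010152.924 embed the creation time, so a
# plain byte-wise sort also orders them chronologically.
latest_snapshot() {
  LC_ALL=C sort | tail -n 1
}

# Sample input: the two snapshot paths shown earlier in this post.
printf '%s\n' \
  /user/mudassar/.snapshot/s20180308-010152.924 \
  /user/mudassar/.snapshot/s20180308-005619.181 \
  | latest_snapshot
```

On a real cluster the input could come from something like hdfs dfs -ls /user/mudassar/.snapshot | grep '^d' | awk '{print $NF}', which keeps only the directory entries and their paths. Snapshots created with custom names would need a different ordering rule.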

Created 03-08-2018 10:19 PM


Any updates ?

Created 03-09-2018 05:33 PM


You haven't given your feedback on the method and solution I provided. You should understand that members go a long way to help out, and feedback on whether the solution resolved your problem is appreciated. If it did, accept the answer to reward the user and close the thread, so that others with a similar problem can use it as a SOLUTION.

Created 03-11-2018 10:31 AM


Hi @geoffrey shelton Okot, I am really sorry. I was stuck on another task (deadline). I know it is a great thing when someone comes and helps you, and you always help us a lot. Last time, my "ACLs" topic was resolved with your help.

I have not tried this solution yet, but in the coming days I will definitely try it and let you know.

Many Thanks

Created 03-11-2018 07:22 PM


No worries. I was worried you got stuck and didn't revert. HCC is full of solutions, so don't hesitate to update the thread if you encounter any problems. If the solution provided resolves your issue, then that's great; what you need to do is accept the answer and close the thread.

Cheers

Created on 03-15-2018 07:28 AM - edited 08-18-2019 03:00 AM


Thanks A lot @Geoffrey Shelton Okot

I have tried this solution and got the output, but I am not satisfied with my own understanding of this topic, so I want to do another example.

Please help me out. I am using an Amazon machine (Hortonworks):

First of all I created the user "mudassar",

then changed the ownership with this command:

1. "sudo -u hdfs hadoop fs -chown mudassar:mudassar /user/mudassar"

then go to user "mudassar"

2. sudo su - mudassar

then i create a directory(snapdemo) in this user :

3. hadoop fs -mkdir /user/mudassar/snapdemo

then i create another directory(directory2) in this user :

4. hadoop fs -mkdir /user/mudassar/directory2

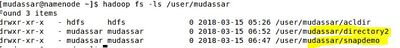

Now i have 2 directories in this user. Please see the below screen :

1. Now I want to create the text file (text1.txt) in the "snapdemo" directory, and then take a snapshot of this directory into another directory (directory2) of this user.

2. Then delete the file (text1.txt) from the "snapdemo" directory and recover it from the snapshot in "directory2".

Hope above make sense to you.

Thanks Again

Cheers


Created 05-31-2020 11:45 PM


Hi shelton,

Please write me the command that recovers snapshot data while retaining its ownership, timestamps, permissions and ACLs.

Looking forward to hearing from you.

thanks

Created 03-15-2018 11:51 AM


Thanks a lot 🙂 @Geoffrey Shelton Okot

I got the desired output. I will do some more work on this topic.

I will let you know if I get stuck at any point.

One thing I want to let you know: the command below is not working on my machine:

"hdfs lsSnapshottableDir" (show all the snapshottable directories)

Cheers 🙂