Cloudera Community: Support Questions

How to execute Hive DDL commands from Apache NiFi?

Labels: Apache NiFi

Created 06-04-2018 07:23 AM


I am trying to create some tables in Hive from Apache NiFi, but I didn't find a processor made specifically for that. I did find a processor named PutHiveQL, which can be used for DDL/DML operations, but it has no property where I can write the query. If it is the right processor for this purpose, how can I use it in my case?

Created on 06-04-2018 10:12 AM - edited 08-17-2019 09:23 PM


The PutHiveQL processor is used to execute Hive DDL/DML commands, and it expects the content of the incoming flowfile to be the HiveQL command itself.

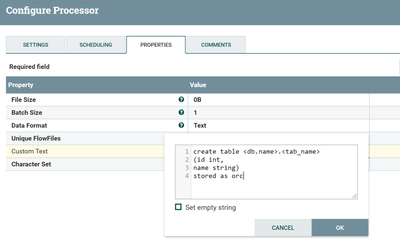

You can put your CREATE TABLE statement into the flowfile content with a GenerateFlowFile processor (or a ReplaceText processor, etc.) and route its success relationship to the PutHiveQL processor. PutHiveQL then executes the flowfile content and creates the table.

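Flow: GenerateFlowFile (success) -> PutHiveQL

GenerateFlowFile configs: put the CREATE TABLE statement in the Custom Text property.

PutHiveQL configs: set Hive Database Connection Pooling Service to a HiveConnectionPool controller service.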
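To make the GenerateFlowFile step concrete, here is a small Python sketch that builds the kind of single CREATE TABLE statement PutHiveQL expects as flowfile content. The table name and columns are hypothetical placeholders, and STORED AS ORC is just one possible choice; the printed text is what you would paste into GenerateFlowFile's Custom Text property.

```python
# Hypothetical table definition; replace with your own schema.
TABLE_NAME = "default.customers"
COLUMNS = [("id", "INT"), ("name", "STRING"), ("signup_date", "DATE")]


def build_create_table(table: str, columns: list[tuple[str, str]], stored_as: str = "ORC") -> str:
    """Return one HiveQL CREATE TABLE statement, suitable as flowfile content."""
    # One "name TYPE" definition per column, one per line.
    col_defs = ",\n  ".join(f"{name} {hive_type}" for name, hive_type in columns)
    return (
        f"CREATE TABLE IF NOT EXISTS {table} (\n"
        f"  {col_defs}\n"
        f") STORED AS {stored_as}"
    )


if __name__ == "__main__":
    # This text is what would go into GenerateFlowFile's Custom Text property.
    print(build_create_table(TABLE_NAME, COLUMNS))
```

IF NOT EXISTS keeps the flow safe to re-run: a second flowfile with the same statement will not fail because the table already exists.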

Configure and enable the HiveConnectionPool controller service. If the flowfile content contains more than one Hive DDL/DML command, separate the commands with ; (the processor's Statement Delimiter), and the processor will execute each of them in turn.
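Here is a simplified Python model of how multi-statement content gets broken up, assuming a plain split on the delimiter with surrounding whitespace trimmed and empty pieces dropped. It is an illustration, not the processor's actual code: the real PutHiveQL may treat edge cases (for example a ; inside a quoted string literal) differently, so it is safest to keep literal semicolons out of your statements or pick a different Statement Delimiter.

```python
def split_statements(content: bytes, delimiter: str = ";", charset: str = "utf-8") -> list[str]:
    """Decode flowfile content and split it into individual HiveQL statements.

    Simplified model: plain split on the delimiter, whitespace trimmed,
    empty pieces (e.g. after a trailing delimiter) dropped.
    Delimiters inside quoted strings are NOT handled.
    """
    text = content.decode(charset)
    return [stmt.strip() for stmt in text.split(delimiter) if stmt.strip()]


if __name__ == "__main__":
    # Hypothetical two-statement flowfile content.
    flowfile_content = b"""
    CREATE DATABASE IF NOT EXISTS staging;
    CREATE TABLE IF NOT EXISTS staging.events (id INT, payload STRING);
    """
    for i, stmt in enumerate(split_statements(flowfile_content), start=1):
        print(i, stmt)
```

Running it prints the two statements numbered 1 and 2, without their trailing semicolons.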

In NiFi, the ConvertAvroToORC processor adds a hive.ddl attribute derived from the flowfile content. We can use that attribute in a ReplaceText processor to build new flowfile content, and then execute the Hive DDL statement with the PutHiveQL processor.
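The ReplaceText step can be sketched in Python as plain attribute substitution. This assumes a Replacement Strategy of Always Replace and a Replacement Value of `${hive.ddl} LOCATION '${absolute.hdfs.path}'`. As I understand the ConvertAvroToORC documentation, hive.ddl is only a partial CREATE EXTERNAL TABLE ... STORED AS ORC statement and needs a LOCATION clause pointing at the HDFS directory that holds the ORC files. absolute.hdfs.path is assumed to be written by an upstream PutHDFS processor, and the attribute values below are made up for illustration. Only bare `${name}` references are handled, not Expression Language functions.

```python
import re

# Matches NiFi-style attribute references such as ${hive.ddl}.
# Only plain attribute names are supported; Expression Language
# functions (e.g. ${filename:toUpper()}) are out of scope for this sketch.
_ATTR_REF = re.compile(r"\$\{([A-Za-z0-9_.\-]+)\}")


def render(template: str, attributes: dict[str, str]) -> str:
    """Replace each ${name} in template with attributes[name] (KeyError if missing)."""
    return _ATTR_REF.sub(lambda m: attributes[m.group(1)], template)


if __name__ == "__main__":
    # Hypothetical attribute values: hive.ddl as set by ConvertAvroToORC,
    # absolute.hdfs.path as assumed to be set by an upstream PutHDFS.
    attrs = {
        "hive.ddl": "CREATE EXTERNAL TABLE IF NOT EXISTS events (id INT, payload STRING) STORED AS ORC",
        "absolute.hdfs.path": "/data/events",
    }
    # Assumed ReplaceText Replacement Value (Replacement Strategy: Always Replace).
    replacement_value = "${hive.ddl} LOCATION '${absolute.hdfs.path}'"
    print(render(replacement_value, attrs))
```

The rendered string becomes the new flowfile content, which PutHiveQL then executes like any other DDL.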

Please refer to this link for more details regarding generating/executing hive.ddl statements using NiFi.
