How to get the full file path to my HDFS root?

Labels: Apache Hadoop, HDFS

Created on 03-14-2016 09:08 PM - edited 09-16-2022 03:09 AM


How do I find the full file path to my Hadoop root? I think it has the form hdfs://nn.xxxxxxxxx:port_no/

Created 03-17-2016 10:41 PM


A couple of observations and a few recommendations. First, if you are trying to run the Pig script from the Linux command line, I recommend saving your Pig script locally and then running it. Also, you don't really need to fully qualify the location of the input file the way you are doing in your script. Here is a walkthrough of something like what you are doing now, all from the command line.

SSH to the Sandbox and become maria_dev. I have an earlier 2.4 version of the Sandbox, which does not have a local maria_dev user account (she does have an account in Ambari as well as an HDFS home directory), so I had to create that first as shown below. If the first "su" command works, skip the "useradd" command. Then verify that she has an HDFS home directory.

    HW10653-2:~ lmartin$ ssh root@127.0.0.1 -p 2222
    root@127.0.0.1's password:
    Last login: Tue Mar 15 22:14:09 2016 from 10.0.2.2
    [root@sandbox ~]# su maria_dev
    su: user maria_dev does not exist
    [root@sandbox ~]# useradd -m -s /bin/bash maria_dev
    [root@sandbox ~]# su - maria_dev
    [maria_dev@sandbox ~]$ hdfs dfs -ls /user
    Found 17 items
    <<NOTE: I deleted all except the one I was looking for...
    drwxr-xr-x - maria_dev hdfs 0 2016-03-14 22:49 /user/maria_dev

Then copy a file to HDFS that you can read later.

    [maria_dev@sandbox ~]$ hdfs dfs -put /etc/hosts hosts.txt
    [maria_dev@sandbox ~]$ hdfs dfs -cat /user/maria_dev/hosts.txt
    # File is generated from /usr/lib/hue/tools/start_scripts/gen_hosts.sh
    # Do not remove the following line, or various programs
    # that require network functionality will fail.
    127.0.0.1  localhost.localdomain localhost
    10.0.2.15  sandbox.hortonworks.com sandbox ambari.hortonworks.com
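
The -put above used a relative target (hosts.txt), and HDFS resolved it against maria_dev's home directory, which is why the file can be read back as /user/maria_dev/hosts.txt. A minimal sketch of that resolution rule, assuming the default home-directory prefix /user; the function name hdfs_abs_path is hypothetical and not part of any Hadoop tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a Hadoop command): show which HDFS path a
# path argument to "hdfs dfs" refers to for a given user, assuming the
# default home-directory prefix /user.
hdfs_abs_path() {
  local path="$1" user="$2"
  case "$path" in
    hdfs://*) printf '%s\n' "$path" ;;                  # fully qualified URI: unchanged
    /*)       printf '%s\n' "$path" ;;                  # absolute path: unchanged
    *)        printf '/user/%s/%s\n' "$user" "$path" ;; # relative: under the home directory
  esac
}

hdfs_abs_path hosts.txt maria_dev                   # prints /user/maria_dev/hosts.txt
hdfs_abs_path /user/maria_dev/hosts.txt maria_dev   # prints /user/maria_dev/hosts.txt
```

Both calls name the same file, which is the same reason a LOAD statement can use a plain /user/... path instead of a full hdfs:// URI.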

Now put the following two lines of code into a LOCAL file called runme.pig, as shown in the listing below.

    [maria_dev@sandbox ~]$ pwd
    /home/maria_dev
    [maria_dev@sandbox ~]$ cat runme.pig
    data = LOAD '/user/maria_dev/hosts.txt';
    DUMP data;
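
If you prefer not to open an editor, a shell here-document is one way to create that local file. This sketch writes the same two Pig Latin lines into runme.pig in the current local directory (not HDFS):

```shell
#!/usr/bin/env bash
# Create runme.pig on the LOCAL file system, in the current directory.
# Quoting the 'EOF' delimiter keeps the shell from expanding anything
# inside the here-document, so the Pig Latin is written verbatim.
cat > runme.pig <<'EOF'
data = LOAD '/user/maria_dev/hosts.txt';
DUMP data;
EOF

cat runme.pig
```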

Then just run it (remember, no dash in front of the script name!). NOTE: I removed many lines of the logging output that is interleaved with the DUMP of the hosts.txt file.

[maria_dev@sandbox ~]$ pig runme.pig

... REMOVED A BUNCH OF LOGGING MESSAGES ...

2016-03-17 22:38:45,636 [main] INFO org.apache.pig.tools.pigstats.mapreduce.SimplePigStats - Script Statistics:

HadoopVersion PigVersion UserId StartedAt FinishedAt Features

2.7.1.2.4.0.0-169 0.15.0.2.4.0.0-169 maria_dev 2016-03-17 22:38:10 2016-03-17 22:38:45 UNKNOWN

Success!

Job Stats (time in seconds):

JobId Maps Reduces MaxMapTime MinMapTime AvgMapTime MedianMapTime MaxReduceTime MinReduceTime AvgReduceTime MedianReducetime Alias Feature Outputs

job_1458253459880_0001 1077770 data MAP_ONLY hdfs://sandbox.hortonworks.com:8020/tmp/temp212662320/tmp-490136848,

Input(s):

Successfully read 5 records (670 bytes) from: "/user/maria_dev/hosts.txt"

Output(s):

Successfully stored 5 records (310 bytes) in: "hdfs://sandbox.hortonworks.com:8020/tmp/temp212662320/tmp-490136848"

Counters:

Total records written : 5

Total bytes written : 310

Spillable Memory Manager spill count : 0

Total bags proactively spilled: 0

Total records proactively spilled: 0

Job DAG:

job_1458253459880_0001

... REMOVED ABOUT 10 MORE LOGGING MESSAGES ...

.... THE NEXT BIT IS THE RESULTS OF THE DUMP COMMAND ....

(# File is generated from /usr/lib/hue/tools/start_scripts/gen_hosts.sh)

(# Do not remove the following line, or various programs)

(# that require network functionality will fail.)

(127.0.0.1,,localhost.localdomain localhost)

(10.0.2.15,sandbox.hortonworks.com sandbox ambari.hortonworks.com)

2016-03-17 22:38:46,662 [main] INFO org.apache.pig.Main - Pig script completed in 43 seconds and 385 milliseconds (43385 ms)

    [maria_dev@sandbox ~]$

Does this work for you? If so, then you can run a Pig script from the CLI, and remember: you do NOT need all the fully qualified naming junk when running this way. GOOD LUCK!
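
The "no dashes" reminder is the crux of this thread: in "pig -risk.pig" the leading dash makes the script name look like a Pig command-line option rather than a script file. A hypothetical wrapper (run_pig is not part of Pig) that catches that mistake before calling pig; any extra arguments, such as -x tez to force Tez as suggested elsewhere in this thread, are passed through ahead of the script name:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper (not part of Pig): run a local Pig script in batch
# mode, refusing the "pig -script.pig" mistake up front.
run_pig() {
  if [ "$#" -eq 0 ]; then
    echo "usage: run_pig SCRIPT [pig options...]" >&2
    return 2
  fi
  local script="$1"
  shift
  case "$script" in
    -*)
      # A leading dash makes the script name look like a Pig option.
      echo "error: drop the dash, e.g. run_pig ${script#-}" >&2
      return 1
      ;;
  esac
  # Any remaining arguments (e.g. -x tez) go before the script name.
  pig "$@" "$script"
}

# Usage, on a node where pig is on the PATH:
#   run_pig runme.pig            # runs: pig runme.pig
#   run_pig risk.pig -x tez      # runs: pig -x tez risk.pig
```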

Created 03-14-2016 10:06 PM


You can look for the following stanza in /etc/hadoop/conf/hdfs-site.xml (this key-value pair can also be found in Ambari under Services > HDFS > Configs > Advanced > Advanced hdfs-site > dfs.namenode.rpc-address).

    <property>
      <name>dfs.namenode.rpc-address</name>
      <value>sandbox.hortonworks.com:8020</value>
    </property>

Then plug that value into your request.

    [root@sandbox conf]# hadoop fs -ls hdfs://sandbox.hortonworks.com:8020/user/it1
    Found 5 items
    drwx------ - it1 hdfs 0 2016-03-07 06:16 hdfs://sandbox.hortonworks.com:8020/user/it1/.staging
    drwxr-xr-x - it1 hdfs 0 2016-03-07 02:47 hdfs://sandbox.hortonworks.com:8020/user/it1/2016-03-07-02-47-10
    drwxr-xr-x - it1 hdfs 0 2016-03-07 06:16 hdfs://sandbox.hortonworks.com:8020/user/it1/avg-output
    drwxr-xr-x - maria_dev hdfs 0 2016-03-13 23:42 hdfs://sandbox.hortonworks.com:8020/user/it1/geolocation
    drwxr-xr-x - it1 hdfs 0 2016-03-07 02:44 hdfs://sandbox.hortonworks.com:8020/user/it1/input
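
Rather than reading the stanza by eye, the value can be pulled out of hdfs-site.xml and turned into the root URI with a little shell. A minimal sketch, assuming the common layout with the name and value elements on separate lines (it is not a general XML parser); get_site_prop and namenode_root_uri are hypothetical names. On a node with the Hadoop client installed, "hdfs getconf -confKey dfs.namenode.rpc-address" reads the same key through Hadoop's own configuration loading.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of a named property from a Hadoop
# *-site.xml file. Assumes <name> and <value> sit on separate lines.
get_site_prop() {
  local key="$1" file="$2"
  awk -v key="$key" '
    /<name>/  { n = $0; gsub(/.*<name>|<\/name>.*/, "", n); name = n }
    /<value>/ { if (name == key) { v = $0; gsub(/.*<value>|<\/value>.*/, "", v); print v; exit } }
  ' "$file"
}

# Build hdfs://host:port/ from dfs.namenode.rpc-address.
# Defaults to the config path mentioned in the answer above.
namenode_root_uri() {
  local addr
  addr=$(get_site_prop dfs.namenode.rpc-address "${1:-/etc/hadoop/conf/hdfs-site.xml}")
  [ -n "$addr" ] || return 1
  printf 'hdfs://%s/\n' "$addr"
}

# On a live node, the Hadoop CLI can read the same key directly:
#   hdfs getconf -confKey dfs.namenode.rpc-address
```

With the Sandbox stanza shown above, namenode_root_uri would print hdfs://sandbox.hortonworks.com:8020/.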

For HA (NameNode high availability) setups, you would use dfs.ha.namenodes.mycluster instead, as described in http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.4.0/bk_hadoop-ha/content/ha-nn-config-cluster.ht....
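
With HA, clients normally address the cluster through the logical nameservice ID rather than one NameNode's host:port (for example hdfs://mycluster/ when the nameservice is named mycluster), and each NameNode's RPC address is stored under its own per-NameNode key. A hypothetical sketch of how those names fit together; mycluster, nn1 and nn2 are placeholder IDs, and the helper names are invented for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for an HA cluster. Clients use the logical
# nameservice URI; each NameNode's address has its own config key.

# $1 = nameservice ID, $2 = value of dfs.ha.namenodes.<nameservice>, e.g. "nn1,nn2"
ha_rpc_address_keys() {
  local ns="$1" id
  local IFS=','
  for id in $2; do
    printf 'dfs.namenode.rpc-address.%s.%s\n' "$ns" "$id"
  done
}

# $1 = nameservice ID
ha_root_uri() {
  printf 'hdfs://%s/\n' "$1"
}

# On a live cluster, the NameNode IDs could come from:
#   hdfs getconf -confKey dfs.ha.namenodes.mycluster
ha_rpc_address_keys mycluster nn1,nn2
ha_root_uri mycluster
```

Run as shown, it lists dfs.namenode.rpc-address.mycluster.nn1 and dfs.namenode.rpc-address.mycluster.nn2, then hdfs://mycluster/.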

Created 03-15-2016 12:30 PM


Thanks, that works, but I still can't run a Pig script using "pig -risk.pig", with the full file path, or with "grunt> run risk.pig".

How on earth do I run a Pig file without using Ambari or Grunt?

Created 03-15-2016 05:04 PM


Don't supply the dash; just type "pig risk.pig". If you want to guarantee that it runs with Tez, then type "pig -x tez risk.pig".

Well... that's assuming that risk.pig is on the local file system, not HDFS. Are you trying to run a Pig script that is stored on HDFS, or are you trying to reference a file to read from within your Pig script? If the latter, then you shouldn't need the full HDFS path, just the path itself, such as "/user/it1/data.txt".
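
The point about not needing the full HDFS path can be illustrated with a hypothetical helper (hdfs_path_only is not a Hadoop command) that strips the scheme and host:port from a fully qualified URI. The remaining plain path is what a LOAD statement or "hdfs dfs" accepts, assuming, as on the Sandbox, that the URI's NameNode is the cluster default set by fs.defaultFS:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reduce a fully qualified hdfs:// URI to its plain
# path. Only equivalent when that NameNode is the default file system.
hdfs_path_only() {
  local uri="$1" rest
  case "$uri" in
    hdfs://*)
      rest="${uri#hdfs://}"                   # drop the scheme
      case "$rest" in
        */*) printf '/%s\n' "${rest#*/}" ;;   # drop host:port, keep the path
        *)   printf '/\n' ;;                  # bare authority means the root
      esac
      ;;
    *) printf '%s\n' "$uri" ;;                # already a plain path
  esac
}

hdfs_path_only hdfs://sandbox.hortonworks.com:8020/user/it1   # prints /user/it1
```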

If the script is on HDFS, you should be able to run it with "pig hdfs://nn.mydomain.com:9020/myscripts/script.pig", as described in http://pig.apache.org/docs/r0.15.0/start.html#batch-mode.
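
To answer the "local file system or HDFS?" question for a particular script, a hypothetical helper (script_location is not a Hadoop command) can check both places. It relies on "hdfs dfs -test -e", which exits with status 0 when the path exists on HDFS; if the hdfs CLI is missing, that check simply fails:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a Pig script exists on the local
# file system, on HDFS, or neither.
script_location() {
  local path="$1"
  if [ -f "$path" ]; then
    echo local      # run it directly: pig <script>
  elif hdfs dfs -test -e "$path" 2>/dev/null; then
    echo hdfs       # run it with a full hdfs:// URI, per the Pig docs
  else
    echo missing
  fi
}

# Usage: script_location risk.pig
```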

Created on 03-15-2016 10:02 PM - edited 08-19-2019 03:02 AM


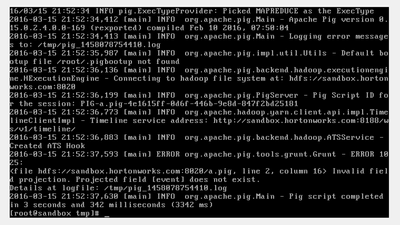

I still can't run any .pig script. It doesn't give me confidence for the exams if the default install can't do basic functions.

Created 03-15-2016 11:16 PM


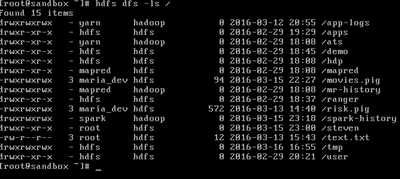

Can you run "hdfs dfs -ls /" to see what is in the root directory? It looks like you are looking for "/a.pig", which would be in the root of the file system. Is that where you have stored your Pig script?

Created on 03-16-2016 05:24 PM - edited 08-19-2019 03:02 AM


Here is the HDFS root:

    data = LOAD 'hdfs://sandbox.hortonworks.com:8020/root/tmp/maria_dev/movies.txt';
    DUMP data;

That .txt file is a simple list of movies. So which directory should I be able to run "pig -movies.pig" from?


Created 03-30-2018 01:04 PM


Is this the correct way to specify the path of an HDFS file? Is it the same for everyone?

Created 03-19-2016 07:40 AM


Thanks I will look at that over the weekend.