@fy-test

Welcome to the community.

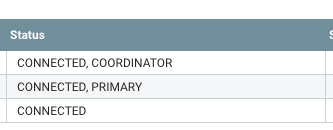

No matter which NiFi node you are connected to, any change request must be sent to the elected "Cluster Coordinator", which replicates that request to all connected nodes. If any node that has been asked to make the change fails to respond in time, that node will get disconnected. The elected "Primary Node" is the node on which any primary-node-only scheduled processor components will run. It is also important to understand that which node is elected as the "Primary Node" or "Cluster Coordinator" can change at any time.
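
Since the elected roles can move between nodes, it can help to check which node currently holds each one. Here is a minimal sketch, assuming you have already fetched the cluster summary as JSON (as far as I know it is served from `/nifi-api/controller/cluster`) and that each node entry carries `address`, `apiPort`, and a `roles` list. The helper name `nodes_by_role` and the sample hostnames are made up for illustration, so verify the field names against your NiFi version.

```python
from typing import Dict, List


def nodes_by_role(cluster_entity: dict) -> Dict[str, List[str]]:
    """Map each cluster role to the 'address:port' labels of the nodes holding it."""
    roles: Dict[str, List[str]] = {}
    for node in cluster_entity.get("cluster", {}).get("nodes", []):
        label = f"{node.get('address')}:{node.get('apiPort')}"
        # A node with no elected role is assumed to have an empty (or missing) roles list.
        for role in node.get("roles", []):
            roles.setdefault(role, []).append(label)
    return roles


# Hypothetical payload shaped like the assumed cluster summary response.
sample_cluster = {
    "cluster": {
        "nodes": [
            {"address": "nifi-1.example.com", "apiPort": 8443, "status": "CONNECTED",
             "roles": ["Primary Node"]},
            {"address": "nifi-2.example.com", "apiPort": 8443, "status": "CONNECTED",
             "roles": ["Cluster Coordinator"]},
            {"address": "nifi-3.example.com", "apiPort": 8443, "status": "CONNECTED",
             "roles": []},
        ]
    }
}

for role, holders in nodes_by_role(sample_cluster).items():
    print(role, "->", ", ".join(holders))
```

Running this on a schedule and logging the result would show how often the roles actually move in your cluster, which is useful context when you are chasing node disconnections.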

I don't think forcing all your users onto the Primary Node is going to solve your issue.

Even with a node disconnection caused by a failure of the request replication, the disconnected node should attempt to reconnect to the cluster and inherit the cluster flow if it is different from the local flow on the connecting node.

You should also be looking at things like CPU load average, heap usage, and garbage collection stats on your Primary Node versus the other nodes.
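
One way to compare nodes side by side is to pull the per-node system diagnostics and line up the numbers. This is a rough sketch, assuming the JSON comes from `/nifi-api/system-diagnostics?nodewise=true` and that each entry under `nodeSnapshots` has a `snapshot` with `processorLoadAverage`, `heapUtilization`, and a `garbageCollection` list containing `collectionMillis`. The function name `summarize_nodes` and the sample values are hypothetical, so check the field names against what your NiFi version actually returns.

```python
from typing import List


def summarize_nodes(diagnostics: dict) -> List[dict]:
    """Return one row per node with load average, heap utilization, and total GC time."""
    rows = []
    snapshots = diagnostics.get("systemDiagnostics", {}).get("nodeSnapshots", [])
    for entry in snapshots:
        snap = entry.get("snapshot", {})
        # Sum GC time across all collectors reported for the node.
        gc_millis = sum(gc.get("collectionMillis", 0)
                        for gc in snap.get("garbageCollection", []))
        rows.append({
            "node": f"{entry.get('address')}:{entry.get('apiPort')}",
            "load_average": snap.get("processorLoadAverage"),
            "heap_utilization": snap.get("heapUtilization"),
            "gc_millis": gc_millis,
        })
    return rows


# Hypothetical payload shaped like the assumed node-wise diagnostics response.
sample_diagnostics = {
    "systemDiagnostics": {
        "nodeSnapshots": [
            {"address": "nifi-1.example.com", "apiPort": 8443, "snapshot": {
                "processorLoadAverage": 7.5, "heapUtilization": "88.0%",
                "garbageCollection": [
                    {"name": "G1 Young Generation", "collectionMillis": 42000},
                    {"name": "G1 Old Generation", "collectionMillis": 9000}]}},
            {"address": "nifi-2.example.com", "apiPort": 8443, "snapshot": {
                "processorLoadAverage": 1.25, "heapUtilization": "41.0%",
                "garbageCollection": [
                    {"name": "G1 Young Generation", "collectionMillis": 6000}]}},
        ]
    }
}

for row in summarize_nodes(sample_diagnostics):
    print(row)
```

If one node consistently shows a much higher load average, heap utilization, or GC time than the rest, that is a good hint that it is the one struggling to answer replicated requests in time.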

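Perhaps adjusting the Max Timer Driven Thread Count or the cluster timeouts (for example, `nifi.cluster.node.connection.timeout` and `nifi.cluster.node.read.timeout` in nifi.properties) would be helpful.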

How well are your dataflow designs distributing the load across all nodes in your cluster?

Please help our community grow and thrive. If any of the suggestions/solutions provided helped you solve your issue or answer your question, please take a moment to log in and click "Accept as Solution" on one or more of them that helped.

Thank you,

Matt