Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: How to set equivalent output file size after s...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

How to set equivalent output file size after sqoop import?

- Labels:

-

Apache Sqoop

Created on 11-15-2016 12:33 AM - edited 08-18-2019 03:19 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sqoop Version 1.4.6 in HDP 2.5.0.0

Oracle 11g Select query size is about 2.3GB.

Sqoop Import

....

--num-mappers 4

--split-by STR

...

Result.

I think the mappers options doesn't disrelated with saving same file size.

I want to split output file size each per 570MB, but sqoop parameter is not support that feature.

Is that another options or tips for output file size?

Created 11-15-2016 07:26 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Peter Kim I don't think you can control file sizes other than changing the number of mappers as you seem to be doing, with one exception. If you are in direct mode you can use --direct-split-size 570000000 which will split into approximately 570MB files.

Created on 11-16-2016 01:12 AM - edited 08-18-2019 03:19 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

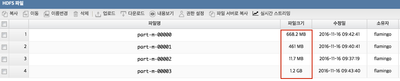

That param "--direct-split-size" is only for postsgreSQL, If I'm right. Because I already tested it, and It's not working. See my result.

Total table size is 2.3GB.

sqoop import

...

--num-mappers 4

--direct-split-size 600000000

Created 11-16-2016 01:56 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It looks like you're right about --direct-split-size being limited to postgresql. So, I think you'll need to depend on the number of mappers and the proper split-by column. What size files are you getting when you export with 4 mappers?

I noticed that in your original post, you have --split-by STR, which makes me think you're trying to split by a string column which is not possible and I think it will produce an error with more than one mapper.

But, let's assume you use a split-by column is actually numeric. Do you know for sure that your data is evenly distributed by the split-by column? If the numeric field is not evenly distributed, you will end up with some larger and some smaller files. Sqoop first does select min(<split-coumn>), max(split-column) from <table>. It then divides it by the number of mappers.

For example, suppose you have 25 records in the database. The primary key field "id" has 24 records with id's 1 through 24, plus one other record with id=100. If, we run 4 mappers, we get min 1, max 100, divided into 4 groups by id ranges for the 4 mappers. Each mapper writes the records in its ID range. So we will end up with ONE file containing 24 records, three empty files and the one with id = 100.

Created on 11-21-2016 01:29 AM - edited 08-18-2019 03:19 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

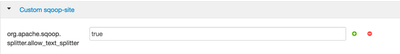

--split-by option is possible for text column after add sqoop-site.xml in ambari or add that option in command line.

Like this.

I think oracle record count is not relevant splitted file size. Because actual file size depends on column count and column type and column value size per one record.

And here's my interesting sqoop import results.

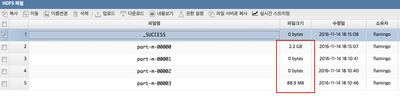

One file total size : 2.2 GB

sqoop import

...

--direct

--fetch size 1000

--num-mappers 10

--split-by EMP_NO (TEXT)

0 bytes each 3 mappers, and 1.1GB to 1 mapper.

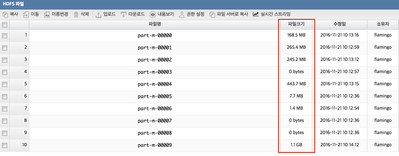

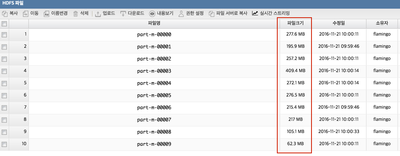

and re-test with same value except below option.

--split-by REEDER_ID (NUMBER)

In my opinion, Sqoop mappers only parallel processing without regard to the file size for selected query results in oracle record, these are not evenly split file size. Also --split-by with NUMBER TYPE COLUMN option is useful than TEXT TYPE COLUMN that is not accurate for splitted file size.

Created 11-16-2016 02:09 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Apparently there is a newer option "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" for splitting on string there is no guarantee it will split evenly.

Created 11-20-2016 11:24 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Peter Kim from the user guide

By default sqoop will use query select min(<split-by>), max(<split-by>) from <table name> to find out boundaries for creating splits. In some cases this query is not the most optimal so you can specify any arbitrary query returning two numeric columns using --boundary-query argument.

If the actual values for the primary key are not uniformly distributed across its range, then this can result in unbalanced tasks. You should explicitly choose a different column with the --split-by argument. For example, --split-by employee_id. Sqoop cannot currently split on multi-column indices. If your table has no index column, or has a multi-column key, then you must also manually choose a splitting column.

If a table does not have a primary key defined and the --split-by <col> is not provided, then import will fail unless the number of mappers is explicitly set to one with the --num-mappers 1 option or the --autoreset-to-one-mapper option is used. The option --autoreset-to-one-mapper is typically used with the import-all-tables tool to automatically handle tables without a primary key in a schema.

There's also a whole section on Oracle connector in Sqoop user guide v. 1.4.6

The Data Connector for Oracle and Hadoop generates queries for the mappers of the form:

SELECT *

FROM sometable

WHERE rowid >= dbms_rowid.rowid_create(1, 893, 1, 279, 0) AND

rowid <= dbms_rowid.rowid_create(1, 893, 1, 286, 32767)The Data Connector for Oracle and Hadoop queries ensure that:

- No two mappers read data from the same Oracle block. This minimizes redundant IO.

- The table does not require indexes.

- The Sqoop command line does not need to specify a

--split-bycolumn.