Support Questions (Cloudera Community)

How to terminate Zeppelin applications when idle

Labels: Apache Spark, Apache YARN, Apache Zeppelin

Created on 03-06-2017 03:26 PM - edited 08-19-2019 01:20 AM

Hi,

I need Zeppelin applications to terminate after being idle for a certain duration, and I have tried a number of things with no success. The applications just stay in the "RUNNING" state in the YARN ResourceManager. Can someone suggest anything I've missed that would get the applications closed?

I'm using Hortonworks HDP with Hadoop 2.7.3, Zeppelin, Spark 1.6.2 and YARN.

I've set the Zeppelin Spark interpreters to be isolated, so each notebook session uses a different SparkContext. I have enabled dynamic allocation with the shuffle service, but I can't see it having any effect. I've set the dynamic allocation properties in the custom spark-defaults config (through YARN) and also in the Zeppelin Spark interpreter config.

Below is a screenshot of the config values in the Zeppelin Spark interpreter:

My custom spark-defaults config is:

```properties
spark.storage.memoryFraction=0.45
spark.shuffle.spill.compress=false
spark.shuffle.spill=true
spark.shuffle.service.port=7337
spark.shuffle.service.enabled=true
spark.shuffle.memoryFraction=0.75
spark.shuffle.manager=SORT
spark.shuffle.consolidateFiles=true
spark.shuffle.compress=false
spark.dynamicAllocation.sustainedSchedulerBacklogTimeout=5
spark.dynamicAllocation.schedulerBacklogTimeout=5
spark.dynamicAllocation.minExecutors=6
spark.dynamicAllocation.initialExecutors=6
spark.dynamicAllocation.executorIdleTimeout=30
spark.dynamicAllocation.enabled=true
```
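For context on the settings above: dynamic allocation only scales executors. Executors idle for longer than spark.dynamicAllocation.executorIdleTimeout (a unitless value is read as seconds) are released, but never below spark.dynamicAllocation.minExecutors, and dynamic allocation does not stop the SparkContext, so the YARN application stays RUNNING while the interpreter keeps it open. The minimal Python sketch below reads `key=value` lines like the ones posted and reports the executor floor that would remain when idle. The helper names `parse_properties` and `idle_executor_floor` are hypothetical, and the embedded config is a subset of the values above.

```python
# Minimal sketch: parse spark-defaults style "key=value" lines and report how
# many executors dynamic allocation would keep once everything is idle.
# parse_properties and idle_executor_floor are hypothetical helper names.

SPARK_DEFAULTS = """
spark.dynamicAllocation.enabled=true
spark.dynamicAllocation.minExecutors=6
spark.dynamicAllocation.initialExecutors=6
spark.dynamicAllocation.executorIdleTimeout=30
spark.shuffle.service.enabled=true
"""


def parse_properties(text):
    """Return a dict of key -> value, skipping blank lines and # comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:  # ignore lines without '='
            props[key.strip()] = value.strip()
    return props


def idle_executor_floor(props):
    """Executors still held after all executors exceed the idle timeout.

    Returns None when dynamic allocation is disabled. minExecutors defaults
    to 0 in Spark. This counts executors only; the driver and the YARN
    ApplicationMaster are not released by dynamic allocation.
    """
    if props.get("spark.dynamicAllocation.enabled", "false").lower() != "true":
        return None
    return int(props.get("spark.dynamicAllocation.minExecutors", "0"))


if __name__ == "__main__":
    props = parse_properties(SPARK_DEFAULTS)
    print("idle executor floor:", idle_executor_floor(props))
```

With the values posted above this reports a floor of 6, so even a fully idle application would keep 6 executors in addition to its ApplicationMaster.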

My YARN node manager config:

```properties
yarn.nodemanager.aux-services=mapreduce_shuffle,spark_shuffle
```

YARN yarn-site config:

```properties
yarn.nodemanager.aux-services.mapreduce_shuffle.class=org.apache.hadoop.mapred.ShuffleHandler
yarn.nodemanager.aux-services.spark2_shuffle.class=org.apache.spark.network.yarn.YarnShuffleService
yarn.nodemanager.aux-services.spark2_shuffle.classpath={{stack_root}}/${hdp.version}/spark2/aux/*
yarn.nodemanager.aux-services.spark_shuffle.classpath={{stack_root}}/${hdp.version}/spark/aux/*
yarn.nodemanager.aux-services.spark_shuffle.class=org.apache.spark.network.yarn.YarnShuffleService
```

(I've checked the shuffle jar file location, and the jar is present under the `spark_shuffle` classpath above.)
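A quick consistency check on the NodeManager settings above is to confirm that every service named in `yarn.nodemanager.aux-services` has a matching `...<name>.class` entry, and to spot class entries for services that are not listed (and so, as far as I understand the NodeManager, would not be started). The minimal Python sketch below does that against a dict holding the posted values; `missing_aux_service_classes` and `unlisted_aux_service_classes` are hypothetical helper names.

```python
# Minimal sketch: cross-check yarn.nodemanager.aux-services against the
# per-service ".class" properties. Helper names are hypothetical.

PREFIX = "yarn.nodemanager.aux-services."
SUFFIX = ".class"

YARN_SITE = {
    "yarn.nodemanager.aux-services": "mapreduce_shuffle,spark_shuffle",
    "yarn.nodemanager.aux-services.mapreduce_shuffle.class":
        "org.apache.hadoop.mapred.ShuffleHandler",
    "yarn.nodemanager.aux-services.spark2_shuffle.class":
        "org.apache.spark.network.yarn.YarnShuffleService",
    "yarn.nodemanager.aux-services.spark_shuffle.class":
        "org.apache.spark.network.yarn.YarnShuffleService",
}


def listed_aux_services(conf):
    """Service names from the comma-separated aux-services list."""
    raw = conf.get("yarn.nodemanager.aux-services", "")
    return [s.strip() for s in raw.split(",") if s.strip()]


def missing_aux_service_classes(conf):
    """Listed services that have no '<name>.class' property."""
    return [s for s in listed_aux_services(conf) if PREFIX + s + SUFFIX not in conf]


def unlisted_aux_service_classes(conf):
    """Services with a '.class' property that are not in the aux-services list."""
    listed = set(listed_aux_services(conf))
    # endswith(".class") deliberately skips ".classpath" keys.
    defined = [k[len(PREFIX):-len(SUFFIX)] for k in conf
               if k.startswith(PREFIX) and k.endswith(SUFFIX)]
    return [s for s in defined if s not in listed]


if __name__ == "__main__":
    print("missing class entries:", missing_aux_service_classes(YARN_SITE))
    print("class entries not listed:", unlisted_aux_service_classes(YARN_SITE))
```

For the posted config, every listed service has a class entry; `spark2_shuffle` is defined but not listed, which should only matter for Spark 2 jobs, not Spark 1.6.2.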

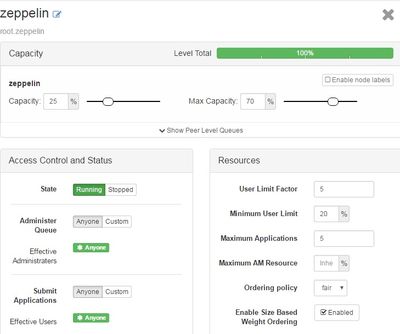

I've also created a YARN queue for all Zeppelin tasks; a screenshot of its settings is below:

Below is a screenshot of how the YARN ResourceManager looks after two Zeppelin notebooks have been run. I can see that the spark.executor.instances value from the Spark interpreter has ended up being used (10+1).

These applications stay running unless they are killed or the Spark interpreter is restarted. How can they be removed when idle?

Thank you for any suggestions or guidance.

Alistair

Created 03-06-2017 08:55 PM

I noticed that Livy can stop a Spark application after a timeout configured in the livy.server.session.timeout property. The Apache JIRA tracking a similar issue is https://issues.apache.org/jira/browse/ZEPPELIN-1293.

You can set up a Livy interpreter and run all your Spark notebooks with the livy.spark interpreter. Links to install and configure the Livy interpreter are below.
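To confirm that livy.server.session.timeout is actually closing idle sessions, Livy's REST API can be polled: `GET /sessions` returns a JSON body whose `sessions` array holds each session's `id` and `state`. The minimal Python sketch below assumes an unauthenticated Livy server at `http://localhost:8998` (Livy's default port; the host is an assumption), and `summarize_sessions` and `fetch_sessions` are hypothetical helper names. The sample payload is illustrative, not real output.

```python
# Minimal sketch: list Livy sessions and their states via the REST API.
# Assumes an unauthenticated Livy server at LIVY_URL (hypothetical host).
import json
import urllib.request

LIVY_URL = "http://localhost:8998"  # assumption: default Livy port on localhost


def summarize_sessions(payload):
    """Map session id -> state from a GET /sessions response body."""
    return {s["id"]: s["state"] for s in payload.get("sessions", [])}


def fetch_sessions(base_url=LIVY_URL):
    """GET /sessions and return the decoded JSON body."""
    with urllib.request.urlopen(base_url + "/sessions", timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Illustrative payload shaped like a GET /sessions response.
    sample = {"from": 0, "total": 2, "sessions": [
        {"id": 0, "kind": "spark", "state": "idle"},
        {"id": 1, "kind": "spark", "state": "busy"},
    ]}
    print(summarize_sessions(sample))
    # Against a real server: print(summarize_sessions(fetch_sessions()))
```

Running the commented-out call before and after the configured timeout should show whether idle sessions disappear from the list.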

Created 03-07-2017 05:31 PM

Thanks, I'll give that a try and get back to you.

Created 04-19-2017 01:34 PM

I found that Livy 0.2 has bugs in this functionality. They appear to be fixed in version 0.4.

I'm using Hortonworks HDP, which only ships Livy 0.2. I'm not going to upgrade it myself, as I would then lose the benefits of using HDP. I will wait for the next HDP release with Livy 0.4.

Thanks for your answer

Created 02-20-2018 01:18 PM

Using Apache Livy in Zeppelin is not the same as using the Spark interpreter on YARN. There are things you will notice are missing, even in Zeppelin 0.7.3 with Livy 0.4.0:

- No multiple outputs: if you have a count, a show and a bunch of other statements in one paragraph, Livy only shows you the last result

- No ZeppelinContext in Livy

- No dep() for users to add dependencies; you have to add them manually and restart the Livy server

I like Livy, but I had to move to the Spark interpreter because of these missing features. Also, from time to time you get an error 500, which makes it really hard to debug what crashed your app, whereas the Spark interpreter just shows you the error itself.

Created 04-04-2019 07:35 PM

Hi there, did you find a solution for this issue? I am facing the same problem. Thanks.

Created on 09-17-2022 12:02 PM - edited 09-17-2022 12:04 PM

Hi,

The solution to the problem is to set the following parameters in zeppelin-site.xml:

```xml
<property>
  <name>zeppelin.interpreter.lifecyclemanager.class</name>
  <value>org.apache.zeppelin.interpreter.lifecycle.TimeoutLifecycleManager</value>
  <description>LifecycleManager class for managing the lifecycle of interpreters, by default interpreter will be closed after timeout</description>
</property>

<property>
  <name>zeppelin.interpreter.lifecyclemanager.timeout.checkinterval</name>
  <value>360000</value>
  <description>milliseconds of the interval to checking whether interpreter is time out</description>
</property>

<property>
  <name>zeppelin.interpreter.lifecyclemanager.timeout.threshold</name>
  <value>3600000</value>
  <description>milliseconds of the interpreter timeout threshold, by default it is 1 hour</description>
</property>
```
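With these values an idle interpreter is not necessarily closed at exactly one hour: the check runs every 360000 ms (6 minutes), so the interpreter is closed at the first check after the 3600000 ms threshold has passed. The minimal Python sketch below works through that arithmetic. It assumes checks fire at fixed multiples of the check interval and that an interpreter is closed once its idle time strictly exceeds the threshold; that is a reading of a fixed-rate timeout check, not something verified against Zeppelin's source. `close_time_ms` is a hypothetical helper name.

```python
# Minimal sketch of when a fixed-rate timeout check would close an idle
# interpreter. Assumptions (not taken from Zeppelin's source): checks run at
# t = k * interval_ms for k = 1, 2, ..., and an interpreter is closed at the
# first check where its idle time strictly exceeds threshold_ms.

CHECK_INTERVAL_MS = 360_000  # zeppelin.interpreter.lifecyclemanager.timeout.checkinterval
THRESHOLD_MS = 3_600_000     # zeppelin.interpreter.lifecyclemanager.timeout.threshold


def close_time_ms(last_used_ms, interval_ms, threshold_ms):
    """Time of the first check at which (check - last_used) > threshold."""
    # Smallest multiple of interval_ms strictly greater than last_used + threshold.
    return ((last_used_ms + threshold_ms) // interval_ms + 1) * interval_ms


if __name__ == "__main__":
    for last_used in (0, 359_999):
        closed_at = close_time_ms(last_used, CHECK_INTERVAL_MS, THRESHOLD_MS)
        idle_min = (closed_at - last_used) / 60_000
        print(f"last used at {last_used} ms -> closed after {idle_min:.2f} min idle")
```

Under these assumptions the idle time before an interpreter is closed falls between just over 60 minutes and threshold + checkinterval = 66 minutes, so a shorter check interval tightens the window.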

Best Regards

Dariusz Jezierski