Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: INFO yarn.ApplicationMaster: Unregistering App...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

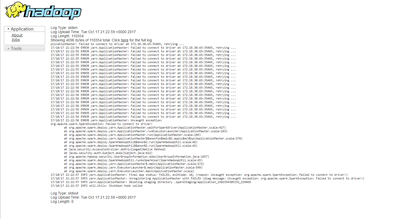

INFO yarn.ApplicationMaster: Unregistering ApplicationMaster with FAILED (diag message: Uncaught exception: org.apache.spark.SparkException: Failed to connect to driver!)

- Labels: Apache Spark

Created on 10-19-2017 02:36 PM - edited 08-17-2019 05:42 PM

A lot of jobs are failing with the error "Failed to connect to driver!".

Created 10-20-2017 06:52 AM

Try spark-submit --master <master-ip>:<spark-port> to submit the job.

Created 10-23-2017 04:43 PM

Thanks, Thangarajan, but giving the IP and port will tie the job to that particular IP and port.

So if those ports are not available, it could again be a problem. Is there any other workaround?

Thanks for your reply.

Created 10-20-2017 07:04 AM

Is this happening with all the jobs, for example even with long-running ones?

Sometimes this can happen when the Spark job finishes fine but too early, while the executors are still trying to contact the driver. YARN then ultimately declares the job as failed because the executors could not connect.

It can also happen if a firewall (a network issue) is blocking some ports, so you might want to check whether the ports are accessible. By default Spark chooses most ports at random, but you can try setting spark.driver.port to a fixed value, checking that it is reachable remotely, and seeing whether that helps. For other ports, please refer to:

http://spark.apache.org/docs/latest/security.html#configuring-ports-for-network-security

http://spark.apache.org/docs/latest/configuration.html#networking
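As a rough illustration of the suggestion above, here is a minimal Python sketch that builds the `--conf` flags for pinning the ports and does a plain TCP reachability check. The helper names `driver_port_conf` and `can_connect` and the port numbers 40000 and 40010 are made up for this example. The property names `spark.driver.port`, `spark.blockManager.port` and `spark.port.maxRetries` come from the Spark configuration page linked above. The check only means something if you run it from a cluster node towards the driver host while the driver is up and listening.

```python
import socket


def driver_port_conf(driver_port, block_manager_port, max_retries=16):
    """Build spark-submit --conf arguments that pin Spark's normally random ports.

    With a fixed start port, Spark tries that port first and then increments
    by one on each retry, up to spark.port.maxRetries attempts, so the
    firewall needs to allow that whole range.
    """
    settings = {
        "spark.driver.port": driver_port,
        "spark.blockManager.port": block_manager_port,
        "spark.port.maxRetries": max_retries,
    }
    args = []
    for key, value in settings.items():
        args += ["--conf", f"{key}={value}"]
    return args


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False


if __name__ == "__main__":
    # Hypothetical port numbers: use a range that is open in your firewall.
    print(" ".join(["spark-submit"] + driver_port_conf(40000, 40010)))
```

If `can_connect("<driver-host>", 40000)` returns False from a worker node while the job is running, a blocked port is a likely cause. If it returns True, the problem is probably somewhere else.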

Created 10-23-2017 04:45 PM

Yes, it is happening with all the jobs. After some time they pick some other port and start working fine.

What are those jobs trying to reach on that port? Are they trying to find the NodeManager?

For the successful jobs, I went to the port and ran ps -wwf port number, where I could see the NodeManager running.

Created 01-22-2019 07:31 PM

I have encountered the same issue.

Were you able to fix it?

If yes, can you please share the solution here?

Thanks in advance.