Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: INSERT INTO TABLE failing with error while mo...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

INSERT INTO TABLE failing with error while moving files from org.apache.hadoop.hive.ql.exec.Task

- Labels:

-

Apache Hive

-

Apache Oozie

Created on 05-03-2016 03:40 AM - edited 08-19-2019 03:03 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi all,

We have an Hive action within a Oozie workflow that throws the following error occasionally:

2016-04-29 16:16:19,129 INFO [main] ql.Driver (Driver.java:launchTask(1604)) - Starting task [Stage-0:MOVE] in serial mode 73997 [main] INFO org.apache.hadoop.hive.ql.exec.Task - Loading data to table audit.aud_tbl_validation_result from hdfs://<nameservice>/tmp/hive/<user>/6218606e-a08c-4912-ad02-6a147165b7d7/hive_2016-04-29_16-15-51_649_3401400148024571575-1/-ext-10000 2016-04-29 16:16:19,129 INFO [main] exec.Task (SessionState.java:printInfo(824)) - Loading data to table audit.aud_tbl_validation_result from hdfs://<nameservice>/tmp/hive/<user>/6218606e-a08c-4912-ad02-6a147165b7d7/hive_2016-04-29_16-15-51_649_3401400148024571575-1/-ext-10000 76263 [main] INFO hive.ql.metadata.Hive - Renaming src:hdfs://<nameservice>/tmp/hive/<user>/6218606e-a08c-4912-ad02-6a147165b7d7/hive_2016-04-29_16-15-51_649_3401400148024571575-1/-ext-10000/000000_0;dest: hdfs://<nameservice>/apps/hive/warehouse/audit.db/aud_tbl_validation_result/000000_0_copy_348;Status:false 2016-04-29 16:16:21,395 INFO [main] metadata.Hive (Hive.java:renameFile(2461)) - Renaming src:hdfs://<nameservice>/tmp/hive/<user>/6218606e-a08c-4912-ad02-6a147165b7d7/hive_2016-04-29_16-15-51_649_3401400148024571575-1/-ext-10000/000000_0;dest: hdfs://<nameservice>/apps/hive/warehouse/audit.db/aud_tbl_validation_result/000000_0_copy_348;Status:false 76274 [main] ERROR org.apache.hadoop.hive.ql.exec.Task - Failed with exception copyFiles: error while moving files!!! Cannot move hdfs://<nameservice>/tmp/hive/<user>/6218606e-a08c-4912-ad02-6a147165b7d7/hive_2016-04-29_16-15-51_649_3401400148024571575-1/-ext-10000/000000_0 to hdfs://<nameservice>/apps/hive/warehouse/audit.db/aud_tbl_validation_result/000000_0_copy_348 org.apache.hadoop.hive.ql.metadata.HiveException: copyFiles: error while moving files!!! Cannot move hdfs://<nameservice>/tmp/hive/<user>/6218606e-a08c-4912-ad02-6a147165b7d7/hive_2016-04-29_16-15-51_649_3401400148024571575-1/-ext-10000/000000_0 to hdfs://<nameservice>/apps/hive/warehouse/audit.db/aud_tbl_validation_result/000000_0_copy_348 at org.apache.hadoop.hive.ql.metadata.Hive.copyFiles(Hive.java:2536) at org.apache.hadoop.hive.ql.metadata.Table.copyFiles(Table.java:673) at org.apache.hadoop.hive.ql.metadata.Hive.loadTable(Hive.java:1571) at org.apache.hadoop.hive.ql.exec.MoveTask.execute(MoveTask.java:288) at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:160) at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:85) at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:1606) at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1367) at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1179) at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1006) at org.apache.hadoop.hive.ql.Driver.run(Driver.java:996) at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:247) at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:199) at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:410) at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:345) at org.apache.hadoop.hive.cli.CliDriver.processReader(CliDriver.java:443) at org.apache.hadoop.hive.cli.CliDriver.processFile(CliDriver.java:459) at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:739) at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:677) at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:616) at org.apache.oozie.action.hadoop.HiveMain.runHive(HiveMain.java:323) at org.apache.oozie.action.hadoop.HiveMain.run(HiveMain.java:284) at org.apache.oozie.action.hadoop.LauncherMain.run(LauncherMain.java:39) at org.apache.oozie.action.hadoop.HiveMain.main(HiveMain.java:66) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:606) at org.apache.oozie.action.hadoop.LauncherMapper.map(LauncherMapper.java:226) at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:54) at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:450) at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343) at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:163) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:415) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1628) at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158) Caused by: java.io.IOException: Cannot move hdfs://<nameservice>/tmp/hive/<user>/6218606e-a08c-4912-ad02-6a147165b7d7/hive_2016-04-29_16-15-51_649_3401400148024571575-1/-ext-10000/000000_0 to hdfs://<nameservice>/apps/hive/warehouse/audit.db/aud_tbl_validation_result/000000_0_copy_348 at org.apache.hadoop.hive.ql.metadata.Hive.copyFiles(Hive.java:2530) ... 36 more

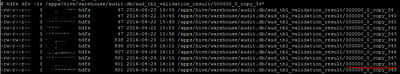

The Namenode logs reveals more details --> destination exists!

hadoop-hdfs-namenode-<host>.log.3:2016-04-29 16:16:21,394 WARN hdfs.StateChange (FSDirectory.java:unprotectedRenameTo(540)) - DIR* FSDirectory.unprotectedRenameTo: failed to rename /tmp/hive/<user>/6218606e-a08c-4912-ad02-6a147165b7d7/hive_2016-04-29_16-15-51_649_3401400148024571575-1/-ext-10000/000000_0 to /apps/hive/warehouse/audit.db/aud_tbl_validation_result/000000_0_copy_348 because destination exists

Cross checking HDFS, the file is in the Hive warehouse directory.

Stack/Settings:

HDP 2.2.4, Kerberos enabled, NN HA, Hive ACID disabled

Hive statements used in Oozie workflow:

INSERT INTO TABLE AUDIT.AUD_TBL_BATCH_RUN_LOG VALUES(${Batch_ID},"${Business_DT}", ...);

INSERT INTO TABLE AUDIT.AUD_TBL_VALIDATION_RESULT VALUES(${Batch_ID},"${Job_ID}","${Status}", ...);Hive DLL:

CREATE TABLE aud_tbl_batch_run_log (

AUD_Batch_ID BIGINT,

AUD_JOB_ID STRING,

... )

INTO 10 BUCKETS stored as orc TBLPROPERTIES ('transactional'='false');

CREATE TABLE aud_tbl_batch_validation_result (

AUD_Batch_ID BIGINT,

AUD_JOB_ID STRING,

AUD_STATUS STRING,

... )

INTO 10 BUCKETS stored as orc TBLPROPERTIES ('transactional'='false');We see this error occasionally for table aud_tbl_batch_run_log as well as aud_tbl_batch_validation_result.

Why does the file sometimes already exists? How does the insert into table internally works?

Any hints to solve this are highly appreciated.

Thank you & best regards, Chris

Created 05-03-2016 03:49 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It looks like you are executing one insert per row. I see you have 900byte files in HDFS. Most likely reason for your error is two workflows going in parallel trying to insert into the table.

Even if you get the insert flow right, 900 byte files in HDFS will create performance hit for hive and NN overload for HDFS. You should try to change your oozie workflow. You should consider microbatching or streaming in your data into HDFS/Hive.

You could write your audits to nfs share with shell action. Every few minutes you can load all your audits from that folder into HDFS. This is an example of microbatching strategy.

You can also try putting JMS messages and use storm-jms to direct them to HDFS. This is a streaming approach.

Created 05-03-2016 03:49 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It looks like you are executing one insert per row. I see you have 900byte files in HDFS. Most likely reason for your error is two workflows going in parallel trying to insert into the table.

Even if you get the insert flow right, 900 byte files in HDFS will create performance hit for hive and NN overload for HDFS. You should try to change your oozie workflow. You should consider microbatching or streaming in your data into HDFS/Hive.

You could write your audits to nfs share with shell action. Every few minutes you can load all your audits from that folder into HDFS. This is an example of microbatching strategy.

You can also try putting JMS messages and use storm-jms to direct them to HDFS. This is a streaming approach.

Created 05-03-2016 04:28 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Ravi Mutyala, Thank you for your response. It's even worser: In sum we have 8 Oozie workflows containing in total 500+ sub-workflows for loading source tables with a Sqoop action. Each sub-workflow contains the above mentioned Hive action for auditing. This creates for each loaded source table two INSERT INTO TABLE statements in Hive.

We hit the error when running those 8 workflows in parallel. Would a sequential execution of the workflows help in this case?

What do you exactly mean with microbatching?

Thanks, Chris

Created 05-03-2016 04:53 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You could write your audits to nfs share with shell action. Every few minutes you can load all your audits from that folder into HDFS. This is an example of microbatching strategy.

You can also try putting JMS messages and use storm-jms to direct them to HDFS. This is a streaming approach.

Created 05-20-2016 04:21 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Christian Guegi Do you see this issue only when running multiple worflows in parallel?

Created 05-31-2016 09:57 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, we see this issue only when running multiple Oozie worklflows in parallel.

Created 05-22-2016 10:52 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you please check if you are using KMS in those scenarios you can't copy the data from EZ to other EZ which gives the same kind of error ...To avoid this error use scratch directory which will resolve your issue.

Created 05-31-2016 09:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is no KMS used in those szenarios.

Created 07-05-2016 09:24 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Below our findings:

As shown in the DDL above, bucketing is used in the problematic tables. Bucket number gets decided according to hashing algorithm, out of 10 buckets for each insert 1 bucket will have actual data file and other 9 buckets will have same file name with zero size. During this hash calculation race condition is happening when inserting a new row into the bucketed table via multiple different threads/processes, due to which 2 or more threads/processes are trying to create the same bucket file.

In addition, as discussed here, the current architecture is not really recommended as over the period of time there would be millions of files on HDFS, which would create extra overhead on the Namenode. Also select * statement would take lot of time as it will have to merge all the files from bucket.

Solutions which solved both issues:

- Removed buckets from the two problematic tables, hece the probability of race conditions will be very less

- Added hive.support.concurrency=true before the insert statements

- Weekly Oozie workflow that uses implicit Hive concatenate command on both tables to mitigate the small file problem

FYI @Ravi Mutyala