Cloudera Community / Support Questions

Import data from remote server to HDFS

Labels: Apache Hadoop

Created 03-01-2018 08:56 AM


I have CSV data on a remote server and I need to import it into HDFS. Please suggest the options available for this task.

PS: Please include all the options.

Created 03-01-2018 09:20 AM


Hi @hema moger

There are a couple of options, listed below.

Hadoop provides several ways of accessing HDFS.

All of the following support almost all features of the file system:

1. FileSystem (FS) shell commands: provide easy access to Hadoop file system operations, as well as to other file systems that Hadoop supports, such as the local FS, HFTP FS and S3 FS.

This requires a Hadoop client to be installed, and the client writes each block directly to a DataNode (which forwards it along the replication pipeline). Not all versions of Hadoop support all options for copying between file systems.

2. WebHDFS: defines a public HTTP REST API that lets clients access HDFS from multiple languages without installing Hadoop. The advantage is that it is language agnostic (curl, PHP, etc.).

WebHDFS needs network access to all nodes of the cluster: when data is read, it is transmitted directly from the DataNode that holds it. There is some HTTP overhead compared with the FS shell (option 1), but it works independently of the client and has no problems across different Hadoop clusters and versions.

3. HttpFS: reads and writes data to HDFS in a cluster behind a firewall. A single node acts as a gateway through which all the data is transferred, so performance-wise I believe it can be even slower, but it is preferred when you need to pull data from a public source into a secured cluster.
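The three options above can be compared side by side as small shell functions. This is a minimal sketch, not a tested recipe: the host names `namenode.example.com` and `httpfs.example.com`, the `hdfs` user name and the function names are hypothetical, simple (non-Kerberos) authentication through the `user.name` parameter is assumed, and the ports are the usual defaults (50070 for the NameNode HTTP port in Hadoop 2.x, 9870 in Hadoop 3.x; 14000 for HttpFS).

```shell
#!/usr/bin/env bash
# Hypothetical endpoints and user; override via environment variables.
NAMENODE="${NAMENODE:-namenode.example.com}"
HTTPFS_HOST="${HTTPFS_HOST:-httpfs.example.com}"
HDFS_USER="${HDFS_USER:-hdfs}"

# WebHDFS and HttpFS expose the same /webhdfs/v1 REST API.
rest_url() {  # usage: rest_url host port hdfs_path op
  printf 'http://%s:%s/webhdfs/v1%s?op=%s&user.name=%s\n' "$1" "$2" "$3" "$4" "$HDFS_USER"
}

# 1. FS shell: needs a Hadoop client installed on this machine.
put_fs_shell() {  # usage: put_fs_shell local_file hdfs_dir
  hdfs dfs -put "$1" "$2"
}

# 2. WebHDFS: two-step create. The NameNode answers with a 307 redirect
#    whose Location header points at a DataNode; the data is sent there.
put_webhdfs() {  # usage: put_webhdfs local_file hdfs_path
  local location
  location=$(curl -s -i -X PUT "$(rest_url "$NAMENODE" 50070 "$2" CREATE)" \
    | awk 'tolower($1) == "location:" {print $2}' | tr -d '\r')
  [ -n "$location" ] || { echo "no redirect from NameNode" >&2; return 1; }
  curl -s -i -X PUT -T "$1" "$location"
}

# 3. HttpFS: everything goes through one gateway node; data=true plus an
#    octet-stream content type sends the bytes in a single request.
put_httpfs() {  # usage: put_httpfs local_file hdfs_path
  curl -s -i -X PUT -T "$1" -H 'Content-Type: application/octet-stream' \
    "$(rest_url "$HTTPFS_HOST" 14000 "$2" CREATE)&data=true"
}
```

For example, after sourcing the file, `put_webhdfs test.csv /user/hdfs/test.csv` uploads through WebHDFS. Only the first function needs Hadoop installed locally; the other two need nothing but `curl`, which is the language-agnostic advantage described above.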

Created 03-01-2018 09:27 AM


1. Is the remote server a Linux box or Windows? If it's the latter, you will need WinSCP to transfer the file to a Linux box.

2. If you set up your cluster according to the recommended architecture, you should have one or more edge nodes, master nodes and data nodes. Typically the edge node is used to receive the CSV file. You will need to ensure there is connectivity between your edge node and the remote Linux box where your CSV file is.

Assuming you have root access to both the remote server and the edge node, you can copy the CSV file to the edge node. It is better to set up a passwordless connection between the edge node and the remote Linux server.
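The passwordless connection mentioned above is usually done with an SSH key pair. A minimal sketch, assuming it runs on the edge node as the user that will run `scp` (the edge node pulls the file, so its public key goes to the remote server); `username` and `remote` are the same placeholders used in the commands below, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Placeholders for the remote CSV server (hypothetical).
REMOTE_USER="${REMOTE_USER:-username}"
REMOTE_HOST="${REMOTE_HOST:-remote}"

setup_passwordless_ssh() {
  mkdir -p -m 700 "$HOME/.ssh"
  # Generate a key pair without a passphrase, only if none exists yet.
  [ -f "$HOME/.ssh/id_rsa" ] || ssh-keygen -t rsa -b 4096 -N '' -f "$HOME/.ssh/id_rsa" || return 1
  # Append the public key to ~/.ssh/authorized_keys on the remote server
  # (this asks for the remote password one last time).
  ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "$REMOTE_USER@$REMOTE_HOST" || return 1
  # BatchMode=yes fails instead of prompting, so success proves key auth works.
  ssh -o BatchMode=yes "$REMOTE_USER@$REMOTE_HOST" true && echo "passwordless login OK"
}
```

After `setup_passwordless_ssh` succeeds, the `scp` commands below run without a password prompt, which also makes them usable from cron jobs.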

If you are on the computer from which you want to send the file to a remote computer:

```shell
scp /file/to/send username@remote:/where/to/put
```

Here, remote can be an FQDN or an IP address.

On the other hand, if you are on the computer that should receive the file from a remote computer:

```shell
scp username@remote:/file/to/send /where/to/put
```

Then, on the edge node, invoke the hdfs command. Assuming the CSV file is in /home/transfer/test.csv:

```shell
# As root, switch to the hdfs user, then upload the file
su - hdfs
hdfs dfs -put /home/transfer/test.csv /user/your_hdfs_directory
```

Validate that the hdfs command succeeded:

```shell
hdfs dfs -ls /user/your_hdfs_directory/
```

You should see your test.csv in the listing.
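The steps in this answer can be combined into one script run on the edge node. A minimal sketch: `username@remote`, `/home/transfer` and `/user/your_hdfs_directory` are the placeholders from the commands above, `/path/to/test.csv` and the function names are hypothetical, and it assumes the script runs as a user (such as `hdfs`) that can write to the target HDFS directory. Without passwordless SSH, `scp` will prompt for a password.

```shell
#!/usr/bin/env bash
# Placeholders (hypothetical); override via environment variables.
REMOTE="${REMOTE:-username@remote}"
REMOTE_FILE="${REMOTE_FILE:-/path/to/test.csv}"
STAGING_DIR="${STAGING_DIR:-/home/transfer}"
HDFS_DIR="${HDFS_DIR:-/user/your_hdfs_directory}"

# Full HDFS destination path for a file (tolerates a trailing slash on HDFS_DIR).
hdfs_target() { printf '%s/%s\n' "${HDFS_DIR%/}" "$(basename "$1")"; }

import_csv() {
  local local_file="$STAGING_DIR/$(basename "$REMOTE_FILE")"
  local target
  target=$(hdfs_target "$local_file")
  # 1. Pull the CSV from the remote server onto the edge node.
  scp "$REMOTE:$REMOTE_FILE" "$STAGING_DIR/" || return 1
  # 2. Create the target directory if needed; -f overwrites an existing file.
  hdfs dfs -mkdir -p "${HDFS_DIR%/}" || return 1
  hdfs dfs -put -f "$local_file" "$target" || return 1
  # 3. -test -e exits 0 only if the path now exists in HDFS.
  hdfs dfs -test -e "$target" && hdfs dfs -ls "$target"
}
```

Set the environment variables, `source` the file and call `import_csv`. Using `-test -e` makes the validation step scriptable instead of relying on reading the `-ls` output by eye.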

Created 03-01-2018 11:36 AM


@Geoffrey Shelton Okot: the remote server is Linux.

Created 03-01-2018 09:29 AM


Hi @hema moger

If your remote server doesn't belong to your cluster, you will have to bring the data onto one of the servers in your cluster and then use "hdfs dfs -put" to put the data into your cluster.

Otherwise, i.e. if the remote server belongs to the cluster, you just need to run "hadoop fs -put" on your CSV file.

Created 03-01-2018 06:50 PM


Great. If it's a Linux server, then create a passwordless login between the remote server and the edge node.

First, update your /etc/hosts so that the remote server is pingable from your edge node, then check the firewall rules and make sure you don't have a DENY rule blocking the connection.
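The /etc/hosts and firewall checks above can be sketched as follows, using the remote server from the walkthrough (GULU, 192.168.0.80) as the example. This is an assumption-laden sketch: it must run as root on the edge node, it assumes an iptables-based firewall (iptables has no DENY target, so blocking rules show up as REJECT or DROP; on firewalld systems `firewall-cmd --list-all` is the equivalent listing), and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Example values from the walkthrough; HOSTS_FILE is overridable for testing.
REMOTE_IP="${REMOTE_IP:-192.168.0.80}"
REMOTE_NAME="${REMOTE_NAME:-gulu}"
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

# Map the remote name to its IP, only if the name is not already present.
add_host_entry() {
  grep -qwF "$REMOTE_NAME" "$HOSTS_FILE" || printf '%s  %s\n' "$REMOTE_IP" "$REMOTE_NAME" >> "$HOSTS_FILE"
}

check_connectivity() {
  # Name resolution plus ICMP reachability.
  ping -c 3 "$REMOTE_NAME" || return 1
  # List blocking rules, if any (needs root).
  iptables -L -n | grep -E 'REJECT|DROP' || echo "no REJECT/DROP rules listed"
  # scp runs over SSH, so TCP port 22 must be open (bash /dev/tcp redirection).
  timeout 5 bash -c ": < /dev/tcp/$REMOTE_NAME/22" && echo "port 22 reachable"
}
```

Calling `add_host_entry` twice is harmless because of the `grep` guard, so it can live in a provisioning script.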

Here is the walkthrough:

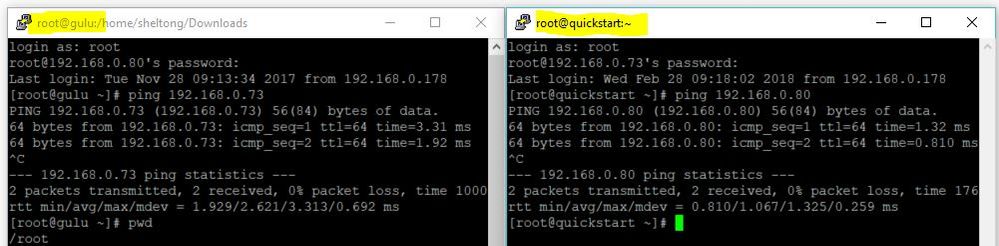

See attached pic1.jpg

In my case, I have a CentOS server, GULU, and a Cloudera QuickStart VM running in Oracle VM VirtualBox. Because they are on the same network, it's easy.

GULU (remote server):

I want to copy the file test.txt, which is located in /home/sheltong/Downloads:

```console
[root@gulu ~]# cd /home/sheltong/Downloads
[root@gulu Downloads]# ls
test.txt
```

Edge node or localhost:

```console
[root@quickstart home]# scp root@192.168.0.80:/home/sheltong/Downloads/test.txt .
The authenticity of host '192.168.0.80 (192.168.0.80)' can't be established.
RSA key fingerprint is 93:8a:6c:02:9d:1f:e1:b5:0a:05:68:06:3b:7d:a3:d3.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '192.168.0.80' (RSA) to the list of known hosts.
root@192.168.0.80's password: xxxxxremote_server_root_passwordxxx
test.txt                                      100%  136     0.1KB/s   00:00
```

Validate that the file was copied:

```console
[root@quickstart home]# ls
cloudera  test.txt
```
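`ls` only shows that the file exists. To also confirm it arrived intact, and to finish the walkthrough by loading it into HDFS, MD5 checksums can be compared at each hop. A minimal sketch using the walkthrough's values: the HDFS directory `/user/cloudera` (the QuickStart VM's user directory) is an assumption, the function names are hypothetical, the HDFS commands must run as a user allowed to write there (for example `cloudera`, not `root`), and `ssh` prompts for the password unless the passwordless login is set up.

```shell
#!/usr/bin/env bash
# Values from the walkthrough; HDFS_DIR is an assumption.
REMOTE="${REMOTE:-root@192.168.0.80}"
REMOTE_FILE="${REMOTE_FILE:-/home/sheltong/Downloads/test.txt}"
LOCAL_FILE="${LOCAL_FILE:-/home/test.txt}"
HDFS_DIR="${HDFS_DIR:-/user/cloudera}"

# Print only the MD5 hex digest of whatever arrives on stdin.
md5_of_stream() { md5sum | awk '{print $1}'; }

verify_and_import() {
  local remote_sum local_sum hdfs_sum
  # md5sum runs on the remote server; compare it with the edge-node copy.
  remote_sum=$(ssh "$REMOTE" md5sum "$REMOTE_FILE" | awk '{print $1}')
  local_sum=$(md5_of_stream < "$LOCAL_FILE")
  if [ "$remote_sum" != "$local_sum" ]; then
    echo "edge-node copy differs from the remote file" >&2
    return 1
  fi
  # Upload, then read the file back out of HDFS and checksum it again.
  hdfs dfs -put -f "$LOCAL_FILE" "$HDFS_DIR/" || return 1
  hdfs_sum=$(hdfs dfs -cat "$HDFS_DIR/$(basename "$LOCAL_FILE")" | md5_of_stream)
  [ "$hdfs_sum" = "$local_sum" ] && echo "checksums match: $local_sum"
}
```

Reading the file back with `hdfs dfs -cat` costs one extra pass over the data, which is cheap for a small CSV and gives an end-to-end check from the remote server to HDFS.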

There you are. I hope that helps.