Support Questions

- Cloudera Community

- Support

- Support Questions

- Importing database table from MYSQL to HIVE using ...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Importing database table from MYSQL to HIVE using SQOOP

- Labels:

-

Apache Sqoop

Created on

11-27-2019

11:19 PM

- last edited on

11-27-2019

11:36 PM

by

VidyaSargur

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Basically, I am trying to import a database table from MySQL to hive but it turns out not importing to hive instead it gives me the following error. can anyone please help me with a solution which would be very much appreciated.

[cloudera@quickstart ~]$ sqoop import --connect jdbc:mysql://localhost:3306/sqoop --username root --password cloudera --table customer --hive-import

Warning: /usr/lib/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

19/11/27 23:11:33 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.13.0

19/11/27 23:11:33 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

19/11/27 23:11:33 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override

19/11/27 23:11:33 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.

19/11/27 23:11:33 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

19/11/27 23:11:33 INFO tool.CodeGenTool: Beginning code generation

19/11/27 23:11:33 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `customer` AS t LIMIT 1

19/11/27 23:11:33 ERROR manager.SqlManager: Error reading from database: java.sql.SQLException: Streaming result set com.mysql.jdbc.RowDataDynamic@2bbfdaa4 is still active. No statements may be issued when any streaming result sets are open and in use on a given connection. Ensure that you have called .close() on any active streaming result sets before attempting more queries.

java.sql.SQLException: Streaming result set com.mysql.jdbc.RowDataDynamic@2bbfdaa4 is still active. No statements may be issued when any streaming result sets are open and in use on a given connection. Ensure that you have called .close() on any active streaming result sets before attempting more queries.

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:934)

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:931)

at com.mysql.jdbc.MysqlIO.checkForOutstandingStreamingData(MysqlIO.java:2735)

at com.mysql.jdbc.MysqlIO.sendCommand(MysqlIO.java:1899)

at com.mysql.jdbc.MysqlIO.sqlQueryDirect(MysqlIO.java:2151)

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2619)

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2569)

at com.mysql.jdbc.StatementImpl.executeQuery(StatementImpl.java:1524)

at com.mysql.jdbc.ConnectionImpl.getMaxBytesPerChar(ConnectionImpl.java:3003)

at com.mysql.jdbc.Field.getMaxBytesPerCharacter(Field.java:602)

at com.mysql.jdbc.ResultSetMetaData.getPrecision(ResultSetMetaData.java:445)

at org.apache.sqoop.manager.SqlManager.getColumnInfoForRawQuery(SqlManager.java:305)

at org.apache.sqoop.manager.SqlManager.getColumnTypesForRawQuery(SqlManager.java:260)

at org.apache.sqoop.manager.SqlManager.getColumnTypes(SqlManager.java:246)

at org.apache.sqoop.manager.ConnManager.getColumnTypes(ConnManager.java:327)

at org.apache.sqoop.orm.ClassWriter.getColumnTypes(ClassWriter.java:1858)

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1657)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:106)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:494)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:621)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

19/11/27 23:11:33 ERROR tool.ImportTool: Import failed: java.io.IOException: No columns to generate for ClassWriter

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1663)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:106)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:494)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:621)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

[cloudera@quickstart ~]$

Created 11-28-2019 12:18 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

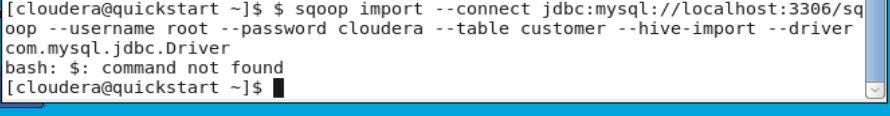

can you add the Mysql driver portion to your command

$ sqoop import --connect jdbc:mysql://localhost:3306/sqoop --username root --password cloudera --table customer --hive-import --driver com.mysql.jdbc.Driver

Please let me know

Created 11-28-2019 12:18 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

can you add the Mysql driver portion to your command

$ sqoop import --connect jdbc:mysql://localhost:3306/sqoop --username root --password cloudera --table customer --hive-import --driver com.mysql.jdbc.Driver

Please let me know

Created 11-28-2019 12:43 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Created 11-28-2019 01:28 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That's obvious because you have double $$ in the sqoop command you forgot to remove the one in the command I posted please do that and revert.

Thank you

Created on 11-28-2019 01:45 AM - edited 11-28-2019 01:45 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It works fine now with this command, I also had to add split-by id

sqoop import --connect jdbc:mysql://localhost:3306/sqoop --username root --password cloudera --split-by id --table customer --hive-import --driver com.mysql.jdbc.Driver

thank you so much

Created 09-01-2022 07:30 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

what worked for me is

just added --m 1