Cloudera Community: Support Questions

In NiFi, the ConvertAvroToORC processor is extremely slow

Labels: Apache NiFi

Created on 09-05-2017 07:58 PM - edited 08-18-2019 02:20 AM

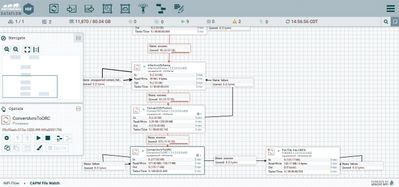

I'm taking raw pipe-delimited text files, converting them to Avro, and then converting them to ORC files (because ORC files are awesome). Everything is working swimmingly except the Avro-to-ORC conversion, which is extremely slow and is causing my processing to back up indefinitely.

Is there a better way to convert raw text into ORC files in NiFi, or some efficiency I can gain so the data flows through much faster?

Created 09-06-2017 11:42 AM

Hi @Aaron Dunlap,

Depending on the HDF version you are using, you could use the record-oriented processors to perform the CSV-to-Avro conversion much more efficiently. I assume you're then converting to ORC so you can query the data with Hive. If that's the case, a common pattern is to let Hive do the conversion. What I usually do is send the data as Avro into a landing folder in HDFS, then use a PutHiveQL processor to execute a few queries: one to create a temporary table on top of the Avro data using the corresponding Avro schema, one to INSERT ... SELECT the data from the temporary table into the final ORC table, and one to drop the temporary table. Finally, a DeleteHDFS processor deletes the data used to create the temporary table, because the DROP TABLE statement does not delete the data if you created the temporary table as an external table.
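As a rough sketch of the Hive-side pattern, the Python below builds the three HiveQL statements the PutHiveQL step would run. It is not the exact set of queries from this answer: it uses an ordinary `EXTERNAL` staging table in place of the temporary table, and the table names, HDFS paths, and schema URL are hypothetical placeholders. It also assumes the ORC target table already exists with columns matching the Avro schema. How you hand the statements to PutHiveQL (one FlowFile per statement, or several in one FlowFile) depends on your NiFi version.

```python
from typing import List


def build_avro_to_orc_statements(staging_table: str, orc_table: str,
                                 landing_dir: str, schema_url: str) -> List[str]:
    """Return HiveQL statements that make Hive rewrite landed Avro data as ORC.

    Hypothetical helper: all names are placeholders, and the ORC target
    table is assumed to exist already with columns matching the schema.
    """
    return [
        # Staging table over the Avro files in the landing folder; Hive
        # derives the columns from the .avsc file named in avro.schema.url.
        f"CREATE EXTERNAL TABLE {staging_table} "
        "STORED AS AVRO "
        f"LOCATION '{landing_dir}' "
        f"TBLPROPERTIES ('avro.schema.url'='{schema_url}')",
        # Hive writes ORC during this insert because the target table is ORC.
        f"INSERT INTO TABLE {orc_table} SELECT * FROM {staging_table}",
        # Dropping an EXTERNAL table leaves its files in HDFS, hence the
        # separate DeleteHDFS step in the flow.
        f"DROP TABLE {staging_table}",
    ]


if __name__ == "__main__":
    statements = build_avro_to_orc_statements(
        staging_table="staging_events_avro",
        orc_table="events_orc",
        landing_dir="/data/landing/events",
        schema_url="hdfs:///schemas/events.avsc",
    )
    # One statement per line, each terminated with ";".
    for stmt in statements:
        print(stmt + ";")
```

If several batches can run at once, you would probably want a unique staging table name per batch (for example, derived from a FlowFile attribute) so concurrent runs do not collide.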

There is an ORC reader/writer on the roadmap that will replace all of that (you'll be able to convert directly from CSV to ORC using record-oriented processors), but it's not ready yet.

Hope this helps.

Created 09-06-2017 11:45 AM

Another option, without modifying your current workflow, is to configure your ConvertAvroToORC processor to run multiple threads in parallel. To do that, increase the "Concurrent Tasks" value on the "Scheduling" tab of the processor configuration.
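The UI change above is all that's needed. If you prefer to script it, a minimal sketch using only the Python standard library might look like the following. It assumes an unsecured NiFi instance, the REST endpoint `/nifi-api/processors/{id}`, and the `concurrentlySchedulableTaskCount` field of the processor config; check these against the REST API documentation for your NiFi version. The base URL and processor ID are placeholders, and NiFi will likely reject the update unless the processor is stopped first.

```python
import json
import urllib.request


def build_concurrency_update(processor_id: str, revision_version: int,
                             tasks: int) -> dict:
    """Build the JSON body for a processor update that only changes
    the number of concurrent tasks."""
    if tasks < 1:
        raise ValueError("tasks must be at least 1")
    return {
        # NiFi uses the revision version for optimistic locking; it must
        # match the processor's current revision.
        "revision": {"version": revision_version},
        "component": {
            "id": processor_id,
            "config": {"concurrentlySchedulableTaskCount": tasks},
        },
    }


def set_concurrent_tasks(base_url: str, processor_id: str, tasks: int) -> dict:
    """Fetch the current revision, then PUT the new concurrency setting.

    Assumes no authentication (unsecured NiFi) and a stopped processor.
    """
    url = f"{base_url}/nifi-api/processors/{processor_id}"
    with urllib.request.urlopen(url) as resp:
        entity = json.load(resp)
    body = build_concurrency_update(
        processor_id, entity["revision"]["version"], tasks)
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Placeholder values: prints the payload only, no network call.
    print(json.dumps(build_concurrency_update("<processor-id>", 0, 4), indent=2))
```

Each extra concurrent task takes a thread from the flow-wide pool (Maximum Timer Driven Thread Count in the controller settings), so raising the value far beyond the available cores may stop paying off.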

Created 09-06-2017 05:51 PM

This made a *huge* difference. I'll accept the top-level answer, but parallel processing helped a lot in this case. The processor itself is still fairly slow, but that may be inherent in the work it's doing. I'm wondering whether moving the data into memory before processing would make any difference.

Thanks for the heads up though!

Created 01-28-2020 08:14 PM