Issue in nifi clustering

Labels: Apache NiFi, Cloudera DataFlow (CDF)

Created on 08-03-2016 10:12 AM - edited 08-18-2019 04:42 AM


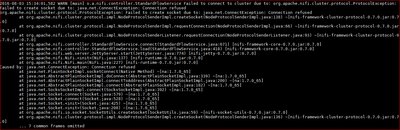

I am trying to set up 3 NiFi instances on a 3-node Hadoop cluster, one on each machine, but I am getting the error shown in the screenshot.

I get this error on node2. All three NiFi instances are up, but they are not connected. I don't see anything in the NCM under connected nodes.

I have a feeling that I am missing some important property.

However, when I set up these 3 instances on the same machine, it worked fine with the same set of configs.

Can anyone please help? Thanks in advance.

Ankit @mclark @PJ Moutrie @Pierre Villard

Created 08-03-2016 10:55 AM


When a NiFi instance is designated as a node, it starts sending out heartbeat messages after it is started. Those heartbeat messages contain important connection information for the node. Part of that message is the hostname of the connecting node. If the hostname is left blank, Java will try to determine it, and in many cases it ends up being "localhost". This may explain why the same configs worked when all instances were on the same machine. Make sure that all of the following properties have been set on every one of your nodes:

    # Site to Site properties
    nifi.remote.input.socket.host= <-- Set to the FQDN for the Node; must be resolvable by all other instances.
    nifi.remote.input.socket.port= <-- Set to unused port on Node.

    # web properties #
    nifi.web.http.host= <-- Set to resolvable FQDN for Node
    nifi.web.http.port= <-- Set to unused port on Node

    # cluster node properties (only configure for cluster nodes) #
    nifi.cluster.is.node=true
    nifi.cluster.node.address= <-- Set to resolvable FQDN for Node
    nifi.cluster.node.protocol.port= <-- Set to unused port on Node
    nifi.cluster.node.protocol.threads=2
    # if multicast is not used, nifi.cluster.node.unicast.xxx must have same values as nifi.cluster.manager.xxx #
    nifi.cluster.node.unicast.manager.address= <-- Set to the resolvable FQDN of your NCM
    nifi.cluster.node.unicast.manager.protocol.port= <-- Must be set to Manager protocol port assigned on your NCM.
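As a quick sanity check, a short script can flag any of these properties that are still blank on a node. This is only a sketch: `parse_properties`, `find_blank_properties`, the `example.com` hostnames, and the port numbers are all made up for illustration, and the parser handles only plain `key=value` lines, not the full Java properties syntax.

```python
# Hypothetical helper: report required nifi.properties keys that are missing or blank.
# The key list mirrors the node properties named in this answer.
REQUIRED_NODE_KEYS = [
    "nifi.remote.input.socket.host",
    "nifi.remote.input.socket.port",
    "nifi.web.http.host",
    "nifi.web.http.port",
    "nifi.cluster.node.address",
    "nifi.cluster.node.protocol.port",
    "nifi.cluster.node.unicast.manager.address",
    "nifi.cluster.node.unicast.manager.protocol.port",
]


def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def find_blank_properties(text, required=REQUIRED_NODE_KEYS):
    """Return the required keys that are absent or have an empty value."""
    props = parse_properties(text)
    return [key for key in required if not props.get(key)]


# Made-up sample in which the Site-to-Site host was left blank.
SAMPLE = """
# Site to Site properties
nifi.remote.input.socket.host=
nifi.remote.input.socket.port=10880
nifi.web.http.host=node2.example.com
nifi.web.http.port=8080
nifi.cluster.is.node=true
nifi.cluster.node.address=node2.example.com
nifi.cluster.node.protocol.port=10882
nifi.cluster.node.unicast.manager.address=ncm.example.com
nifi.cluster.node.unicast.manager.protocol.port=10881
"""

if __name__ == "__main__":
    print(find_blank_properties(SAMPLE))
```

To run it against a real node, read that node's `nifi.properties` file and pass its contents to `find_blank_properties`.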

Your NCM will need to be configured the same way as above for the Site-to-Site properties and Web properties, but instead of the "Cluster Node properties", you will need to fill out the "cluster manager properties":

    # cluster manager properties (only configure for cluster manager) #
    nifi.cluster.is.manager=true
    nifi.cluster.manager.address= <-- Set to resolvable FQDN for NCM
    nifi.cluster.manager.protocol.port= <-- Set to unused port on NCM.
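Because each node's unicast manager settings have to match the manager settings on the NCM, the two files can be compared directly. The following is a rough sketch under the same assumptions as the property names above; `unicast_mismatches`, the hostnames, and the ports are hypothetical.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


# (node-side key, NCM-side key) pairs that are expected to hold the same value.
PAIRS = [
    ("nifi.cluster.node.unicast.manager.address",
     "nifi.cluster.manager.address"),
    ("nifi.cluster.node.unicast.manager.protocol.port",
     "nifi.cluster.manager.protocol.port"),
]


def unicast_mismatches(node_text, ncm_text):
    """Return the key pairs where the node value is blank or differs from the NCM."""
    node = parse_properties(node_text)
    ncm = parse_properties(ncm_text)
    return [
        (node_key, ncm_key)
        for node_key, ncm_key in PAIRS
        if not node.get(node_key) or node.get(node_key) != ncm.get(ncm_key)
    ]


# Made-up samples: the node points at the wrong manager protocol port.
NODE_SAMPLE = """
nifi.cluster.node.unicast.manager.address=ncm.example.com
nifi.cluster.node.unicast.manager.protocol.port=10999
"""
NCM_SAMPLE = """
nifi.cluster.is.manager=true
nifi.cluster.manager.address=ncm.example.com
nifi.cluster.manager.protocol.port=10881
"""

if __name__ == "__main__":
    for node_key, ncm_key in unicast_mismatches(NODE_SAMPLE, NCM_SAMPLE):
        print(f"{node_key} does not match {ncm_key}")
```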

The most likely cause of your issue is not having the host/address fields populated or trying to use a port that is already in use on the server. If setting the above does not resolve your issue, try setting DEBUG for the cluster logging in the logback.xml on one of your nodes and the NCM to get more details:

<logger name="org.apache.nifi.cluster" level="DEBUG"/>
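The two likely causes mentioned above (a hostname that resolves to "localhost" and a port that is already in use) can also be checked from outside NiFi. Here is a minimal sketch using only the Python standard library. The function names are hypothetical, port 8080 is just an example value, and the hostname check uses the operating system's resolver, which may not behave exactly like the JVM that NiFi runs in.

```python
import ipaddress
import socket


def is_loopback_address(ip):
    """True if the IP string is a loopback address such as 127.0.0.1 or ::1."""
    return ipaddress.ip_address(ip).is_loopback


def resolves_to_loopback(hostname):
    """Resolve hostname (IPv4) and report whether it maps to a loopback address.

    Returns None when the name cannot be resolved at all.
    """
    try:
        ip = socket.gethostbyname(hostname)
    except OSError:
        return None
    return is_loopback_address(ip)


def is_port_free(port, host="0.0.0.0"):
    """Try to bind host:port; a failed bind suggests the port is taken or not permitted."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
        return True


if __name__ == "__main__":
    name = socket.getfqdn()
    print(name, "resolves to loopback:", resolves_to_loopback(name))
    print("port 8080 free:", is_port_free(8080))
```

Run the port check while NiFi is stopped, otherwise NiFi's own listeners will show up as ports in use.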

Created 08-03-2016 12:47 PM


Thanks @mclark, I was not setting these two properties:

- # Site to Site properties

- nifi.remote.input.socket.host= <-- Set to the FQDN for the Node; must be resolvable by all other instances.

- nifi.remote.input.socket.port= <-- Set to unused port on Node.

Now my NiFi cluster is up. Thanks for your help.

Created 08-03-2016 01:52 PM


+1 to mclark's solution.