Kerberos client configuration will not be updated because there is a process running

- Labels: Cloudera Manager

When I run "Deploy Kerberos Client Configuration", I get the following error:

1 host(s) have stale Kerberos client configuration but will not be updated because there is a process running. Consider stopping roles on these hosts to ensure that they are updated by this command:

Only one of my 8 hosts has this problem.

I have stopped all roles on this host, but I still get the error. How do I find which process it is complaining about? Any help would be much appreciated.

Created 03-07-2016 03:30 AM

> 1 host(s) have stale Kerberos client configuration but will not be updated because there is a process running.

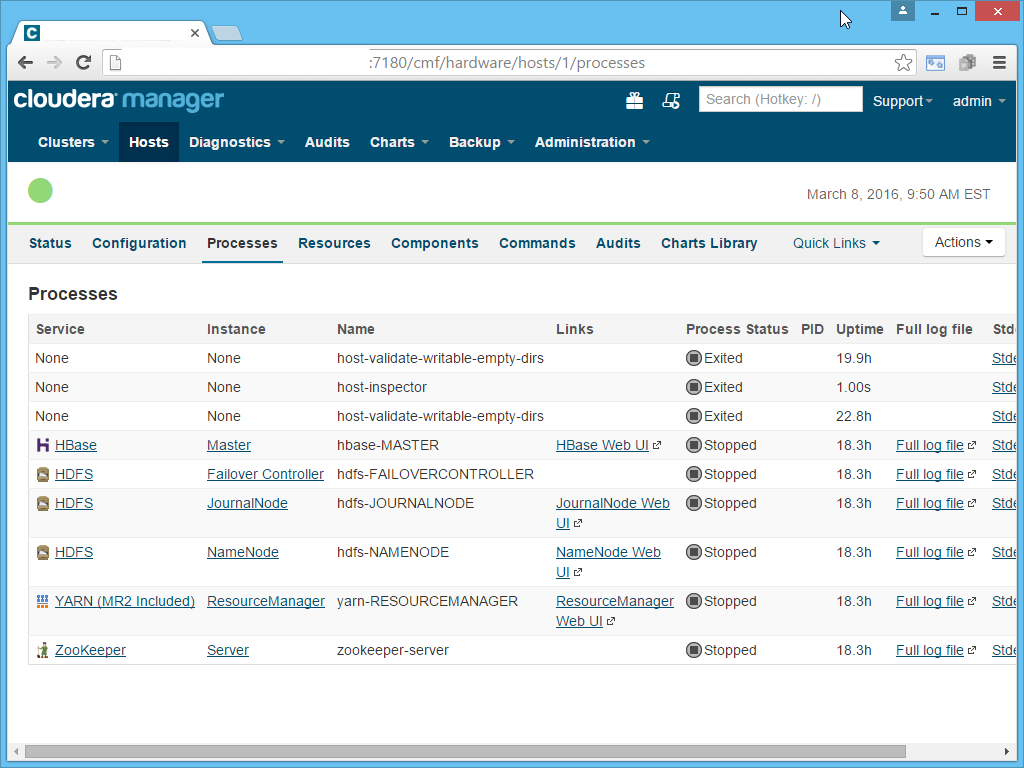

In the CM UI, go to Hosts, click the affected host, then open the Processes tab to see which processes are running on that host.

Alternatively, in a terminal on the affected host, list the child CDH role processes that supervisord is controlling [1].

Then stop the relevant role or process from Cloudera Manager.

Let me know if this helps.

[1] Option 1 (from a root shell):

for process in $(pgrep -P $(ps aux | grep [s]upervisord | awk '{print $2}')); do ps -p $process -o euser,pid,command | awk 'NR>1'; done

Option 2:

ps auxwf
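
For anyone who prefers a script to the one-liner in option 1, here is a minimal Python sketch of the same idea: read a process listing and keep only the direct children of supervisord. The function names are hypothetical. It assumes a Linux `ps` that accepts `-eo pid,ppid,user,args`, and that the supervisor's command line contains the string `supervisord`.

```python
import subprocess


def children_of_supervisord(ps_output):
    """Return (user, pid, command) for each direct child of supervisord.

    ps_output is the text printed by `ps -eo pid,ppid,user,args`.
    """
    rows = []
    for line in ps_output.splitlines()[1:]:  # first line is the column header
        parts = line.split(None, 3)  # pid, ppid, user, then the full command
        if len(parts) == 4 and parts[0].isdigit() and parts[1].isdigit():
            rows.append((int(parts[0]), int(parts[1]), parts[2], parts[3]))
    # Assumption: the supervisor is any process whose command mentions "supervisord".
    parents = {pid for pid, _ppid, _user, cmd in rows if "supervisord" in cmd}
    return [(user, pid, cmd) for pid, ppid, user, cmd in rows if ppid in parents]


def list_supervisord_children():
    """Run ps on the local host and return the supervisord children."""
    result = subprocess.run(
        ["ps", "-eo", "pid,ppid,user,args"],
        capture_output=True, text=True, check=True,
    )
    return children_of_supervisord(result.stdout)
```

Calling `list_supervisord_children()` as root on the affected host should report the same processes as option 1. If it returns nothing beyond the agent's own helper processes, no CDH role is likely to be running on that host.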

Created 03-07-2016 03:19 PM

Thanks for your reply Michalis.

I have stopped all roles on the host (see screenshot below):

Here is the output from option 1:

root 12080 /usr/lib64/cmf/agent/build/env/bin/python /usr/lib64/cmf/agent/src/cmf/supervisor_listener.py -l /var/log/cloudera-scm-agent/cmf_listener.log /var/run/cloudera-scm-agent/events

Here is the output from option 2:

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
root 2 0.0 0.0 0 0 ? S Mar07 0:00 [kthreadd]
root 3 0.0 0.0 0 0 ? S Mar07 0:00 \_ [migration/0]
root 4 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ksoftirqd/0]
root 5 0.0 0.0 0 0 ? S Mar07 0:00 \_ [stopper/0]
root 6 0.0 0.0 0 0 ? S Mar07 0:00 \_ [watchdog/0]
root 7 0.0 0.0 0 0 ? S Mar07 0:00 \_ [migration/1]
root 8 0.0 0.0 0 0 ? S Mar07 0:00 \_ [stopper/1]
root 9 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ksoftirqd/1]
root 10 0.0 0.0 0 0 ? S Mar07 0:00 \_ [watchdog/1]
root 11 0.0 0.0 0 0 ? S Mar07 0:00 \_ [migration/2]
root 12 0.0 0.0 0 0 ? S Mar07 0:00 \_ [stopper/2]
root 13 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ksoftirqd/2]
root 14 0.0 0.0 0 0 ? S Mar07 0:00 \_ [watchdog/2]
root 15 0.0 0.0 0 0 ? S Mar07 0:00 \_ [migration/3]
root 16 0.0 0.0 0 0 ? S Mar07 0:00 \_ [stopper/3]
root 17 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ksoftirqd/3]
root 18 0.0 0.0 0 0 ? S Mar07 0:00 \_ [watchdog/3]
root 19 0.0 0.0 0 0 ? S Mar07 0:03 \_ [events/0]
root 20 0.0 0.0 0 0 ? S Mar07 0:02 \_ [events/1]
root 21 0.0 0.0 0 0 ? S Mar07 0:03 \_ [events/2]
root 22 0.0 0.0 0 0 ? S Mar07 0:04 \_ [events/3]
root 23 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events/0]
root 24 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events/1]
root 25 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events/2]
root 26 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events/3]
root 27 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events_long/0]
root 28 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events_long/1]
root 29 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events_long/2]
root 30 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events_long/3]
root 31 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events_power_ef]
root 32 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events_power_ef]
root 33 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events_power_ef]
root 34 0.0 0.0 0 0 ? S Mar07 0:00 \_ [events_power_ef]
root 35 0.0 0.0 0 0 ? S Mar07 0:00 \_ [cgroup]
root 36 0.0 0.0 0 0 ? S Mar07 0:00 \_ [khelper]
root 37 0.0 0.0 0 0 ? S Mar07 0:00 \_ [netns]
root 38 0.0 0.0 0 0 ? S Mar07 0:00 \_ [async/mgr]
root 39 0.0 0.0 0 0 ? S Mar07 0:00 \_ [pm]
root 40 0.0 0.0 0 0 ? S Mar07 0:00 \_ [sync_supers]
root 41 0.0 0.0 0 0 ? S Mar07 0:00 \_ [bdi-default]
root 42 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kintegrityd/0]
root 43 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kintegrityd/1]
root 44 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kintegrityd/2]
root 45 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kintegrityd/3]
root 46 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kblockd/0]
root 47 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kblockd/1]
root 48 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kblockd/2]
root 49 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kblockd/3]
root 50 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kacpid]
root 51 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kacpi_notify]
root 52 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kacpi_hotplug]
root 53 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ata_aux]
root 54 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ata_sff/0]
root 55 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ata_sff/1]
root 56 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ata_sff/2]
root 57 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ata_sff/3]
root 58 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ksuspend_usbd]
root 59 0.0 0.0 0 0 ? S Mar07 0:00 \_ [khubd]
root 60 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kseriod]
root 61 0.0 0.0 0 0 ? S Mar07 0:00 \_ [md/0]
root 62 0.0 0.0 0 0 ? S Mar07 0:00 \_ [md/1]
root 63 0.0 0.0 0 0 ? S Mar07 0:00 \_ [md/2]
root 64 0.0 0.0 0 0 ? S Mar07 0:00 \_ [md/3]
root 65 0.0 0.0 0 0 ? S Mar07 0:00 \_ [md_misc/0]
root 66 0.0 0.0 0 0 ? S Mar07 0:00 \_ [md_misc/1]
root 67 0.0 0.0 0 0 ? S Mar07 0:00 \_ [md_misc/2]
root 68 0.0 0.0 0 0 ? S Mar07 0:00 \_ [md_misc/3]
root 69 0.0 0.0 0 0 ? S Mar07 0:00 \_ [linkwatch]
root 71 0.0 0.0 0 0 ? S Mar07 0:00 \_ [khungtaskd]
root 72 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kswapd0]
root 73 0.0 0.0 0 0 ? SN Mar07 0:00 \_ [ksmd]
root 74 0.0 0.0 0 0 ? SN Mar07 0:01 \_ [khugepaged]
root 75 0.0 0.0 0 0 ? S Mar07 0:00 \_ [aio/0]
root 76 0.0 0.0 0 0 ? S Mar07 0:00 \_ [aio/1]
root 77 0.0 0.0 0 0 ? S Mar07 0:00 \_ [aio/2]
root 78 0.0 0.0 0 0 ? S Mar07 0:00 \_ [aio/3]
root 79 0.0 0.0 0 0 ? S Mar07 0:00 \_ [crypto/0]
root 80 0.0 0.0 0 0 ? S Mar07 0:00 \_ [crypto/1]
root 81 0.0 0.0 0 0 ? S Mar07 0:00 \_ [crypto/2]
root 82 0.0 0.0 0 0 ? S Mar07 0:00 \_ [crypto/3]
root 89 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kthrotld/0]
root 90 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kthrotld/1]
root 91 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kthrotld/2]
root 92 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kthrotld/3]
root 93 0.0 0.0 0 0 ? S Mar07 0:00 \_ [pciehpd]
root 95 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kpsmoused]
root 96 0.0 0.0 0 0 ? S Mar07 0:00 \_ [usbhid_resumer]
root 97 0.0 0.0 0 0 ? S Mar07 0:00 \_ [deferwq]
root 130 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kdmremove]
root 131 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kstriped]
root 270 0.0 0.0 0 0 ? S Mar07 0:00 \_ [scsi_eh_0]
root 274 0.0 0.0 0 0 ? S Mar07 0:00 \_ [scsi_eh_1]
root 411 0.0 0.0 0 0 ? S Mar07 0:00 \_ [scsi_eh_2]
root 412 0.0 0.0 0 0 ? S Mar07 0:00 \_ [vmw_pvscsi_wq_2]
root 485 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kdmflush]
root 487 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kdmflush]
root 553 0.0 0.0 0 0 ? S Mar07 0:00 \_ [jbd2/dm-1-8]
root 554 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ext4-dio-unwrit]
root 879 0.0 0.0 0 0 ? S Mar07 0:01 \_ [vmmemctl]
root 1142 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kdmflush]
root 1144 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kdmflush]
root 1147 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kdmflush]
root 1166 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kdmflush]
root 1167 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kdmflush]
root 1169 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kdmflush]
root 1170 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kdmflush]
root 1250 0.0 0.0 0 0 ? S Mar07 0:00 \_ [jbd2/sda1-8]
root 1251 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ext4-dio-unwrit]
root 1252 0.0 0.0 0 0 ? S Mar07 0:00 \_ [jbd2/dm-5-8]
root 1253 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ext4-dio-unwrit]
root 1254 0.0 0.0 0 0 ? S Mar07 0:00 \_ [jbd2/dm-7-8]
root 1255 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ext4-dio-unwrit]
root 1256 0.0 0.0 0 0 ? S Mar07 0:01 \_ [jbd2/dm-8-8]
root 1257 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ext4-dio-unwrit]
root 1258 0.0 0.0 0 0 ? S Mar07 0:00 \_ [jbd2/dm-6-8]
root 1259 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ext4-dio-unwrit]
root 1260 0.0 0.0 0 0 ? S Mar07 0:00 \_ [jbd2/dm-2-8]
root 1261 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ext4-dio-unwrit]
root 1262 0.0 0.0 0 0 ? S Mar07 0:01 \_ [jbd2/dm-4-8]
root 1263 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ext4-dio-unwrit]
root 1264 0.0 0.0 0 0 ? S Mar07 0:00 \_ [jbd2/dm-3-8]
root 1265 0.0 0.0 0 0 ? S Mar07 0:00 \_ [ext4-dio-unwrit]
root 1307 0.0 0.0 0 0 ? S Mar07 0:00 \_ [kauditd]
root 1519 0.0 0.0 0 0 ? S Mar07 0:01 \_ [flush-253:6]
root 1520 0.0 0.0 0 0 ? S Mar07 0:00 \_ [flush-253:7]
root 1938 0.0 0.0 0 0 ? S Mar07 0:00 \_ [scsi_tgtd/0]
root 1939 0.0 0.0 0 0 ? S Mar07 0:00 \_ [scsi_tgtd/1]
root 1940 0.0 0.0 0 0 ? S Mar07 0:00 \_ [scsi_tgtd/2]
root 1941 0.0 0.0 0 0 ? S Mar07 0:00 \_ [scsi_tgtd/3]
root 1943 0.0 0.0 0 0 ? S Mar07 0:00 \_ [fc_exch_workque]
root 1944 0.0 0.0 0 0 ? S Mar07 0:00 \_ [fc_rport_eq]
root 1948 0.0 0.0 0 0 ? S Mar07 0:00 \_ [fcoe_work/0]
root 1949 0.0 0.0 0 0 ? S Mar07 0:00 \_ [fcoe_work/1]
root 1950 0.0 0.0 0 0 ? S Mar07 0:00 \_ [fcoe_work/2]
root 1951 0.0 0.0 0 0 ? S Mar07 0:00 \_ [fcoe_work/3]
root 1952 0.0 0.0 0 0 ? S< Mar07 0:00 \_ [fcoethread/0]
root 1953 0.0 0.0 0 0 ? S< Mar07 0:00 \_ [fcoethread/1]
root 1954 0.0 0.0 0 0 ? S< Mar07 0:00 \_ [fcoethread/2]
root 1955 0.0 0.0 0 0 ? S< Mar07 0:00 \_ [fcoethread/3]
root 1956 0.0 0.0 0 0 ? S Mar07 0:00 \_ [cnic_wq]
root 1957 0.0 0.0 0 0 ? S Mar07 0:00 \_ [bnx2fc]
root 1958 0.0 0.0 0 0 ? S< Mar07 0:00 \_ [bnx2fc_l2_threa]
root 1959 0.0 0.0 0 0 ? S< Mar07 0:00 \_ [bnx2fc_thread/0]
root 1960 0.0 0.0 0 0 ? S< Mar07 0:00 \_ [bnx2fc_thread/1]
root 1961 0.0 0.0 0 0 ? S< Mar07 0:00 \_ [bnx2fc_thread/2]
root 1962 0.0 0.0 0 0 ? S< Mar07 0:00 \_ [bnx2fc_thread/3]
root 9514 0.0 0.0 0 0 ? S Mar07 0:01 \_ [flush-253:8]
root 28118 0.0 0.0 0 0 ? S Mar07 0:00 \_ [flush-253:4]
root 28597 0.0 0.0 0 0 ? S Mar07 0:00 \_ [flush-253:3]
root 8637 0.0 0.0 0 0 ? S Mar07 0:00 \_ [flush-253:2]
root 16041 0.0 0.0 0 0 ? S 09:37 0:00 \_ [flush-253:1]
root 1 0.0 0.0 19364 1544 ? Ss Mar07 0:00 /sbin/init
root 650 0.0 0.0 11148 1252 ? S<s Mar07 0:00 /sbin/udevd -d
root 1171 0.0 0.0 11144 1248 ? S< Mar07 0:00 \_ /sbin/udevd -d
root 1942 0.0 0.0 11144 1236 ? S< Mar07 0:00 \_ /sbin/udevd -d
root 1683 0.0 0.0 179156 4452 ? S Mar07 1:10 /usr/sbin/vmtoolsd
root 1877 0.0 0.0 251196 1740 ? Sl Mar07 0:00 /sbin/rsyslogd -i /var/run/syslogd.pid -c 5
root 1892 0.0 0.0 18384 740 ? Ss Mar07 0:04 irqbalance --pid=/var/run/irqbalance.pid
rpc 1910 0.0 0.0 18976 932 ? Ss Mar07 0:00 rpcbind
root 1925 0.0 0.0 13584 756 ? Ss Mar07 0:06 lldpad -d
root 1966 0.0 0.0 8364 420 ? Ss Mar07 0:00 /usr/sbin/fcoemon --syslog
dbus 1981 0.0 0.0 21432 916 ? Ss Mar07 0:00 dbus-daemon --system
root 2020 0.0 0.0 125148 3936 ? Ss Mar07 0:00 perl -Sx /opt/soe/local/caper/bin/amtime.pl -b
root 2037 0.0 0.0 66224 1224 ? Ss Mar07 0:00 /usr/sbin/sshd
root 16534 0.0 0.0 99972 3964 ? Ss 09:40 0:00 \_ sshd: bdpadmin [priv]
bdpadmin 16536 0.0 0.0 99972 1880 ? S 09:40 0:00 \_ sshd: bdpadmin@pts/0
bdpadmin 16537 0.0 0.0 108340 1860 pts/0 Ss 09:40 0:00 \_ -bash
bdpadmin 16846 0.0 0.0 110352 1184 pts/0 R+ 09:42 0:00 \_ ps auxwf
root 2048 0.0 0.0 21716 940 ? Ss Mar07 0:00 xinetd -stayalive -pidfile /var/run/xinetd.pid
root 2086 0.0 0.0 180860 2416 ? Ss Mar07 0:00 /usr/sbin/abrtd
root 2096 0.0 0.0 178528 1860 ? Ss Mar07 0:00 abrt-dump-oops -d /var/spool/abrt -rwx /var/log/messages
root 2147 0.0 0.0 116892 1276 ? Ss Mar07 0:00 crond
root 9747 0.0 0.0 139780 1776 ? S 09:00 0:00 \_ CROND
root 9753 0.0 0.0 106096 1144 ? Ss 09:00 0:00 | \_ /bin/sh -c /opt/soe/local/bin/mpstat.pl 1200 3 >/var/log/sa/caper.log 2>&1
root 9756 0.0 0.0 125152 5524 ? S 09:00 0:00 | \_ perl -Sx /opt/soe/local/bin/mpstat.pl 1200 3
root 9783 0.0 0.0 4120 728 ? S 09:00 0:00 | \_ /usr/bin/mpstat -P ALL 1200 3
root 9748 0.0 0.0 139780 1776 ? S 09:00 0:00 \_ CROND
root 9751 0.0 0.0 106096 1140 ? Ss 09:00 0:00 \_ /bin/sh -c /opt/soe/local/bin/vmstat.pl 1200 3 >/var/log/sa/caper.log 2>&1
root 9754 0.0 0.0 125160 5524 ? S 09:00 0:00 \_ perl -Sx /opt/soe/local/bin/vmstat.pl 1200 3
root 9784 0.0 0.0 6260 640 ? S 09:00 0:00 \_ /usr/bin/vmstat 1200 4
root 2171 0.0 0.0 108340 612 ? Ss Mar07 0:00 /usr/bin/rhsmcertd
root 2194 0.0 0.0 11388 232 ? Sl Mar07 0:00 filebeat-god -r / -n -p /var/run/filebeat.pid -- /usr/bin/filebeat -c /etc/filebeat/filebeat.yml
root 2195 0.0 0.1 457308 20488 ? Sl Mar07 0:51 \_ /usr/bin/filebeat -c /etc/filebeat/filebeat.yml
root 2226 0.0 0.0 11388 224 ? Sl Mar07 0:00 topbeat-god -r / -n -p /var/run/topbeat.pid -- /usr/bin/topbeat -c /etc/topbeat/topbeat.yml
root 2227 0.0 0.1 324892 18448 ? Sl Mar07 0:44 \_ /usr/bin/topbeat -c /etc/topbeat/topbeat.yml
root 2255 0.0 0.0 9580 544 ? Ss Mar07 0:01 ./nimbus /opt/nimsoft
root 2257 0.0 0.0 12272 2180 ? S Mar07 0:03 \_ nimbus(controller)
root 2275 0.0 0.0 85520 1664 ? Sl Mar07 0:05 \_ nimbus(spooler)
root 2277 0.0 0.0 12256 1548 ? S Mar07 0:02 \_ nimbus(hdb)
root 2288 0.0 0.0 238160 2676 ? Sl Mar07 0:10 \_ nimbus(cdm)
root 2264 0.0 0.0 4064 548 tty1 Ss+ Mar07 0:00 /sbin/mingetty /dev/tty1
root 2266 0.0 0.0 4064 548 tty2 Ss+ Mar07 0:00 /sbin/mingetty /dev/tty2
root 2268 0.0 0.0 4064 548 tty3 Ss+ Mar07 0:00 /sbin/mingetty /dev/tty3
root 2270 0.0 0.0 4064 548 tty4 Ss+ Mar07 0:00 /sbin/mingetty /dev/tty4
root 2272 0.0 0.0 4064 552 tty5 Ss+ Mar07 0:00 /sbin/mingetty /dev/tty5
root 2274 0.0 0.0 4064 548 tty6 Ss+ Mar07 0:00 /sbin/mingetty /dev/tty6
root 12079 0.1 0.0 203048 13332 ? Ss Mar07 1:22 /usr/lib64/cmf/agent/src/cmf/../../build/env/bin/python /usr/lib64/cmf/agent/src/cmf/../../build/
root 12080 0.0 0.0 193776 11840 ? S Mar07 0:00 \_ /usr/lib64/cmf/agent/build/env/bin/python /usr/lib64/cmf/agent/src/cmf/supervisor_listener.py
root 17984 0.0 0.0 149164 1596 ? S Mar07 0:00 su -s /bin/bash -c nohup /usr/sbin/cmf-agent
root 17986 1.0 0.3 2316492 62988 ? Ssl Mar07 12:21 \_ /usr/lib64/cmf/agent/build/env/bin/python /usr/lib64/cmf/agent/src/cmf/agent.py --package_dir
ntp 10770 0.0 0.0 26512 1956 ? Ss Mar07 0:00 ntpd -u ntp:ntp -p /var/run/ntpd.pid -g

Please advise next steps.

Created 03-07-2016 05:17 PM

Can you try the following workaround?

1. Stop the cluster: Cloudera Manager > Cluster > Stop

2. Stop the Cloudera Management Service: Cloudera Manager > Management Service > Stop

3. Deploy Kerberos Client Configuration: Cloudera Manager > Cluster > Deploy Kerberos Client Configuration

4. Start the Cloudera Management Service: Cloudera Manager > Management Service > Start

5. Start the cluster: Cloudera Manager > Cluster > Start

Let me know if this helps.

Created 03-07-2016 08:49 PM

1. Stop the cluster: Cloudera Manager > Cluster > Stop

Done; everything stopped successfully.

2. Stop Cloudera Management Service: Cloudera Manager > Management Service > Stop

Done; everything stopped successfully.

3. Deploy Kerberos Client Configuration: Cloudera Manager > Cluster > Deploy Kerberos Client Configuration

I attempted this, but this is where I get the error saying that a process is still running on one of the servers.

Created 03-14-2016 02:04 AM

I still don't have a solution to this problem.

Created 03-15-2016 07:40 AM

So far we have confirmed that nothing is actually running on the node, and the simple workaround did not help.

Let's do it the hard way 😉

Per your description:

> 1 host(s) have stale Kerberos client configuration but will not be updated because there is a process running. Consider stopping roles on these hosts to ensure that they are updated by this command:

Can you check the _roles_ table in your CM database and list each role's _configured_status_? If a role is still marked as running, we could set it to STOPPED and see whether you can then deploy the Kerberos client configuration.

That is, log in to your Cloudera Manager database and execute the SQL query below:

select name, configured_status from roles;

Can you paste the output so I can check which role is RUNNING?
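
Rather than reading every row, the query can be narrowed to roles whose status is neither STOPPED nor NA. The sketch below wraps that in a small Python helper. It assumes a DB-API connection to the CM database (for example, one opened with psycopg2) and uses only the two `roles` columns from the query above. The function names and the sample role names are hypothetical, and the sqlite3 table is only a stand-in so the example runs on its own.

```python
import sqlite3


def roles_not_stopped(conn):
    """Return (name, configured_status) for roles that are not STOPPED or NA."""
    cur = conn.cursor()
    cur.execute(
        "SELECT name, configured_status FROM roles "
        "WHERE configured_status NOT IN ('STOPPED', 'NA') "
        "ORDER BY name"
    )
    return cur.fetchall()


def demo_roles_db():
    """Build an in-memory stand-in for the roles table (illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE roles (name TEXT, configured_status TEXT)")
    conn.executemany(
        "INSERT INTO roles VALUES (?, ?)",
        [
            ("hdfs-DATANODE-example", "STOPPED"),
            ("hive-GATEWAY-example", "NA"),
            ("yarn-NODEMANAGER-example", "RUNNING"),
        ],
    )
    conn.commit()
    return conn
```

An empty result from `roles_not_stopped` would mean that no role is recorded as running, so the stale flag would have to be stored somewhere other than `roles`.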

Created 03-15-2016 03:24 PM

Thanks Michalis, here is the output:

scm=> select name, configured_status from roles;

| name | configured_status |
| --- | --- |
| yarn-NODEMANAGER-81a55c9ca4fbd9c9374636c398a13383 | STOPPED |
| hbase-HBASERESTSERVER-085be415c1b106027e55c36376d524f9 | STOPPED |
| hbase-HBASETHRIFTSERVER-085be415c1b106027e55c36376d524f9 | STOPPED |
| mgmt-HOSTMONITOR-f21c6cbb6cdac5606715a5cf46309c28 | STOPPED |
| hdfs-DATANODE-5b7bea319444e550909d079365f4ed9b | STOPPED |
| hdfs-SECONDARYNAMENODE-0c6abc1ce520275dade64829a7d84cd9 | STOPPED |
| impala-IMPALAD-5b7bea319444e550909d079365f4ed9b | STOPPED |
| impala-STATESTORE-0c6abc1ce520275dade64829a7d84cd9 | STOPPED |
| hive-GATEWAY-085be415c1b106027e55c36376d524f9 | NA |
| hdfs-GATEWAY-085be415c1b106027e55c36376d524f9 | NA |
| yarn-JOBHISTORY-0c6abc1ce520275dade64829a7d84cd9 | STOPPED |
| mgmt-ALERTPUBLISHER-f21c6cbb6cdac5606715a5cf46309c28 | STOPPED |
| mgmt-NAVIGATOR-f21c6cbb6cdac5606715a5cf46309c28 | STOPPED |
| mgmt-EVENTSERVER-f21c6cbb6cdac5606715a5cf46309c28 | STOPPED |
| hdfs-BALANCER-0c6abc1ce520275dade64829a7d84cd9 | NA |
| hdfs-DATANODE-81a55c9ca4fbd9c9374636c398a13383 | STOPPED |
| hue-HUE_SERVER-0448f5d888968211b3f80c15282a73c5 | STOPPED |
| hdfs-NAMENODE-01c06bb8c514524684eaf2cdf1f33b30 | STOPPED |
| zookeeper-SERVER-01c06bb8c514524684eaf2cdf1f33b30 | STOPPED |
| impala-IMPALAD-04a695b93dcaac72e6cda936f0e70c14 | STOPPED |
| impala-IMPALAD-81a55c9ca4fbd9c9374636c398a13383 | STOPPED |
| yarn-RESOURCEMANAGER-01c06bb8c514524684eaf2cdf1f33b30 | STOPPED |
| sqoop_client-GATEWAY-085be415c1b106027e55c36376d524f9 | NA |
| spark_on_yarn-GATEWAY-085be415c1b106027e55c36376d524f9 | NA |
| spar40365358-SPARK_YARN_HISTORY_SERVER-0c6abc1ce520275dade64829a | STOPPED |
| hbase-MASTER-01c06bb8c514524684eaf2cdf1f33b30 | STOPPED |
| hbase-MASTER-0c6abc1ce520275dade64829a7d84cd9 | STOPPED |
| hdfs-DATANODE-04a695b93dcaac72e6cda936f0e70c14 | STOPPED |
| mgmt-SERVICEMONITOR-f21c6cbb6cdac5606715a5cf46309c28 | STOPPED |
| mgmt-NAVIGATORMETASERVER-f21c6cbb6cdac5606715a5cf46309c28 | STOPPED |
| impala-CATALOGSERVER-0c6abc1ce520275dade64829a7d84cd9 | STOPPED |
| zookeeper-SERVER-0c6abc1ce520275dade64829a7d84cd9 | STOPPED |
| kms-KMS-f21c6cbb6cdac5606715a5cf46309c28 | STOPPED |
| hdfs-NFSGATEWAY-085be415c1b106027e55c36376d524f9 | STOPPED |
| hbase-REGIONSERVER-5b7bea319444e550909d079365f4ed9b | STOPPED |
| hdfs-HTTPFS-085be415c1b106027e55c36376d524f9 | STOPPED |
| zookeeper-SERVER-0448f5d888968211b3f80c15282a73c5 | STOPPED |
| mgmt-ACTIVITYMONITOR-f21c6cbb6cdac5606715a5cf46309c28 | STOPPED |
| hbase-REGIONSERVER-04a695b93dcaac72e6cda936f0e70c14 | STOPPED |
| hbase-REGIONSERVER-81a55c9ca4fbd9c9374636c398a13383 | STOPPED |
| hive-WEBHCAT-0448f5d888968211b3f80c15282a73c5 | STOPPED |
| hive-HIVEMETASTORE-0448f5d888968211b3f80c15282a73c5 | STOPPED |
| mgmt-REPORTSMANAGER-f21c6cbb6cdac5606715a5cf46309c28 | STOPPED |
| hive-HIVESERVER2-0448f5d888968211b3f80c15282a73c5 | STOPPED |
| oozie-OOZIE_SERVER-0448f5d888968211b3f80c15282a73c5 | STOPPED |
| sentry-SENTRY_SERVER-0448f5d888968211b3f80c15282a73c5 | STOPPED |
| hue-KT_RENEWER-0448f5d888968211b3f80c15282a73c5 | STOPPED |
| yarn-NODEMANAGER-5b7bea319444e550909d079365f4ed9b | STOPPED |
| yarn-NODEMANAGER-04a695b93dcaac72e6cda936f0e70c14 | STOPPED |

(49 rows)

Unfortunately, nothing is showing as RUNNING.

Created 03-20-2016 09:02 PM

Michalis continued to assist me further on this issue via private messages, so for the benefit of others, I will provide an update on the solution.

The solution was to find the record in the "processes" table of the Cloudera Manager database that was still flagged as running and set its running column to false.

The following commands were used:

scm=> select * from processes where running = true;

scm=> update processes set running = False where process_id = 122;

After that, I was able to deploy the Kerberos client configuration successfully on the impacted node.

Michalis has referred this issue to the appropriate Cloudera team for further investigation.
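
For anyone scripting the same fix, here is a minimal Python sketch of the two steps: list the rows flagged as running, then clear the flag for one specific process_id. It assumes a DB-API connection to the CM database; only the `processes` table and its `process_id` and `running` columns come from the commands above. The function names are hypothetical, and the in-memory sqlite3 table is a stand-in so the example runs without a real database. The real table has more columns.

```python
import sqlite3


def running_processes(conn, placeholder="?"):
    """Return the process_id of every row still flagged as running."""
    cur = conn.cursor()
    cur.execute(
        f"SELECT process_id FROM processes WHERE running = {placeholder} "
        "ORDER BY process_id",
        (True,),
    )
    return [row[0] for row in cur.fetchall()]


def clear_running_flag(conn, process_id, placeholder="?"):
    """Set running to false for one process_id and return the rows changed.

    placeholder is the driver's parameter marker: "?" for sqlite3,
    "%s" for psycopg2. The change is committed only if exactly one row
    was updated; otherwise it is rolled back.
    """
    cur = conn.cursor()
    cur.execute(
        f"UPDATE processes SET running = {placeholder} "
        f"WHERE process_id = {placeholder} AND running = {placeholder}",
        (False, process_id, True),
    )
    changed = cur.rowcount
    if changed == 1:
        conn.commit()
    else:
        conn.rollback()
    return changed


def demo_processes_db():
    """Build an in-memory stand-in with one stale row (illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE processes (process_id INTEGER PRIMARY KEY, running BOOLEAN)"
    )
    conn.executemany(
        "INSERT INTO processes VALUES (?, ?)", [(121, False), (122, True)]
    )
    conn.commit()
    return conn
```

Restricting the update to a single process_id that is still flagged as running keeps the change as narrow as the manual command. Taking a backup of the CM database before editing it by hand is a sensible precaution.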

Created 08-02-2019 05:24 AM

Hi Friends,

I had the same issue on my cluster.

I followed these steps:

1. In the CM UI, go to Hosts, click the affected host, and open the Processes tab to see which processes are running on that host.

2. Stop all running processes on that host.

3. Run Deploy Kerberos Client Configuration again; it should now deploy to the affected host.

These steps resolved the issue on my cluster.