ListSFTP and FetchFTP duplicate files generated

Labels: Apache NiFi

Created on 11-14-2019 09:55 AM - last edited on 11-14-2019 03:04 PM by cjervis


Hi,

We have a process that generates text files on an FTP server. The process writes each file in multiple passes, and generating the files can take anywhere from a little over a minute to a few minutes.

In this case, NiFi's ListSFTP lists the same file multiple times because we are using the Tracking Timestamps listing strategy, and the file's timestamp is updated continuously during the generation process.

We don't have control over the existing process that generates the files, and it has no notification mechanism in place to tell us when file generation is complete.

What would be the best practice for handling this scenario in a ListSFTP + FetchSFTP flow, to avoid generating duplicate files in the target system?

Please advise.

Thank you

Created 11-14-2019 10:11 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The ListSFTP processor's only mechanism for ignoring files in the listing directory are those marked as hidden (start with . on linux based systems). The dot rename of file transfer is pretty common with SFTP.

Now if the files are being streamed into the SFTP server by another processes that does not use some form of dot rename or filename change, you would need the new feature added to listSFTP as part of https://issues.apache.org/jira/browse/NIFI-5977

This new feature is part of Apache NiFi 1.10 which adds a couple new configuration properties to the listSFTP processor. Minimum File Age is what you would need to use. Only files where the last update time is older than this configured value would be listed.

Hope this helps,

Matt

Created 11-14-2019 01:52 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The WAIT processor requires a release signal that is typically created using the NOTIFY processor.

So that really will not help here.

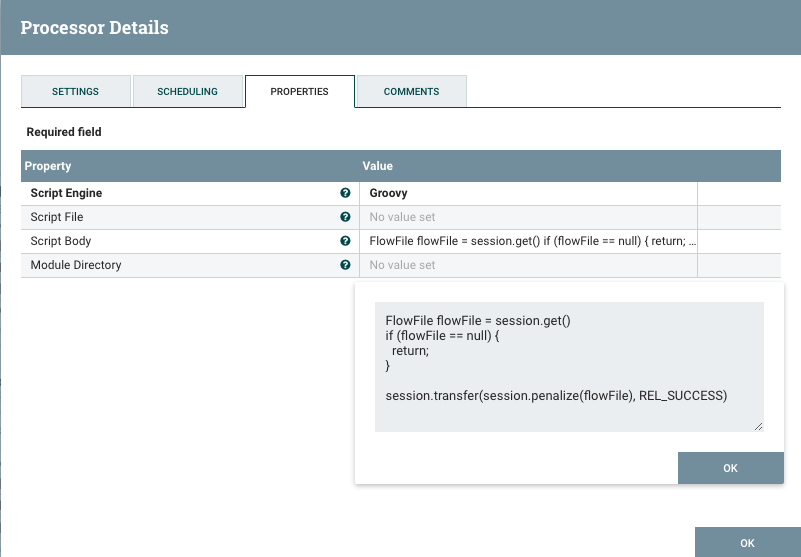

Perhaps you could try setting a penalty on each FlowFile. Penalized FlowFiles are not processed by the follow on processor until the penalty duration has ended. This can be done using the ExecuteScript processor after listSFTP:

You then set length of "Penalty Duration" via the settings tab. Set penalty high enough to ensure the file writes have completed. Of course this does introduce some latency.

What this will not help with is listSFTP still listing the same files multiple times. As data is written, the timestamp written on that source FlowFile updates, which means it will get listed again as if it is a new file.

but the delay here allows full data to be written and then perhaps you can use a detectDuplicate processor to remove duplicates based on filename before you actually fetch the content.

just some thoughts here, but that JIra is probably best path....

Matt

Created 11-14-2019 10:11 AM


The ListSFTP processor's only mechanism for ignoring files in the listing directory is to skip files marked as hidden (names starting with "." on Linux-based systems). Writing a file under a dot-prefixed name and renaming it once the transfer completes (a "dot rename") is a pretty common pattern with SFTP.
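To illustrate why a dot rename keeps in-progress files out of a listing, here is a minimal local-filesystem sketch (plain files, not SFTP, and not NiFi code). A hypothetical writer creates the file under a dot-prefixed name and renames it only after the last write, and a hypothetical lister skips hidden names, so a partially written file is never listed. The function names `write_with_dot_rename` and `list_visible` are assumptions made for illustration.

```python
import os
import tempfile


def write_with_dot_rename(directory: str, name: str, chunks: list[str]) -> str:
    """Write `chunks` to `directory/name` via a hidden temporary name.

    The file exists only as `.name` while it is being written and is renamed
    to its final name after the last chunk, so a lister that skips hidden
    files never sees it half-written.
    """
    hidden_path = os.path.join(directory, "." + name)
    final_path = os.path.join(directory, name)
    with open(hidden_path, "w") as f:
        for chunk in chunks:  # multiple write passes, like the generating process
            f.write(chunk)
    os.replace(hidden_path, final_path)  # rename only once writing is complete
    return final_path


def list_visible(directory: str) -> list[str]:
    """List regular files, ignoring hidden (dot-prefixed) names."""
    return sorted(
        entry.name
        for entry in os.scandir(directory)
        if entry.is_file() and not entry.name.startswith(".")
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        # Simulate an in-progress upload: only the hidden file exists.
        open(os.path.join(d, ".report.txt"), "w").close()
        print(list_visible(d))  # []
        os.remove(os.path.join(d, ".report.txt"))
        write_with_dot_rename(d, "report.txt", ["pass 1\n", "pass 2\n"])
        print(list_visible(d))  # ['report.txt']
```

The point is that the final filename only appears once the content is complete; when the producer does not do this, the lister has nothing by name to go on.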

If the files are being streamed onto the SFTP server by another process that does not use some form of dot rename or filename change, you would need the new feature added to ListSFTP as part of https://issues.apache.org/jira/browse/NIFI-5977

This feature is part of Apache NiFi 1.10, which adds a couple of new configuration properties to the ListSFTP processor. Minimum File Age is the one you would need: only files whose last modification time is older than this configured value are listed.
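The effect of Minimum File Age can be modelled with a deliberately simplified sketch. It assumes a lister that only remembers the newest modification time it has already emitted (a rough approximation of timestamp tracking, not NiFi's actual implementation) and applies a minimum-age filter before emitting. The names `Lister`, `poll`, and `simulate`, the 30-second polling interval, and the write times are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Lister:
    """Simplified timestamp-tracking lister (not NiFi's real algorithm).

    A file is emitted when its modification time is newer than the newest
    modification time emitted so far. With min_file_age > 0, files modified
    within the last `min_file_age` seconds are skipped on that poll.
    """
    min_file_age: float = 0.0
    last_emitted_mtime: float = float("-inf")

    def poll(self, files: dict[str, float], now: float) -> list[str]:
        # `files` maps filename -> last modification time (seconds).
        eligible = {
            name: mtime
            for name, mtime in files.items()
            if mtime > self.last_emitted_mtime
            and now - mtime >= self.min_file_age
        }
        if eligible:
            self.last_emitted_mtime = max(eligible.values())
        return sorted(eligible)


def simulate(min_file_age: float) -> list[str]:
    """Poll every 30 s while a file is rewritten at t=0, 40 and 80 (done at 80)."""
    lister = Lister(min_file_age=min_file_age)
    emitted = []
    for now in range(0, 241, 30):
        # The writer touches the file on each pass, so its mtime is the
        # latest pass that has happened by `now`.
        mtime = max(t for t in (0, 40, 80) if t <= now)
        emitted += lister.poll({"file1.txt": mtime}, now)
    return emitted


if __name__ == "__main__":
    print(simulate(0))    # ['file1.txt', 'file1.txt', 'file1.txt']
    print(simulate(120))  # ['file1.txt']
```

Without a minimum age, the growing file is emitted once per write pass the lister happens to observe; with a 120-second minimum age it is emitted once, after the last write has aged past the threshold.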

Hope this helps,

Matt

Created 11-14-2019 12:09 PM


Thank you @MattWho. In case we are not able to upgrade to 1.10 or backport the feature to our current version, would the Wait processor be an acceptable direction to pursue?

Created 11-14-2019 01:52 PM


The Wait processor requires a release signal, which is typically created using the Notify processor. Since the process generating your files provides no completion signal, that really will not help here.

Perhaps you could try penalizing each FlowFile. A penalized FlowFile is not processed by the follow-on processor until its penalty duration has ended. This can be done with an ExecuteScript processor placed after ListSFTP.

You then set the length of the penalty with "Penalty Duration" on the processor's Settings tab. Set it high enough to ensure the file writes have completed; of course, this does introduce some latency.

What this will not prevent is ListSFTP listing the same file multiple times. As data is written, the last-modified timestamp of the source file updates, which means it gets listed again as if it were a new file.
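A minimal ExecuteScript body for the penalty approach might look like the following, assuming the Script Engine property is set to python (Jython, so the code sticks to Python 2 compatible syntax). `session` and `REL_SUCCESS` are bindings that ExecuteScript provides to the script; `penalize_and_forward` is a hypothetical helper name, written as a function so the logic can also be exercised outside NiFi. The delay itself comes from the Penalty Duration on the Settings tab, not from the script.

```python
def penalize_and_forward(session, rel_success):
    """Penalize one incoming FlowFile and route it to `rel_success`.

    The downstream processor will not pick the FlowFile up until the
    Penalty Duration configured on this processor's Settings tab expires.
    Returns True if a FlowFile was handled, False if the queue was empty.
    """
    flow_file = session.get()
    if flow_file is None:
        return False  # nothing queued on this trigger
    flow_file = session.penalize(flow_file)  # returns the penalized FlowFile
    session.transfer(flow_file, rel_success)
    return True


# In the ExecuteScript "Script Body", follow the definition with:
#     penalize_and_forward(session, REL_SUCCESS)
```

Keeping the script to a single get/penalize/transfer per trigger leaves all timing in NiFi's own penalty mechanism, so the delay can be tuned without editing code.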

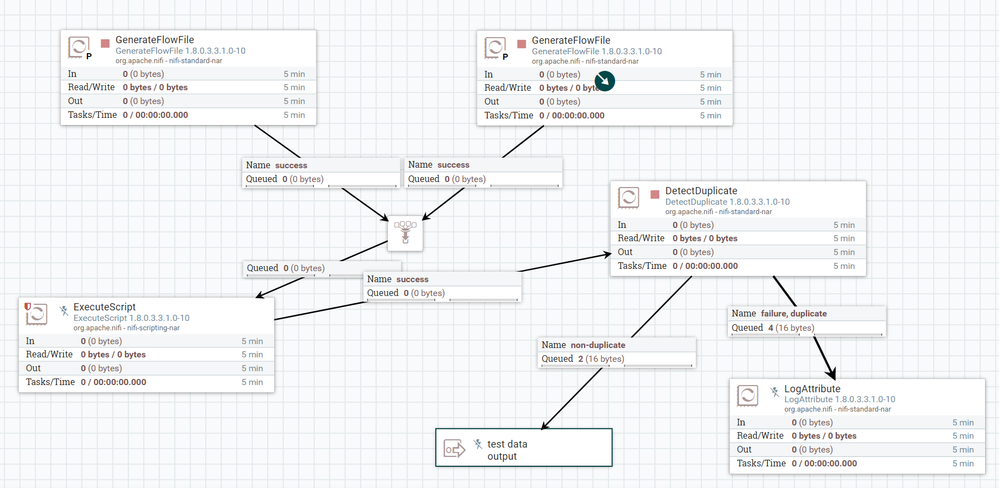

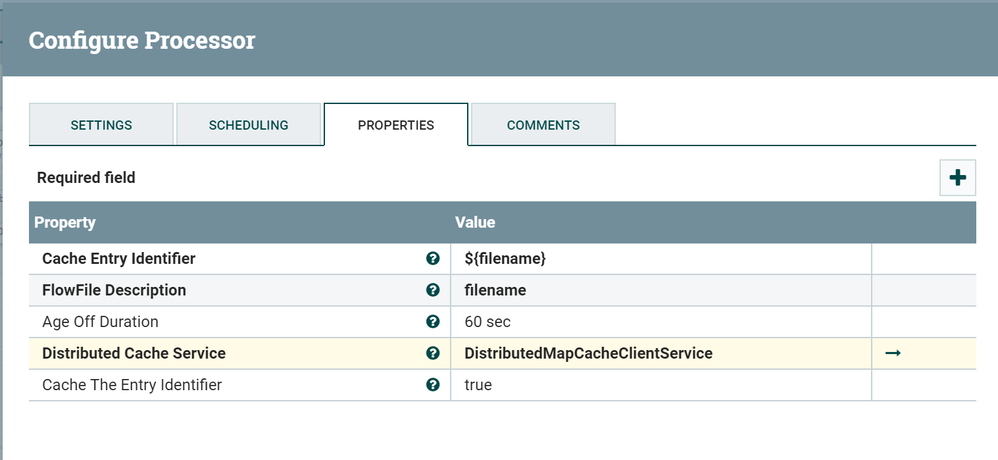

But the delay does allow the full data to be written, and you could then use a DetectDuplicate processor to remove duplicates based on filename before you actually fetch the content.

Just some thoughts here, but that Jira is probably the best path.
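To make "remove duplicates based on filename" concrete, here is a standalone model of the idea (not DetectDuplicate's actual code): a cache keyed by an identifier, here the filename, as you might get by setting DetectDuplicate's Cache Entry Identifier to `${filename}`. The first FlowFile for a key passes; later ones within an age-off window are treated as duplicates. `FilenameDeduplicator`, its `age_off` parameter, and the timings below are assumptions for illustration.

```python
from typing import Optional


class FilenameDeduplicator:
    """Let the first FlowFile per filename through; flag repeats.

    Each filename is tracked independently. Entries older than `age_off`
    seconds are forgotten, so the same name can pass again later
    (similar in spirit to an age-off setting on the cache).
    """

    def __init__(self, age_off: Optional[float] = None):
        self.age_off = age_off
        self._first_seen: dict[str, float] = {}

    def is_duplicate(self, filename: str, now: float) -> bool:
        seen_at = self._first_seen.get(filename)
        if seen_at is not None and (
            self.age_off is None or now - seen_at < self.age_off
        ):
            return True  # filename already cached and not yet aged off
        self._first_seen[filename] = now  # cache this filename as new
        return False


if __name__ == "__main__":
    dedupe = FilenameDeduplicator(age_off=600)
    # Repeated listings of two files written at the same time, as (time, name).
    listings = [(0, "file1.txt"), (5, "file2.txt"), (60, "file1.txt"),
                (65, "file2.txt"), (120, "file1.txt")]
    kept = [name for t, name in listings if not dedupe.is_duplicate(name, t)]
    print(kept)  # ['file1.txt', 'file2.txt']
```

Because the penalty holds each FlowFile until the writes are done, keeping only the first listing per filename still fetches the complete file.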

Matt

Created on 11-18-2019 11:39 AM - edited 11-18-2019 11:57 AM


Hi @MattWho

We are working with Cloudera to get this feature backported to our current release, as we are unable to upgrade HDF to a version that includes it.

Meanwhile, I am trying to implement the same approach using ExecuteScript (to penalize each FlowFile) and then eliminate the duplicates.

When eliminating the duplicates, DetectDuplicate does not work as expected when FlowFiles with two different filenames are introduced. Is this expected behavior? How do we handle this scenario so that we get a unique list of multiple files over a period of time?

The first GenerateFlowFile processor sets the filename to file1.txt and the second sets it to file2.txt.

When I disable one of the GenerateFlowFile processors, duplicates are filtered as expected. But when both GenerateFlowFile processors are running, we get duplicates.

Thank you