Low memory Impala nodes (e.g. 15GB RAM)

- Labels: Apache Impala

Created on 04-18-2017 01:04 PM - edited 09-16-2022 04:28 AM

I notice that the documentation suggests 64GB to 128GB of RAM for each node. On the other hand, I have seen clusters configured with 15GB nodes (EC2 c3.2xlarge).

I don't understand why the documentation suggests such a high memory requirement (+64GB) if the cluster is able to run fine on more modest hardware (e.g. 15GB).

Are those low-memory clusters actually under-performing? There don't seem to be any out-of-memory errors.

Does the recommendation just assume that the database itself is a certain size? Perhaps 15GB is ok because the database is relatively small and the queries are not too demanding.

What is the actual minimum memory necessary for an Impala node?

Created 04-18-2017 01:56 PM

15GB and above is fine for practice purposes, but 64GB and above is recommended at the enterprise level.

To make this clearer, follow the path below in Cloudera Manager; it shows the memory consumed by each service.

Cloudera Manager -> select a Host -> Resource (menu) -> memory

1. Check this on all the nodes, including the node where Cloudera Manager is installed. In an enterprise, it is not surprising for the Cloudera Management Service (mgmt) alone to consume up to 20GB, depending on the traffic.

2. In addition to Impala, other services also require memory by default, e.g. HDFS, YARN, ZooKeeper, Spark, etc.

There is also a difference between one person using a multi-node cluster (1 to n) and multiple people using a multi-node cluster (n to n). The documentation's figure of 64GB and above is based on the n-to-n case.
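The point about other services sharing a host's RAM comes down to simple arithmetic, sketched here in Python. Every number is a hypothetical placeholder, not a measurement from any real cluster, and `memory_left_for_impala` is an illustrative helper, not part of any Cloudera tool. Substitute the values you actually see on the host's memory page.

```python
def memory_left_for_impala(total_gb, other_services_gb, os_reserve_gb=2.0):
    """Return the RAM (in GB) left on a host after the OS and other services.

    All inputs are illustrative assumptions; replace them with values
    observed on your own hosts.
    """
    used = sum(other_services_gb.values()) + os_reserve_gb
    # Never report a negative budget: an oversubscribed host has 0 GB left.
    return max(total_gb - used, 0.0)


# Hypothetical per-service usage on one 15 GB worker node (values in GB).
other_services = {
    "hdfs_datanode": 1.0,
    "yarn_nodemanager": 1.0,
    "zookeeper": 0.5,
}

left = memory_left_for_impala(15.0, other_services)
print(f"Approximate RAM left for Impala: {left:.1f} GB")
```

With these assumed figures, about 10.5 GB of a 15 GB node would remain for Impala. The same calculation on a node that also hosts the management services could leave far less.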

Created 04-18-2017 02:16 PM

Thank you, saranvisa.

I think that basically we are a very light system, effectively a 1 to n relationship because of so few users right now.

I checked the memory usage of all the nodes in the cluster, and it is around 2GB for virtually all of them, except for the one running Cloudera Manager, which is at 10GB.

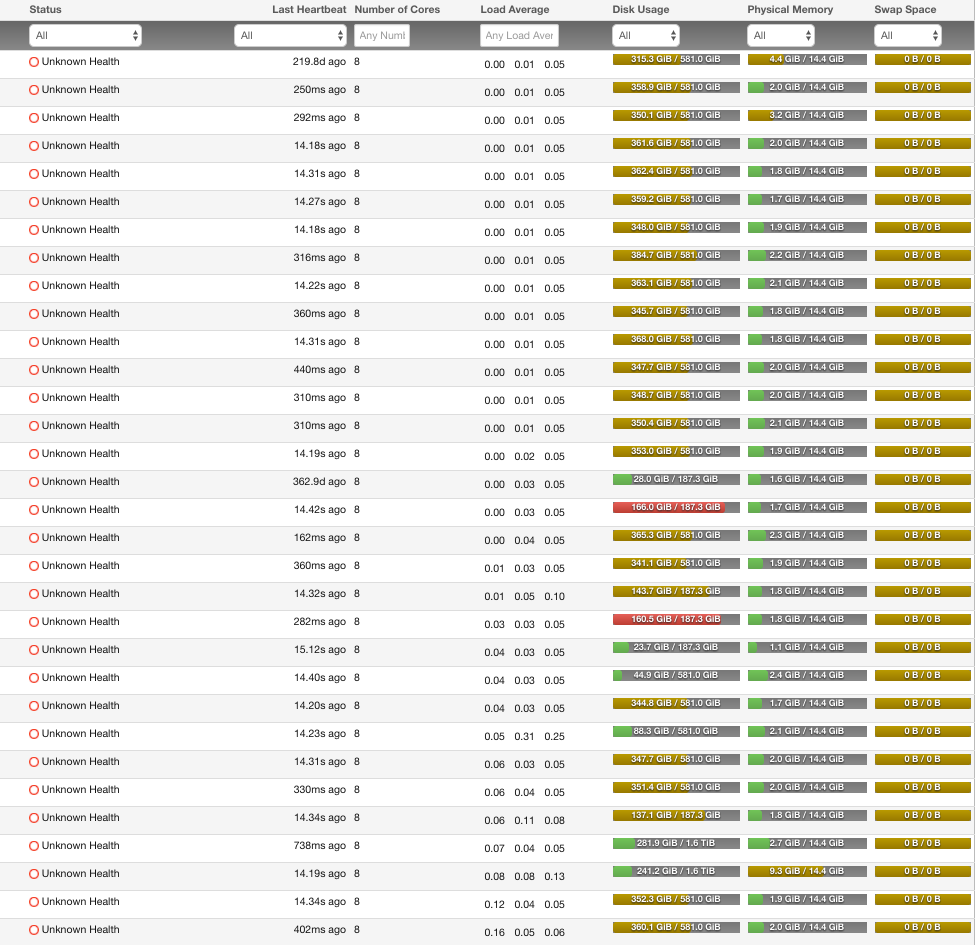

Here is a screenshot showing about 90% of the nodes. I am not sure why they say Unknown Health. I think there are errors in the DNS checks.

Does everything else look OK to you?

Thank you so much for your help and quick response. I really, really appreciate it!

Created 04-18-2017 05:38 PM

On the Impala dev team we do plenty of testing on machines with 16GB-32GB RAM (e.g. my development machine has 32GB RAM). So Impala definitely works with that amount of memory.

It's just that with that amount of memory it's not too hard to run into capacity problems if you have a reasonable number of concurrent queries with larger data sizes or more complex queries.

It sounds like maybe the smaller memory instances work well for your workload.
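As a rough illustration of the capacity point above, here is a back-of-the-envelope sketch in Python. It assumes every concurrent query needs the same peak memory on a node, which real workloads will not satisfy; the function name and all figures are hypothetical and do not reflect how Impala itself accounts for memory.

```python
def max_concurrent_queries(impala_mem_gb, per_query_peak_gb):
    """Estimate how many equally sized queries fit in a node's Impala memory.

    A deliberately crude model: it ignores spilling, caching, and any
    per-query variation. Both arguments are assumptions supplied by the caller.
    """
    if per_query_peak_gb <= 0:
        raise ValueError("per_query_peak_gb must be positive")
    return int(impala_mem_gb // per_query_peak_gb)


# Hypothetical comparison: a small node versus a larger one, with an
# assumed 2 GB peak per query per node.
for node_mem_gb in (10.0, 48.0):
    fits = max_concurrent_queries(node_mem_gb, 2.0)
    print(f"{node_mem_gb:.0f} GB for Impala -> about {fits} concurrent queries")
```

Under these assumptions the smaller node fits about 5 such queries at once and the larger one about 24, which is one way to see why a light, few-user workload can be comfortable on modest hardware.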