Cloudera Community: Support Questions

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

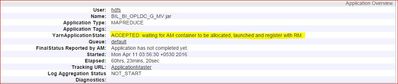

MapReduce job hangs, waiting for AM container to be allocated

- Labels: Apache Hadoop, Apache YARN

Created on 04-13-2016 12:59 PM - edited 08-18-2019 04:04 AM


Hi Team,

The job hangs while importing tables via Sqoop, and the web UI shows the following message:

ACCEPTED: waiting for AM container to be allocated, launched and register with RM

Kindly suggest.
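To list every application stuck in this state, together with the diagnostics message the ResourceManager attaches to it, one option is the ResourceManager REST API (`/ws/v1/cluster/apps?states=ACCEPTED`). Below is a minimal Python sketch, assuming the RM web UI is reachable at the hypothetical address `http://rm-host:8088` without Kerberos/SPNEGO authentication; the helper names are also hypothetical, so adjust them for your cluster.

```python
import json
import urllib.request

# Hypothetical ResourceManager web address; replace with your RM host and port.
RM_URL = "http://rm-host:8088"


def stuck_apps(payload):
    """Return (id, name, queue, diagnostics) for ACCEPTED apps in a /ws/v1/cluster/apps response."""
    # The RM returns {"apps": null} when no application matches the filter.
    apps = (payload.get("apps") or {}).get("app") or []
    return [
        (a.get("id"), a.get("name"), a.get("queue"), a.get("diagnostics", ""))
        for a in apps
        if a.get("state") == "ACCEPTED"  # filter again in case the RM ignores ?states=
    ]


def fetch_accepted_apps(rm_url=RM_URL):
    """Ask the RM REST API for applications currently in the ACCEPTED state."""
    url = rm_url + "/ws/v1/cluster/apps?states=ACCEPTED"
    req = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)
```

Calling `stuck_apps(fetch_accepted_apps())` returns one tuple per waiting application; the diagnostics text should match what the web UI shows for that application.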

Created 04-13-2016 06:23 PM


There are 2 unhealthy nodes. If you click on "2" under the Unhealthy Nodes section of the ResourceManager web UI, you will see why they are unhealthy; it could be because of a bad disk, for example. Please check that, and also check the NodeManager logs, which will give you more information.
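The same unhealthy-node information is exposed by the ResourceManager REST API: `/ws/v1/cluster/nodes?states=UNHEALTHY` returns each node with a `healthReport` field that carries the reason (for example, failed local or log directories). A minimal Python sketch, again assuming a hypothetical, unsecured RM at `http://rm-host:8088` and hypothetical helper names:

```python
import json
import urllib.request

# Hypothetical ResourceManager web address; replace with your RM host and port.
RM_URL = "http://rm-host:8088"


def unhealthy_nodes(payload):
    """Return (node id, health report) pairs for UNHEALTHY nodes in a /ws/v1/cluster/nodes response."""
    # The RM returns {"nodes": null} when no node matches the filter.
    nodes = (payload.get("nodes") or {}).get("node") or []
    return [
        (n.get("id"), n.get("healthReport", ""))
        for n in nodes
        if n.get("state") == "UNHEALTHY"  # filter again in case the RM ignores ?states=
    ]


def fetch_unhealthy_nodes(rm_url=RM_URL):
    """Ask the RM REST API for nodes currently in the UNHEALTHY state."""
    url = rm_url + "/ws/v1/cluster/nodes?states=UNHEALTHY"
    req = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)
```

Each returned pair is a node id and its health report; a node that reports bad disks is a good place to start the NodeManager log check.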

Created 01-11-2017 01:26 PM


Hi @Nilesh,

I am facing the same issue too. Could you please suggest a solution if you have one?

Created 08-03-2018 02:52 PM


Hi all,

I am also facing the same issue, but my server's hard disk is new, and there are no warnings or alerts in Ambari.

The second job is only allowed to run once my current job completes.

If my first job runs for 60 minutes, my second job stays on hold for that whole time. Any suggestions?

Server capacity: 16 cores, 64 GB RAM

Thx

Muthu

Created 09-04-2018 03:22 PM


Hi, this looks like FIFO scheduling, or capacity scheduling with only one queue. Try switching to fair scheduling in YARN.

Regards,

Volker
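For reference, the scheduler is selected by the `yarn.resourcemanager.scheduler.class` property in `yarn-site.xml`, and `org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler` is the Fair Scheduler class. The Python sketch below only illustrates the change by rewriting that property in a `yarn-site.xml` string; the helper names are hypothetical. On an Ambari-managed cluster you would normally make the change in Ambari instead, since Ambari manages that file, and the ResourceManager has to be restarted to pick it up.

```python
import xml.etree.ElementTree as ET

SCHEDULER_KEY = "yarn.resourcemanager.scheduler.class"
FAIR_SCHEDULER = "org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler"


def set_property(root, name, value):
    """Set a <property><name/><value/></property> entry under a Hadoop <configuration> root.

    Updates an existing entry with the same name instead of adding a duplicate.
    """
    for prop in root.findall("property"):
        if prop.findtext("name") == name:
            val = prop.find("value")
            if val is None:
                val = ET.SubElement(prop, "value")
            val.text = value
            return root
    prop = ET.SubElement(root, "property")
    ET.SubElement(prop, "name").text = name
    ET.SubElement(prop, "value").text = value
    return root


def use_fair_scheduler(xml_text):
    """Return yarn-site.xml text with the ResourceManager switched to the Fair Scheduler."""
    root = ET.fromstring(xml_text)
    set_property(root, SCHEDULER_KEY, FAIR_SCHEDULER)
    return ET.tostring(root, encoding="unicode")
```

ElementTree's default parser drops XML comments, so keep a backup of the original file if you edit it this way.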
