Message size exceeded in Publish_Kafka_0_10

Labels: Apache Kafka, Apache NiFi

Created on 06-12-2017 03:01 PM - edited 08-17-2019 09:40 PM

From what I understand according to: https://community.hortonworks.com/questions/70355/nifi-putkafka-processor-maximum-allowed-data-size....

Publish_Kafka_0_10 was configured to receive messages larger than 1 MB, but I am still getting the same error (see screenshot). Does anyone know if this was fixed in the Publish_Kafka_0_10 processor?

My NiFi version is 1.1.2.

Created 06-12-2017 05:28 PM

What do you have the value of "Max Request Size" set to in PublishKafka_0_10?

From the error message it looks like you still have it set to 1MB which is 1048576 bytes.

Created 06-12-2017 05:39 PM

Yeah I just tried to change it to 2 MB and got the error shown in my response above.

Created 06-12-2017 05:49 PM

The TokenTooLargeException was coming from NiFi's code comparing against the "Max Request Size" property, which is why changing it to 2MB got past that error.

The RecordTooLargeException is coming from the Kafka client code... I think the Kafka client is talking to the broker and determining that the message is too large based on the broker's config, but I'm not 100% sure.

The first answer on this post might help:

https://stackoverflow.com/questions/21020347/kafka-sending-a-15mb-message

Also may want to make sure you can publish a 2MB message from outside of NiFi, which right now I would suspect you can't until changing some config on the broker.
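To make the two-stage failure described above easier to follow, here is a minimal Python sketch of the order in which the checks appear to fire. It is only a model of the explanation in this thread, not NiFi or Kafka code: the function name `first_failing_check` is hypothetical, and the broker limit of 1,000,012 bytes used in the example is an assumed value, so check the actual broker configuration.

```python
def first_failing_check(message_bytes: int,
                        max_request_size: int,
                        broker_max_bytes: int) -> str:
    """Return which size check (if any) would reject a message.

    Simplified model: NiFi compares against "Max Request Size" first,
    then the Kafka side rejects anything above the broker's limit.
    """
    if message_bytes > max_request_size:
        return "TokenTooLargeException"   # raised by NiFi's own check
    if message_bytes > broker_max_bytes:
        return "RecordTooLargeException"  # raised on the Kafka side
    return "accepted"


ONE_MB = 1_048_576
ASSUMED_BROKER_LIMIT = 1_000_012  # assumption for illustration only

message = int(1.5 * ONE_MB)  # a 1.5 MB message

# Max Request Size still at 1 MB: NiFi rejects the message first.
print(first_failing_check(message, ONE_MB, ASSUMED_BROKER_LIMIT))

# Max Request Size raised to 2 MB: the error moves to the Kafka side.
print(first_failing_check(message, 2 * ONE_MB, ASSUMED_BROKER_LIMIT))

# Both limits raised to 2 MB: the message goes through.
print(first_failing_check(message, 2 * ONE_MB, 2 * ONE_MB))
```

Under these assumptions, raising only the processor property changes which error appears, which matches the switch from TokenTooLargeException to RecordTooLargeException reported in this thread.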

Created 06-13-2017 12:42 PM

Thanks Bryan. Just for my understanding, these aren't events right? They are messages? What is a message, in terms of this kind of error?

I was thinking this was a single log file event that was over 2 MB or whatever. But now I suspect it's different. Can you help me understand?

Created 06-13-2017 01:35 PM

A message just means whatever you are sending to Kafka. For example, if you used Kafka's console producer from their documentation like:

bin/kafka-console-producer.sh --broker-list localhost:9092 --topic streams-file-input < file-input.txt

Each line of file-input.txt would be published to the topic, so each line here is what I am describing as a message.

The RecordTooLargeException indicates that there was a call to publish a message, and the message exceeded the maximum size that the broker is configured for.

In NiFi, the messages being published will be based off the content of the flow file... If you specify a "Message Demarcator" in the processor properties, then it will read the content of the flow file and separate it based on the demarcator, sending each separate piece of data as a message. If you don't specify a demarcator then it will take the entire content of the flow file and make a single publish call for the whole content.
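The effect of the demarcator can be illustrated with a short Python sketch. This is a simplified model of the behavior described above, not NiFi's implementation: `split_into_messages` and `oversized` are hypothetical helpers, the 15-line flow file is made-up data, and skipping empty pieces is a choice of this sketch.

```python
from typing import List, Optional


def split_into_messages(content: bytes,
                        demarcator: Optional[bytes]) -> List[bytes]:
    """Return the messages one flow file would produce (simplified)."""
    if not demarcator:
        # No demarcator: the whole content is published as one message.
        return [content]
    # With a demarcator: each non-empty piece becomes its own message.
    return [part for part in content.split(demarcator) if part]


def oversized(messages: List[bytes], max_bytes: int) -> List[int]:
    """Return the sizes of all messages larger than max_bytes."""
    return [len(m) for m in messages if len(m) > max_bytes]


# A made-up flow file of about 2.5 MB: 15 newline-delimited lines.
line = b"x" * 170_000
flow_file = b"\n".join([line] * 15)
limit = 1_048_576  # 1 MB

whole = split_into_messages(flow_file, None)
per_line = split_into_messages(flow_file, b"\n")

print(len(whole), oversized(whole, limit))        # one large message
print(len(per_line), oversized(per_line, limit))  # 15 small messages
```

In this model, the same flow file exceeds a 1 MB limit when sent as a single message but stays well under it once a newline demarcator splits it into per-line messages.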

In the latest version of NiFi, there is also PublishKafka_0_10_Record which would read the incoming flow file using a configured record reader and send each record to Kafka as a message.

Created 06-13-2017 02:34 PM

Thanks Bryan, I think you have led me to where I need to go. I think the configurable property that needs to be changed in the broker config is message.max.bytes. I thought I could do that in Ambari, but I can't find that property there, so I am trying to hunt down where to change it.

Created 06-13-2017 02:52 PM

We figured it out Bryan. We didn't have a message demarcator set and once we set it, the error went away! Thank you!

Created on 06-12-2017 05:54 PM - edited 08-17-2019 09:40 PM

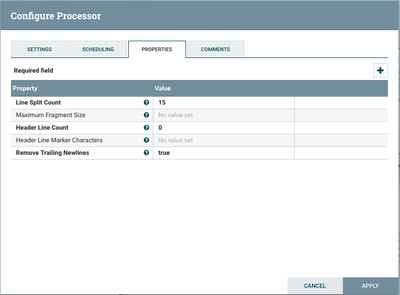

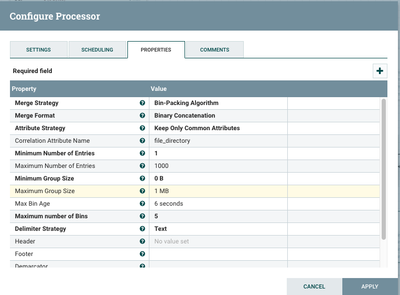

I'm starting to suspect it has something to do with either MergeContent or the SplitText we're using. I've noticed that when I switch SplitText to Line Count 1 the error doesn't happen, but then we get terrible bottlenecks that break the system. We currently have it at 15, but this produces the error in PublishKafka_0_10. Also, when I checked the data provenance for Publish_Kafka_0_10, I saw that it was producing files of 2.5+ MB, which makes me think it's MergeContent. Here are the screenshots.

Created on 06-12-2017 05:38 PM - edited 08-17-2019 09:40 PM

I updated the Max Request Size in Publish_Kafka_0_10 to 2 MB and it produced a different error, saying the message is larger than the server will accept, but it no longer lists the size limit. This one is a RecordTooLargeException, while the last one was a TokenTooLargeException.