Metadata and lineage collection for S3

Labels: Cloudera Manager, Cloudera Navigator

Created 11-26-2018 06:47 PM

Hello,

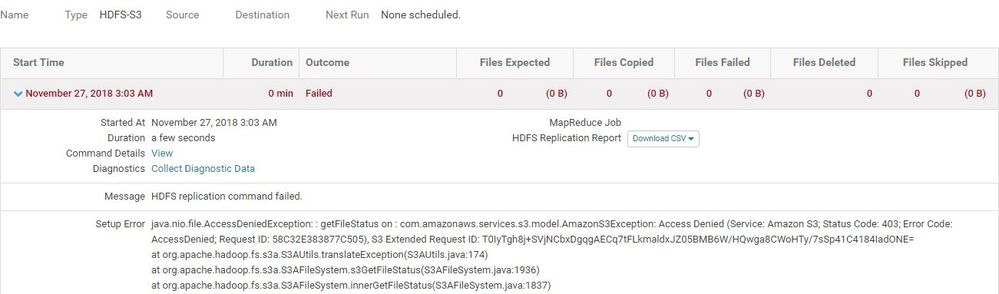

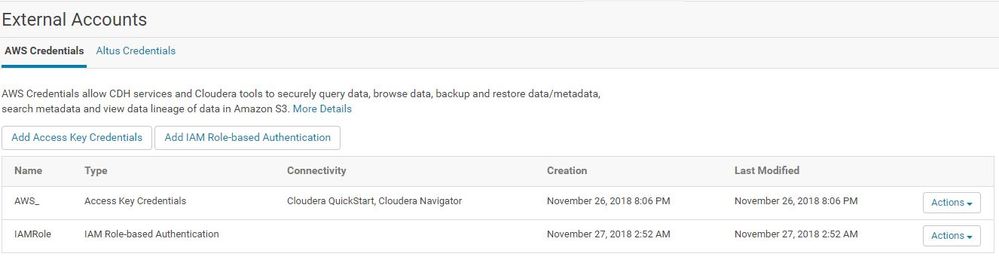

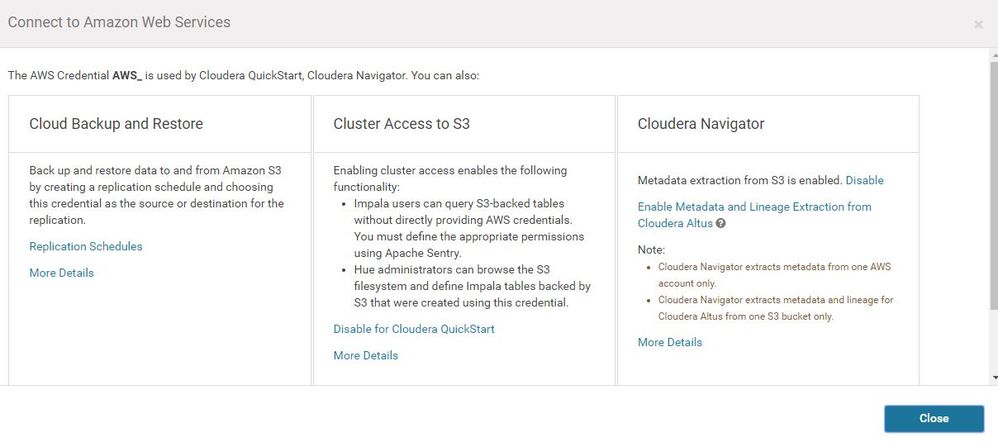

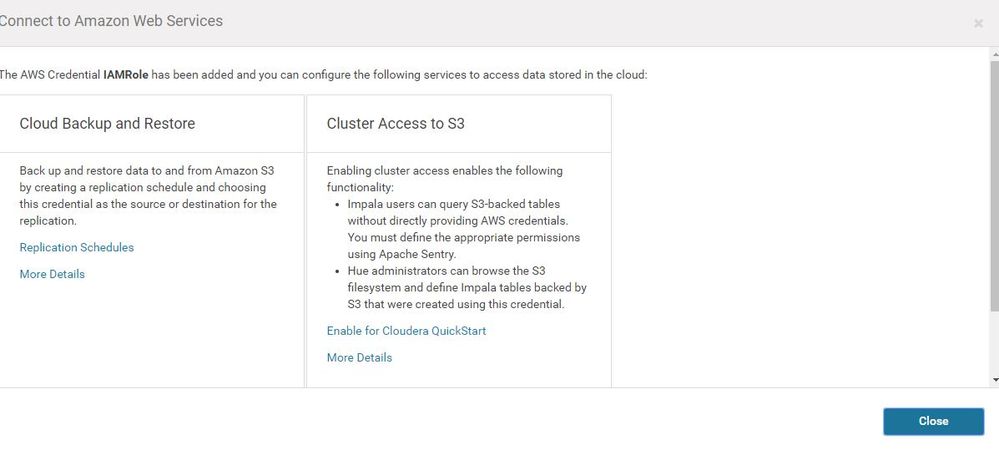

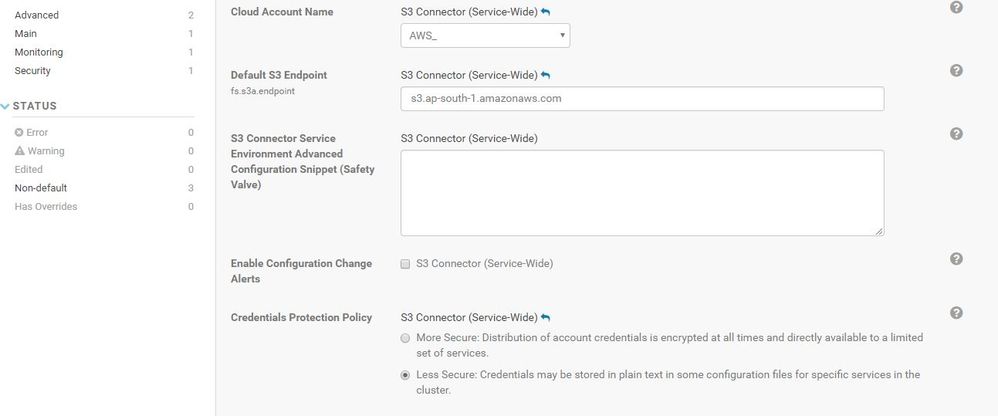

I am trying to collect metadata and lineage information for S3 in Cloudera Navigator. For this I applied the configuration shown in the screenshots, and while running an HDFS replication I get the error shown in them.

CDH version used: 5.13.0

Could you please help with creating metadata and lineage for S3 in Cloudera Navigator?

Created 11-28-2018 12:54 PM

Nukala,

From the error generated in BDR, there is a problem accessing the S3 destination path with the credentials provided.

You can validate whether the credentials are correct via the CLI:

hadoop distcp -Dfs.s3a.access.key=myAccessKey -Dfs.s3a.secret.key=mySecretKey /user/hdfs/mydata s3a://myBucket/mydata_backup

Let us know whether this resolves the issue, and share the results if it does not.

Created on 11-29-2018 07:56 AM - edited 11-29-2018 08:40 AM

Hello Seth,

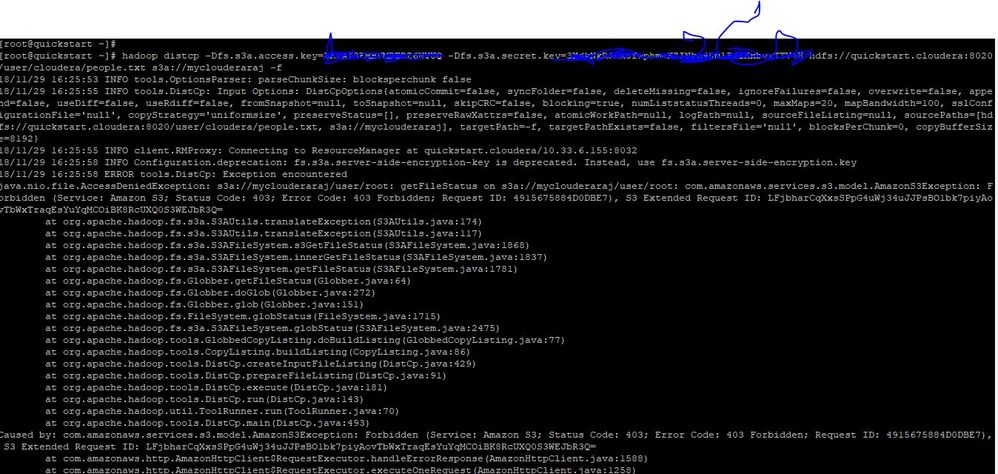

I tried through the CLI and now I get the error below.

I used the following command:

hadoop distcp -Dfs.s3a.access.key=<myaccesskey> -Dfs.s3a.secret.key=<mysecretkey> /user/cloudera/hdfs_error.xml s3a://myclouderaraj

18/11/29 17:14:32 INFO tools.OptionsParser: parseChunkSize: blocksperchunk false

18/11/29 17:14:36 INFO Configuration.deprecation: fs.s3a.server-side-encryption-key is deprecated. Instead, use fs.s3a.server-side-encryption.key

18/11/29 17:14:36 ERROR tools.DistCp: Invalid arguments:

java.lang.IllegalArgumentException: path must be absolute

at com.google.common.base.Preconditions.checkArgument(Preconditions.java:88)

at org.apache.hadoop.fs.s3a.s3guard.PathMetadata.<init>(PathMetadata.java:63)

at org.apache.hadoop.fs.s3a.s3guard.PathMetadata.<init>(PathMetadata.java:55)

at org.apache.hadoop.fs.s3a.s3guard.PathMetadata.<init>(PathMetadata.java:51)

at org.apache.hadoop.fs.s3a.s3guard.S3Guard.putAndReturn(S3Guard.java:138)

at org.apache.hadoop.fs.s3a.

I also tried this command and ran into the following issue.

I am not sure why it is looking for s3a://myclouderaraj/user/root.

Thanks & Regards,

Rajesh

Created 11-29-2018 10:05 AM

Nukala,

You can validate with AWS, but you need a path below the bucket root for this to work. Use the vendor's S3 tools to confirm which path your user can access, and test with that path.
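Some context on the "path must be absolute" error: the destination s3a://myclouderaraj has nothing after the bucket name, so its path component is empty. A likely reading is that the S3A client treats such a path as relative and resolves it against a per-user working directory, which would explain the lookup under /user/root. The sketch below only illustrates that distinction with plain string handling. `s3a_path_of` and `has_absolute_path` are hypothetical helpers written for this example, not part of Hadoop, and they assume the argument starts with s3a://.

```shell
#!/bin/sh
# Hypothetical helpers for illustration only; not part of Hadoop.

# Print the path component of an s3a:// URI (empty if nothing follows the bucket).
s3a_path_of() {
  s3a_rest="${1#s3a://}"            # strip the scheme, leaving "bucket" or "bucket/path"
  case "$s3a_rest" in
    */*) printf '/%s\n' "${s3a_rest#*/}" ;;   # everything after the first slash
    *)   printf '\n' ;;                        # no slash: empty path component
  esac
}

# Succeed only when the URI carries an absolute path (starts with "/").
has_absolute_path() {
  case "$(s3a_path_of "$1")" in
    /*) return 0 ;;
    *)  return 1 ;;
  esac
}

for uri in s3a://myclouderaraj s3a://myclouderaraj/ s3a://myclouderaraj/root; do
  if has_absolute_path "$uri"; then
    echo "$uri: absolute path '$(s3a_path_of "$uri")'"
  else
    echo "$uri: empty path, would be treated as relative"
  fi
done
```

Under that assumption, writing the destination as s3a://myclouderaraj/ or s3a://myclouderaraj/root gives the client an absolute path to work with.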

You can also test with this command:

hdfs dfs -ls -Dfs.s3a.access.key=myAccessKey -Dfs.s3a.secret.key=mySecretKey s3a://myBucket/mydata_backup

Created on 11-29-2018 10:49 AM - edited 11-29-2018 10:56 AM

Seth,

From the AWS CLI I tried the following, and it succeeded:

C:\Users\rajesh.nukala\Desktop>aws s3 cp temp.json s3://myclouderaraj/root/

upload: .\temp.json to s3://myclouderaraj/root/temp.json

I also tried the command you provided, but I see the error below:

[root@quickstart ~]# hdfs dfs -ls -Dfs.s3a.access.key=<myaccesskey> -Dfs.s3a.secret.key=<mysecretkey> s3a://myclouderaraj/root

-ls: Illegal option -Dfs.s3a.access.key=<myaccesskey>

Usage: hadoop fs [generic options] -ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [<path> ...]

[root@quickstart ~]#

[root@quickstart ~]# hdfs dfs -Dfs.s3a.access.key=<myaccesskey> -Dfs.s3a.secret.key=<mysecretkey> -ls s3a://myclouderaraj/root

18/11/29 19:19:31 INFO Configuration.deprecation: fs.s3a.server-side-encryption-key is deprecated. Instead, use fs.s3a.server-side-encryption.key

ls: s3a://myclouderaraj/root: getFileStatus on s3a://myclouderaraj/root: com.amazonaws.services.s3.model.AmazonS3Exception: Forbidden (Service: Amazon S3; Status Code: 403; Error Code: 403 Forbidden; Request ID: 062979117C7D7717), S3 Extended Request ID: HKTksk3mPhuVDGDxTfZjE6ElvqYGwO8+a7ryv5IQ14mBF721gfNGI6Xluvv/m0csI05KH0mLO7g=

[root@quickstart ~]#

Created on 11-29-2018 11:07 AM - edited 11-29-2018 06:25 PM

On Hortonworks I was able to run the following command with the same credentials:

[root@sandbox ~]# hdfs dfs -Dfs.s3a.access.key=myaccesskey -Dfs.s3a.secret.key=mysecretkey -ls s3a://myclouderaraj/root

Found 1 items

-rw-rw-rw- 1 root 2121 2018-11-29 18:32 s3a://myclouderaraj/root/temp.json

Is there anything specific to Cloudera?

Created 12-03-2018 03:41 AM

I can copy successfully in CDH 5.7, but I get the error I mentioned in CDH 5.13. Do we need to update anything to get this working in CDH 5.13? Could you please help with this issue?

Thanks & Regards,

Rajesh

Created 12-03-2018 07:47 AM

Rajesh,

Try placing the -Dfs.s3a.* options before -ls, as this ordering works in your example from the Hortonworks cluster.

- not working [quickstart]-

hdfs dfs -ls -Dfs.s3a.access.key=<myaccesskey> -Dfs.s3a.secret.key=<mysecretkey> s3a://myclouderaraj/root

-ls: Illegal option -Dfs.s3a.access.key=<myaccesskey>

- working [sandbox] -

hdfs dfs -Dfs.s3a.access.key=myaccesskey -Dfs.s3a.secret.key=mysecretkey -ls s3a://myclouderaraj/root

Try from Cloudera [quickstart]:

hdfs dfs -Dfs.s3a.access.key=myaccesskey -Dfs.s3a.secret.key=mysecretkey -ls s3a://myclouderaraj/root
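The ordering rule (generic -D options first, then the subcommand and its arguments) can be kept in one place with a small wrapper. This is only a sketch: `s3a_dfs`, `S3A_ACCESS_KEY`, `S3A_SECRET_KEY`, and `DRY_RUN` are names made up for this example. With DRY_RUN=1 it prints the command instead of running it.

```shell
#!/bin/sh
# Hypothetical wrapper; the function and variable names are invented for this sketch.
# It reads the keys from environment variables and always places the generic
# -D options before the subcommand (-ls, -put, ...), matching the usage text
# "hadoop fs [generic options] -ls ...".
s3a_dfs() {
  set -- hdfs dfs \
    "-Dfs.s3a.access.key=${S3A_ACCESS_KEY:?set S3A_ACCESS_KEY first}" \
    "-Dfs.s3a.secret.key=${S3A_SECRET_KEY:?set S3A_SECRET_KEY first}" \
    "$@"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Dry run: print the full command line (note: this includes the secret key).
    echo "$*"
  else
    "$@"
  fi
}

# Example (commented out because it needs a Hadoop client and real keys):
#   export S3A_ACCESS_KEY=myaccesskey S3A_SECRET_KEY=mysecretkey
#   s3a_dfs -ls s3a://myclouderaraj/root
```

Keeping the keys in environment variables also keeps them out of the command you paste into a forum post, although the dry-run output still contains them.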

If this succeeds, you will need to add these parameters to a *-site.xml file for it to work.

S3 credentials can be provided in a configuration file (for example, core-site.xml):

<property>

<name>fs.s3a.access.key</name>

<value>...</value>

</property>

<property>

<name>fs.s3a.secret.key</name>

<value>...</value>

</property>

Let me know if you are successful.

Thanks,

Seth

Created 12-03-2018 09:40 AM

Hi Seth,

I tried the following commands, none of which work:

[root@quickstart ~]# hdfs dfs -Dfs.s3a.access.key=myaccesskey -Dfs.s3a.secret.key=mysecretkey -DFS.s3a -ls s3a://myclouderaraj/root

18/12/03 18:01:27 INFO Configuration.deprecation: fs.s3a.server-side-encryption-key is deprecated. Instead, use fs.s3a.server-side-encryption.key

ls: s3a://myclouderaraj/root: getFileStatus on s3a://myclouderaraj/root: com.amazonaws.services.s3.model.AmazonS3Exception: Forbidden (Service: Amazon S3; Status Code: 403; Error Code: 403 Forbidden; Request ID: 78B0773829C23DB5), S3 Extended Request ID: gOFP7ib+VT7lkUo3A/AMjXGKrqNebIPNHSbHRXGsZU9dzkxtw8dceLCNfkkFkypbfPNFN6Pqe1M=

[root@quickstart ~]#

[root@quickstart ~]# hdfs dfs -Dfs.s3a.access.key=myaccesskey -Dfs.s3a.secret.key=mysecretkey -ls s3a://myclouderaraj/root

18/12/03 18:07:11 INFO Configuration.deprecation: fs.s3a.server-side-encryption-key is deprecated. Instead, use fs.s3a.server-side-encryption.key

ls: s3a://myclouderaraj/root: getFileStatus on s3a://myclouderaraj/root: com.amazonaws.services.s3.model.AmazonS3Exception: Forbidden (Service: Amazon S3; Status Code: 403; Error Code: 403 Forbidden; Request ID: 4BC4A44FB6133284), S3 Extended Request ID: dD8H3FqvwSrWxqfIWmNbjS+xzC5v8t0yUtQOGwJztzpKtMZ2sZ0k73RbUDxsv3pZzsC5u9Hg4as=

[root@quickstart ~]#

[cloudera@quickstart ~]$ hdfs dfs -Dfs.s3a.access.key=myaccesskey -Dfs.s3a.secret.key=mysecretkey -DFS.s3a -ls s3a://myclouderaraj/root

18/12/03 18:04:56 INFO Configuration.deprecation: fs.s3a.server-side-encryption-key is deprecated. Instead, use fs.s3a.server-side-encryption.key

ls: s3a://myclouderaraj/root: getFileStatus on s3a://myclouderaraj/root: com.amazonaws.services.s3.model.AmazonS3Exception: Forbidden (Service: Amazon S3; Status Code: 403; Error Code: 403 Forbidden; Request ID: 9CCAB47BBC71B470), S3 Extended Request ID: Z/N0DveFbFio6AmyEk5EkIn4BfgNnRutSIdQ26xNYy9PDyliY87AadWM8ONEDggd1hRN+XVu1yw=

[cloudera@quickstart ~]$

[cloudera@quickstart ~]$

[cloudera@quickstart ~]$ hdfs dfs -Dfs.s3a.access.key=myaccesskey -Dfs.s3a.secret.key=mysecretkey -ls s3a://myclouderaraj/root

18/12/03 18:05:12 INFO Configuration.deprecation: fs.s3a.server-side-encryption-key is deprecated. Instead, use fs.s3a.server-side-encryption.key

ls: s3a://myclouderaraj/root: getFileStatus on s3a://myclouderaraj/root: com.amazonaws.services.s3.model.AmazonS3Exception: Forbidden (Service: Amazon S3; Status Code: 403; Error Code: 403 Forbidden; Request ID: 75349677808F4357), S3 Extended Request ID: ObgEtqWMjCZQ4R8uMJf/R+x0msuPE5IQiMLnSFFTTJSH2NvZf4AtPUM8m5uncrxLeLqDfe8jEIM=

[cloudera@quickstart ~]$

Thanks & Regards,

Rajesh

Created 12-06-2018 07:38 AM

Rajesh,

You are passing a different key or a different configuration on the s3a:// connection attempt in the working environment than in the non-working one.

To check, you could enable debug logging and compare what the working cluster (sandbox) and the non-working cluster (quickstart) pass to the S3A endpoint.

[quickstart]

# export HADOOP_ROOT_LOGGER=TRACE,console

# export HADOOP_JAAS_DEBUG=true

# export HADOOP_OPTS="-Dsun.security.krb5.debug=true"

# hdfs dfs -Dfs.s3a.access.key=myaccesskey -Dfs.s3a.secret.key=mysecretkey -ls s3a://myclouderaraj/root

** Note that the value of each parameter, such as "myaccesskey", must be correct or the connection will fail.
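When comparing the two runs, it helps to save each cluster's output to a file and strip the credentials before sharing it. The sketch below is one way to do that. `redact_s3a_keys` is a hypothetical helper, and it only masks values written as fs.s3a.access.key=... or fs.s3a.secret.key=..., so the log should still be reviewed by hand because debug output may contain the keys in other forms.

```shell
#!/bin/sh
# Hypothetical helper: mask S3A credentials in text read from stdin.
# Only the "-Dfs.s3a.access.key=VALUE" / "-Dfs.s3a.secret.key=VALUE" form is covered.
redact_s3a_keys() {
  sed -E 's/(fs\.s3a\.(access|secret)\.key=)[^[:space:]]+/\1<redacted>/g'
}

# Usage sketch (needs a Hadoop client; the log file name is arbitrary):
#   hdfs dfs -Dfs.s3a.access.key=myaccesskey -Dfs.s3a.secret.key=mysecretkey \
#     -ls s3a://myclouderaraj/root 2>&1 | redact_s3a_keys > quickstart-debug.log
```

Running the same pipeline on the sandbox and on quickstart gives two files that can be compared side by side.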

Thanks

Seth