NIFI : RPG site-to-site

Labels: Apache NiFi

Created 11-23-2016 01:20 PM

Hi all,

I'm trying to build a dataflow as explained in this tutorial: site-to-site

It explains how to use ListHDFS and FetchHDFS.

Is it possible to use a Remote Process Group (RPG) inside the local cluster (I have one cluster with 3 nodes)?

Why can't I use an RPG and an input port inside a specific process group? Why must I create the dataflow at the top level?

Created 11-23-2016 01:39 PM

Hello @mayki wogno

It is certainly possible to use site-to-site (s2s) to send data to and from the same cluster of nodes. This is commonly done to rebalance data across a cluster at key points chosen by the user.

As for your second question, about why RPG placement and port placement work the way they do, here are the scenarios.

1) You want to push data to another system using s2s

For this you can place an RPG anywhere you like in the flow and direct your data to a specific s2s port on it.

2) You want to pull data from another system using s2s

For this you can place an RPG anywhere you like in the flow and source data from a specific s2s port on it.

3) You want to allow another system to push to yours using s2s

For this you can expose a remote input port at the root level of the flow. Other systems can then push to it as described in #1.

4) You want to allow another system to pull from yours using s2s

For this you can expose a remote output port at the root level of the flow. Other systems can then pull from it as described in #2 above.

In scenarios 3 and 4, the idea is that your system acts as a broker of data, and the external systems control when they give data to you and take it from you. Your system simply provides well-published, documented control points that state what those ports are for. We want this to be very explicit and clear, so we require these ports to be at the root group level. You can then direct any received data to specific internal groups as needed, or source data from internal groups to expose it for pulling.

If we instead allowed these ports to live anywhere in the flow, it would work, but we have found that it makes flows harder to maintain. People end up treating each flow as a discrete one-off, stovepipe configuration, which generally does not reflect what really happens with flows: rarely does data go from one place to one place; more often it is a graph of inter-system exchange.
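To make the four scenarios concrete, here is a minimal sketch of the placement rule as described in this answer. It is a toy model only: the names `Placement`, `is_allowed`, and the component constants are hypothetical and are not part of NiFi.

```python
from dataclasses import dataclass

# Component kinds used in this toy model (illustrative names, not NiFi classes).
RPG = "remote_process_group"
REMOTE_INPUT_PORT = "remote_input_port"
REMOTE_OUTPUT_PORT = "remote_output_port"


@dataclass(frozen=True)
class Placement:
    kind: str
    group_is_root: bool  # True when the component sits in the root group


def is_allowed(p: Placement) -> bool:
    """Apply the placement rule described in the answer above."""
    if p.kind == RPG:
        # Scenarios 1 and 2: the side that initiates a push or pull
        # can live anywhere in the flow.
        return True
    if p.kind in (REMOTE_INPUT_PORT, REMOTE_OUTPUT_PORT):
        # Scenarios 3 and 4: ports offered to other systems must be
        # at the root group level.
        return p.group_is_root
    raise ValueError(f"unknown component kind: {p.kind}")
```

Under this rule, `is_allowed(Placement(RPG, group_is_root=False))` is true, while a remote input port inside a nested group is rejected.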

Anyway, hopefully that helps give context for why it works the way it does.

Created 11-23-2016 01:52 PM

Thanks it is clear.

Another question, about the URL of the RPG: is it possible to use a VIP as the URL?

Otherwise the URL is a single point of failure if that NiFi node crashes.

Created 11-24-2016 12:40 AM

Hello @mayki wogno

Yes, a VIP should work as the URL of an RPG. A load balancer such as HAProxy can also be placed in front of a NiFi cluster, with its host:port used as the RPG URL.

After the RPG gets the cluster information from that URL (the request is forwarded to one of the NiFi nodes), it accesses each node directly by its IP address or hostname to transfer data.
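The two-step behaviour described above (discover the nodes through one URL, then talk to each node directly) can be sketched as follows. This is a toy model under stated assumptions: `distribute`, `fake_fetch_peers`, the host names, and the ports are all hypothetical, and the plain round-robin is a simplification rather than NiFi's actual peer selection.

```python
from itertools import cycle
from typing import Callable, Iterable, List, Tuple


def distribute(bootstrap_url: str,
               fetch_peers: Callable[[str], List[str]],
               flowfiles: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (node, flowfile) pairs for a toy site-to-site transfer."""
    # Step 1: only this discovery call goes through the VIP / load balancer.
    peers = fetch_peers(bootstrap_url)
    if not peers:
        raise RuntimeError("no peers reported by " + bootstrap_url)
    # Step 2: data is sent straight to each node, bypassing the VIP.
    targets = cycle(peers)
    return [(next(targets), ff) for ff in flowfiles]


# Hypothetical stand-in for the cluster's answer to the discovery request.
def fake_fetch_peers(url: str) -> List[str]:
    return ["nifi-1:10443", "nifi-2:10443", "nifi-3:10443"]


plan = distribute("https://nifi-vip.example.com:8443/nifi",
                  fake_fetch_peers,
                  ["a.csv", "b.csv", "c.csv", "d.csv"])
```

One consequence of this design is that the sending side needs network access to every node's own hostname or IP, not just to the VIP.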

Also, there is an ongoing effort to allow multiple URLs for an RPG so that the URL is no longer a SPOF.

Created 11-24-2016 08:12 AM

@kkawamura thanks

Created on 11-24-2016 08:47 AM - edited 08-19-2019 03:42 AM

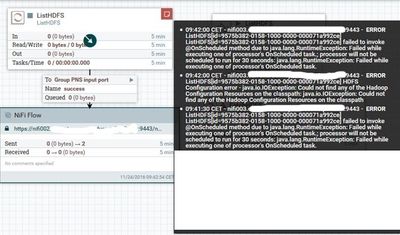

I got a dataflow with listHDFS and RPG but i don't know explain this error :

Created 11-24-2016 09:03 AM

The second error message in the bulletin indicates that none of the Hadoop configuration files could be found.

Please check the file paths configured in the 'Hadoop Configuration Resources' property of ListHDFS. They should point to core-site.xml and hdfs-site.xml, and the files should be readable by the user running NiFi.
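A quick way to verify this outside NiFi is a small script like the one below. It is a sketch, not a NiFi tool: `check_config_resources` is a hypothetical helper that takes the same comma-separated value you entered in the property, and it only tells you something useful when run as the same OS user that runs NiFi.

```python
import os
from typing import Dict


def check_config_resources(resources: str) -> Dict[str, str]:
    """Map each configured path to 'ok', 'missing', or 'not readable'."""
    report = {}
    for raw in resources.split(","):
        path = raw.strip()
        if not path:
            continue  # ignore empty entries such as a trailing comma
        if not os.path.isfile(path):
            report[path] = "missing"
        elif not os.access(path, os.R_OK):
            report[path] = "not readable"
        else:
            report[path] = "ok"
    return report
```

For example, `check_config_resources("/etc/hadoop/conf/core-site.xml,/etc/hadoop/conf/hdfs-site.xml")` reports the status of each file; those paths are only an assumed typical location, so substitute the ones from your own processor configuration.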

Created 11-25-2016 09:17 AM

@kkawamura: the second error is very clear. I was asking about the first error message.

Created 11-25-2016 11:57 AM

Hi @mayki wogno

The first error message was caused by the same error as the second one. The error was reported twice because the ListHDFS processor logged an error message when it caught the exception and then re-threw it; the NiFi framework then caught the exception and logged another error message.

When the NiFi framework catches an exception thrown by a processor, it yields the processor for the amount of time specified by 'Yield Duration'.

Once the processor can successfully access core-site.xml and hdfs-site.xml, both error messages will clear.
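The log-then-rethrow pattern that produces the two messages can be illustrated with a toy model. Nothing here is NiFi code: `ToyListHDFS`, `run_once`, and the `log` list are hypothetical stand-ins for the processor, the framework, and the bulletin board.

```python
from typing import List

log: List[str] = []  # stands in for the bulletin board


class ToyListHDFS:
    """Hypothetical processor that fails until its config files are found."""

    def __init__(self, config_found: bool) -> None:
        self.config_found = config_found

    def on_trigger(self) -> None:
        try:
            if not self.config_found:
                raise FileNotFoundError("core-site.xml not found")
        except FileNotFoundError as exc:
            # First message: the processor logs the failure itself...
            log.append(f"processor: {exc}")
            # ...and then re-throws it to its caller.
            raise


def run_once(processor: ToyListHDFS, yield_seconds: float) -> float:
    """Trigger the processor once; return how long it should be yielded."""
    try:
        processor.on_trigger()
        return 0.0
    except Exception as exc:
        # Second message: the framework logs the same failure again.
        log.append(f"framework: {exc}")
        return yield_seconds


wait = run_once(ToyListHDFS(config_found=False), yield_seconds=1.0)
```

After this run, `log` holds two entries for a single underlying failure, and `wait` is the yield time the framework would apply before triggering the processor again.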