NIFI : deleteHDFS

Labels: Apache NiFi

Created 02-21-2017 10:53 AM

Hi all,

Do you know how to use DeleteHDFS to remove empty directories?

thanks

Created on 02-21-2017 01:16 PM - edited 08-19-2019 03:52 AM

Make sure the user that NiFi runs as is authorized to delete files and directories in your target HDFS.

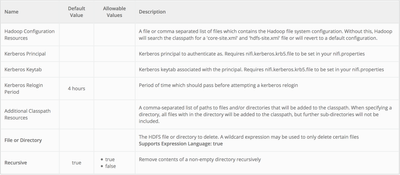

The DeleteHDFS processor properties are as follows:

Thanks,

Matt

Created on 02-21-2017 01:48 PM - edited 08-19-2019 03:52 AM

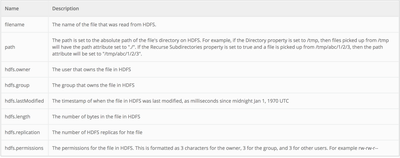

FlowFiles generated by the listHDFS processor all have a "path" attribute created on them:

That attribute could be used to trigger your directory deletion via the DeleteHDFS processor.

What is difficult here is determining when all data has been successfully pulled from an HDFS directory before deleting the directory itself.

You could try using two DeleteHDFS processors in series with one another. The first DeleteHDFS deletes the files from the target "path" of the incoming FlowFiles and the second deletes the directory (Recursive property set to false).
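To illustrate why the second, non-recursive delete only succeeds once the last file is gone, here is a minimal Python sketch. It uses the local filesystem as a stand-in for HDFS (an assumption made purely for illustration; `os.rmdir` refuses to remove a non-empty directory, much like a non-recursive HDFS delete). The function and directory names are hypothetical.

```python
import os
import tempfile


def delete_file_then_dir(file_path):
    """Delete one file, then try a non-recursive delete of its parent directory.

    Returns True if the directory was removed, False if it was still non-empty.
    """
    parent = os.path.dirname(file_path)
    os.remove(file_path)          # step 1: delete the file itself
    try:
        os.rmdir(parent)          # step 2: only succeeds on an empty directory
        return True
    except OSError:
        return False              # directory still contains other files


def demo():
    """Create a directory with three files and process them one by one."""
    root = tempfile.mkdtemp()
    target = os.path.join(root, "03")
    os.mkdir(target)
    files = []
    for name in ("a.txt", "b.txt", "c.txt"):
        path = os.path.join(target, name)
        with open(path, "w") as fh:
            fh.write("x")
        files.append(path)
    results = [delete_file_then_dir(path) for path in files]
    os.rmdir(root)                # clean up the temporary root
    return results


print(demo())
```

The directory delete fails for every file except the last one, which is the pattern that shows up as repeated "Directory is not empty" warnings in a real flow.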

Matt

Created 02-21-2017 01:26 PM

@Matt: thanks, I've already used this processor for deleting files, but how do I use it with ListHDFS to delete empty directories?

Created 02-21-2017 02:02 PM

@Matt: it partially worked, but we received errors for non-empty directories:

2017-02-21 15:00:28,938 WARN [Timer-Driven Process Thread-8] o.a.nifi.processors.hadoop.DeleteHDFS DeleteHDFS[id=85d330b2-6cdd-1d81-a764-460fe51ef064] Error processing delete for file or directory org.apache.hadoop.ipc.RemoteException: `/user/ml/apply/toto/03 is non empty': Directory is not empty

Created 02-21-2017 02:10 PM

That was the intent... It would only be successful after all files were deleted first. So only after the last file was removed would the directory deletion be successful.

Created 02-21-2017 02:20 PM

It worked, but it is not clean to have warnings in the log file.

Created 02-21-2017 03:09 PM

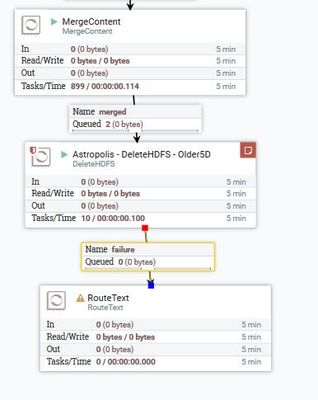

You can reduce or even eliminate the WARN messages by placing a MergeContent processor between your first and second DeleteHDFS processors that merges using "path" as the value of the "Correlation Attribute Name" property. The resulting merged FlowFile(s) would still have the same "path" attribute, which the second DeleteHDFS would use to remove your directory.
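As a rough sketch of what correlating on "path" achieves, the Python below groups FlowFile-like dictionaries by their `path` value and emits one merged record per directory. This is only a model of the idea, not NiFi code: the dictionary layout and the `merge_by_path` name are hypothetical, and a real MergeContent bin is also bounded by its entry-count and bin-age settings, so it may emit more than one merged FlowFile per directory.

```python
from collections import defaultdict


def merge_by_path(flowfiles):
    """Group FlowFile-like dicts by their 'path' attribute.

    Returns one merged record per distinct path, so a downstream
    directory delete is attempted once per directory, not once per file.
    """
    bins = defaultdict(list)
    for ff in flowfiles:
        bins[ff["path"]].append(ff["filename"])
    # Each merged record keeps the shared 'path' attribute.
    return [{"path": path, "merged": names} for path, names in bins.items()]


incoming = [
    {"path": "/user/ml/apply/toto/03", "filename": "a.csv"},
    {"path": "/user/ml/apply/toto/03", "filename": "b.csv"},
    {"path": "/user/ml/apply/toto/04", "filename": "c.csv"},
]
merged = merge_by_path(incoming)
print(merged)
```

Three incoming FlowFiles collapse into two merged ones, so the directory delete runs twice instead of three times, and only after the files for that directory have passed through the first delete.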

Matt

Created on 02-21-2017 04:02 PM - edited 08-19-2019 03:52 AM

@Matt thanks for your help.

Do you know if it is possible to move on to the next FlowFile and send the failed FlowFile to the next processor?

I'm trying to send one of the failed FlowFiles from DeleteHDFS to RouteText, but nothing arrives there.

Created 02-21-2017 04:37 PM

One thing you could do is set "FlowFile Expiration" on the connection containing the "merged" relationship, and set the "Available Prioritizers" to "NewestFlowFileFirstPrioritizer". FlowFile expiration is measured against the age of the FlowFile (from creation time to now), not against how long it has been in a particular connection. If the FlowFile's age exceeds the configured value, it is purged from the queue.
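The combined effect of expiration and a newest-first prioritizer can be modelled in a few lines of Python. This is a simplified sketch under stated assumptions: timestamps are plain numbers in seconds, the `created` field and the function name are hypothetical, and ordering is by creation time only.

```python
def surviving_newest_first(queue, now, expiration_seconds):
    """Drop FlowFiles whose age exceeds the expiration, then order newest first.

    Age is measured from each FlowFile's creation time to 'now',
    not from the moment it entered the queue.
    """
    alive = [ff for ff in queue if now - ff["created"] <= expiration_seconds]
    return sorted(alive, key=lambda ff: ff["created"], reverse=True)


queue = [
    {"name": "old", "created": 0},
    {"name": "mid", "created": 50},
    {"name": "new", "created": 90},
]
order = [ff["name"] for ff in surviving_newest_first(queue, now=100, expiration_seconds=60)]
print(order)
```

With a 60-second expiration at time 100, the FlowFile created at time 0 is purged, and the remaining two are processed newest first, so a FlowFile that keeps failing cannot block newer ones indefinitely.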