Need help on constructing oozie wf

Labels: Apache Oozie

Created 10-05-2016 03:07 AM


Hi,

My requirement is to run a job every 30 minutes.

The job needs to dynamically create a folder named in yyyymmdd format.

It then needs to copy the data from the table and put it into that generated table.

How do I achieve that? I have referred to many links, but had no luck.

Also, kindly clarify the terms below:

<input-events>, <output-events>, <datasets>, <uri-template>

Created 10-05-2016 08:22 AM


You need an Oozie coordinator and a workflow with 2 actions (if I understand your conditions correctly). Set the coordinator frequency to "0/30 * * * *" to run the workflow on every full hour and 30 minutes after every hour. In your coordinator, set a property named, for example, DATE, and set its value as below (see here for details):

${coord:formatTime(coord:nominalTime(), 'yyyy-MM-dd')}

The pattern uses the same syntax as Java's SimpleDateFormat, so for the yyyymmdd folder name you asked for it would be 'yyyyMMdd'.

In your workflow.xml, create 2 actions. The first will be an fs action to create that directory, for example:

<mkdir path="${nameNode}/user/user1/data/${wf:conf('DATE')}"/>

The second action will be a hive or hive2 action to "copy the data from the table and put it into that generated table". [For another coordinator example see this, and click the "Previous page" links to find examples of the property file and a workflow with multiple actions, including hive actions.]
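To make the two pieces above concrete, here is a minimal sketch of how they might fit together. It is an illustration under assumptions, not a tested deployment: the application names, the `startTime`, `endTime`, `workflowAppPath` and `jobTracker` properties, the `copy_table.hql` script and its `OUTPUT_DIR` parameter are all hypothetical, and the schema versions should be checked against your Oozie release (cron-style frequencies need a reasonably recent one). The workflow reads the property with the `wf:conf('DATE')` EL function.

A possible coordinator.xml:

```xml
<!-- Sketch only: names and ${...} properties other than DATE are assumptions -->
<coordinator-app name="copy-every-30-min"
                 frequency="0/30 * * * *"
                 start="${startTime}" end="${endTime}" timezone="UTC"
                 xmlns="uri:oozie:coordinator:0.4">
  <action>
    <workflow>
      <!-- HDFS directory that contains workflow.xml -->
      <app-path>${workflowAppPath}</app-path>
      <configuration>
        <!-- Nominal (scheduled) time of this run, formatted as a date string -->
        <property>
          <name>DATE</name>
          <value>${coord:formatTime(coord:nominalTime(), 'yyyy-MM-dd')}</value>
        </property>
      </configuration>
    </workflow>
  </action>
</coordinator-app>
```

And a matching workflow.xml with the fs action followed by a hive action:

```xml
<!-- Sketch only: the Hive script and its parameter are hypothetical -->
<workflow-app name="copy-table-wf" xmlns="uri:oozie:workflow:0.5">
  <start to="make-dir"/>

  <!-- Action 1: create the dated directory -->
  <action name="make-dir">
    <fs>
      <mkdir path="${nameNode}/user/user1/data/${wf:conf('DATE')}"/>
    </fs>
    <ok to="copy-table"/>
    <error to="fail"/>
  </action>

  <!-- Action 2: run a Hive script that copies the table data -->
  <action name="copy-table">
    <hive xmlns="uri:oozie:hive-action:0.5">
      <job-tracker>${jobTracker}</job-tracker>
      <name-node>${nameNode}</name-node>
      <script>copy_table.hql</script>
      <param>OUTPUT_DIR=${nameNode}/user/user1/data/${wf:conf('DATE')}</param>
    </hive>
    <ok to="end"/>
    <error to="fail"/>
  </action>

  <kill name="fail">
    <message>Workflow failed: ${wf:errorMessage(wf:lastErrorNode())}</message>
  </kill>
  <end name="end"/>
</workflow-app>
```

Passing DATE from the coordinator rather than computing it in the workflow keeps the folder name tied to the scheduled run time, so a delayed or rerun action still writes to the folder for its own day.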

And finally, input-events and datasets are used if the condition for the coordinator to start is the availability of a new dataset. uri-template is used to define where a dataset's instances live, and output-events refers to the data the coordinator action produces. You can find more details here: datasets, coordinator concepts, and an example of a coordinator based on input-events. If you run your coordinator by time frequency, you don't need any of them.
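For reference, a data-triggered coordinator using these four elements might look roughly like the sketch below. The dataset names, HDFS paths, daily frequency and property names are assumptions chosen for illustration only; they do not come from the question.

```xml
<!-- Sketch only: dataset names, paths and ${...} properties are assumptions -->
<coordinator-app name="data-triggered-coord"
                 frequency="${coord:days(1)}"
                 start="${startTime}" end="${endTime}" timezone="UTC"
                 xmlns="uri:oozie:coordinator:0.4">
  <datasets>
    <!-- uri-template says where each daily instance of the dataset lives -->
    <dataset name="raw-logs" frequency="${coord:days(1)}"
             initial-instance="${startTime}" timezone="UTC">
      <uri-template>${nameNode}/data/raw/${YEAR}${MONTH}${DAY}</uri-template>
      <done-flag>_SUCCESS</done-flag>
    </dataset>
    <dataset name="daily-copy" frequency="${coord:days(1)}"
             initial-instance="${startTime}" timezone="UTC">
      <uri-template>${nameNode}/data/copy/${YEAR}${MONTH}${DAY}</uri-template>
    </dataset>
  </datasets>

  <!-- The action waits until this dataset instance is available -->
  <input-events>
    <data-in name="input" dataset="raw-logs">
      <instance>${coord:current(0)}</instance>
    </data-in>
  </input-events>

  <!-- The dataset instance this action is expected to produce -->
  <output-events>
    <data-out name="output" dataset="daily-copy">
      <instance>${coord:current(0)}</instance>
    </data-out>
  </output-events>

  <action>
    <workflow>
      <app-path>${workflowAppPath}</app-path>
      <configuration>
        <property>
          <name>inputDir</name>
          <value>${coord:dataIn('input')}</value>
        </property>
        <property>
          <name>outputDir</name>
          <value>${coord:dataOut('output')}</value>
        </property>
      </configuration>
    </workflow>
  </action>
</coordinator-app>
```

Here the run for a given day starts only once the `_SUCCESS` flag appears in that day's input directory, which is the key difference from the purely time-based coordinator described above.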


Created on 10-05-2016 07:38 PM - edited 08-19-2019 04:10 AM


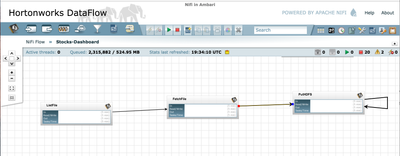

@Gobi Subramani I would suggest that you download and install HDP. It can handle creating the data flow for you. Here's an example of it collecting logs. Instead of writing to an event bus, you could use the putHDFS connector and it would write the data to HDFS for you.

There isn't a lot of trickery needed to get the date/folder to work: you just need to use ${now()} in place of the folder name to get the naming scheme you are looking for. If you look around, there are lots of walkthroughs and templates. I have included a picture of a simple flow that would likely solve your issue.