Need help please. I am using Ambari with HDP 2.3. All the services started when I started them manually the first time, but now they will not start. I am not able to start the DataNode, NameNode, or Secondary NameNode.

Labels: Apache Ambari, Apache Hadoop

Created 02-04-2016 11:49 PM

Below is the exception I am getting:

    Traceback (most recent call last):
      File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/namenode.py", line 433, in <module>
        NameNode().execute()
      File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 219, in execute
        method(env)
      File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/namenode.py", line 102, in start
        namenode(action="start", hdfs_binary=hdfs_binary, upgrade_type=upgrade_type, env=env)
      File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk
        return fn(*args, **kwargs)
      File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/hdfs_namenode.py", line 112, in namenode
        create_log_dir=True
      File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/utils.py", line 267, in service
        Execute(daemon_cmd, not_if=process_id_exists_command, environment=hadoop_env_exports)
      File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 154, in __init__
        self.env.run()
      File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 158, in run
        self.run_action(resource, action)
      File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 121, in run_action
        provider_action()
      File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 238, in action_run
        tries=self.resource.tries, try_sleep=self.resource.try_sleep)
      File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 70, in inner
        result = function(command, **kwargs)
      File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 92, in checked_call
        tries=tries, try_sleep=try_sleep)
      File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 140, in _call_wrapper
        result = _call(command, **kwargs_copy)
      File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 291, in _call
        raise Fail(err_msg)
    resource_management.core.exceptions.Fail: Execution of 'ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode'' returned 1. starting namenode, logging to /var/log/hadoop/hdfs/hadoop-hdfs-namenode-pp-hdp-m.out

Created 02-08-2016 03:06 PM

@Prakash

Have you tried using the internal IP instead?

Please give it a shot if you haven't already.

Created 02-06-2016 03:31 AM

@Saurabh Kumar @Neeraj Sabharwal

I tried rebooting the server and in fact tried to redo everything. I fixed the hostname issue but I am still not able to start the NameNode. It finds port 50070 already in use. I even tried changing this port to something else, but then it says that port is also in use. Below is what it says in the log file:

2016-02-06 00:47:14,934 ERROR namenode.NameNode (NameNode.java:main(1712)) - Failed to start namenode. java.net.BindException: Port in use: pp-hdp-m.asotc:50070

Created 02-06-2016 03:38 AM

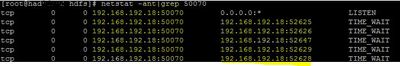

What's the output of netstat -ant | grep 50070?

@Prakash Punj See this https://community.hortonworks.com/articles/14912/osquery-tool-to-troubleshoot-os-processes.html

Use the above to get a better idea of the processes that are running.

Look into the network configs of your hosts.

Created 02-06-2016 03:39 AM

@Prakash Punj This thread is very long. Please paste the output of netstat -anp | grep 50070
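A plain grep for 50070 also matches rows where 50070 is only the remote port, so the output can be misleading. As a rough sketch (Python 3, not part of the original thread), the helper below filters netstat -anp style text down to TCP rows whose local port matches. The function name `listeners_on_port` and the sample rows are hypothetical, and the column layout is assumed to be the usual Linux net-tools one.

```python
def listeners_on_port(netstat_output, port):
    """Return (local_address, state, owner) for TCP rows whose LOCAL port is `port`.

    Assumes the usual Linux `netstat -anp` columns:
    Proto Recv-Q Send-Q Local-Address Foreign-Address State PID/Program
    """
    matches = []
    for line in netstat_output.splitlines():
        fields = line.split()
        # Skip headers, UDP rows, and anything too short to be a TCP row.
        if len(fields) < 6 or not fields[0].startswith("tcp"):
            continue
        local = fields[3]
        # The port is whatever follows the last colon (works for tcp6 too).
        if local.rsplit(":", 1)[-1] != str(port):
            continue
        owner = fields[6] if len(fields) > 6 else "-"
        matches.append((local, fields[5], owner))
    return matches


# Hypothetical sample text, made up for illustration only.
SAMPLE = """\
Proto Recv-Q Send-Q Local Address     Foreign Address   State       PID/Program name
tcp        0      0 0.0.0.0:8080      0.0.0.0:*         LISTEN      1234/java
tcp        0      0 10.0.0.5:50070    0.0.0.0:*         LISTEN      4321/java
tcp        0      0 10.0.0.5:22       10.0.0.9:50070    ESTABLISHED 999/sshd
"""

print(listeners_on_port(SAMPLE, 50070))
```

On a real host the text could come from something like `subprocess.check_output(["netstat", "-anp"], text=True)`, run as root so that the PID/Program column is filled in. An empty result means nothing on that host currently holds the port.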

Created 02-06-2016 04:05 AM

@Prakash Punj See this https://community.hortonworks.com/articles/14912/osquery-tool-to-troubleshoot-os-processes.html

Use the above to get a better idea of the processes that are running.

Created on 02-06-2016 08:26 AM - edited 08-19-2019 02:57 AM

@Saurabh Kumar

I see you are installing Ambari from 192.168.24.118 pp-hdp-m, but the output of your netstat shows the IP 172.24.64.98 using port 50070.

Can you identify this host, 172.24.64.98?

Typically the netstat output should show your hostname on the 4th and 6th lines; see the attached output from my Ambari server. I guess your network configuration is wrong. See my attached screenshot.

My Ambari server is listening on the correct port.

Your hostname looks okay.

1. Edit /etc/sysconfig/network and set the hostname:

    [root@had etc]# vi /etc/sysconfig/network

    NETWORKING=yes
    HOSTNAME=pp-hdp-m

2. Edit the ifcfg-eth* file for each attached NIC (ifcfg-eth0 in this example):

    [root@had ]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

    DEVICE=eth0
    HWADDR=00:15:17:12:F2:50
    TYPE=Ethernet
    UUID=6428569e-e729-4985-974d-e87fc81a8796
    NM_CONTROLLED=yes
    ONBOOT=yes
    BOOTPROTO=dhcp

3. Check the output of ifconfig for the IP addresses mapped to all the attached NICs:

    [root@had ]# ifconfig

Your current IP should map to one of the attached NICs.

If you are on VMware, check the IPs of the other hosts.
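The check in step 3 can be sketched in a few lines of Python 3: resolve the hostname and see whether the result is one of the addresses that ifconfig reports. This is only an illustration. The function name `resolves_to_local_address` is hypothetical, the list of local IPs has to be copied by hand from ifconfig, and the fake resolver below just replays the two addresses mentioned in this thread rather than querying a real host.

```python
import socket


def resolves_to_local_address(hostname, local_ips, resolver=socket.gethostbyname):
    """Return (resolved_ip, is_local).

    `local_ips` is the list of addresses reported by ifconfig on this host.
    `resolver` defaults to the system resolver (/etc/hosts, DNS) and can be
    swapped for a stub when experimenting.
    """
    resolved = resolver(hostname)
    return resolved, resolved in set(local_ips)


def fake_resolver(hostname):
    # Stand-in for illustration: pretend the name resolves to the address
    # that showed up in the netstat output discussed above.
    return {"pp-hdp-m": "172.24.64.98"}[hostname]


# The NIC address below is the one the host was installed from, per the thread.
ip, is_local = resolves_to_local_address(
    "pp-hdp-m", ["127.0.0.1", "192.168.24.118"], resolver=fake_resolver
)
print(ip, is_local)
```

On a real node, drop the `resolver` argument so the system resolver is used. If `is_local` comes back False, the hostname points at an address that no NIC on that machine owns, which would match the mismatch described above.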

Created 02-07-2016 04:58 PM

@Neeraj Sabharwal @Saurabh Kumar

The issue is still there. Below is what I get when I try to start the NameNode from Ambari, and the NameNode log still says "port in use 50070". It looks like there is something very basic that I am missing. I am very new to this, so I don't know much. I tried reimaging all the instances and gave all the devices new IPs, but the result is still the same.

I don't get anything when I try the command: netstat -anp | grep 50070

Thanks

resource_management.core.exceptions.Fail: Execution of 'ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode'' returned 1. starting namenode, logging to /var/log/hadoop/hdfs/hadoop-hdfs-namenode-hdp-m.out

Created 02-07-2016 05:05 PM

@Prakash Punj Are you running this on your laptop?

Created 02-07-2016 05:25 PM

I am using Ambari 2.2 with HDP 2.3 and getting the error when installing the Hadoop services from Ambari. The DataNode starts just fine, but the NameNode fails. It says port 50070 is in use, but there is nothing running on that port. I also tried changing the port to 50071, but the error remains the same: it then says port 50071 is in use. So it's not a question of finding that process and killing it. Something else is missing from the network configuration.

I am using a VM.

Thanks

Prakash, Cary, USA
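One possible explanation for a "port in use" message that follows the port number around, while netstat shows nothing, is that the bind fails because the address is not local rather than because the port is taken. Nothing in this thread confirms that, so treat the following Python 3 sketch as a diagnostic idea only. The function name `probe_bind` and its return labels are hypothetical; the errno values are the standard ones from bind(2).

```python
import errno
import socket


def probe_bind(host, port):
    """Try to bind a TCP socket to host:port and classify the outcome."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind((host, port))
    except socket.gaierror:
        # The hostname could not be resolved at all.
        return "unresolvable"
    except OSError as exc:
        if exc.errno == errno.EADDRINUSE:
            # Another socket really does hold this address and port.
            return "in-use"
        if exc.errno == errno.EADDRNOTAVAIL:
            # The address does not belong to any interface on this host,
            # so every port on it will fail the same way.
            return "address-not-local"
        return "error: %s" % exc
    else:
        return "ok"
    finally:
        sock.close()


# Port 0 asks the kernel for any free port, so this should normally succeed.
print(probe_bind("127.0.0.1", 0))
```

Running `probe_bind("pp-hdp-m.asotc", 50070)` on the NameNode host, as a user allowed to bind that port, would show which case applies. An "address-not-local" result on both 50070 and 50071 would point at hostname resolution rather than at a stray process.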

Created 02-07-2016 05:32 PM

@Prakash Punj I know that, but where is the VM? On your laptop?

Created 02-07-2016 05:49 PM

I am running the VM in a private cloud environment, on a CentOS 6.7 image. It's not on my laptop.

Thanks

Prakash