Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Ni-Fi GenerateTableFetch produces no output if...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Ni-Fi GenerateTableFetch produces no output if the input table is too big

- Labels:

-

Apache NiFi

Created 09-25-2023 01:40 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

HIi

I have created an Apache Ni-Fi data pipeline that fetches data from a MySQL table and after some data transformation loads the data into a postgreSQL table. The first processor is a GenerateTableFetch which is followed by an ExecuteSQL processor.

The pipeline works perfectly with source tables containing over 14 million entries (around 2700 MB). However, with tables containing over 420 million entries (around 69000 MB), the GenerateTableFetch processor does not provide any output.

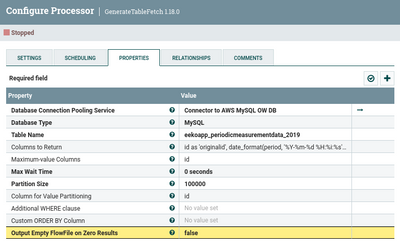

I have used a partition size of 100000 rows and I am using as Column for Value Partitioning the column id which is the primary key in the source table. The same column is also used as Maximimum-value Columns. The Max Wait Time is set to zero, allowing also long SQL queries.

The attached figure shows the other properties of the processor.

In the ExecuteSQL processor I have configured the Max Rows Per Flow File to the same value as the partitioning size: 100000.

I am running Ni-Fi 1.18.0 (java 11.0.20.1) on Linux Ubuntu 22.04.

Any hint how I would manage to load such a huge table?

Thanks,

Bernardo

Created 09-25-2023 02:08 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@BerniHacker, I have no experience with AWS, however I am using GCP .... so it should mostly be the same thing. In terms of GenerateTableFetch, you do not need to use Column for Value Partitioning in the same time as Maximum-value Columns ... I suggest you to only use Maximum-value Columns as you will get the same result but a little bit faster.

Now, regarding your problem. I encountered something similar to what you are stating and if was related to connectivity and quotas set on the cloud environment. I set the GenerateTableFetch on Debug and used Execute Once to see what gets written in the Bulletin Board. In the same time I also opened the NiFi logs and used tail on nifi-app.log to see anything out of the ordinary.

Once the quotas have been increased on the GCP side, I was able to extract around 1,000,000,000 rows without encountering any further issues.

In the same time, I am also extracting data from local Oracle and MySQL Instances, totaling to more than 5B rows between 09:00 and 10:00AM, using a combination of GenerateTableFetch and ExecuteSQLRecord. Never have I encountered an problem with GenerateTableFetch due the size of the table.

What you could also try is to execute the SELECT statement in your IDE and see how much it takes to get the results. Take note that NiFi might be a little slower, depending on where you have it configured as it could waste much time going through proxy and so on.

Created 09-25-2023 04:53 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks a lot for your answer!

By running GenerateTableFetch in DEBUG mode I noticed that it was searching for entries whose id was bigger that the max. The processor has been used with the same source table in testing phase and this was the reason why it was not producing any output from that table.

I have cleared the state of the processor and now it is working as expected.

Have a nice day,

Bernardo

Created 09-25-2023 04:56 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@BerniHacker, I did not even take into consideration the state 🙂 I figured you were trying to execute it for the first time and I assumed from the start that you had nothing saved in your state. Congrats on solving your issue.