Cloudera Community: Support Questions

NiFi Server Configuration

Labels: Apache NiFi

Created 09-13-2016 08:11 PM

Hi, we are trying to set up a standalone NiFi server in our Hadoop environment in the cloud, and we are trying to determine the best configuration for it. We will also have one standalone server on-site that will use site-to-site with the cloud NiFi instance.

We don't have many use cases yet. We may get more in the future, and based on that we may move to a clustered environment.

We may have to load 2 TB of data for a future project. Keeping that in mind, I am trying to figure out suitable server configurations: number of cores, RAM, hard drives, etc.

Thanks,

Sai

Created 10-10-2016 07:12 PM

@mclark,

Can you please share your thoughts?

If we go with eight (8) 600 GB disks in RAID 10, I think we only get 4 disks' worth of storage out of 8, since RAID 10 uses the other 4 as mirrors (the data is striped across the mirrored pairs).

Can we point 3 of them to the content repo and 1 to the provenance repo? That would make 1.8 TB for the content repo.

So our configuration would look like this:

16-core server with 128 GB RAM

One 600 GB RAID 1 for the OS and NiFi software (FlowFile repo, database repo, etc.)

One 600 GB disk in RAID 10 for the provenance repo

Three 600 GB disks in RAID 10 for the content repo

My main doubt: since RAID 10 comes in sets of 4 disks (2 for storage and 2 for mirroring), I am sure we can use one set (1.2 TB) for the content repo. Of the remaining 2 storage disks (the second set), can I point one to the content repo and the other to the provenance repo?
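The capacity arithmetic in this question can be sketched in a few lines of Python. This is a minimal sketch assuming identical disks and the standard rule that RAID 1 and RAID 10 keep two copies of every block, so only half of the raw capacity is usable; `usable_gb` is a hypothetical helper name.

```python
def usable_gb(level: str, disks: int, disk_gb: int) -> int:
    """Usable capacity (GB) of a mirrored array built from identical disks.

    RAID 1 and RAID 10 both keep two copies of every block, so only half
    of the raw capacity is usable.
    """
    if level == "RAID1":
        if disks != 2:
            raise ValueError("this sketch models RAID 1 as a 2-disk mirror")
    elif level == "RAID10":
        if disks < 4 or disks % 2 != 0:
            raise ValueError("RAID 10 needs an even number of disks, at least 4")
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    return (disks // 2) * disk_gb


# Eight 600 GB disks in a single RAID 10: half of the raw 4800 GB.
print(usable_gb("RAID10", 8, 600))  # 2400
# One 4-disk RAID 10 set, the "1.2 TB" figure in the question.
print(usable_gb("RAID10", 4, 600))  # 1200
```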

Thanks,

Created 10-10-2016 07:45 PM

Since RAID 1 requires a minimum of 2 disks and RAID 10 requires a minimum of 4 disks, you can build any one of the following from your 8 disks:

a. (2) RAID 10

b. (2) RAID 1 and (1) RAID 10

or

c. (4) RAID 1
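The three options above follow from simple disk counting. The sketch below enumerates them, assuming fixed-size arrays of 2 disks per RAID 1 and 4 disks per RAID 10 (RAID 10 can also be built from 6 or more disks, which this sketch ignores); `layouts` is a hypothetical helper.

```python
def layouts(total_disks: int, raid1_disks: int = 2, raid10_disks: int = 4):
    """Yield (raid1_count, raid10_count) pairs that use every disk exactly once.

    Assumes fixed-size arrays: 2 disks per RAID 1 and 4 disks per RAID 10.
    """
    for raid10 in range(total_disks // raid10_disks + 1):
        remaining = total_disks - raid10 * raid10_disks
        if remaining % raid1_disks == 0:
            yield remaining // raid1_disks, raid10


DISK_GB = 600
for raid1, raid10 in layouts(8):
    # Each array is mirrored, so it exposes half of its raw capacity.
    usable = (raid1 * 2 + raid10 * 4) // 2 * DISK_GB
    print(f"{raid1} x RAID 1 + {raid10} x RAID 10: "
          f"{raid1 + raid10} arrays, {usable} GB usable")
```

Every option exposes the same 2400 GB; what changes is the number of independent arrays, and therefore how many repositories can each get disks of their own.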

My recommendation would be to provision your (8) 600 GB disks as follows:

- Provision your 8 disks into (4) RAID 1 configurations (each 2 disks: 600 GB + 600 GB mirrored, for a total capacity of 600 GB).

--------------

(1) RAID 1 (~600 GB capacity) with the following mounted logical volumes:

100 - 150 GB --> /var/log/nifi

100 GB --> /opt/nifi/flowfile_repo

50 GB --> /opt/nifi/database_repo

remainder --> /

(1) RAID 1 (~600 GB capacity) with the following mounted logical volumes:

Entire RAID as single logical volume --> /opt/nifi/provenance_repo

(1) RAID 1 (~600 GB capacity) with the following mounted logical volumes:

Entire RAID as single logical volume --> /opt/nifi/content_repo1

(1) RAID 1 (~600 GB capacity) with the following mounted logical volumes:

Entire RAID as single logical volume --> /opt/nifi/content_repo2

---------------

The above will give you ~1.2TB of content_repo storage and ~600GB of Provenance history storage.

If provenance history is not as important to you, you could carve off another logical volume on the first RAID 1 for your provenance_repo and allocate all (3) remaining RAID 1 arrays to content repositories.
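The capacity figures for both variants can be checked with a small model of the layout. This is a sketch only: the array names and the `dedicated_gb` helper are hypothetical, the mount points come from the layout above, and each RAID 1 array is assumed to expose ~600 GB.

```python
ARRAY_GB = 600  # usable capacity of one 2 x 600 GB RAID 1 mirror

# Hypothetical array names; mount points are taken from the layout above.
default_plan = {
    "array1": ["/var/log/nifi", "/opt/nifi/flowfile_repo",
               "/opt/nifi/database_repo", "/"],
    "array2": ["/opt/nifi/provenance_repo"],
    "array3": ["/opt/nifi/content_repo1"],
    "array4": ["/opt/nifi/content_repo2"],
}

# Variant: provenance shares the first array, three arrays go to content.
provenance_shared_plan = {
    "array1": ["/var/log/nifi", "/opt/nifi/flowfile_repo",
               "/opt/nifi/database_repo", "/opt/nifi/provenance_repo", "/"],
    "array2": ["/opt/nifi/content_repo1"],
    "array3": ["/opt/nifi/content_repo2"],
    "array4": ["/opt/nifi/content_repo3"],
}


def dedicated_gb(plan, prefix):
    """Capacity of arrays whose only mount point starts with `prefix`."""
    return sum(ARRAY_GB for mounts in plan.values()
               if len(mounts) == 1 and mounts[0].startswith(prefix))


for name, plan in [("default", default_plan), ("shared", provenance_shared_plan)]:
    print(name,
          dedicated_gb(plan, "/opt/nifi/content_repo"),
          dedicated_gb(plan, "/opt/nifi/provenance_repo"))
```

In the shared variant the provenance repository still exists, but it competes for space on the first array instead of having one of its own.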

*** Note: NiFi can be configured to use multiple content repositories in the nifi.properties file:

nifi.content.repository.directory.default=/opt/nifi/content_repo1/content_repository <-- This line exists already

nifi.content.repository.directory.repo2=/opt/nifi/content_repo2/content_repository <-- This line would be manually added.

nifi.content.repository.directory.repo3=/opt/nifi/content_repo3/content_repository <-- This line would be manually added.

*** NiFi will do file based striping across all content repos.
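For more than a couple of mounts, the extra property lines can be generated rather than typed. The sketch below builds them following the `default`, `repo2`, `repo3` naming used above. The round-robin function is a deliberately simplified model of "file based striping" and is an assumption for illustration, not NiFi's actual selection logic; both helper names are hypothetical.

```python
from itertools import cycle


def content_repo_properties(mounts):
    """Build nifi.content.repository.directory.* lines, one per mount point.

    The first mount reuses the existing 'default' key; the others get the
    names repo2, repo3, ... as in the example above.
    """
    lines = []
    for i, mount in enumerate(mounts, start=1):
        name = "default" if i == 1 else f"repo{i}"
        lines.append(f"nifi.content.repository.directory.{name}={mount}/content_repository")
    return lines


def assign_round_robin(claims, repo_names):
    """Simplified striping model (an assumption, not NiFi's actual code):
    successive claims are spread across the repositories in turn."""
    return dict(zip(claims, cycle(repo_names)))


mounts = ["/opt/nifi/content_repo1", "/opt/nifi/content_repo2", "/opt/nifi/content_repo3"]
for line in content_repo_properties(mounts):
    print(line)
print(assign_round_robin(["claim1", "claim2", "claim3", "claim4"],
                         ["default", "repo2", "repo3"]))
```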

Thanks,

Matt

Created 10-12-2016 02:03 PM

@mclark,

It looks like we have finally decided to go with the configuration below. Please let me know if you see anything alarming. I don't think 1.2 TB is needed for the FlowFile repo, but with RAID 10 that is the default. I will ask our vendor whether some of that space can be allocated to the content repo instead.

HDF Server (RHEL 7), 128 GB RAM, 16 cores:

- 2 x 600 GB 15K: OS (RAID 1)

- 4 x 600 GB 15K: FlowFile repo (RAID 10), gives us 1.2 TB

- 6 x 600 GB 15K: Content repo (RAID 10), gives us 1.8 TB

- 4 x 600 GB 15K: Provenance repo (RAID 10), gives us 1.2 TB
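A quick arithmetic check of this proposal: the total disk count and the usable capacity each mirrored array exposes. The role labels are shorthand for the list; usable capacity assumes RAID 1 and RAID 10 both keep two copies of every block.

```python
DISK_GB = 600  # each disk is a 600 GB 15K drive

# (role, number of disks, RAID level) as listed in the proposal.
proposal = [
    ("OS", 2, "RAID 1"),
    ("FlowFile", 4, "RAID 10"),
    ("Content", 6, "RAID 10"),
    ("Provenance", 4, "RAID 10"),
]

total_disks = sum(disks for _, disks, _ in proposal)
# RAID 1 and RAID 10 both mirror every block, so usable = half of raw.
usable_gb = {role: disks // 2 * DISK_GB for role, disks, _ in proposal}

print(total_disks)  # 16
print(usable_gb)    # {'OS': 600, 'FlowFile': 1200, 'Content': 1800, 'Provenance': 1200}
```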

Thanks,

Sai

Created 10-12-2016 02:24 PM

The FlowFile repo will never get close to 1.2 TB in size, so that is a lot of money wasted on hardware. You should ask your vendor about splitting that RAID into multiple logical volumes, so you can allocate a large portion of it to other things. Logical volumes are also a safe way to protect the RAID 1 where your OS lives: if some error condition results in a lot of logging, the application logs may eat up all your disk space and affect your OS. With logical volumes you can protect your root disk. If that is not possible, I would recommend changing your setup to a set of RAID 1 arrays.

With the 16 x 600 GB hard drives you have allocated above, you could create 8 RAID 1 disk arrays:

- 1 for root + software install + database repo + logs (make sure you have monitoring set up to watch disk usage on this RAID if logical volumes cannot be supported)

- 1 for flowfile repo

- 3 for content repo

- 3 for provenance repo
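The allocation above can be checked the same way. A sketch assuming 2-disk RAID 1 mirrors of 600 GB disks; the role labels are shorthand for the list.

```python
DISK_GB = 600
DISKS_PER_RAID1 = 2

# Number of RAID 1 arrays per role, from the list above.
allocation = {
    "root + software + database repo + logs": 1,
    "flowfile repo": 1,
    "content repo": 3,
    "provenance repo": 3,
}

disks_used = sum(allocation.values()) * DISKS_PER_RAID1
# A 2-disk mirror exposes the capacity of one disk.
capacity_gb = {role: arrays * DISK_GB for role, arrays in allocation.items()}

print(disks_used)  # 16
print(capacity_gb["content repo"], capacity_gb["provenance repo"])  # 1800 1800
```

Compared with the RAID 10 proposal above, the same 16 disks give the FlowFile repo 600 GB instead of 1.2 TB, keep content at 1.8 TB, and grow provenance from 1.2 TB to 1.8 TB.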

Thanks,

Matt

Created 10-10-2016 09:42 PM

@mclark

Let's say we have 10 disks, all 600 GB: 2 in RAID 1 and 8 in RAID 10.

Then can I have 3 RAID 10s for the content repo,

1 RAID 10 for the provenance repo,

and 1 RAID 1 for /var/log/nifi, /opt/nifi/flowfile_repo, and /opt/nifi/database_repo?

I am trying to see how best we can configure this with 8 RAID 10 disks and 2 RAID 1 disks. I can add more if needed.

Created 10-11-2016 02:34 PM

@mclark

Thanks for your help on this so far; we are almost there.

Does the content repository hold all files that are processed during the time period specified in the nifi.properties settings, or will it only hold the files that failed or had errors in processing?

If it holds all of them, what is the use of holding the files that were processed successfully? Is there a setting I can use to delete files that are processed successfully?

Regards,

Sai

Created 10-11-2016 03:09 PM

The retention settings in the nifi.properties file apply to the NiFi data archive only. They do not apply to files that are active (queued or still being processed) in any of your dataflows. NiFi will allow you to keep queuing data in your dataflow all the way up to the point where your content repository disk is 100% utilized. That is why backpressure on connections throughout your dataflow is important: it controls the number of FlowFiles that can be queued. It is also important to isolate the content repository from the other NiFi repositories so that, if it fills its disk, it does not corrupt those other repositories.
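How backpressure limits queuing can be illustrated with a toy model of one connection. This is a simplified sketch, not NiFi's implementation: the `Connection` class is hypothetical, the two thresholds stand in for a connection's object-count and data-size backpressure settings, and the values used are arbitrary examples.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Connection:
    """Toy model of one connection queue with back pressure thresholds."""
    object_threshold: int       # max queued FlowFiles before back pressure
    size_threshold_bytes: int   # max queued bytes before back pressure
    queue: deque = field(default_factory=deque)
    queued_bytes: int = 0

    def back_pressure_applied(self) -> bool:
        return (len(self.queue) >= self.object_threshold
                or self.queued_bytes >= self.size_threshold_bytes)

    def offer(self, size_bytes: int) -> bool:
        """Enqueue one FlowFile unless back pressure is already applied.

        In this model the check happens before enqueuing, so the FlowFile
        that crosses a threshold is still accepted.
        """
        if self.back_pressure_applied():
            return False
        self.queue.append(size_bytes)
        self.queued_bytes += size_bytes
        return True


by_count = Connection(object_threshold=3, size_threshold_bytes=10_000)
print([by_count.offer(1_000) for _ in range(5)])  # [True, True, True, False, False]

by_size = Connection(object_threshold=100, size_threshold_bytes=2_500)
print([by_size.offer(1_000) for _ in range(4)])  # [True, True, True, False]
```

Without thresholds like these, nothing stops a queue from growing until the content repository disk is full.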

If content repository archiving is enabled

nifi.content.repository.archive.enabled=true

then the retention and usage percentage settings in the nifi.properties file take effect. NiFi will archive FlowFiles once they are auto-terminated at the end of a dataflow. Data active in your dataflow always takes priority over archived data: if your dataflow queues data to the point that your content repository disk is full, the archive will be empty.
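A small sketch of reading these settings from a nifi.properties file, showing that the retention and usage limits only matter when archiving is enabled. The parser is deliberately minimal, treating a missing `enabled` key as disabled is an assumption, and the retention and usage values in the sample are examples only; the helper names are hypothetical.

```python
def parse_properties(text):
    """Minimal key=value reader for nifi.properties-style text.

    Skips blank lines and '#' comments. It does not implement the full
    Java .properties syntax (line continuations, escapes, ':' separators).
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def archive_settings(props):
    """Return the archive limits, or None when archiving is disabled."""
    # Assumption: treat a missing 'enabled' key as disabled.
    enabled = props.get("nifi.content.repository.archive.enabled", "false")
    if enabled.strip().lower() != "true":
        return None  # retention/usage settings have no effect
    return {
        "max_retention_period": props.get(
            "nifi.content.repository.archive.max.retention.period"),
        "max_usage_percentage": props.get(
            "nifi.content.repository.archive.max.usage.percentage"),
    }


sample = """
# example values only
nifi.content.repository.archive.enabled=true
nifi.content.repository.archive.max.retention.period=12 hours
nifi.content.repository.archive.max.usage.percentage=50%
"""
print(archive_settings(parse_properties(sample)))
```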

The purpose of archiving data is to allow users to replay data from any point in the dataflow, or to download and examine the content of a FlowFile after it has been processed through a dataflow, via the NiFi provenance UI. For many this is a valuable feature; for others it is not so important. If it is not important for your organization to archive any data, you can simply set archive enabled to false.

FlowFiles that are not processed successfully within your dataflow are routed to failure relationships. As long as you do not auto-terminate any of your failure relationships, the FlowFiles remain active/queued in your dataflow. You can then build a failure-handling dataflow, if you like, to make sure you do not lose that data.

Matt

Created on 10-11-2016 03:51 PM - edited 08-18-2019 05:57 AM

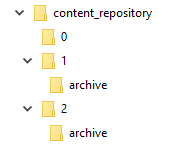

So when a FlowFile is archived after auto-termination at the end of the dataflow, is it moved inside the content repository from a folder to folder\archive?

Created 10-11-2016 05:04 PM

Almost. NiFi stores FlowFile content in claims. A claim can contain the content of one or many FlowFiles. Claims allow NiFi to use large disks more efficiently when dealing with small content files. These claims are only moved into the archive directory once every FlowFile associated with that claim has been auto-terminated in the dataflow(s). Also keep in mind that you can have multiple FlowFiles pointing at the same content (this happens, for example, when you connect the same relationship multiple times from a processor). Let's say you routed the success relationship of an UpdateAttribute processor twice. NiFi does not replicate the content, but rather creates another FlowFile that points at that same content. So both of those FlowFiles need to reach an auto-termination point before that content claim is moved to the archive.
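The "every FlowFile must be terminated first" rule is essentially reference counting. Below is a toy model of the UpdateAttribute example; `ClaimTracker` and its methods are hypothetical names and this is not NiFi's internal code.

```python
from collections import Counter


class ClaimTracker:
    """Toy reference counter for content claims.

    A claim is handed to the archive only when no active FlowFile
    points at it any more.
    """

    def __init__(self):
        self.active_refs = Counter()
        self.archived = []

    def flowfile_created(self, claim):
        self.active_refs[claim] += 1

    def flowfile_terminated(self, claim):
        if self.active_refs[claim] <= 0:
            raise ValueError(f"no active FlowFile references {claim}")
        self.active_refs[claim] -= 1
        if self.active_refs[claim] == 0:
            del self.active_refs[claim]
            self.archived.append(claim)


tracker = ClaimTracker()
tracker.flowfile_created("claim-1")   # FlowFile on the first success connection
tracker.flowfile_created("claim-1")   # clone on the second success connection
tracker.flowfile_terminated("claim-1")
print(tracker.archived)  # []
tracker.flowfile_terminated("claim-1")
print(tracker.archived)  # ['claim-1']
```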

The content claim limits are defined in the nifi.properties file:

nifi.content.claim.max.appendable.size=10 MB

nifi.content.claim.max.flow.files=100

The above are the defaults.

If a file comes in at less than 10 MB in size, NiFi will try to append it together with the next file(s) in the same claim, unless the combined size of those files would exceed the 10 MB max or the claim has already reached 100 files.

If a file comes in that is larger than 10 MB, it ends up in a claim all by itself.
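The two packing rules above can be simulated to see how many claims a batch of files produces. This is a simplified model of the rules as described, not NiFi's content repository code: `pack_into_claims` is a hypothetical name, and treating a file of exactly 10 MB as "large" is an assumption.

```python
MB = 1024 * 1024


def pack_into_claims(sizes, max_appendable=10 * MB, max_flow_files=100):
    """Group content sizes into claims following the rules described above.

    Small files are appended to the open claim until adding one more would
    exceed max_appendable or the claim already holds max_flow_files.
    """
    claims = []
    current, current_bytes = [], 0
    for size in sizes:
        if size >= max_appendable:
            # Files at or over the limit get a claim to themselves.
            claims.append([size])
            continue
        if current and (current_bytes + size > max_appendable
                        or len(current) >= max_flow_files):
            # Appending would break a limit: close this claim, start a new one.
            claims.append(current)
            current, current_bytes = [], 0
        current.append(size)
        current_bytes += size
    if current:
        claims.append(current)
    return claims


print([len(c) for c in pack_into_claims([1024] * 150)])   # [100, 50]
print([len(c) for c in pack_into_claims([1 * MB] * 25)])  # [10, 10, 5]
print([len(c) for c in pack_into_claims([25 * MB])])      # [1]
```

Many small files therefore share a handful of claims (hitting the 100-file limit first), while mid-sized files hit the 10 MB limit first.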

Thanks,

Matt
