NiFi: GC tuning issues

Labels: Apache NiFi

Created on 10-04-2016 10:12 AM - edited 08-19-2019 04:20 AM

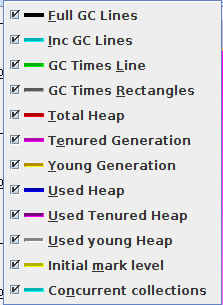

UPDATE: I've played a little bit with G1 using the Oracle documentation. I still do not have a definitive solution. I settled on the default configuration, modifying the region size according to the heap (54GB, 30MB region size). I tried to better understand the problem and obtained a detailed picture of the heap using GCViewer. This is the associated heap chart after three consecutive full GCs:

A little bit of explanation for the output if you have not played with GCViewer:

It looks to me like the issue is in the tenured heap: while the young heap is constantly cleaned in the young GC cycles (the grey line oscillating at the top of the picture), the tenured heap is used but never cleaned up.

Some questions: Could triggering more mixed GCs be a viable option? How do I obtain that using the configuration parameters? Should I switch to the old CMS GC, even though it has proved not to be that good on huge heaps?

Any suggestion is really appreciated. Thank you

_________________________________________________

Hello everybody,

I am developing a flow which requires good performance. It is running on a single instance with 16 cores and 64GB of memory. I've configured NiFi to use 54GB of it with the G1 garbage collector.

I am currently facing this memory issue: with a fresh NiFi start, all processors running but no input, it uses 1GB. I get some input in (1.5GB), the flow starts, consumes all the data and ends the computation without triggering any full GC. Tracking the memory using the system diagnostics page, I can see that it is constantly eating memory, to the point that only 2-3 GB are free when no input is left in the flow. No space is released after that.

I would expect that to be the most memory it needs to consume that input data, but if I feed the same amount of input in again, it does not release any memory. In fact, it keeps eating memory until full GCs are triggered. These take far too long to complete. This is a screenshot of the system diagnostics page after the first one is triggered:

Is this a normal behavior for the JVM? Is there a way I can avoid triggering full GCs?

I've gone over the custom processors' code, trying to limit object creation as much as I could (I'm not a Java expert, so I googled around a bit, in particular regarding Strings). A large part of the computation is performed by accessing flowfile attributes (except for one processor that loads the input as flowfile attributes, and one that writes them back to the flowfile content). Maybe this is a programming issue, but given my limited experience I cannot tell. I would be glad to read suggestions and discover that this is indeed the case.

I've also tried reducing the assigned heap to 16GB. Full GCs are quicker but more frequent, as I expected.

Is there a way to completely avoid full GCs? Thank you all

Created 10-11-2016 01:32 PM

@Riccardo Iacomini Looks like you're doing some really good work thinking through this. One thing I would add is that it can often be quite OK to generate a lot of objects. A common source of GC pressure is how long objects are retained, and how many and how large the retained objects are. Short-lived objects that are created and then quickly become eligible for collection have, in my experience, caused little trouble for the collector. The logic is a bit different with G1, but in any event I think we can go back to basics a bit here before spending time tuning the GC.

I recommend running your flow with as small a heap as possible. Consider a 512 MB or 1GB heap for instance. Run with a single thread in the flow controller or very few. Run every processor with a single thread. Then let your flow run at full rate. Measure the latencies. Profile the code. You will find some very interesting and useful things this way.
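As a rough aid for the "measure" step, the following is a minimal sketch (not part of NiFi) that uses the standard `java.lang.management` beans to print heap pool usage and collector counts from inside a JVM. The class name `HeapReport` and the `snapshot()` helper are hypothetical names chosen for illustration. On HotSpot with G1 the pools are typically named along the lines of "G1 Eden Space" and "G1 Old Gen", but the exact names depend on the JVM and the collector in use.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

// Hypothetical helper: reports heap pools and GC activity of the current JVM.
public class HeapReport {

    /** Returns one line per heap memory pool and one line per garbage collector. */
    static String snapshot() {
        StringBuilder sb = new StringBuilder();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.HEAP) {
                continue; // skip metaspace, code cache, and other non-heap pools
            }
            MemoryUsage usage = pool.getUsage();
            if (usage == null) {
                continue; // the pool is no longer valid
            }
            sb.append(String.format("pool %-25s used=%d MB committed=%d MB%n",
                    pool.getName(), usage.getUsed() >> 20, usage.getCommitted() >> 20));
        }
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // Count and accumulated time since JVM start; -1 means "undefined".
            sb.append(String.format("gc   %-25s count=%d time=%d ms%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime()));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(snapshot());
    }
}
```

Calling `snapshot()` before and after a test run shows whether the old generation keeps growing and how many collections each collector has performed in between. A GC log or a profiler gives a far more detailed picture; this only covers the basic counters.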

If you're designing a flow for maximum performance (lowest latency and highest throughput with minimal CPU utilization) then you really want to think about the design of the flow. I do not recommend using flow file attributes as a go-between mechanism to deserialize and serialize content from one form to another. Take the original format and convert it to the desired output in another processor. If you need to make routing and filtering decisions, then do that on either the raw format or the converted format. Which is the best choice depends on your case.
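To make the "convert the content directly" idea concrete, here is a minimal sketch in plain Java of a streaming conversion that never holds the whole input in memory. The formats are an assumption made purely for illustration (comma-separated lines in, tab-separated lines out), and `StreamingConvert` and `convert` are hypothetical names. In a NiFi processor, a method with this shape could be invoked from the callback passed to `ProcessSession.write(FlowFile, StreamCallback)`, which hands the processor an `InputStream` and an `OutputStream` for the flowfile content.

```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.io.OutputStreamWriter;
import java.nio.charset.StandardCharsets;

// Hypothetical example: convert content record by record instead of
// loading every field into flowfile attributes first.
public class StreamingConvert {

    /** Reads comma-separated lines from in and writes tab-separated lines to out. */
    static void convert(InputStream in, OutputStream out) throws IOException {
        BufferedReader reader = new BufferedReader(
                new InputStreamReader(in, StandardCharsets.UTF_8));
        BufferedWriter writer = new BufferedWriter(
                new OutputStreamWriter(out, StandardCharsets.UTF_8));
        String line;
        while ((line = reader.readLine()) != null) {
            // Only the current line is held on the heap; it becomes garbage
            // as soon as the next line is read.
            writer.write(line.replace(',', '\t'));
            writer.write('\n');
        }
        writer.flush(); // the caller owns the streams, so flush without closing
    }

    public static void main(String[] args) throws IOException {
        byte[] input = "a,b\nc,d\n".getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        convert(new ByteArrayInputStream(input), out);
        System.out.print(new String(out.toByteArray(), StandardCharsets.UTF_8));
    }
}
```

The point of this shape is that the objects created per record are short-lived, so retained heap stays roughly constant regardless of input size, whereas attributes stay attached to the flowfile for as long as it is in the flow.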

Extracting things to attributes so that you can reuse existing processors is attractive, of course, but if your primary aim above all else is raw speed then you want to design for that before other tradeoffs like reusability.

Created 10-11-2016 02:59 PM

Thanks for sharing your knowledge, I will try your tips. This specific GC issue happens only when I assign multiple threads to the processors and try to speed up the flow, which otherwise runs at roughly 10MB/s with a single thread. I originally designed the flow to use flowfile attributes because I was tempted to make the computation happen in memory. I thought that it would be faster than reading the flowfile content in each processor and then parsing it to get specific fields. Do you suggest trying to implement a version that works, let's say, "on disk" on flowfile content instead of attributes?