Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: NiFi: JSON to CSV to Hive

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

NiFi: JSON to CSV to Hive

- Labels:

-

Apache Hive

-

Apache NiFi

Created on 12-13-2017 12:39 PM - edited 08-17-2019 06:07 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have a use case where JSON files are read from an API, transformed to CSV and imported to Hive tables, however my flow fails at the replace text processor. Can you give some advice on the configuration of the processor or on where my approach fails?

InvokeHTTP --> EvaluateJsonPath --> ReplaceText --> MergeContent --> UpdateAttribute --> PutHDFS

My flow does several HTTP calls with InvokeHTTP (Each call with different ID), extracts attributes from each JSON that is returned (each JSON is unique) and then creates the csv's in the ReplaceText processor as following:

${attribute1},${attribute2},${attribute3},${attribute4},${attribute5},${attribute6},${attribute7}

However after the MergeContent processor inthe merged CSV there is really a lot of duplicate data while all incoming JSONs contain unique data.

Created on 12-14-2017 02:46 PM - edited 08-17-2019 06:06 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I suspect duplicates are from Replace Text processor you have configured

Evaluation Mode

Line-by-Line

That means let's take the your json having more than 1 new line, Replace text processor is going to be Replace the whole line with

Replacement Value

${attribute1}${attribute2}${attribute3}Example:-

Input:-

{

"features": [{

"feature": {

"paths": [[[214985.27600000054,

427573.33100000024],

[215011.98900000006,

427568.84200000018],

[215035.35300000012,

427565.00499999896],

[215128.48900000006,

427549.4290000014],

[215134.43699999899,

427548.65599999949],

[215150.86800000072,

427546.87900000066],

[215179.33199999854,

427544.19799999893]]]

},

"attributes": {

"attribute1": "value",

"attribute2": "value",

"attribute3": "value",

"attribute4": "value"

}

}]

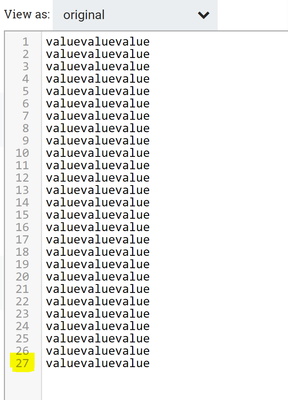

}In this input json message we are having 27 lines and My evaluate Json Path configs are same as you mentioned in comments.

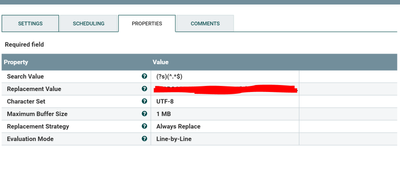

Replace Text Configs:-

Output:-

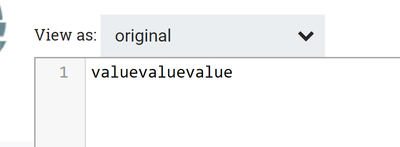

As output we got 27 lines because we are having evaluation mode as line by line.

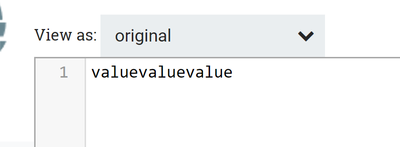

If you change the Evaluation mode to Entire text then

Output:-

And you are having json message in one line i.e

{"features":[{"feature":{"paths":[[[214985.27600000054,427573.33100000024],[215011.98900000006,427568.84200000018],[215035.35300000012,427565.00499999896],[215128.48900000006,427549.4290000014],[215134.43699999899,427548.65599999949],[215150.86800000072,427546.87900000066],[215179.33199999854,427544.19799999893]]]},"attributes":{"attribute1":"value","attribute2":"value","attribute3":"value","attribute4":"value",}}]}Then if you keep replace text configs as line by line or entire text it doesn't matter because we are having just one line as input to the processor and we will get result from replace text as

Try to change the configs as per your Input Json Message and run again the processor.

Let us know if the processor still resulting duplicate data.

Created 12-13-2017 12:42 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i ve no idea why my screenshots are doubleposted, whatever i tried to fix it fails 🙂

Created 12-13-2017 01:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you share an example or two of incoming JSON data, your config for EvaluateJSONPath, and an example of the flow file after MergeContent (perhaps setting number of entries much lower to fit here)?

Created on 12-14-2017 10:19 AM - edited 08-17-2019 06:06 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Matt Burgess, here is an example of the incoming JSON files, all have same attributes:

{

"features": [

{

"feature": {

"paths": [

[

[

214985.27600000054,

427573.33100000024

],

[

215011.98900000006,

427568.84200000018

],

[

215035.35300000012,

427565.00499999896

],

[

215128.48900000006,

427549.4290000014

],

[

215134.43699999899,

427548.65599999949

],

[

215150.86800000072,

427546.87900000066

],

[

215179.33199999854,

427544.19799999893

]

]

]

},

"attributes": {

"attribute1": "value",

"attribute2": "value",

"attribute3": "value",

"attribute4": "value",

}

}

]

}

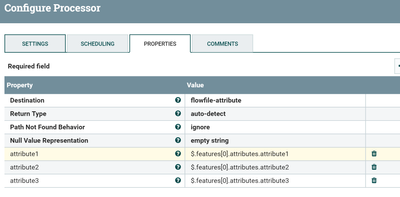

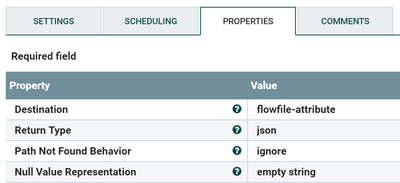

EvaluateJSONpath:

Where i add properties for each attribute i want to parse:

attribute1: $.features[0].attributes.attribute1 etc. etc.

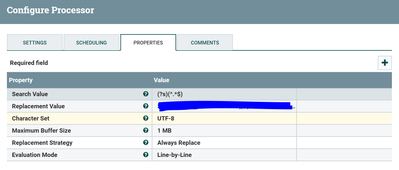

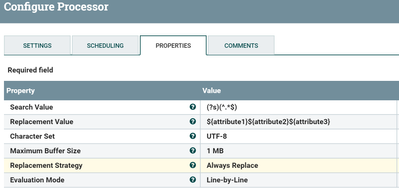

ReplaceText:

I think something goes wrong in my configuration here, because even before the MergeContent the single CSVs created per JSON file contain hundreds of duplicate rows, whereas it should be just one row per CSV that they are gonna be later merged into a big CSV file.

Created on 12-14-2017 02:46 PM - edited 08-17-2019 06:06 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I suspect duplicates are from Replace Text processor you have configured

Evaluation Mode

Line-by-Line

That means let's take the your json having more than 1 new line, Replace text processor is going to be Replace the whole line with

Replacement Value

${attribute1}${attribute2}${attribute3}Example:-

Input:-

{

"features": [{

"feature": {

"paths": [[[214985.27600000054,

427573.33100000024],

[215011.98900000006,

427568.84200000018],

[215035.35300000012,

427565.00499999896],

[215128.48900000006,

427549.4290000014],

[215134.43699999899,

427548.65599999949],

[215150.86800000072,

427546.87900000066],

[215179.33199999854,

427544.19799999893]]]

},

"attributes": {

"attribute1": "value",

"attribute2": "value",

"attribute3": "value",

"attribute4": "value"

}

}]

}In this input json message we are having 27 lines and My evaluate Json Path configs are same as you mentioned in comments.

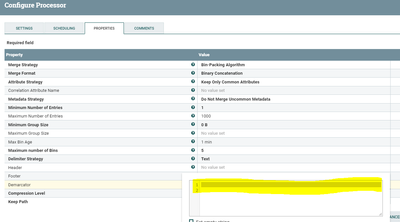

Replace Text Configs:-

Output:-

As output we got 27 lines because we are having evaluation mode as line by line.

If you change the Evaluation mode to Entire text then

Output:-

And you are having json message in one line i.e

{"features":[{"feature":{"paths":[[[214985.27600000054,427573.33100000024],[215011.98900000006,427568.84200000018],[215035.35300000012,427565.00499999896],[215128.48900000006,427549.4290000014],[215134.43699999899,427548.65599999949],[215150.86800000072,427546.87900000066],[215179.33199999854,427544.19799999893]]]},"attributes":{"attribute1":"value","attribute2":"value","attribute3":"value","attribute4":"value",}}]}Then if you keep replace text configs as line by line or entire text it doesn't matter because we are having just one line as input to the processor and we will get result from replace text as

Try to change the configs as per your Input Json Message and run again the processor.

Let us know if the processor still resulting duplicate data.

Created on 12-14-2017 03:49 PM - edited 08-17-2019 06:06 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

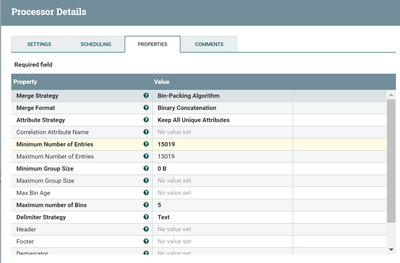

Hi @Shu yes that was exactly the problem, now the individual CSVs are created just fine but in the meantime another problem occured. When the individual CSVs are merged with the MergedContent processor then the Merged CSV is all in one line instead of seperate lines. Is there a way to bypass this?

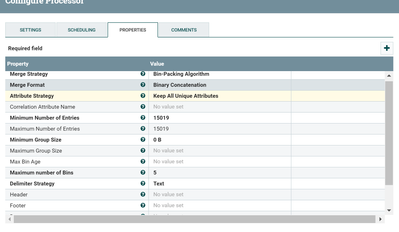

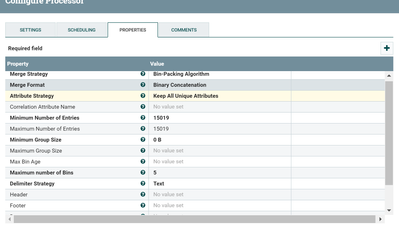

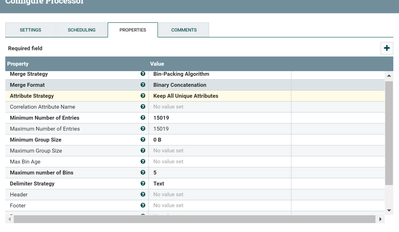

MergeContent:

Created on 12-14-2017 03:58 PM - edited 08-17-2019 06:06 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@balalaika

For that case you need to specify Demarcator property as

Shift+enterConfigs:-

For merge content reference